(→Process technology) |

|||

| (126 intermediate revisions by 47 users not shown) | |||

| Line 5: | Line 5: | ||

|designer=AMD | |designer=AMD | ||

|manufacturer=TSMC | |manufacturer=TSMC | ||

| − | |introduction=2019 | + | |manufacturer 2=GlobalFoundries |

| − | |process=7 nm | + | |introduction=July 2019 |

| − | |predecessor=Zen+ | + | |process=7 nm <!-- TSMC N7 --> |

| − | |predecessor link=amd/microarchitectures/zen+ | + | |process 2=12 nm <!-- GloFo 12LP --> |

| + | |process 3=14 nm <!-- GloFo 14LPP --> | ||

| + | |cores=4 | ||

| + | |cores 2=6 | ||

| + | |cores 3=8 | ||

| + | |cores 4=12 | ||

| + | |cores 5=16 | ||

| + | |cores 6=24 | ||

| + | |cores 7=32 | ||

| + | |cores 8=64 | ||

| + | |type=Superscalar | ||

| + | |oooe=Yes | ||

| + | |speculative=Yes | ||

| + | |renaming=Yes | ||

| + | |stages=19 | ||

| + | |decode=4-way | ||

| + | |isa=x86-64 | ||

| + | |extension=MOVBE | ||

| + | |extension 2=MMX | ||

| + | |extension 3=SSE | ||

| + | |extension 4=SSE2 | ||

| + | |extension 5=SSE3 | ||

| + | |extension 6=SSSE3 | ||

| + | |extension 7=SSE4A | ||

| + | |extension 8=SSE4.1 | ||

| + | |extension 9=SSE4.2 | ||

| + | |extension 10=POPCNT | ||

| + | |extension 11=AVX | ||

| + | |extension 12=AVX2 | ||

| + | |extension 13=AES | ||

| + | |extension 14=PCLMUL | ||

| + | |extension 15=FSGSBASE | ||

| + | |extension 16=RDRND | ||

| + | |extension 17=FMA3 | ||

| + | |extension 18=F16C | ||

| + | |extension 19=BMI | ||

| + | |extension 20=BMI2 | ||

| + | |extension 21=RDSEED | ||

| + | |extension 22=ADCX | ||

| + | |extension 23=PREFETCHW | ||

| + | |extension 24=CLFLUSHOPT | ||

| + | |extension 25=XSAVE | ||

| + | |extension 26=SHA | ||

| + | |extension 27=UMIP | ||

| + | |extension 28=CLZERO | ||

| + | |core name=Rome (Server) | ||

| + | |core name 2=Castle Peak (HEDT) | ||

| + | |core name 3=Matisse (Desktop) | ||

| + | |core name 4=Renoir (APU/Mobile) | ||

| + | |core name 5=Lucienne (APU/Mobile) | ||

| + | |predecessor=Zen | ||

| + | |predecessor link=amd/microarchitectures/zen | ||

| + | |predecessor 2=Zen+ | ||

| + | |predecessor 2 link=amd/microarchitectures/zen+ | ||

|successor=Zen 3 | |successor=Zen 3 | ||

|successor link=amd/microarchitectures/zen 3 | |successor link=amd/microarchitectures/zen 3 | ||

| − | | | + | |successor 2=Zen 4 |

| + | |successor 2 link=amd/microarchitectures/zen 4 | ||

}} | }} | ||

| − | '''Zen 2''' is | + | |

| + | '''Zen 2''' is [[AMD]]'s successor to {{\\|Zen+}}, and is a [[7 nm process]] [[microarchitecture]] for mainstream mobile, desktops, workstations, and servers. Zen 2 was replaced by {{\\|Zen 3}}. | ||

| + | |||

| + | For performance desktop and mobile computing, Zen 2 is branded as {{amd|Athlon}}, {{amd|Ryzen 3}}, {{amd|Ryzen 5}}, {{amd|Ryzen 7}}, {{amd|Ryzen 9}}, and {{amd|Ryzen Threadripper}} processors. For servers, Zen 2 is branded as {{amd|EPYC}}. | ||

== History == | == History == | ||

| − | [[File:amd zen | + | [[File:amd zen 2 logo.png|right|thumb|Zen 2]] |

| − | Zen 2 | + | |

| + | Zen 2 succeeded {{\\|Zen}} in [[2019]]. In February [[2017]], Lisa Su, AMD's CEO, announced a roadmap that included Zen 2 and later {{\\|Zen 3}}. At ''Investor's Day'' in May [[2017]], Jim Anderson, AMD's Senior Vice President, confirmed that Zen 2 was set to utilize a [[7 nm process]]. Initial details of Zen 2 and {{amd|Rome|l=core}} were unveiled during [[AMD]]'s ''Next Horizon'' event on November 6, [[2018]]. | ||

== Codenames == | == Codenames == | ||

| − | [[File:amd zen2-3 roadmap.png| | + | [[File:amd zen2-3 roadmap.png|thumb|right|Zen 2 on the roadmap]] |

| − | |||

| + | '''Product Codenames:''' | ||

{| class="wikitable" | {| class="wikitable" | ||

|- | |- | ||

| − | ! Core !! | + | ! Core !! Series !! Cores/Threads !! Target |

| + | |- | ||

| + | | {{amd|Rome|l=core}} || [[EPYC]] 7002 || Up to 64/128 || High-end server [[multiprocessors]] | ||

| + | |- | ||

| + | | {{amd|Castle Peak|l=core}} || [[Ryzen Threadripper]] 3000 || Up to 64/128 || Workstation & Enthusiasts market processors | ||

| + | |- | ||

| + | | {{amd|Matisse|l=core}} || [[Ryzen]] 3000 || Up to 16/32 || Mainstream to high-end desktops & Enthusiasts market processors | ||

| + | |- | ||

| + | | {{amd|Renoir|l=core}} || [[Ryzen]] 4000 || Up to 8/16 || Mainstream APUs with {{\\|Vega}} GPUs | ||

| + | |- | ||

| + | | {{amd|Lucienne|l=core}} || [[Ryzen]] 5000 || Up to 8/16 || Mainstream APUs with {{\\|Vega}} GPUs | ||

| + | |- | ||

| + | | {{amd|Mendocino|l=core}} || [[Ryzen]] 7000, [[Athlon]] || Up to 4/8 || Mainstream Mobile processors | ||

| + | |- | ||

| + | |} | ||

| + | |||

| + | '''Architectural Codenames:''' | ||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! Arch !! Codename | ||

| + | |- | ||

| + | | Core || Valhalla | ||

| + | |- | ||

| + | | CCD || Aspen Highlands | ||

| + | |} | ||

| + | |||

| + | == Brands == | ||

| + | [[File:amd ryzen black bg logo.png|thumb|right|Ryzen brand logo]] | ||

| + | |||

| + | {| class="wikitable" style="text-align: center;" | ||

| + | |- | ||

| + | ! colspan="11" | AMD Zen-based processor brands | ||

| + | |- | ||

| + | ! rowspan="2" | Logo || rowspan="2" | Family !! rowspan="2" | General Description !! colspan="8" | Differentiating Features | ||

| + | |- | ||

| + | ! Cores !! Unlocked !! {{x86|AVX2}} !! [[SMT]] !! [[IGP]] !! [[ECC]] !! [[Multiprocessing|MP]] | ||

| + | |- | ||

| + | ! colspan="11" | Mainstream | ||

| + | |- | ||

| + | | [[File:amd ryzen 3 logo.png|75px|link=Ryzen 3]] || {{amd|Ryzen 3}} || Entry level Performance || [[quad-core|Quad]] || {{tchk|yes}} || {{tchk|yes}} || {{tchk|some}} || {{tchk|some}}<sup>1</sup> || {{tchk|some}}<sup>2</sup> || {{tchk|no}} | ||

| + | |- | ||

| + | | [[File:amd ryzen 5 logo.png|75px|link=Ryzen 5]] || {{amd|Ryzen 5}} || Mid-range Performance || [[hexa-core|Hexa]] || {{tchk|yes}} || {{tchk|yes}} || {{tchk|some}} || {{tchk|some}}<sup>1</sup> || {{tchk|some}}<sup>2</sup> || {{tchk|no}} | ||

|- | |- | ||

| − | | {{amd| | + | | [[File:amd ryzen 7 logo.png|75px|link=Ryzen 7]] || {{amd|Ryzen 7}} || High-end Performance || [[octa-core|Octa]] || {{tchk|yes}} || {{tchk|yes}} || {{tchk|some}} || {{tchk|some}}<sup>1</sup> || {{tchk|some}}<sup>2</sup> || {{tchk|no}} |

|- | |- | ||

| − | | {{amd| | + | | [[File:amd ryzen 9 logo.png|75px|link=Ryzen 9]] || {{amd|Ryzen 9}} || High-end Performance || [[12 cores|12]]-[[16 cores|16]] (Desktop) <br /> [[octa-core|Octa]] (Mobile) || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|some}}<sup>1</sup> || {{tchk|some}}<sup>2</sup> || {{tchk|no}} |

|- | |- | ||

| − | | {{amd| | + | ! colspan="10" | Enthusiasts / Workstations |

| + | |- | ||

| + | | [[File:ryzen threadripper logo.png|75px|link=Ryzen Threadripper]] || {{amd|Ryzen Threadripper}} || Enthusiasts || [[24 cores|24]]-[[64 cores|64]] || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|no}} || {{tchk|yes}} || {{tchk|no}} | ||

| + | |- | ||

| + | ! colspan="10" | Servers | ||

| + | |- | ||

| + | | [[File:amd epyc logo.png|75px|link=amd/epyc]] || {{amd|EPYC}} || High-performance Server Processor || [[8 cores|8]]-[[64 cores|64]] || {{tchk|no}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|no}} || {{tchk|yes}} || {{tchk|yes}} | ||

| + | |- | ||

| + | ! colspan="10" | Embedded / Edge | ||

| + | |- | ||

| + | | [[File:epyc embedded logo.png|75px|link=amd/epyc embedded]] || {{amd|EPYC Embedded}} || Embedded / Edge Server Processor || [[8 cores|8]]-[[64 cores|64]] || {{tchk|no}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|no}} || {{tchk|yes}} || {{tchk|no}} | ||

| + | |- | ||

| + | | [[File:ryzen embedded logo.png|75px|link=amd/ryzen embedded]] || {{amd|Ryzen Embedded}} || Embedded APUs || [[6 cores|6]]-[[8 cores|8]] || {{tchk|no}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|no}} | ||

|- | |- | ||

| − | |||

|} | |} | ||

| + | <sup>1</sup> Only available on G, GE, H, HS, HX and U SKUs. <br /> | ||

| + | <sup>2</sup> ECC support is unavailable on AMD APUs. | ||

== Process technology == | == Process technology == | ||

| − | Zen 2 is fabricated on [[TSMC]]' | + | Zen 2 comprises multiple different components: |

| + | * The Core Complex Die (CCD) is fabricated on [[TSMC]] N7 ([[7 nm process|7 nm HPC process]]) | ||

| + | * The client I/O Die (cIOD) is fabricated on [[GlobalFoundries]] GloFo 12LP ([[12 nm process]]) | ||

| + | * The server I/O Die (sIOD) is fabricated on [[GlobalFoundries]] GloFo 14LPP ([[14 nm process]]) | ||

| + | |||

| + | ===Comparison=== | ||

| + | {| class="wikitable" cellpadding="3px" style="border: 1px solid black; border-spacing: 0px; width: 100%; text-align:center;" | ||

| + | ! colspan="2" | Core | ||

| + | ! {{amd|Zen|l=arch}} | ||

| + | ! {{amd|Zen+|l=arch}} | ||

| + | ! {{amd|Zen 2|l=arch}} | ||

| + | ! {{amd|Zen 3|l=arch}} | ||

| + | ! {{amd|Zen 3+|l=arch}} | ||

| + | ! {{amd|Zen 4|l=arch}} | ||

| + | ! {{amd|Zen 4c|l=arch}} | ||

| + | ! {{amd|Zen 5|l=arch}} | ||

| + | ! {{amd|Zen 5c|l=arch}} | ||

| + | ! {{amd|Zen 6|l=arch}} | ||

| + | ! {{amd|Zen 6c|l=arch}} | ||

| + | |- | ||

| + | ! style="text-align: left;" rowspan="2" | Codename | ||

| + | ! style="text-align: left;" | Core | ||

| + | | | ||

| + | | | ||

| + | | ''Valhalla'' | ||

| + | | ''Cerberus'' | ||

| + | | | ||

| + | | ''Persephone'' | ||

| + | | ''Dionysus'' | ||

| + | | ''Nirvana'' | ||

| + | | ''Prometheus'' | ||

| + | | ''Morpheus'' | ||

| + | | ''Monarch'' | ||

| + | |- | ||

| + | ! style="text-align: left;" | CCD | ||

| + | | | ||

| + | | | ||

| + | | ''Aspen <br>Highlands'' | ||

| + | | ''Brecken <br>Ridge'' | ||

| + | | | ||

| + | | ''Durango'' | ||

| + | | ''Vindhya'' | ||

| + | | ''Eldora'' | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | |- | ||

| + | ! style="text-align: left;" rowspan="2" | Cores <br>(threads) | ||

| + | ! style="text-align: left;" | CCD | ||

| + | | | ||

| + | | | ||

| + | | 8 (16) | ||

| + | | 8 (16) | ||

| + | | | ||

| + | | 8 (16) | ||

| + | | 16 (32) | ||

| + | | 8 (16) | ||

| + | | 16 (32) | ||

| + | | | ||

| + | | | ||

| + | |- | ||

| + | ! style="text-align: left;" | CCX | ||

| + | | | ||

| + | | | ||

| + | | 4 (8) | ||

| + | | 8 (16) | ||

| + | | | ||

| + | | 8 (16) | ||

| + | | 8 (16) | ||

| + | | 8 (16) | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | |- | ||

| + | ! style="text-align: left;" rowspan="2" | L3 cache | ||

| + | ! style="text-align: left;" | CCD | ||

| + | | | ||

| + | | | ||

| + | | 32 MB | ||

| + | | 32 MB | ||

| + | | | ||

| + | | 32 MB | ||

| + | | 32 MB | ||

| + | | 32 MB | ||

| + | | 32 MB | ||

| + | | | ||

| + | | | ||

| + | |- | ||

| + | ! style="text-align: left;" | CCX | ||

| + | | | ||

| + | | | ||

| + | | 16 MB | ||

| + | | 32 MB | ||

| + | | | ||

| + | | 32 MB | ||

| + | | 16 MB | ||

| + | | 32 MB | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | |- | ||

| + | ! style="text-align: left;" rowspan="2" | Die size | ||

| + | ! style="text-align: left;" | CCD area | ||

| + | | 44 mm<sup>2</sup> | ||

| + | | | ||

| + | | 74 mm<sup>2</sup> | ||

| + | | 80.7 mm<sup>2</sup> | ||

| + | | | ||

| + | | 66.3 mm<sup>2</sup> | ||

| + | | 72.7 mm<sup>2</sup> | ||

| + | | 70.6 mm<sup>2</sup> | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | |- | ||

| + | ! style="text-align: left;" | Core area<br>(Fab node) | ||

| + | | 7 mm<sup>2</sup><br>([[14 nm]]) | ||

| + | | ([[12 nm]]) | ||

| + | | 2.83 mm<sup>2</sup><br>([[7 nm]]) | ||

| + | | 3.24 mm<sup>2</sup><br>([[7 nm]]) | ||

| + | | ([[7 nm]]) | ||

| + | | 3.84 mm<sup>2</sup><br>([[5 nm]]) | ||

| + | | 2.48 mm<sup>2</sup><br>([[5 nm]]) | ||

| + | | ([[4 nm]]) | ||

| + | | ([[3 nm]]) | ||

| + | | ([[2 nm]]) | ||

| + | | ([[2 nm]]) | ||

| + | |- | ||

| + | |} | ||

| + | |||

| + | == Compiler support == | ||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! Compiler !! Arch-Specific || Arch-Favorable | ||

| + | |- | ||

| + | | [[GCC]] || <code>-march=znver2</code> || <code>-mtune=znver2</code> | ||

| + | |- | ||

| + | | [[LLVM]] || <code>-march=znver2</code> || <code>-mtune=znver2</code> | ||

| + | |} | ||

| + | * '''Note:''' Initial support in GCC 9 and LLVM 9. | ||

== Architecture == | == Architecture == | ||

| − | + | Zen 2 inherits most of the design from {{\\|Zen+}} but improves the instruction stream bandwidth and floating-point throughput performance. | |

| − | === Key changes from {{\\|Zen}} === | + | === Key changes from {{\\|Zen+}} === |

| − | * [[7 nm process]] (from [[ | + | {| border="0" cellpadding="5" width="100%" |

| − | * PCIe 4.0 (from 3.0) | + | |- |

| − | {{ | + | |width="50%" valign="top" align="left"| |

| + | * [[7 nm process]] (from [[12 nm]]) | ||

| + | ** I/O die utilizes [[12 nm]] | ||

| + | * '''Core''' | ||

| + | ** Higher [[IPC]] (AMD self-reported up to 15% IPC) | ||

| + | * '''Front-end''' | ||

| + | ** Improved [[branch prediction unit]] | ||

| + | *** Improved prefetcher | ||

| + | ** Improved µOP cache (tags) | ||

| + | *** Larger µOP cache (4096 entries, up from 2048) | ||

| + | ** Increased dispatch bandwidth | ||

| + | * '''Back-end''' | ||

| + | <!-- *** Increased retire bandwidth (??-wide, up from 8-wide) --> | ||

| + | ** FPU | ||

| + | *** 2x wider datapath (256-bit, up from 128-bit) | ||

| + | *** 2x wider EUs (256-bit FMAs, up from 128-bit FMAs) | ||

| + | *** 2x wider LSU (2x256-bit L/S, up from 128-bit) | ||

| + | *** Improved DP vector mult. latency (3 cycles, down from 4) | ||

| + | ** Integer | ||

| + | *** Increased number of registers (180, up from 168) | ||

| + | *** Additional AGU (3, up from 2) | ||

| + | *** Larger scheduler (4x16 ALU + 1x28 AGU, up from 4x14 ALU + 2x14 AGU) | ||

| + | *** Larger Reorder Buffer (224, up from 192) | ||

| + | * '''Security''' | ||

| + | ** In-silicon Spectre enhancements | ||

| + | ** Increased number of keys/VMs supported | ||

| + | |width="50%" valign="top" align="left"| | ||

| + | * '''Memory subsystem''' | ||

| + | ** 0.5x L1 instruction cache (32 KiB, down from 64 KiB) | ||

| + | ** 8-way associativity (from 4-way) | ||

| + | ** 1.33x larger L2 DTLB (2048-entry, up from 1536) | ||

| + | ** 48 entry store queue (was 44) | ||

| + | * '''{{amd|CCX}}''' | ||

| + | ** 2x L3 cache slice (16 MiB, up from 8 MiB) | ||

| + | ** Increased L3 latency (~40 cycles, up from ~35 cycles) | ||

| + | * '''I/O''' | ||

| + | ** PCIe 4.0 (from 3.0) | ||

| + | ** {{amd|Infinity Fabric}} 2 | ||

| + | *** 2.3x transfer rate per link (25 GT/s, up from ~10.6 GT/s) | ||

| + | ** Decoupling of MemClk from FClk, allowing 2:1 ratio in addition to 1:1 | ||

| + | ** DDR4-3200 support, up from DDR4-2933 | ||

==== New instructions ==== | ==== New instructions ==== | ||

Zen 2 introduced a number of new [[x86]] instructions: | Zen 2 introduced a number of new [[x86]] instructions: | ||

| + | * <code>{{x86|CLWB}}</code> - Write back modified cache line and may retain line in cache hierarchy | ||

| + | * <code>{{x86|WBNOINVD}}</code> - Write back and do not flush internal caches, initiate same of external caches | ||

| + | * <code>{{x86|MCOMMIT}}</code> - Commit stores to memory | ||

| + | * <code>{{x86|RDPID}}</code> - Read Processor ID | ||

| + | * <code>{{x86|RDPRU}}</code> - Read Processor Register | ||

| + | |||

| + | Furthermore, Zen 2 supports the User-Mode Instruction Prevention ({{x86|UMIP}}) extension. | ||

| + | |} | ||

| + | ---- | ||

| + | |||

| + | === Memory Hierarchy === | ||

| + | {| border="0" cellpadding="5" width="100%" | ||

| + | |- | ||

| + | |width="50%" valign="top" align="left"| | ||

| + | * '''Cache''' | ||

| + | ** '''L0 Op Cache:''' | ||

| + | *** 4,096 Ops, 8-way set associative | ||

| + | **** 64 sets, 8 Op line size | ||

| + | *** Parity protected | ||

| + | ** '''L1I Cache:''' | ||

| + | *** 32 KiB, 8-way set associative | ||

| + | **** 64 sets, 64 B line size | ||

| + | **** Shared by the two threads, per core | ||

| + | *** Parity protected | ||

| + | ** '''L1D Cache:''' | ||

| + | *** 32 KiB, 8-way set associative | ||

| + | **** 64 sets, 64 B line size | ||

| + | *** Write-back policy | ||

| + | *** 4-5 cycles latency for Int | ||

| + | *** 7-8 cycles latency for FP | ||

| + | *** ECC | ||

| + | ** '''L2 Cache:''' | ||

| + | *** 512 KiB, 8-way set associative | ||

| + | **** 1,024 sets, 64 B line size | ||

| + | *** Write-back policy | ||

| + | *** Inclusive of L1 | ||

| + | *** ≥ 12 cycles latency | ||

| + | *** ECC | ||

| + | ** '''L3 Cache:''' | ||

| + | *** ''Matisse, Castle Peak, Rome'': 16 MiB/CCX, shared across all cores | ||

| + | *** ''Renoir'': 4 MiB/CCX, shared across all cores | ||

| + | |width="50%" valign="top" align="left"| | ||

| + | *** 16-way set associative | ||

| + | **** 16,384 sets, 64 B line size | ||

| + | *** Write-back policy, Victim cache | ||

| + | *** 39 cycles average latency | ||

| + | *** ECC | ||

| + | *** QoS Monitoring and Enforcement | ||

| + | * '''System DRAM''' | ||

| + | ** ''Rome'': | ||

| + | *** 8 channels per socket, up to 16 DIMMs, max. 4 TiB | ||

| + | *** Up to PC4-25600L (DDR4-3200 RDIMM/LRDIMM), ECC supported | ||

| + | ** ''Castle Peak'', sTRX4: | ||

| + | *** 4 channels, up to 8 DIMMs, max. 256 GiB | ||

| + | *** Up to PC4-25600U (DDR4-3200 UDIMM), ECC supported | ||

| + | ** ''Matisse'': | ||

| + | *** 2 channels, up to 4 DIMMs, max. 128 GiB | ||

| + | *** Up to PC4-25600U (DDR4-3200 UDIMM), ECC supported | ||

| + | |||

| + | :;Translation Lookaside Buffers | ||

| + | * '''ITLB''' | ||

| + | ** 64 entry L1 TLB, fully associative, all page sizes | ||

| + | ** 512 entry L2 TLB, 8-way set associative | ||

| + | *** 4-Kbyte and 2-Mbyte pages | ||

| + | ** Parity protected | ||

| + | * '''DTLB''' | ||

| + | ** 64 entry L1 TLB, fully associative, all page sizes | ||

| + | ** 2,048 entry L2 TLB, 16-way set associative | ||

| + | *** 4-Kbyte and 2-Mbyte pages, PDEs to speed up table walks | ||

| + | ** Parity protected | ||

| + | |} | ||
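The set counts listed for each cache follow from the usual relation sets = capacity / (ways × line size). The following minimal Python sketch recomputes them from the figures in the list above; the function and dictionary names are illustrative only and do not come from AMD documentation.

```python
KIB = 1024
MIB = 1024 * KIB


def cache_sets(capacity: int, ways: int, line_size: int) -> int:
    """Return the number of sets of a set-associative cache."""
    sets, remainder = divmod(capacity, ways * line_size)
    if remainder:
        raise ValueError("capacity is not a multiple of ways * line size")
    return sets


# (capacity in bytes, ways, line size in bytes), taken from the list above.
ZEN2_CACHES = {
    "L1I": (32 * KIB, 8, 64),
    "L1D": (32 * KIB, 8, 64),
    "L2": (512 * KIB, 8, 64),
    "L3 (16 MiB per CCX)": (16 * MIB, 16, 64),
}


def zen2_cache_sets() -> dict:
    """Compute the set count of every cache in ZEN2_CACHES."""
    return {name: cache_sets(*geometry) for name, geometry in ZEN2_CACHES.items()}


if __name__ == "__main__":
    for name, sets in zen2_cache_sets().items():
        print(f"{name}: {sets} sets")
    # The op cache is sized in ops rather than bytes:
    # 4,096 ops, 8 ways, 8 ops per line.
    print("L0 op cache:", cache_sets(4096, 8, 8), "sets")
```

The same relation applies to the op cache when its capacity and line size are both expressed in ops instead of bytes.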

| + | |||

| + | == All Zen 2 Chips == | ||

| + | |||

| + | <!-- NOTE: | ||

| + | This table is generated automatically from the data in the actual articles. | ||

| + | If a microprocessor is missing from the list, an appropriate article for it needs to be | ||

| + | created and tagged accordingly. | ||

| + | |||

| + | Missing a chip? please dump its name here: http://en.wikichip.org/wiki/WikiChip:wanted_chips | ||

| + | --> | ||

| + | {{comp table start}} | ||

| + | <table class="comptable sortable tc13 tc14 tc15 tc16 tc17 tc18 tc19"> | ||

| + | {{comp table header|main|20:List of all Zen-based Processors}} | ||

| + | {{comp table header|main|12:Processor|4:Features}} | ||

| + | {{comp table header|cols|Family|Core|C|T|TDP|L3|Base|Turbo|Max Mem|Process|Launched|Price|SMT|SEV|SME|TSME}} | ||

| + | {{comp table header|lsep|25:[[Uniprocessors]]}} | ||

| + | {{#ask: [[Category:microprocessor models by amd]] [[instance of::microprocessor]] [[microarchitecture::Zen 2]] [[max cpu count::1]] | ||

| + | |?full page name | ||

| + | |?model number | ||

| + | |?microprocessor family | ||

| + | |?core name | ||

| + | |?core count | ||

| + | |?thread count | ||

| + | |?tdp | ||

| + | |?l3$ size | ||

| + | |?base frequency#GHz | ||

| + | |?turbo frequency#GHz | ||

| + | |?max memory#GiB | ||

| + | |?process | ||

| + | |?first launched | ||

| + | |?release price | ||

| + | |?has simultaneous multithreading | ||

| + | |?has amd secure encrypted virtualization technology | ||

| + | |?has amd secure memory encryption technology | ||

| + | |?has amd transparent secure memory encryption technology | ||

| + | |format=template | ||

| + | |template=proc table 3 | ||

| + | |userparam=18:15 | ||

| + | |mainlabel=- | ||

| + | |valuesep=, | ||

| + | |limit=100 | ||

| + | }} | ||

| + | {{comp table header|lsep|25:[[Multiprocessors]] (dual-socket)}} | ||

| + | {{#ask: [[Category:microprocessor models by amd]] [[instance of::microprocessor]] [[microarchitecture::Zen 2]] [[max cpu count::>>1]] | ||

| + | |?full page name | ||

| + | |?model number | ||

| + | |?microprocessor family | ||

| + | |?core name | ||

| + | |?core count | ||

| + | |?thread count | ||

| + | |?tdp | ||

| + | |?l3$ size | ||

| + | |?base frequency#GHz | ||

| + | |?turbo frequency#GHz | ||

| + | |?max memory#GiB | ||

| + | |?process | ||

| + | |?first launched | ||

| + | |?release price | ||

| + | |?has simultaneous multithreading | ||

| + | |?has amd secure encrypted virtualization technology | ||

| + | |?has amd secure memory encryption technology | ||

| + | |?has amd transparent secure memory encryption technology | ||

| + | |format=template | ||

| + | |template=proc table 3 | ||

| + | |userparam=18:15 | ||

| + | |mainlabel=- | ||

| + | |valuesep=, | ||

| + | |limit=100 | ||

| + | }} | ||

| + | {{comp table count|ask=[[Category:microprocessor models by amd]] [[instance of::microprocessor]] [[microarchitecture::Zen 2]]}} | ||

| + | </table> | ||

| + | {{comp table end}} | ||

| + | |||

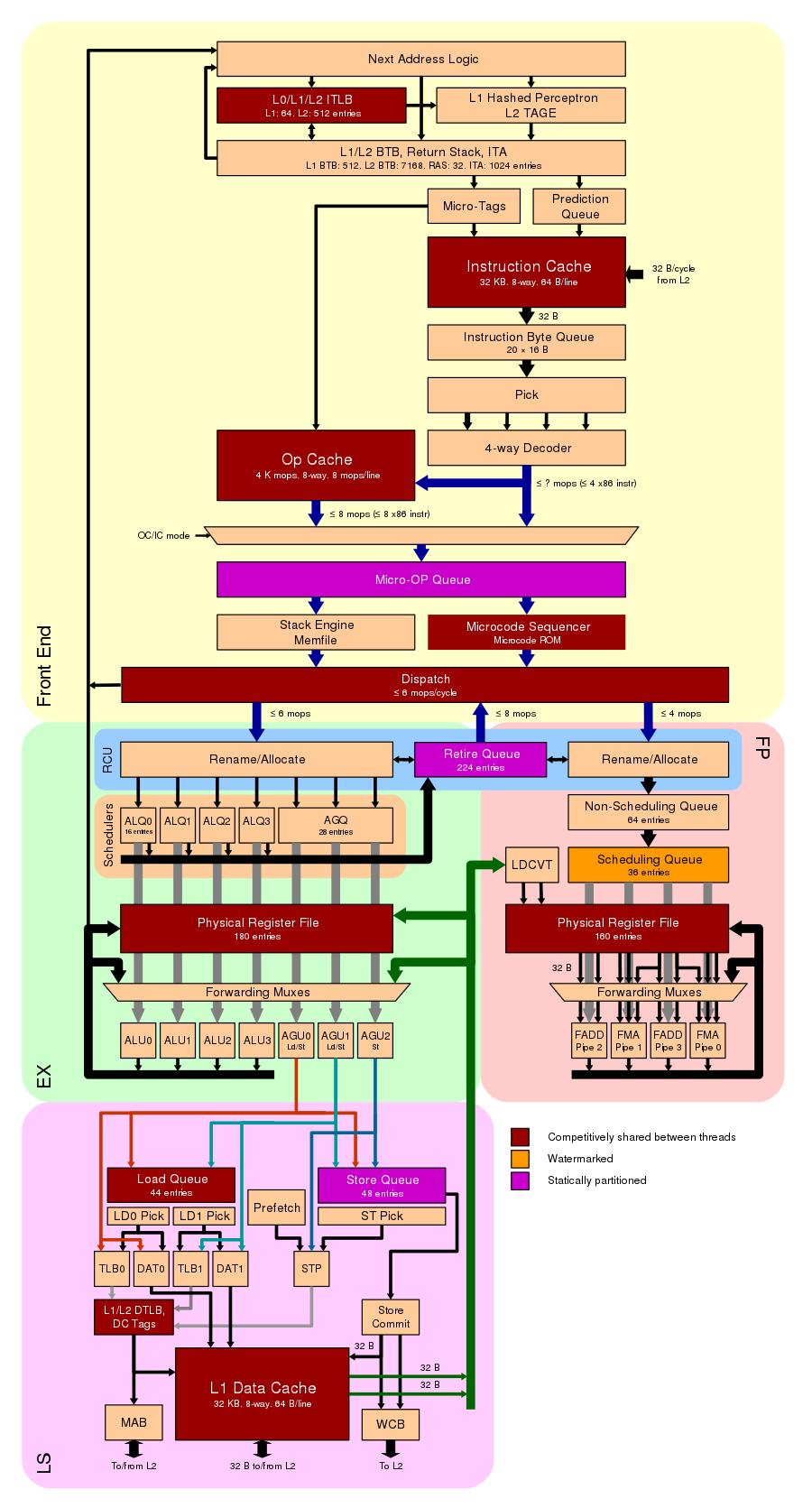

| + | == Block Diagram == | ||

| + | === Individual Core === | ||

| + | [[File:zen 2 core diagram.svg|900px]] | ||

| + | |||

| + | == Core == | ||

| + | Zen 2 largely builds on {{\\|Zen}}. Most of the fine details have not been revealed by AMD yet. | ||

| + | |||

| + | |||

| + | {{work-in-progress}} | ||

| + | |||

| + | |||

| + | |||

| + | === Front End === | ||

| + | In order to feed the backend, which has been widened to support 256-bit operation, the front-end throughput was improved. AMD reported that the [[branch prediction unit]] has been reworked. This includes improvements to the [[prefetcher]] and various undisclosed optimizations to the [[instruction cache]]. The [[µOP cache]] was also tweaked, including changes to the µOP cache tags and to the µOP cache itself, which has been enlarged to improve the instruction stream throughput. | ||

| + | |||

| + | ==== Branch Prediction Unit ==== | ||

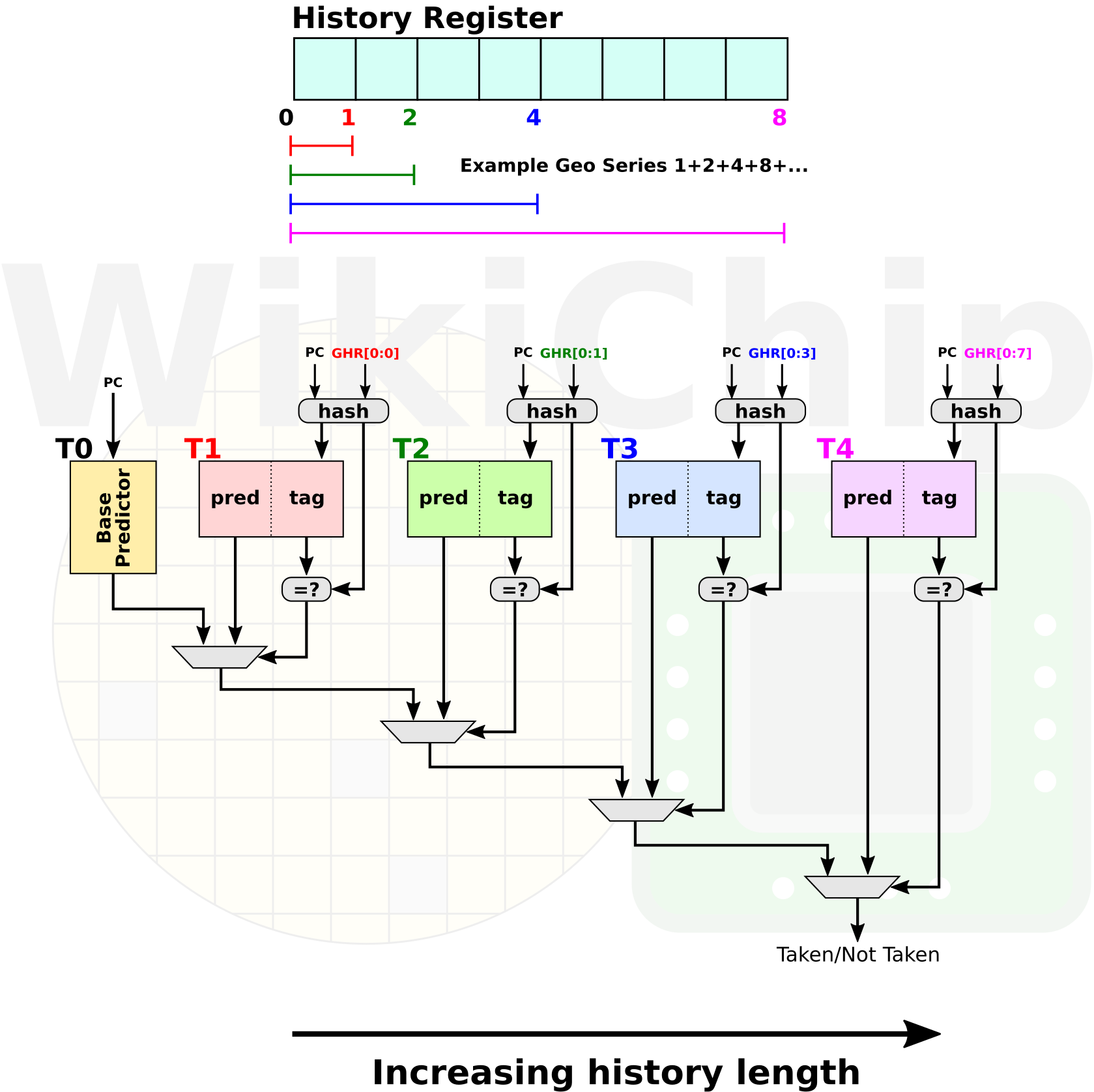

| + | The branch prediction unit guides instruction fetching and attempts to predict branches and their target to avoid pipeline stalls or the pursuit of incorrect execution paths. The Zen 2 BPU almost doubles the branch target buffer capacity, doubles the size of the indirect target array, and introduces a TAGE predictor. According to AMD it exhibits a 30% lower misprediction rate than its perceptron counterpart in the {{\\|Zen}}/{{\\|Zen+}} microarchitecture. | ||

| + | |||

| + | Once per cycle the next address logic determines if branch instructions have been identified in the current 64-byte instruction fetch block, and if so, consults several branch prediction facilities about the most likely target and calculates a new fetch block address. If no branches are expected it calculates the address of the next sequential block. Branches are evaluated much later in the integer execution unit which provides the actual branch outcome to redirect instruction fetching and refine the predictions. The dispatch unit can also cause redirects to handle mispredictions and exceptions. | ||

| + | |||

| + | Zen 2 has a three-level branch target buffer (BTB) which records the location of branch instructions and their target. Each entry can hold up to two branches if they reside in the same 64-byte cache line and the first is a conditional branch, reducing prediction latency and power consumption. Additional branches in the same cache line occupy another entry and increase latency accordingly. The L0 BTB holds 8 forward and 8 backward taken branches, up from 4 and 4 in the Zen/Zen+ microarchitecture, no calls or returns, and predicts with zero bubbles. The L1 BTB has 512 entries (256 in Zen) and creates one bubble if its prediction differs from the L0 BTB. The L2 BTB has 7168 entries (4096 in Zen) and creates four bubbles if its prediction differs from the L1 BTB. | ||

| + | |||

| + | A bubble is a pipeline stage performing no work because its input was delayed. Bubbles propagate to later stages and add up as different pipelines stall for unrelated reasons. The various decoupling queues in this design intend to hide bubbles or reduce their impact and allow earlier pipelines to run ahead. | ||

| + | |||

| + | A 32-entry return address stack (RAS) predicts return addresses from a near call. Far control transfers (far call or jump, SYSCALL, IRET, etc.) are not subject to branch prediction. 31 entries are usable in single-threaded mode, 15 per thread in dual-threaded mode. At the address of a CALL instruction the address of the following instruction is pushed onto the stack; at a RET instruction the address is popped. The RAS can recover from most mispredictions and is flushed otherwise. It includes an optimization for calls to the next address, an IA-32 idiom to obtain a copy of the instruction pointer enabling position independent code. | ||
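The push-on-CALL, pop-on-RET behavior described above can be illustrated with a toy model. This is a sketch under stated assumptions, not AMD's implementation: the class name, the circular overwrite-oldest policy on overflow, and the `None` result on underflow are all hypothetical choices made for illustration.

```python
class ReturnAddressStack:
    """Toy circular return address stack (illustrative, not AMD's design)."""

    def __init__(self, entries: int = 31):
        # 31 usable entries in single-threaded mode, per the text above.
        self.entries = entries
        self.slots = [None] * entries
        self.top = 0    # index of the next free slot
        self.depth = 0  # number of valid entries

    def on_call(self, call_address: int, call_length: int) -> None:
        """Push the address of the instruction following the CALL."""
        self.slots[self.top] = call_address + call_length
        self.top = (self.top + 1) % self.entries
        # Assumption: on overflow the oldest entry is silently overwritten.
        self.depth = min(self.depth + 1, self.entries)

    def on_ret(self):
        """Pop the predicted return address, or None if the stack is empty."""
        if self.depth == 0:
            return None
        self.top = (self.top - 1) % self.entries
        self.depth -= 1
        return self.slots[self.top]


if __name__ == "__main__":
    ras = ReturnAddressStack()
    ras.on_call(0x1000, 5)  # outer call, 5-byte CALL instruction
    ras.on_call(0x2000, 5)  # nested call
    print(hex(ras.on_ret()), hex(ras.on_ret()))
```

Returns are predicted in last-in, first-out order, so the nested call's return address comes back first.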

| + | |||

| + | The indirect target array (ITA), with 1024 entries (up from 512 in Zen), predicts indirect branches, for instance calls through a function pointer. Branches that always go to the same target are predicted using the static target from the BTB entry. If a branch has multiple targets, the predictor chooses among them using global history at L2 BTB correction latency. | ||

| + | |||

| + | The conditional branch direction predictor predicts if a near branch will be taken or not. Never taken branches are not entered into the BTB, thereby implicitly predicted not-taken. A taken branch is initially predicted always-taken, and dynamically predicted if its behavior changes. The Zen/Zen+ microarchitecture uses a hashed perceptron predictor for this purpose which is supplemented in Zen 2 by a TAGE predictor. AMD did not disclose details; the following explanations describe predictors of this type in general. | ||

| + | |||

| + | <!-- From https://fuse.wikichip.org/news/2458/a-look-at-the-amd-zen-2-core, a bit shortened. --> | ||

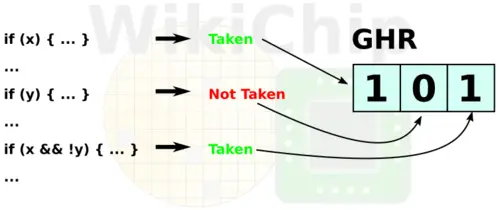

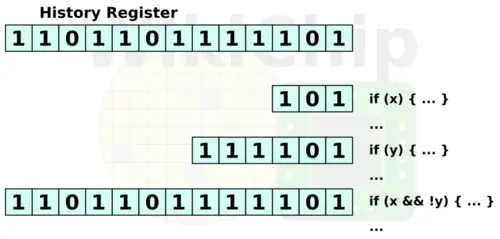

| + | When a branch takes place, it is stored in the branch target buffer so that subsequent branches can more easily be determined and taken (or not). Modern microprocessors such as Zen take this further by storing not only the history of the last branch but rather that of the last few branches in a global history register (GHR) in order to extract correlations between branches (e.g., if an earlier branch is taken, maybe the next branch will also likely be taken). | ||

| + | |||

| + | [[File:zen-1-2-ghr.png|500px|center]] | ||

| + | |||

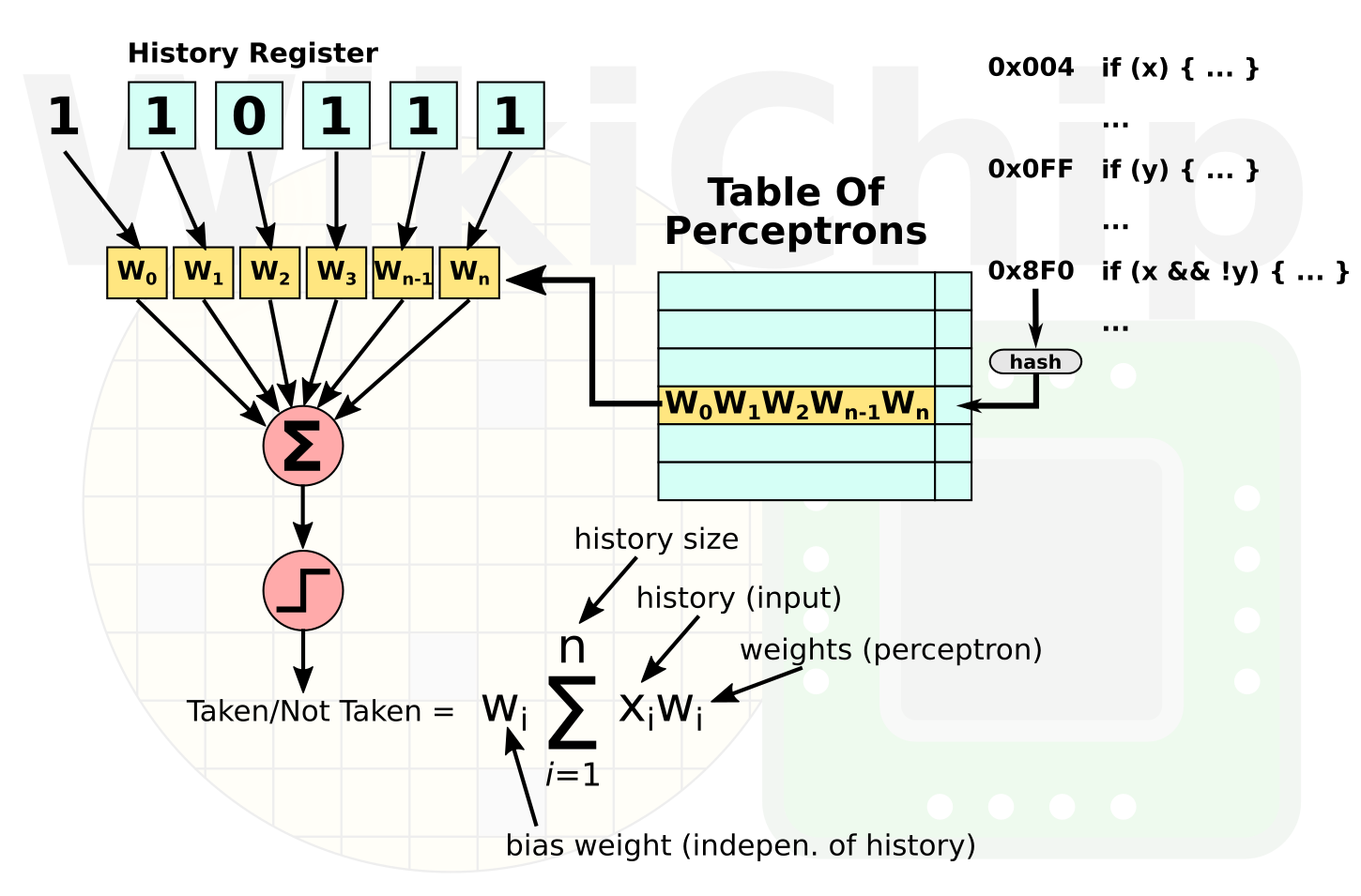

| + | Perceptrons are the simplest form of machine learning and lend themselves to somewhat easier hardware implementations compared to some of the other machine learning algorithms. They also tend to be more accurate than predictors like gshare but they do have more complex implementations. When the processor encounters a conditional branch, its address is used to fetch a perceptron from | ||

| + | a table of perceptrons. A perceptron for our purposes is nothing more than a vector of weights. Those weights represent the correlation between the outcome of a historic branch and the branch being predicted. For example, consider the following three patterns: “TTN”, “NTN”, and “NNN”. If all three patterns resulted in the next branch not being taken, then perhaps we can say that there is no correlation between the first two branches and assign them very little weight. The result of prior branches is fetched from the global history register. The individual bits from that register are used as inputs. The output value is the computed dot product of the weights and the history of prior branches. A negative output, in this case, might mean ‘not taken’ while all other values might be predicted as ‘taken’. It’s worth pointing out that other inputs beyond branch histories can also be used for inferring correlations, though it’s unknown if any real-world implementation makes use of that idea. The implementation on Zen is likely much more complex, sampling different kinds of histories. Nonetheless, the way it works remains the same. | ||
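The dot-product prediction described above can be sketched in a few lines of Python. This is a generic textbook-style perceptron predictor, not AMD's hashed perceptron: the bias weight, the training rule, the threshold value, and all names are assumptions chosen for illustration. History bits are encoded as +1 (taken) and -1 (not taken).

```python
# Histories from the example above, oldest branch first (True = taken).
PATTERNS = [
    [True, True, False],    # "TTN"
    [False, True, False],   # "NTN"
    [False, False, False],  # "NNN"
]


def predict(weights, history):
    """Return the perceptron output; negative means 'not taken'."""
    inputs = [1 if taken else -1 for taken in history]
    # weights[0] is a bias term; the rest pair up with the history bits.
    return weights[0] + sum(w * x for w, x in zip(weights[1:], inputs))


def train(weights, history, taken, threshold=4):
    """Update weights in place on a misprediction or a low-confidence output."""
    output = predict(weights, history)
    predicted_taken = output >= 0
    if predicted_taken != taken or abs(output) <= threshold:
        target = 1 if taken else -1
        weights[0] += target
        for i, was_taken in enumerate(history, start=1):
            weights[i] += target * (1 if was_taken else -1)
    return predicted_taken


def demo():
    """Train on the three patterns, each followed by a not-taken branch."""
    weights = [0, 0, 0, 0]
    for _ in range(2):
        for history in PATTERNS:
            train(weights, history, taken=False)
    return weights


if __name__ == "__main__":
    print(demo())
```

After training, the weights attached to the two oldest history bits stay small in magnitude compared with the weight of the most recent bit, which mirrors the observation in the text that the first two branches carry little correlation with the outcome.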

| + | |||

| + | [[File:zen-1-2-hp-1.png|center]] | ||

| + | |||

| + | Given the Zen pipeline length and width, a bad prediction could result in over 100 slots getting flushed. This directly translates to a loss of performance. Zen 2 keeps the hashed perceptron predictor but adds a new second-level TAGE predictor. This predictor, first proposed in 2006 by Andre Seznec, is an improvement on Michaud’s PPM-like predictor. The TAGE predictor has won all four of the last championship branch prediction (CBP) contests (2006-2016). TAGE relies on the idea that different branches in the program require different history lengths. In other words, for some branches, very small histories work best. For example, a 1-bit predictor: if a certain branch was taken before, it will be taken again. A different branch might rely on prior branches, hence requiring a much longer multi-bit history to adequately predict if it will be taken. The TAgged GEometric history length (TAGE) predictor consists of multiple global history tables that are indexed with global history registers of varying lengths in order to cover all of those cases. The lengths of the registers used form a geometric series, hence the name. | ||

| + | |||

| + | [[File:zen-1-2-tage.png|center]] | ||

| + | |||

| + | The idea with the TAGE predictor is to figure out which amount of branch history is best for which branch, prioritizing the longest history over shorter history. | ||
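Two ideas from the TAGE description can be shown in a short sketch: history lengths that form a geometric series, and preferring the prediction from the longest matching history. The parameters (shortest length 5, ratio 2, four tables) and function names below are hypothetical; AMD has not disclosed the table count or history lengths used in Zen 2.

```python
def geometric_history_lengths(shortest: int, ratio: float, tables: int) -> list:
    """History length of each tagged table, rounded to the nearest integer."""
    return [int(shortest * ratio ** i + 0.5) for i in range(tables)]


def tage_select(base_prediction: bool, tagged_hits: list) -> bool:
    """Pick the prediction backed by the longest history.

    tagged_hits holds (history_length, prediction) pairs for the tagged
    tables whose tag matched; the base predictor is used if none matched.
    """
    if not tagged_hits:
        return base_prediction
    return max(tagged_hits, key=lambda hit: hit[0])[1]


if __name__ == "__main__":
    print(geometric_history_lengths(5, 2, 4))
    # Tables with 5 and 40 bits of history both hit; the longer one wins.
    print(tage_select(True, [(5, True), (40, False)]))
```

A real TAGE predictor also maintains per-entry confidence counters, usefulness bits, and an allocation policy, all of which are omitted here.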

| + | |||

| + | [[File:zen-1-2-hr-lengths.png|500px|center]] | ||

| + | |||

| + | This multi-predictor scheme is similar to the layering of the branch target buffers. The first-level predictor, the perceptron, is used for quick lookups (e.g., single-cycle resolution). The second-level TAGE predictor is a complex predictor that requires many cycles to complete and therefore must be layered on top of the simple predictor. In other words, the L2 predictor is slower but better and is therefore used to double check the result of the faster and less accurate predictor. If the L2 predictor differs from the L1 one, a minor flush occurs as the TAGE predictor overrides the perceptron predictor and the fetch goes back and uses the L2 prediction as it’s assumed to be the more accurate prediction. | ||

| + | <!-- End of excerpt. --> | ||

| + | |||

| + | With the Zen family the translation of virtual to physical fetch addresses moved into the branch unit, which allows instruction fetching to begin earlier. The translation is assisted by a two-level translation lookaside buffer (TLB), unchanged in size from Zen to Zen 2. The fully-associative L1 instruction TLB contains 64 entries and holds 4-Kbyte, 2-Mbyte, or 1-Gbyte page table entries. The 512 entries of the 8-way set-associative L2 instruction TLB can hold 4-Kbyte and 2-Mbyte page table entries. 1-Gbyte pages are <i>smashed</i> into 2-Mbyte entries in the L2 ITLB. A hardware page table walker handles L2 ITLB misses. | ||

| + | |||

| + | Address translation, instruction cache and op cache lookups start in parallel. Micro-tags predict the way where the instruction block may be found; these hits are qualified by a cache tag lookup in a following pipeline stage. On an op cache hit the op cache receives a fetch address and enters macro-ops into the micro-op queue. If the machine was not in OC-mode already, the op cache stalls until the instruction decoding pipeline is empty. Otherwise the address, together with the information whether and where the fetch block resides in the instruction cache, is entered into a prediction queue decoupling branch prediction from the instruction fetch unit. | ||

| + | |||

| + | ==== Instruction Fetch and Decode Unit ==== | ||

| + | |||

| + | The instruction fetch unit reads 32 bytes per cycle from a 32 KiB, 8-way set associative, parity protected L1 instruction cache (IC), which replaced the 64 KiB, 4-way set associative instruction cache in the {{\\|Zen}}/{{\\|Zen+}} microarchitecture. The cache line size is 64 bytes. On a miss, cache lines are fetched from the L2 cache which has a | ||

| + | bandwidth of 32 bytes per cycle. The instruction cache generates fill requests for the cache line which includes the miss address and up to thirteen additional 64-byte blocks. | ||

| + | They are prefetched from addresses generated by the branch prediction unit and prepared in the prediction queue. | ||

| + | |||

| + | A 20-entry instruction byte queue (IBQ) decouples the fetch and decode units. Each entry holds 16 instruction bytes aligned on a 16-byte boundary. 10 entries are available to each thread in SMT mode. The IBQ, as apparently all data structures maintained by the core except the L1 and L2 data cache, is parity protected. A parity error causes a machine check exception; the core recovers by reloading the data from memory. The caches are ECC protected to correct single (and double?) bit errors and disable malfunctioning ways. | ||

| + | |||

| + | An align stage scans two 16-byte windows per cycle to determine the boundaries of up to four x86 instructions. The length of x86 instructions is variable and ranges from 1 to | ||

| + | 15 bytes. Only the first slot can pick instructions longer than 8 bytes. There is no penalty for decoding instructions with many prefixes in AMD Family 16h and later processors. | ||

| + | |||

| + | In another pipeline stage or stages the instruction decoder converts up to four x86 instructions per cycle to macro-ops. According to AMD the instruction decoder can send up to four instructions per cycle to the op cache and micro-op queue. This has to encompass at least four, and various sources suggest no more than four, macro-ops. | ||

| + | |||

| + | A macro-op is a fixed length, uniform representation of (usually) one x86 instruction, comprising an ALU and/or a memory operation. The latter can be a load, a store, or a load | ||

| + | and store to the same address. A micro-op in AMD parlance is one of these primitive operations as well as the subset of a macro-op relevant to this operation. AMD refers to | ||

| + | instructions decoded into one macro-op as "FastPath Single" type. More complex x86 instructions, the "VectorPath" type, are expanded into a fixed or variable number of macro-ops by the microcode sequencer, and at this stage probably represented by a macro-op containing a microcode ROM entry address. In the Zen/Zen+ microarchitecture AVX-256 instructions which perform the same operation on the 128-bit upper and lower half of a YMM register are decoded into a pair of macro-ops, the "FastPath Double" type. Zen 2 decodes these instructions into a single macro-op. There are, however, other instructions which generate two macro-ops. Whether these are "FastPath Double" decoded or microcoded is unclear. Branch fusion, discussed below, combines two x86 instructions into a single macro-op. | ||

| + | |||

| + | The op cache (OC) is a 512-entry, up from 256-entry in Zen, 8-way set associative, parity protected cache of previously decoded instructions. Each entry contains up to 8 sequential instructions ending in the same 64-byte aligned memory region, resulting in a maximum capacity of 4096 instructions, or rather, macro-ops. The op cache can send up to 8 macro-ops per cycle to the micro-op queue in lieu of using the traditional instruction fetch and decode pipeline stages. A transition from IC to OC mode is only possible at a branch target, and the machine generally remains in OC mode until an op cache miss occurs on a fetch address. More details can be gleaned from "Operation Cache", patent [https://patents.google.com/patent/WO2018106736A1 WO 2018/106736 A1]. | ||

| + | |||

| + | Bypassing the clock-gated fetch and decode units, and providing up to twice as many instructions per cycle, the op cache improves the decoding pipeline latency and | ||

| + | bandwidth and reduces power consumption. AMD stated that doubling its size at the expense of halving the instruction cache in Zen 2 results in a better trade-off. | ||

| + | |||

| + | AMD Family 15h and later processors support branch fusion, combining a CMP or TEST instruction with an immediately following conditional branch into a single macro-op. Instructions with a RIP-relative address or both a displacement and an immediate operand are not fused. Reasons may be an inability to handle two RIP-relative operands in one operation and limited space in a macro-op. According to Agner Fog the Zen microarchitecture can process two fused branches per cycle if the branches are not taken, one per two cycles if taken. AMD diagrams refer to the output of the Zen 2 op cache as fused instructions and the aforementioned patent confirms that the op cache can contain branch-fused | ||

| + | instructions. Whether they are fused when entering the cache, or whether the instruction decoder sends fused macro-ops to the op cache as well as the micro-op queue, is unclear. | ||

| + | |||

| + | A micro-op queue of undocumented depth, supposedly 72 entries in the Zen/Zen+ microarchitecture, decouples the decoding and dispatch units. Microcoded instructions are sent to the microcode sequencer which expands them into a predetermined sequence of macro-ops stored in the microcode ROM, temporarily inhibiting the output of macro-ops from the micro-op queue. A patch RAM supplements the microcode ROM and can hold additional sequences. The microcode sequencer supports branching within the microcode and includes match registers to intercept a limited number of microcode entry addresses and redirect execution to the patch RAM. | ||

| + | |||

| + | The stack engine unites a sideband stack optimizer (SSO) and a stack tracker. The former removes dependencies on the RSP register in a chain of PUSH, POP, CALL, and RET | ||

| + | instructions. An SSO has been present in AMD processors since the {{\\|K10}} microarchitecture. The stack tracker predicts dependencies between pairs of PUSH and POP instructions. The | ||

| + | memfile similarly predicts dependencies between stores and loads accessing the same data in memory, e.g. local variables. Both functions use memory renaming to facilitate | ||

| + | store-to-load forwarding bypassing the load-store unit. <!-- A stack tracker in Zen 2 is inferred from Mike Clark's presentation on the Zen µarch at Hot Chips 28, August 23 2016, specifically his remarks on slide 9, see www.youtube.com/watch?v=sT1fEohOOQ0. --> | ||

| + | |||

| + | Dependencies on the RSP arise from the side effect of decrementing and incrementing the stack pointer. A stack operation can not proceed until the previous one updated the register. The SSO lifts these adjustments into the front end, calculating an offset which falls and rises with every PUSH and POP, and turns these instructions into stores and loads with RSP + offset addressing. The stack tracker records PUSH and POP instructions and their offset in a table. The memfile records stores and their destination, given by base, index, and displacement since linear or physical addresses are still unknown. They remain on file until the instruction retires. A temporary register is assigned to each store. When the store is later executed, the data is copied into this register (possibly by mapping it to the physical register backing the source register?) as well as being sent to the store queue. Loads are compared to recorded stores. A load predicted to match a previous store is modified to speculatively read the data from the store's temporary register. This is resolved in the integer or FP rename unit, potentially as a zero latency register-register move. The load is also sent to the LS unit to verify the prediction, and if incorrect, replay the instructions depending on the load with the correct data. It should be noted that several loads and stores can be dispatched in one cycle and this optimization is applied to all of them. | ||
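
The offset bookkeeping described above can be illustrated with a small model. This is only a sketch under stated assumptions: the function names, the tuple encoding of operations, and the 8-byte slot size (64-bit mode) are hypothetical and do not reflect how the hardware actually encodes this state.

```python
# Sketch of a sideband stack optimizer (SSO) plus a simple stack tracker.
# All names and the tuple encoding are illustrative assumptions.

SLOT = 8  # bytes moved by PUSH/POP in 64-bit mode (assumed)


def rewrite_stack_ops(ops):
    """Turn ('push', reg) / ('pop', reg) into stores/loads at RSP + offset."""
    offset = 0  # running delta relative to the RSP value at the start
    rewritten = []
    for kind, reg in ops:
        if kind == "push":
            offset -= SLOT  # PUSH decrements the stack pointer first
            rewritten.append(("store", reg, offset))
        elif kind == "pop":
            rewritten.append(("load", reg, offset))
            offset += SLOT  # POP increments the stack pointer afterwards
        else:
            raise ValueError(f"unsupported op: {kind}")
    return rewritten, offset  # final offset is the pending RSP adjustment


def forward_pairs(rewritten):
    """Pair each load with the most recent store to the same offset."""
    last_store = {}
    pairs = []
    for kind, reg, off in rewritten:
        if kind == "store":
            last_store[off] = reg
        elif off in last_store:
            pairs.append((last_store[off], reg))  # (source reg, dest reg)
    return pairs


ops = [("push", "rax"), ("push", "rbx"), ("pop", "rcx")]
rewritten, pending = rewrite_stack_ops(ops)
print(rewritten, pending, forward_pairs(rewritten))
```

In this model none of the rewritten operations depends on the result of the previous stack pointer update, and the POP into `rcx` is paired with the PUSH of `rbx`, which is the kind of dependency the stack tracker is described as predicting.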

| + | |||

| + | The dispatch unit distributes macro-ops to the out-of-order integer and floating point execution units. It can dispatch up to six macro-ops per cycle. | ||

| + | |||

| + | === Execution Engine === | ||

| + | AMD stated that both the dispatch bandwidth and the retire bandwidth have been increased. | ||

| + | |||

| + | ==== Integer Execution Unit ==== | ||

| + | The integer execution (EX) unit consists of a dedicated rename unit, five schedulers, a 180-entry physical register file (PRF), four ALU and three AGU pipelines, and a 224-entry retire queue shared with the floating point unit. The depth of the four ALU scheduler queues increased from 14 to 16 entries in Zen 2. The two AGU schedulers with a 14-entry queue of the Zen/Zen+ microarchitecture were replaced by one unified scheduler with a 28-entry queue. A third AGU pipeline only for store operations was added as well. The size of the PRF increased from 168 to 180 entries, the capacity of the retire queue from 192 to 224 entries. | ||

| + | |||

| + | The retire queue and the integer and floating point rename units form the retire control unit (RCU) tracking instructions, registers, and dependencies in the out-of-order execution units. Macro-ops stay in the retire queue until completion or until an exception occurs. When all macro-ops constituting an instruction completed successfully the instruction becomes eligible for retirement. Instructions are retired in program order. The retire queue can track up to 224 macro-ops in flight, 112 per thread in SMT mode, and retire up to eight macro-ops per cycle. | ||

| − | + | The rename unit receives up to six macro-ops per cycle from the dispatch unit. It maps general purpose architectural registers and temporary registers used by microcoded instructions to physical registers and allocates physical registers to receive ALU results. The PRF has 180 entries. Up to 38 registers per thread are mapped to architectural or temporary registers, the rest are available for out-of-order renames. | |

| − | + | ||

| − | * < | + | Zen 2, like the Zen/Zen+ microarchitecture, supports move elimination, performing register to register moves with zero latency in the rename unit while consuming no scheduling or execution resources. This is implemented by mapping the destination register to the same physical register as the source register and freeing the physical register previously backing the destination register. Given a chain of move instructions, registers can be renamed several times in one cycle. Moves of partial registers such as AL, AH, or AX are not eliminated; they require a register merge operation in an ALU. |
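
A toy register alias table shows the remapping idea. This is a minimal sketch, not AMD's implementation: the class and method names are hypothetical, reference counting is an assumed bookkeeping scheme, and physical registers are released immediately here, whereas a real core would wait until the overwriting instruction retires.

```python
# Toy rename table with move elimination. Reference counting and immediate
# freeing are simplifying assumptions made for illustration only.

class RenameTable:
    def __init__(self, arch_regs, num_phys):
        self.free = list(range(num_phys))  # free physical registers
        self.refs = {}                     # physical reg -> mapping count
        self.map = {}                      # architectural -> physical
        for reg in arch_regs:
            self.map[reg] = self._alloc()

    def _alloc(self):
        phys = self.free.pop(0)
        self.refs[phys] = 1
        return phys

    def _release(self, phys):
        self.refs[phys] -= 1
        if self.refs[phys] == 0:
            del self.refs[phys]
            self.free.append(phys)

    def write(self, dst):
        """An ordinary ALU result: dst gets a fresh physical register."""
        old = self.map[dst]
        self.map[dst] = self._alloc()
        self._release(old)
        return self.map[dst]

    def move(self, dst, src):
        """MOV dst, src handled in rename: dst aliases src's register."""
        old, phys = self.map[dst], self.map[src]
        if phys != old:
            self.refs[phys] += 1
            self.map[dst] = phys
            self._release(old)
        return phys


rt = RenameTable(["rax", "rbx", "rcx"], num_phys=6)
rt.move("rbx", "rax")  # rbx now shares rax's physical register
rt.move("rcx", "rbx")  # a chain of moves keeps aliasing the same register
print(rt.map, rt.free)
```

After the two moves all three architectural registers point at one physical register and the two registers that previously backed `rbx` and `rcx` are back on the free list, without any micro-op having been issued to an ALU.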

| + | |||

| + | Earlier AMD microarchitectures, apparently including Zen/Zen+, recognize zeroing idioms such as XOR-ing a register with itself to eliminate the dependency on the source register. Zen 2 likely inherits this optimization but AMD did not disclose details. | ||

| + | |||

| + | The ALU pipelines carry out integer arithmetic and logical operations and evaluate branches. Each ALU pipeline is headed by a scheduler with a 16-entry micro-op queue. The scheduler tracks the availability of operands and issues up to one micro-op per cycle which is ready for execution, oldest first, to the ALU. Together all schedulers in the core can issue up to 11 micro-ops per cycle (four ALU, three AGU, and four FP); this is not sustainable, however, due to the limited dispatch and retire bandwidth. The PRF or the bypass network supply up to two operands to each pipeline. The bypass network enables back-to-back execution of dependent instructions by forwarding results from the ALUs to the previous pipeline stage. Data from load operations is <i>superforwarded</i> through the bypass network, obviating a write and read of the PRF. | ||

| + | |||

| + | The integer pipelines are asymmetric. All ALUs are capable of all integer operations except multiplies, divides, and CRC which are dedicated to one ALU each. The fully pipelined 64 bit × 64 bit multiply unit has a latency of three cycles. With two destination registers the latency becomes four cycles and throughput one per two cycles. The radix-4 integer divider can compute two result bits per cycle. | ||

| + | |||

| + | The address generation pipelines compute a linear memory address from the operands of a load or store micro-op. That can be a segment, base, and index register, an index scale factor, and a displacement. Address generation is optimized for simple address modes with a zeroed segment register. If two or three additions are required the latency increases by one cycle. Three-operand LEA instructions are also sent to an AGU and have a two-cycle latency; the result is inserted back into the ALU2 or ALU3 pipeline. Load and store micro-ops stay in the 28-entry address generation queue (AGQ) until they can be issued. The scheduler tracks the availability of operands and free entries in the load or store queue, and issues up to three ready micro-ops per cycle to the address generation units (AGUs). Two AGUs can generate addresses for load operations and send them to the load queue. All three AGUs can generate addresses for store operations and send them to the store queue. Some address checks, e.g. for segment boundary violations, are performed on the side. Store data is supplied by an integer or floating point ALU. | ||

| + | |||

| + | AMD did not disclose the rationale for adding a third store AGU. Three-way address generation may be necessary to realize the potential of the load-store unit to perform two 256-bit loads and one store per cycle. Remarkably Intel made the same improvements, doubling the L1 cache bandwidth and adding an AGU, in the {{intel|Haswell|l=arch}} microarchitecture. | ||

| + | |||

| + | ==== Floating Point Unit ==== | ||

| + | Like the {{\\|Zen}}/{{\\|Zen+}} microarchitecture, the Zen 2 floating point unit utilizes a coprocessor architectural model comprising a dedicated rename unit, a single 4-issue, out-of-order scheduler, a 160-entry physical register file (PRF), and four execution pipelines. The in-order retire queue is shared with the integer unit. The FPU handles x87, MMX, SSE, and AVX | ||

| + | instructions. FP loads and stores co-opt the EX unit for address calculations and the LS unit for memory accesses. | ||

| + | |||

| + | In the Zen/Zen+ microarchitecture the floating point physical registers, execution units, and data paths are 128 bits wide. For efficiency AVX-256 instructions which perform the same operation on the 128-bit upper and lower half of a YMM register are decoded into two macro-ops which pass through the FPU individually as execution resources become available and retire together. Accordingly the peak throughput is four SSE/AVX-128 instructions or two AVX-256 instructions per cycle. | ||

| + | |||

| + | Zen 2 doubles the width of the physical registers, execution units, and data paths to 256 bits. The L1 data cache bandwidth was doubled to match. The number of micro-ops issued by the FP scheduler remains four, implying most AVX-256 instructions decode to a single macro-op which conserves queue entries and reduces pressure on RCU and scheduling resources. AMD did not disclose how the FPU was restructured. Die shots suggest two execution blocks splitting the PRF and FP ALUs, one operating on the lower 128 bits of a YMM register, executing x87, MMX, SSE, and AVX instructions, the other on the upper 128 bits for AVX-256 instructions. This improvement doubles the peak throughput of AVX-256 instructions to four per cycle, or in other words, up to 32 [[FLOPs]]/cycle in single precision or up to 16 [[FLOPs]]/cycle in double precision. Another improvement reduces the latency of double-precision vector multiplications from 4 to 3 cycles, equal to the latency of single-precision multiplications. The latency of fused multiply-add (FMA) instructions remains 5 cycles. | ||
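
The FLOPs figures quoted above can be reproduced with simple arithmetic. The sketch below assumes the peak is reached with two FMA-capable pipes, each FMA counting as two floating point operations per lane; the function name and parameters are illustrative.

```python
# Back-of-the-envelope peak FLOPs per cycle per core.
# Assumption: two FMA pipes, one multiply and one add counted per lane.

def peak_flops_per_cycle(vector_bits, element_bits, fma_pipes=2, flops_per_fma=2):
    lanes = vector_bits // element_bits  # elements per vector register
    return fma_pipes * lanes * flops_per_fma


print(peak_flops_per_cycle(256, 32))  # single precision, 256-bit data path
print(peak_flops_per_cycle(256, 64))  # double precision, 256-bit data path
```

With 256-bit vectors this yields 32 single-precision and 16 double-precision FLOPs per cycle, matching the numbers given in the text.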

| + | |||

| + | The rename unit receives up to four macro-ops per cycle from dispatch. It maps x87, MMX, SSE, and AVX architectural registers and temporary registers used by microcoded instructions to physical registers. The floating point control/status register is renamed as well. MMX and x87 registers occupy the lowest 64 or 80 bits of a PR. The <i>Zen/Zen+</i> rename unit allocates two 128-bit PRs for each YMM register. Only one PR is needed for SSE and AVX-128 instructions, the upper half of the destination YMM register in another PR remains unchanged or is zeroed, respectively, which consumes no execution resources. (SSE instructions behave this way for compatibility with legacy software which is unaware of, and does not preserve, the upper half of YMM registers through library calls or interrupts.) Zen 2 allocates a single 256-bit PR and tracks in the register allocation table (RAT) if the upper half of the YMM register was zeroed. This necessitates register merging when SSE and AVX instructions are mixed and the upper half of the YMM register contains non-zero data. To avoid this the AVX ISA exposes an SSE mode where the FPU maintains the upper half of YMM registers separately. Zen 2 handles transitions between the SSE and AVX mode by microcode which takes approximately 100 cycles in either direction. Zeroing the upper half of all YMM registers with the VZEROUPPER or VZEROALL instruction before executing SSE instructions prevents the transition. | ||

| + | |||

| + | Zen 2 inherits the move elimination and XMM register merge optimizations from its predecessors. Register to register moves are performed by renaming the destination register and do not occupy any scheduling or execution resources. XMM register merging occurs when SSE instructions such as SQRTSS leave the upper 64 or 96 bits of the destination register unchanged, causing a dependency on previous instructions writing to this register. AMD family 15h and later processors can eliminate this dependency in a sequence of scalar FP instructions by recording in the RAT if those bits were zeroed. By setting a Z-bit in the RAT the rename unit also tracks if an architectural register was completely zeroed. All-zero registers are not mapped, the zero data bits are injected at the bypass network which conserves power and PRF entries, and allows for more instructions in flight. Earlier AMD microarchitectures, apparently including Zen/Zen+, recognize zeroing idioms such as XORPS combining a register with itself and eliminate the dependency on the source register. AMD did not disclose if or how this is implemented in Zen 2. Family 16h processors recognize zeroing idioms in the instruction decode unit and set the Z-bit on the destination register in the floating point rename unit, completing the operation without consuming scheduling or execution resources. | ||

| + | |||

| + | As in the Zen/Zen+ microarchitecture the 64-entry non-scheduling queue decouples dispatch and the FP scheduler. This allows dispatch to send operations to the integer side, in particular to expedite floating point loads and store address calculations, while the FP scheduler, whose capacity cannot be arbitrarily increased for complexity reasons, is busy with higher latency FP operations. The 36-entry out-of-order scheduler issues up to four micro-ops per cycle to the execution pipelines. A 160-entry physical register file holds the speculative and committed contents of architectural and temporary registers. The PRF has 8 read ports and 4 write ports for ALU results, each 256 bits wide in Zen 2, and two additional write ports supporting up to two 256-bit load operations per cycle, up from two 128-bit loads in Zen. The load convert (LDCVT) logic converts data to the internal register file format. The FPU is capable of <i>superforwarding</i> load data to dependent instructions through the bypass network, obviating a write and read of the PRF. The bypass network enables back-to-back execution of dependent instructions by forwarding results from the FP ALUs to the previous pipeline stage. | ||

| + | |||

| + | The floating point pipelines are asymmetric, each supporting a different set of operations, and the ALUs are grouped in domains, to conserve die space and reduce signal path lengths which permits higher clock frequencies. The number of execution resources available reflects the density of different instruction types in x86 code. Each pipe receives two operands from the PRF or bypass network. Pipe 0 and 1 support | ||

| + | FMA instructions which take three operands; The third operand is obtained by borrowing a PRF read port from pipe 3, stalling this pipe for one cycle, unless the operand is available on the bypass network. All pipes can perform logical operations. Other operations are supported by one, two, or three pipes. The execution domains distinguish vector integer operations, | ||

| + | floating point operations, and store operations. Instructions consuming data produced in another domain incur a one cycle penalty. Only pipe 2 executes floating point store-data micro-ops, sending data to the store queue at a rate of up to one 256-bit store per cycle, up from one 128-bit store in Zen. It also takes care of transfers between integer and FP registers on dedicated data busses. | ||

| + | |||

| + | Like Zen/Zen+, the Zen 2 FPU handles denormal floating-point values natively, although this can still incur a small penalty in some instances (MUL/DIV/SQRT). | ||

| + | |||

| + | ==== Load-Store Unit ==== | ||

| + | The load-store unit handles memory reads and writes. The width of data paths and buffers doubled from 128 bits in the {{\\|Zen}}/{{\\|Zen+}} microarchitecture to 256 bits. | ||

| + | |||

| + | The LS unit contains a 44-entry load queue (LDQ) which receives load operations from dispatch through either of the two load AGUs in the EX unit and the linear address of the load computed there. A load op stays in the LDQ until the load completes or a fault occurs. Adding the AGQ depth of 28 entries, dispatch can issue up to 72 load operations at a time. A 48-entry store queue (STQ), up from 44 entries in Zen, receives store operations from dispatch, a linear address computed by any of the three AGUs, and store data from the integer or floating point execution units. A store op likewise stays in the STQ until the store is committed or a fault occurs. Loads and stores are speculative due to branch prediction. | ||

| + | |||

| + | Three largely independent pipelines can execute up to two 256-bit load operations and one 256-bit store per cycle. The load pipes translate linear to physical addresses in parallel with L1 data cache accesses. The LS unit can perform loads and stores out of | ||

| + | order. It supports loads bypassing older loads and loads bypassing older non-conflicting stores, observing architectural load and store ordering rules. Store-to-load forwarding is supported when an older store containing all of the load's bytes, with no particular alignment since the {{\\|Piledriver}} microarchitecture, is in the STQ and store data has been produced. Memory dependence prediction, to speculatively reorder loads and stores before the physical address has been determined, was introduced by AMD in the {{\\|Bulldozer}} microarchitecture. | ||
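
The containment condition for store-to-load forwarding can be written as a short predicate. This is a sketch of the rule as stated in the text only; the function and parameter names are hypothetical, and real hardware applies further conditions (ordering, memory type, address matching details) that are not modeled here.

```python
# Store-to-load forwarding eligibility as described above: the older store
# must cover every byte of the load and its data must already be available.

def can_forward(store_addr, store_size, load_addr, load_size, data_ready=True):
    covers = (store_addr <= load_addr
              and load_addr + load_size <= store_addr + store_size)
    return data_ready and covers


print(can_forward(0x1000, 8, 0x1002, 4))  # load lies inside the store
print(can_forward(0x1000, 4, 0x1002, 4))  # load extends past the store
```

The first case forwards regardless of the load's alignment within the store, while the second case must wait for the store to reach the cache because only part of the load's bytes are covered.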

| + | |||

| + | A two-level translation lookaside buffer (TLB) assists load and store address translation. The fully-associative L1 data TLB contains 64 entries and holds 4-Kbyte, 2-Mbyte, and 1-Gbyte page table entries. The L2 data TLB is a unified 12-way set-associative cache with 2048 entries, up from 1536 entries in Zen, holding 4-Kbyte and 2-Mbyte page table entries, as well as page directory entries (PDEs) to speed up DTLB and ITLB table walks. 1-Gbyte pages are <i>smashed</i> into 2-Mbyte entries but installed as 1-Gbyte entries when reloaded into the L1 TLB. | ||

| + | |||

| + | Two hardware page table walkers handle L2 TLB misses, presumably one serving the DTLB, another the ITLB. In addition to the PDE entries, the table walkers include a 64-entry page directory cache which holds page-map-level-4 and page-directory-pointer entries speeding up DTLB and ITLB walks. Like the Zen/Zen+ microarchitecture, Zen 2 supports page table entry (PTE) coalescing. When the table walker loads a PTE, which occupies 8 bytes in the x86-64 architecture, from memory it also examines the other PTEs in the same 64-byte cache line. If the four consecutive 4-Kbyte pages of a 16-Kbyte aligned block are also consecutive and 16-Kbyte aligned in physical address space and have identical page attributes, they are stored in a single TLB entry, greatly improving the efficiency of this cache. This is only done when the processor is operating in long mode. | ||
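
The coalescing condition can be expressed as a check over a group of four PTEs. This is a minimal sketch of the rule as described; the representation of a PTE as a `(physical_address, attributes)` tuple and the function name are assumptions made for illustration.

```python
# PTE coalescing check: four 4-Kbyte pages, 16-Kbyte aligned and contiguous
# in both virtual and physical address space, with identical attributes.

PAGE = 4096
GROUP = 4  # pages per coalesced TLB entry


def can_coalesce(vaddr, ptes):
    """ptes: four (phys_addr, attrs) tuples for the pages starting at vaddr."""
    span = PAGE * GROUP
    if len(ptes) != GROUP or vaddr % span != 0:
        return False
    base_pa, base_attrs = ptes[0]
    if base_pa % span != 0:
        return False
    return all(pa == base_pa + i * PAGE and attrs == base_attrs
               for i, (pa, attrs) in enumerate(ptes))


good = [(0x10000 + i * PAGE, "rw") for i in range(4)]
print(can_coalesce(0x4000, good))
```

Since a 64-byte cache line holds eight 8-byte PTEs, a single line fetched by the table walker covers two such candidate groups.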

| + | |||

| + | The LS unit relies on a 32 KiB, 8-way set associative, write-back, ECC-protected L1 data cache (DC). It supports two loads, if they access different DC banks, and one store per cycle, each up to 256 bits wide. The line width is 64 bytes, however cache stores are aligned to a 32-byte boundary. Loads spanning a 64-byte boundary and stores spanning a 32-byte boundary incur a penalty of one cycle. In the Zen/Zen+ microarchitecture 256-bit vectors are loaded and stored as two 128-bit halves; the load and store boundaries are 32 and 16 bytes respectively. Zen 2 can load and store 256-bit vectors in a single operation, but stores must be 32-byte aligned now to avoid the penalty. As in Zen aligned and unaligned load and store instructions (for example MOVUPS/MOVAPS) provide identical performance. | ||
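
The boundary rules above lend themselves to a quick check of whether an access pays the extra cycle. The sketch assumes the Zen 2 boundaries given in the text (64 bytes for loads, 32 bytes for stores); the helper names are hypothetical.

```python
# Does an access span an aligned boundary, and does it pay the one-cycle
# penalty described for the Zen 2 L1 data cache?

def crosses(addr, size, boundary):
    """True if [addr, addr + size) spans an aligned boundary."""
    return addr // boundary != (addr + size - 1) // boundary


def l1d_penalty_cycles(addr, size, is_store):
    boundary = 32 if is_store else 64  # Zen 2 values from the text
    return 1 if crosses(addr, size, boundary) else 0


print(l1d_penalty_cycles(0x20, 32, is_store=False))  # 32-byte load at 0x20
print(l1d_penalty_cycles(0x10, 32, is_store=True))   # 32-byte store at 0x10
```

A 256-bit load at offset 0x20 stays within one 64-byte line and is not penalized, whereas a 256-bit store at offset 0x10 straddles a 32-byte boundary and is.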

| + | |||

| + | The DC load-to-use latency is 4 cycles to the integer unit, 7 cycles to the FPU. The AGUs and LS unit are optimized for simple address generation modes: Base + displacement, base + index, and displacement-only. More complex modes and/or a non-zero segment base increase the latency by one cycle. | ||

| + | |||

| + | The L1 DC tags contain a linear-address-based microtag which allows loads to predict the way holding the requested data before the physical address has been determined, reducing power consumption and bank conflicts. A hardware prefetcher brings data into the L1 DC to reduce misses. The LS unit can track up to 22 in-flight cache misses, which are recorded in the miss address buffer (MAB). | ||

| + | |||

| + | The LS unit supports memory type range register (MTRR) and the page attribute table (PAT) extensions. Write-combining, if enabled for a memory range, merges multiple stores targeting locations within the address range of a write buffer to reduce memory bus utilization. Write-combining is also used for non-temporal store instructions such as MOVNTI. The LS unit can gather writes from 8 different 64-byte cache lines. | ||

| + | |||

| + | Each core benefits from a private 512 KiB, 8-way set associative, write-back, ECC-protected L2 cache. The line width is 64 bytes. The data path between the L1 data or instruction cache and the L2 cache is 32 bytes wide. The L2 cache has a variable load-to-use latency of no less than 12 cycles. Like the L1 cache it also has a hardware prefetcher. | ||

| + | |||

| + | == Core Complex == | ||

| + | Zen 2 organizes CPU cores in a core complex (CCX). A CCX comprises four cores sharing a 16 MiB, 16-way set associative, write-back, ECC protected, L3 cache. The L3 capacity doubled over the Zen/Zen+ microarchitecture. The cache is divided into four slices of 4 MiB capacity. Each core can access all slices with the same average load-to-use latency of 39 cycles, compared to 35 cycles in the previous generation. The Zen CCX is a flexible design allowing AMD to omit cores or cache slices in APUs and embedded processors. All Zen 2-based processors introduced as of late 2019 have the same CCX configuration, only the number of usable cores and L3 slices varies by processor model. | ||

| + | |||

| + | The width of a L3 cache line is 64 bytes. The data path between the L3 and L2 caches is 32 bytes wide. AMD did not disclose the size of miss buffers. Processors based on the Zen/Zen+ microarchitecture support 50 outstanding misses per core from L2 to L3, 96 from L3 to memory.<!-- EPYC Tech Day 2017, ISSCC 2018. --> | ||

| + | |||

| + | Each CPU core is supported by a private L2 cache. The L3 cache is a victim cache filled from L2 victims of all four cores and exclusive of L2 unless the data in the L3 cache is likely being accessed by multiple cores, or is requested by an instruction fetch (a non-inclusive hierarchy). | ||

| + | |||

| + | The L3 cache maintains shadow tags for all cache lines of each L2 cache in the CCX. This simplifies coupled fill/victim transactions between the L2 and L3 cache, and allows the L3 cache to act as a probe filter for requests between the L2 caches in the CCX, external probes and, taking advantage of its knowledge that a cache line shared by two or more L2 caches is exclusive to this CCX, probe traffic to the rest of the system. If a core misses in its L2 cache and the L3 cache, and the shadow tags indicate a hit in another L2 cache, a cache-to-cache transfer within the CCX is initiated. CCXs are not directly connected, even if they reside on the same die. Requests leaving the CCX pass through the scalable data fabric on the I/O die. | ||

| + | |||

| + | Zen 2 introduces the AMD64 Technology Platform Quality of Service Extensions which aim for compatibility with Intel Resource Director Technology, specifically CMT, MBM, CAT, and CDP.<!-- AMD did not advertise CDP support in Zen 2 but the feature is documented in AMD Publ #56375. --> An AMD-specific PQE-BW extension supports read bandwidth enforcement equivalent to Intel's MBA. The L3 cache is not a last level cache shared by all cores in a package as on Intel CPUs, so each CCX corresponds to one QoS domain. L2 QoS monitoring and enforcement is not supported. | ||

| + | |||

| + | Resources are tracked using a Resource Monitoring ID (RMID) which abstracts an application, thread, VM, or cgroup. For each logical processor only one RMID is active at a given time. CMT (Cache Monitoring Technology) allows an OS, hypervisor, or VMM to measure the amount of L3 cache occupied by a thread. MBM (Memory Bandwidth Monitoring) counts read requests to the rest of the system. CAT (Cache Allocation Technology) divides the L3 cache into a number of logical segments, possibly corresponding to ways, and allows system software to restrict a thread to an arbitrary, possibly empty, set of segments. It should be noted that still only a single copy of data is stored in the L3 cache if the data is accessed by threads with mutually exclusive sets. CDP (Code and Data Prioritization) extends CAT by differentiating between sets for code and data accesses. MBA (Memory Bandwidth Allocation) and PQE-BW (Platform Quality of Service Enforcement for Memory Bandwidth) limit the memory bandwidth a thread can consume within its QoS domain. Benefits of QoS include the ability to protect time critical processes from cache intensive background tasks, to reduce contention by scheduling threads according to their resource usage, and to mitigate interference from <i>noisy neighbors</i> in multitenant virtual machines. | ||
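
A bitmask is a natural way to picture the segment sets used by CAT. The sketch below is purely illustrative: the count of 16 segments is an assumption (one per L3 way, which the text only suggests as a possibility), and the function names do not correspond to any real register interface.

```python
# Cache allocation masks modeled as plain integers, one bit per L3 segment.
# NUM_SEGMENTS = 16 is an assumption, not a documented value.

NUM_SEGMENTS = 16


def allowed_segments(mask, num_segments=NUM_SEGMENTS):
    """List the segment indices a thread may allocate into."""
    return [i for i in range(num_segments) if (mask >> i) & 1]


def shared_segments(mask_a, mask_b):
    """Segments two threads compete for; 0 means their sets are disjoint."""
    return mask_a & mask_b


latency_critical = 0xFFF0  # hypothetical: 12 segments for the critical task
background = 0x000F        # hypothetical: 4 segments for background work
print(allowed_segments(background))
print(shared_segments(latency_critical, background))
```

With disjoint masks the background task cannot evict lines allocated by the latency-critical task, which is the isolation benefit the paragraph describes.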

| + | |||

| + | Rome, Castle Peak, and Matisse are multi-die designs combining an I/O die tailored for their market and between one and eight identical core complex dies (CCDs), each containing two independent core complexes, a system management unit (SMU), and a global memory interconnect version 2 (GMI2) interface. | ||

| + | |||

| + | The GMI2 interface extends the scalable data fabric from the I/O die to the CCDs, presumably a bi-directional 32-lane [[amd/infinity_fabric|IFOP]] link comparable to the die-to-die links in first and second generation EPYC and Threadripper processors. According to AMD the die-to-die bandwidth increased from 16 B read + 16 B write to 32 B read + 16 B write per fclk. | ||

| + | |||

| + | Inferring its function from earlier Family 17h processors the SMU is a microcontroller which captures temperatures, voltage and current levels, adjusts CPU core frequencies and voltages, and applies local limits in a fast local closed loop and a global loop with a master SMU on the I/O die. The SMUs communicate through the scalable control fabric, presumably including a dedicated single lane IFOP SerDes on each CCD. | ||

| + | |||

| + | == Rome == | ||

| + | {{amd|Rome|l=core}} is the codename for AMD's server chip based on the Zen 2 core. Like the prior generation ({{amd|Naples|l=core}}), Rome utilizes a [[chiplet]] multi-chip package design. Each chip comprises nine [[dies]]: one centralized I/O die and eight compute dies. The compute dies are fabricated on [[TSMC]]'s [[7 nm process]] in order to take advantage of the lower power and [[transistor density|higher density]]. The I/O die, on the other hand, uses [[GlobalFoundries]]' mature [[14 nm process]]. | ||

| + | |||

| + | The centralized I/O die incorporates eight {{amd|Infinity Fabric}} links, 128 [[PCIe]] Gen 4 lanes, and eight [[DDR4]] memory channels. The full capabilities of the I/O die have not been disclosed yet. Attached to the I/O die are eight compute dies, each with eight Zen 2 cores, for a total of 64 cores and 128 threads per chip. | ||
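The core and thread totals above follow directly from the chiplet counts. As a minimal sketch, the Python below captures that arithmetic; the class and field names are hypothetical, and the 32 MiB of L3 per CCD is taken from the 2 × 16 MiB figure in the die section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChipletPackage:
    """Simple model of a Zen 2 multi-die package (illustrative names)."""
    ccds: int                  # number of core complex dies attached to the I/O die
    cores_per_ccd: int = 8     # two core complexes of four cores each
    threads_per_core: int = 2  # SMT, matching 64 cores / 128 threads
    l3_mib_per_ccd: int = 32   # 2 x 16 MiB, per the die section

    @property
    def cores(self) -> int:
        return self.ccds * self.cores_per_ccd

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core

    @property
    def l3_mib(self) -> int:
        return self.ccds * self.l3_mib_per_ccd


rome = ChipletPackage(ccds=8)
print(rome.cores, rome.threads, rome.l3_mib)
```

For the full eight-CCD configuration this yields the 64 cores and 128 threads stated in the text; smaller `ccds` values model packages with fewer compute dies.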

| + | |||

| + | == Die == | ||

| + | === Zen 2 CPU core === | ||

| + | * TSMC [[N7|7-nanometer process]] | ||

| + | * 13 metal layers<ref name="isscc2020j-zen2">Singh, Teja; Rangarajan, Sundar; John, Deepesh; Schreiber, Russell; Oliver, Spence; Seahra, Rajit; Schaefer, Alex (2020). <i>2.1 Zen 2: The AMD 7nm Energy-Efficient High-Performance x86-64 Microprocessor Core</i>. 2020 IEEE International Solid-State Circuits Conference. pp. 42-44. doi:[https://doi.org/10.1109/ISSCC19947.2020.9063113 10.1109/ISSCC19947.2020.9063113]</ref> | ||

| + | * 475,000,000 transistors incl. 512 KiB L2 cache and one 4 MiB L3 cache slice<ref name="isscc2020j-zen2"/> | ||

| + | * Core size incl. L2 cache and one L3 cache slice: 7.83 mm²<ref name="isscc2020j-zen2"/> | ||

| + | * Core size incl. L2 cache: 3.64 mm² (estimated) | ||

| + | |||

| + | === Core Complex Die === | ||

| + | * TSMC [[N7|7-nanometer process]] | ||

| + | * 13 metal layers<ref name="isscc2020j-zen2"/> | ||

| + | * 3,800,000,000 transistors<ref name="isscc2020p-chiplet">Naffziger, Samuel. <i>[https://www.slideshare.net/AMD/amd-chiplet-architecture-for-highperformance-server-and-desktop-products AMD Chiplet Architecture for High-Performance Server and Desktop Products]</i>. IEEE ISSCC 2020, February 17, 2020.</ref> | ||

| + | * Die size: 74 mm²<ref name="isscc2020p-chiplet"/><ref name="isscc2020j-chiplet">Naffziger, Samuel; Lepak, Kevin; Paraschou, Milam; Subramony, Mahesh (2020). <i>2.2 AMD Chiplet Architecture for High-Performance Server and Desktop Products</i>. 2020 IEEE International Solid-State Circuits Conference. pp. 44-45. doi:[https://doi.org/10.1109/ISSCC19947.2020.9063103 10.1109/ISSCC19947.2020.9063103]</ref> | ||

| + | * CCX size: 31.3 mm²<ref name="e3-2019-nhg"><i>Next Horizon Gaming</i>. Electronic Entertainment Expo 2019 (E3 2019), June 10, 2019.</ref><ref name="isscc2020p-zen2">Singh, Teja; Rangarajan, Sundar; John, Deepesh; Schreiber, Russell; Oliver, Spence; Seahra, Rajit; Schaefer, Alex. <i>[https://www.slideshare.net/AMD/zen-2-the-amd-7nm-energyefficient-highperformance-x8664-microprocessor-core Zen 2: The AMD 7nm Energy-Efficient High-Performance x86-64 Microprocessor Core]</i>. IEEE ISSCC 2020, February 17, 2020.</ref> | ||

| + | * 2 × 16 MiB L3 cache: 2 × 16.8 mm² (estimated) | ||

| + | |||

| + | :[[File:AMD_Zen_2_CCD.jpg|500px]] | ||

| + | |||

| + | === Client I/O Die === | ||

| + | * GlobalFoundries [[14_nm_lithography_process#GlobalFoundries|12-nanometer process]] | ||

| + | * 2,090,000,000 transistors<ref name="isscc2020p-chiplet"/><ref name="isscc2020j-chiplet"/> | ||

| + | * 125 mm² die size<ref name="isscc2020p-chiplet"/><ref name="isscc2020j-chiplet"/> | ||

| + | * Reused as AMD X570 chipset | ||

| + | |||

| + | === Server I/O Die === | ||

| + | * GlobalFoundries [[14_nm_lithography_process#GlobalFoundries|12-nanometer process]] | ||

| + | * 8,340,000,000 transistors<ref name="isscc2020p-chiplet"/><ref name="isscc2020j-chiplet"/> | ||

| + | * 416 mm² die size<ref name="isscc2020p-chiplet"/><ref name="isscc2020j-chiplet"/> | ||

| + | |||

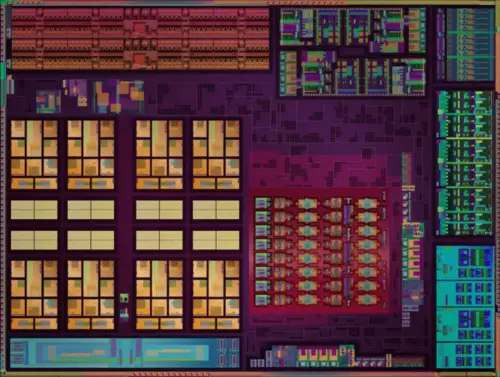

| + | === Renoir Die === | ||

| + | * TSMC [[N7|7-nanometer process]] | ||

| + | * 13 metal layers<ref name="hc32-renoir">Arora, Sonu; Bouvier, Dan; Weaver, Chris. <i>[https://www.hotchips.org/archives AMD Next Generation 7nm Ryzen™ 4000 APU "Renoir"]</i>. Hot Chips 32, August 17, 2020.</ref> | ||

| + | * 9,800,000,000 transistors<ref name="hc32-renoir"/> | ||

| + | * 156 mm² die size<ref name="hc32-renoir"/> | ||
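The transistor counts and die sizes listed in the die sections above can be combined into average transistor densities. The Python sketch below does this; the dictionary layout and function name are illustrative, and the result is a whole-die average rather than a density for any particular block.

```python
# (transistor count, die area in mm²) as listed in the die sections above
DIES = {
    "Core Complex Die": (3_800_000_000, 74.0),
    "Client I/O Die": (2_090_000_000, 125.0),
    "Server I/O Die": (8_340_000_000, 416.0),
    "Renoir": (9_800_000_000, 156.0),
}


def density_mtr_per_mm2(transistors, area_mm2):
    """Average density in millions of transistors per mm²."""
    return transistors / area_mm2 / 1e6


for name, (transistors, area) in DIES.items():
    print(f"{name}: {density_mtr_per_mm2(transistors, area):.1f} MTr/mm²")
```

The gap between the 7 nm dies and the GlobalFoundries I/O dies reflects both the process node and the analog-heavy content of the I/O dies.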

| + | |||

| + | :[[File:renoir die.png|500px]] | ||

| + | |||

| + | == Designers == | ||

| + | * David Suggs, Zen 2 core chief architect<ref name="mm2020-zen2">Suggs, David; Subramony, Mahesh; Bouvier, Dan (2020). <i>The AMD "Zen 2" Processor</i>. IEEE Micro. 40 (4): 45-52. doi:[https://doi.org/10.1109/MM.2020.2974217 10.1109/MM.2020.2974217]</ref> | ||

| + | * Mahesh Subramony, "Matisse" SoC architect<ref name="mm2020-zen2"/> | ||

== Bibliography == | == Bibliography == | ||

| Line 59: | Line 709: | ||

* AMD GCC 9 znver2 enablement [https://gcc.gnu.org/ml/gcc-patches/2018-10/msg01982.html patch] | * AMD GCC 9 znver2 enablement [https://gcc.gnu.org/ml/gcc-patches/2018-10/msg01982.html patch] | ||

* AMD 'Next Horizon', November 6, 2018 | * AMD 'Next Horizon', November 6, 2018 | ||

| + | * AMD. Lisa Su ''Keynote''. May 26, 2019 | ||

| + | |||

| + | == References == | ||

| + | <references/> | ||

| + | |||

| + | == See also == | ||

| + | :; [[AMD]] • [[Zen]] • [[Ryzen]] • [[EPYC]] | ||

| + | |||

| + | {| border="0" cellpadding="2" width="100%" | ||

| + | |- | ||

| + | |width="35%" valign="top" align="left"| | ||

| + | {{amd zen core see also}} | ||

| + | |width="35%" valign="top" align="left"| | ||

| + | {{amd zen+ core see also}} | ||

| + | |width="30%" valign="top" align="left"| | ||

| + | {{amd zen 2 core see also}} | ||

| + | |} | ||

| + | ---- | ||

| + | {| border="0" cellpadding="2" width="100%" | ||

| + | |- | ||

| + | |width="35%" valign="top" align="left"| | ||

| + | {{amd zen 3 core see also}} | ||

| + | |width="35%" valign="top" align="left"| | ||

| + | {{amd zen 4 core see also}} | ||

| + | |width="30%" valign="top" align="left"| | ||

| + | . | ||

| + | |} | ||

| + | |||

| + | * [[Intel]] • {{intel|Ice Lake|l=arch}} | ||

| − | + | [[Category:amd]] | |

| − | |||

Latest revision as of 05:14, 1 September 2025


| Zen 2 µarch | |

| General Info | |

| Arch Type | CPU |

| Designer | AMD |

| Manufacturer | TSMC, GlobalFoundries |

| Introduction | July 2019 |

| Process | 7 nm, 12 nm, 14 nm |

| Core Configs | 4, 6, 8, 12, 16, 24, 32, 64 |

| Pipeline | |

| Type | Superscalar |

| OoOE | Yes |

| Speculative | Yes |

| Reg Renaming | Yes |

| Stages | 19 |

| Decode | 4-way |

| Instructions | |

| ISA | x86-64 |

| Extensions | MOVBE, MMX, SSE, SSE2, SSE3, SSSE3, SSE4A, SSE4.1, SSE4.2, POPCNT, AVX, AVX2, AES, PCLMUL, FSGSBASE, RDRND, FMA3, F16C, BMI, BMI2, RDSEED, ADCX, PREFETCHW, CLFLUSHOPT, XSAVE, SHA, UMIP, CLZERO |

| Cores | |

| Core Names | Rome (Server), Castle Peak (HEDT), Matisse (Desktop), Renoir (APU/Mobile), Lucienne (APU/Mobile) |

| Succession | |

Zen 2 is AMD's successor to Zen+, and is a 7 nm process microarchitecture for mainstream mobile, desktops, workstations, and servers. Zen 2 was replaced by Zen 3.

For performance desktop and mobile computing, Zen is branded as Athlon, Ryzen 3, Ryzen 5, Ryzen 7, Ryzen 9, and Ryzen Threadripper processors. For servers, Zen is branded as EPYC.


History

Zen 2 succeeded Zen in 2019. In February 2017, Lisa Su, AMD's CEO, announced a roadmap that included Zen 2 and, later, Zen 3. At Investor's Day in May 2017, Jim Anderson, AMD's Senior Vice President, confirmed that Zen 2 would use a 7 nm process. Initial details of Zen 2 and Rome were unveiled during AMD's Next Horizon event on November 6, 2018.

Codenames

Product Codenames:

| Core | Series | Cores/Threads | Target |

|---|---|---|---|

| Rome | EPYC 7002 | Up to 64/128 | High-end server multiprocessors |

| Castle Peak | Ryzen Threadripper 3000 | Up to 64/128 | Workstation & Enthusiasts market processors |

| Matisse | Ryzen 3000 | Up to 16/32 | Mainstream to high-end desktops & Enthusiasts market processors |

| Renoir | Ryzen 4000 | Up to 8/16 | Mainstream APUs with Vega GPUs |

| Lucienne | Ryzen 5000 | Up to 8/16 | Mainstream APUs with Vega GPUs |

| Mendocino | Ryzen 7000, Athlon | Up to 4/8 | Mainstream Mobile processors |

Architectural Codenames:

| Arch | Codename |

|---|---|

| Core | Valhalla |

| CCD | Aspen Highlands |

Brands

AMD Zen-based processor brands

| Segment | Family | General Description | Cores | Unlocked | AVX2 | SMT | IGP | ECC | MP |
|---|---|---|---|---|---|---|---|---|---|
| Mainstream | Ryzen 3 | Entry level Performance | Quad | ✔ | ✔ | ✔/✘ | ✔/✘ ¹ | ✔/✘ ² | ✘ |
| Mainstream | Ryzen 5 | Mid-range Performance | Hexa | ✔ | ✔ | ✔/✘ | ✔/✘ ¹ | ✔/✘ ² | ✘ |
| Mainstream | Ryzen 7 | High-end Performance | Octa | ✔ | ✔ | ✔/✘ | ✔/✘ ¹ | ✔/✘ ² | ✘ |
| Mainstream | Ryzen 9 | High-end Performance | 12-16 (Desktop), Octa (Mobile) | ✔ | ✔ | ✔ | ✔/✘ ¹ | ✔/✘ ² | ✘ |
| Enthusiasts / Workstations | Ryzen Threadripper | Enthusiasts | 24-64 | ✔ | ✔ | ✔ | ✘ | ✔ | ✘ |
| Servers | EPYC | High-performance Server Processor | 8-64 | ✘ | ✔ | ✔ | ✘ | ✔ | ✔ |
| Embedded / Edge | EPYC Embedded | Embedded / Edge Server Processor | 8-64 | ✘ | ✔ | ✔ | ✘ | ✔ | ✘ |
| Embedded / Edge | Ryzen Embedded | Embedded APUs | 6-8 | ✘ | ✔ | ✔ | ✔ | ✔ | ✘ |

¹ Only available on G, GE, H, HS, HX and U SKUs.

² ECC support is unavailable on AMD APUs.

Process technology

Zen 2 comprises multiple different components:

- The Core Complex Die (CCD) is fabricated on TSMC N7 (7 nm HPC process)

- The client I/O Die (cIOD) is fabricated on GlobalFoundries 12LP (12 nm process)

- The server I/O Die (sIOD) is fabricated on GlobalFoundries 14LPP (14 nm process)

Comparison

| Core | Zen | Zen+ | Zen 2 | Zen 3 | Zen 3+ | Zen 4 | Zen 4c | Zen 5 | Zen 5c | Zen 6 | Zen 6c | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Codename | Core | Valhalla | Cerberus | Persephone | Dionysus | Nirvana | Prometheus | Morpheus | Monarch | |||
| CCD | Aspen Highlands | Brecken Ridge | Durango | Vindhya | Eldora | |||||||
| Cores (threads) | CCD | 8 (16) | 8 (16) | 8 (16) | 16 (32) | 8 (16) | 16 (32) | |||||
| CCX | 4 (8) | 8 (16) | 8 (16) | 8 (16) | 8 (16) | |||||||
| L3 cache | CCD | 32 MB | 32 MB | 32 MB | 32 MB | 32 MB | 32 MB | |||||
| CCX | 16 MB | 32 MB | 32 MB | 16 MB | 32 MB | |||||||
| Die size | CCD area | 44 mm² | 74 mm² | 80.7 mm² | 66.3 mm² | 72.7 mm² | 70.6 mm² | |||||
| Core area (Fab node) | 7 mm² (14 nm) | (12 nm) | 2.83 mm² (7 nm) | 3.24 mm² (7 nm) | (7 nm) | 3.84 mm² (5 nm) | 2.48 mm² (5 nm) | (4 nm) | (3 nm) | (2 nm) | (2 nm) | |

Compiler support

| Compiler | Arch-Specific | Arch-Favorable |
|---|---|---|
| GCC | -march=znver2 | -mtune=znver2 |
| LLVM | -march=znver2 | -mtune=znver2 |

- Note: Initial support in GCC 9 and LLVM 9.
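As a small sketch of how a build script might apply the table and note above, the Python helper below returns the Zen 2 flag for a given compiler and rejects versions older than the initial-support releases. The function name, the `tune_only` parameter, and the `main.c` file name are hypothetical.

```python
# Minimum major versions with znver2 support, per the note above.
MIN_MAJOR = {"gcc": 9, "llvm": 9}


def znver2_flags(compiler, major_version, tune_only=False):
    """Return the Zen 2 flag list for a compiler, or raise if unsupported.

    tune_only=True selects -mtune (schedule for Zen 2 but keep the baseline
    instruction set); otherwise -march is returned (generate Zen 2 code).
    """
    key = compiler.lower()
    if key not in MIN_MAJOR:
        raise ValueError(f"unknown compiler: {compiler}")
    if major_version < MIN_MAJOR[key]:
        raise ValueError(f"{compiler} {major_version} predates znver2 support")
    return ["-mtune=znver2"] if tune_only else ["-march=znver2"]


# Hypothetical command line for a source file named main.c
command = ["gcc", "-O2", *znver2_flags("gcc", 9), "main.c"]
print(" ".join(command))
```

Binaries built with `-march=znver2` may use instructions that older processors lack, so `-mtune=znver2` is the safer choice when the same binary must also run elsewhere.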

Architecture

Zen 2 inherits most of the design from Zen+ but improves the instruction stream bandwidth and floating-point throughput performance.

Key changes from Zen+

New instructions

Zen 2 introduced a number of new x86 instructions:

Furthermore, Zen 2 supports the User-Mode Instruction Prevention (UMIP) extension.

Memory Hierarchy


All Zen 2 Chips

| List of all Zen-based Processors | |||||||||||||||||||||||||