(codenames from client article) |

(→Individual Core) |


| (109 intermediate revisions by 19 users not shown) | |||

| Line 26: | Line 26: | ||

|stages min=14 | |stages min=14 | ||

|stages max=19 | |stages max=19 | ||

| − | |isa | + | |isa=x86-64 |

| − | |||

| − | |||

|extension=MOVBE | |extension=MOVBE | ||

|extension 2=MMX | |extension 2=MMX | ||

| Line 73: | Line 71: | ||

|l3 desc=11-way set associative | |l3 desc=11-way set associative | ||

|core name=Skylake X | |core name=Skylake X | ||

| − | |core name 2=Skylake SP | + | |core name 2=Skylake W |

| + | |core name 3=Skylake SP | ||

|predecessor=Broadwell | |predecessor=Broadwell | ||

|predecessor link=intel/microarchitectures/broadwell | |predecessor link=intel/microarchitectures/broadwell | ||

| + | |successor=Cascade Lake | ||

| + | |successor link=intel/microarchitectures/cascade lake | ||

| + | |contemporary=Skylake (client) | ||

| + | |contemporary link=intel/microarchitectures/skylake (client) | ||

|pipeline=Yes | |pipeline=Yes | ||

|OoOE=Yes | |OoOE=Yes | ||

| Line 91: | Line 94: | ||

{| class="wikitable" | {| class="wikitable" | ||

|- | |- | ||

| − | ! Core !! Abbrev !! Target | + | ! Core !! Abbrev !! Platform !! Target |

|- | |- | ||

| − | | {{intel|Skylake | + | | {{intel|Skylake SP|l=core}} || SKL-SP || {{intel|Purley|l=platform}} || Server Scalable Processors |

|- | |- | ||

| − | | {{intel|Skylake SP|l=core}} || | + | | {{intel|Skylake X|l=core}} || SKL-X || {{intel|Basin Falls|l=platform}} || High-end desktops & enthusiasts market |

| + | |- | ||

| + | | {{intel|Skylake W|l=core}} || SKL-W || {{intel|Basin Falls|l=platform}} || Enterprise/Business workstations | ||

| + | |- | ||

| + | | {{intel|Skylake DE|l=core}} || SKL-DE || || Dense server/edge computing | ||

| + | |} | ||

| + | |||

| + | == Brands == | ||

| + | {{see also|intel/microarchitectures/skylake_(client)|l1=Client Skylake's Brands}} | ||

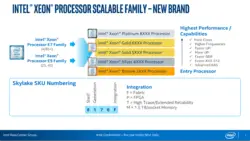

| + | [[File:xeon scalable family decode.png|thumb|right|250px|New Xeon branding]] | ||

| + | Intel introduced a number of new server chip families with the introduction of {{intel|Skylake SP|l=core}} as well as a new enthusiasts family with the introduction of {{intel|Skylake X|l=core}}. | ||

| + | |||

| + | {| class="wikitable tc4 tc5 tc6 tc7 tc8" style="text-align: center;" | ||

| + | |- | ||

| + | ! rowspan="2" | Logo !! rowspan="2" | Family !! rowspan="2" | General Description !! colspan="7" | Differentiating Features | ||

| + | |- | ||

| + | ! Cores !! {{intel|Hyper-Threading|HT}} !! {{x86|AVX}} !! {{x86|AVX2}} !! {{x86|AVX-512}} !! {{intel|Turbo Boost|TBT}} !! [[ECC]] | ||

| + | |- | ||

| + | | [[File:core i7 logo (2015).png|50px|link=intel/core_i7]] || {{intel|Core i7}} || style="text-align: left;" | Enthusiasts/High Performance ({{intel|Skylake X|X|l=core}}) || [[6 cores|6]] - [[8 cores|8]] || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|no}} | ||

| + | |- | ||

| + | | [[File:core i9x logo.png|50px|link=intel/core_i9]] || {{intel|Core i9}} || style="text-align: left;" | Enthusiasts/High Performance || [[10 cores|10]] - [[18 cores|18]] || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || {{tchk|no}} | ||

| + | |- | ||

| + | ! rowspan="2" | Logo !! rowspan="2" | Family !! rowspan="2" | General Description !! colspan="7" | Differentiating Features | ||

| + | |- | ||

| + | ! Cores !! {{intel|Hyper-Threading|HT}} !! {{intel|Turbo Boost|TBT}} !! {{x86|AVX-512}} !! AVX-512 Units !! {{intel|Ultra Path Interconnect|UPI}} links !! Scalability | ||

| + | |- | ||

| + | | [[File:xeon logo (2015).png|50px|link=intel/xeon d]] || {{intel|Xeon D}} || style="text-align: left;" | Dense servers / edge computing || [[4 cores|4]]-[[18 cores|18]] || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || 1 || colspan="2" | {{tchk|no}} | ||

| + | |- | ||

| + | | [[File:xeon logo (2015).png|50px|link=intel/xeon w]] || {{intel|Xeon W}} || style="text-align: left;" | Business workstations || [[4 cores|4]]-[[18 cores|18]] || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || 2 || colspan="2" | {{tchk|no}} | ||

| + | |- | ||

| + | | [[File:xeon bronze (2017).png|50px]] || {{intel|Xeon Bronze}} || style="text-align: left;" | Entry-level performance / <br>Cost-sensitive || [[6 cores|6]] - [[8 cores|8]] || {{tchk|no}} || {{tchk|no}} || {{tchk|yes}} || 1 || 2 || Up to 2 | ||

| + | |- | ||

| + | | [[File:xeon silver (2017).png|50px]] || {{intel|Xeon Silver}} || style="text-align: left;" | Mid-range performance / <br>Efficient lower power || [[4 cores|4]] - [[12 cores|12]] || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || 1 || 2 || Up to 2 | ||

| + | |- | ||

| + | | rowspan="2" | [[File:xeon gold (2017).png|50px]] || {{intel|Xeon Gold}} 5000 || style="text-align: left;" | High performance || [[4 cores|4]] - [[14 cores|14]] || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || 1 || 2 || Up to 4 | ||

| + | |- | ||

| + | | {{intel|Xeon Gold}} 6000 || style="text-align: left;" | Higher performance || [[6 cores|6]] - [[22 cores|22]] || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || 2 || 3 || Up to 4 | ||

| + | |- | ||

| + | | [[File:xeon platinum (2017).png|50px]] || {{intel|Xeon Platinum}} || style="text-align: left;" | Highest performance / flexibility || [[4 cores|4]] - [[28 cores|28]] || {{tchk|yes}} || {{tchk|yes}} || {{tchk|yes}} || 2 || 3 || Up to 8 | ||

| + | |} | ||

| + | |||

| + | == Release Dates == | ||

| + | Skylake-based {{intel|Core X}} was introduced in May 2017 while {{intel|Skylake SP|l=core}} was introduced in July 2017. | ||

| + | |||

| + | == Process Technology == | ||

| + | {{main|14 nm lithography process}} | ||

| + | Unlike mainstream Skylake models, all Skylake server configuration models are fabricated on Intel's [[14 nm process#Intel|enhanced 14+ nm process]], which is also used by {{\\|Kaby Lake}}. | ||

| + | |||

| + | == Compatibility == | ||

| + | {| class="wikitable" | ||

| + | ! Vendor !! OS !! Version !! Notes | ||

| + | |- | ||

| + | | rowspan="5" | Microsoft || rowspan="5" | Windows || style="background-color: #d6ffd8;" | Windows Server 2008 || rowspan="5" | Support | ||

| + | |- | ||

| + | | style="background-color: #d6ffd8;" | Windows Server 2008 R2 | ||

| + | |- | ||

| + | | style="background-color: #d6ffd8;" | Windows Server 2012 | ||

| + | |- | ||

| + | | style="background-color: #d6ffd8;" | Windows Server 2012 R2 | ||

| + | |- | ||

| + | | style="background-color: #d6ffd8;" | Windows Server 2016 | ||

| + | |- | ||

| + | | Linux || Linux || style="background-color: #d6ffd8;" | Kernel 3.19 || Initial Support (MPX support) | ||

| + | |- | ||

| + | | Apple || macOS || style="background-color: #d6ffd8;" | 10.12.3 || iMac Pro | ||

| + | |} | ||

| + | |||

| + | == Compiler support == | ||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! Compiler !! Arch-Specific !! Arch-Favorable | ||

| + | |- | ||

| + | | [[ICC]] || <code>-march=skylake-avx512</code> || <code>-mtune=skylake-avx512</code> | ||

| + | |- | ||

| + | | [[GCC]] || <code>-march=skylake-avx512</code> || <code>-mtune=skylake-avx512</code> | ||

| + | |- | ||

| + | | [[LLVM]] || <code>-march=skylake-avx512</code> || <code>-mtune=skylake-avx512</code> | ||

| + | |- | ||

| + | | [[Visual Studio]] || <code>/arch:AVX2</code> || <code>/tune:skylake</code> | ||

|} | |} | ||

| + | |||

| + | === CPUID === | ||

| + | {| class="wikitable tc1 tc2 tc3 tc4" | ||

| + | ! Core !! Extended<br>Family !! Family !! Extended<br>Model !! Model | ||

| + | |- | ||

| + | | rowspan="2" | {{intel|Skylake X|X|l=core}}, {{intel|Skylake SP|SP|l=core}}, {{intel|Skylake DE|DE|l=core}}, {{intel|Skylake W|W|l=core}} || 0 || 0x6 || 0x5 || 0x5 | ||

| + | |- | ||

| + | | colspan="4" | Family 6 Model 85 | ||

| + | |} | ||
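The table splits the signature into the raw CPUID fields; the "Family 6 Model 85" figure comes from combining them. The sketch below shows that combination for a CPUID leaf 1 EAX value, following the display-family/display-model rules in Intel's documentation. The EAX value is assembled from the table's fields with an arbitrary stepping of 4, so treat it as illustrative rather than as a specific part's signature.

```python
def decode_cpuid_signature(eax: int) -> dict:
    """Split a CPUID leaf 1 EAX value into stepping, display family and display model."""
    stepping = eax & 0xF
    model = (eax >> 4) & 0xF
    family = (eax >> 8) & 0xF
    ext_model = (eax >> 16) & 0xF
    ext_family = (eax >> 20) & 0xFF

    # The extended family is only added when the base family is 0xF.
    display_family = family + ext_family if family == 0xF else family
    # The extended model supplies the high nibble for families 0x6 and 0xF.
    if family in (0x6, 0xF):
        display_model = (ext_model << 4) | model
    else:
        display_model = model
    return {"stepping": stepping, "family": display_family, "model": display_model}


# Hypothetical EAX value built from the table above; the stepping (4) is arbitrary.
eax = (0x0 << 20) | (0x5 << 16) | (0x6 << 8) | (0x5 << 4) | 0x4
print(hex(eax), decode_cpuid_signature(eax))
# 0x50654 {'stepping': 4, 'family': 6, 'model': 85}
```

Extended model 0x5 and model 0x5 concatenate to 0x55, which is the decimal 85 shown in the table.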

| + | |||

| + | == Architecture == | ||

| + | Skylake server configuration introduces a number of significant changes from both Intel's previous microarchitecture, {{\\|Broadwell}}, and the {{\\|Skylake (client)}} architecture. Unlike client models, Skylake server and HEDT models still incorporate the fully integrated voltage regulator (FIVR) on-die. These chips also have an entirely new multi-core system architecture that replaces the [[ring topology]] with a new {{intel|mesh interconnect}} network. | ||

| + | |||

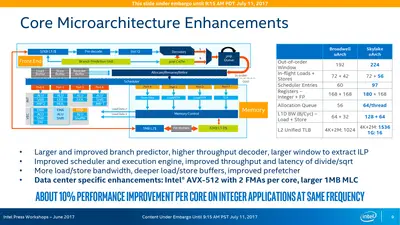

| + | === Key changes from {{\\|Broadwell}} === | ||

| + | [[File:skylake sp buffer windows.png|right|400px]] | ||

| + | * Improved "14 nm+" process (see {{\\|kaby_lake#Process_Technology|Kaby Lake § Process Technology}}) | ||

| + | * {{intel|Omni-Path Architecture}} (OPA) | ||

| + | * {{intel|Mesh architecture}} (from {{intel|Ring architecture|ring}}) | ||

| + | ** {{intel|Sub-NUMA Clustering}} (SNC) support (replaces the {{intel|Cluster-on-Die}} (COD) implementation) | ||

| + | * Chipset | ||

| + | ** {{intel|Wellsburg|l=chipset}} → {{intel|Lewisburg|l=chipset}} | ||

| + | ** Bus/Interface to Chipset | ||

| + | *** {{intel|Direct Media Interface|DMI 3.0}} (from 2.0) | ||

| + | **** Increase in transfer rate from 5.0 GT/s to 8.0 GT/s per lane (~3.93 GB/s, up from 2 GB/s, for the x4 link) | ||

| + | **** Limits motherboard trace length from the CPU to the chipset to 7 inches max (down from 8) | ||

| + | ** DMI upgraded to Gen3 | ||

| + | * Core | ||

| + | ** All the changes from Skylake Client (For full list, see {{\\|Skylake (Client)#Key changes from Broadwell|Skylake (Client) § Key changes from Broadwell}}) | ||

| + | ** Front End | ||

| + | *** LSD is disabled (likely due to a bug; see [[#Front-end|§ Front-end]] for details) | ||

| + | ** Back-end | ||

| + | *** Port 4 now performs 512b stores (from 256b) | ||

| + | *** Port 0 & Port 1 can now be fused to perform AVX-512 | ||

| + | *** Port 5 now can do full 512b operations (not on all models) | ||

| + | ** Memory Subsystem | ||

| + | *** Larger store buffer (56 entries, up from 42) | ||

| + | *** Page split load penalty reduced 20-fold | ||

| + | *** Larger Write-back buffer | ||

| + | *** Store is now 64B/cycle (from 32B/cycle) | ||

| + | *** Load is now 2x64B/cycle (from 2x32B/cycle) | ||

| + | *** New Features | ||

| + | **** Adaptive Double Device Data Correction (ADDDC) | ||
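The DMI figures above follow from the transfer rate, the line coding, and the four lanes of the link. The sketch below reproduces them under one stated assumption: that DMI 2.0 uses 8b/10b coding and DMI 3.0 uses 128b/130b, as PCIe 2.0 and 3.0 do.

```python
def link_bandwidth_gb_s(gt_per_s: float, payload_bits: int, coded_bits: int, lanes: int) -> float:
    """One-direction payload bandwidth in GB/s of a serial link with line coding."""
    payload_bits_per_s = gt_per_s * 1e9 * payload_bits / coded_bits * lanes
    return payload_bits_per_s / 8 / 1e9


# Assumption: DMI 2.0 uses 8b/10b coding and DMI 3.0 uses 128b/130b, like PCIe 2.0/3.0.
dmi2 = link_bandwidth_gb_s(5.0, 8, 10, lanes=4)
dmi3 = link_bandwidth_gb_s(8.0, 128, 130, lanes=4)
print(f"DMI 2.0 x4: {dmi2:.2f} GB/s, DMI 3.0 x4: {dmi3:.2f} GB/s")
# DMI 2.0 x4: 2.00 GB/s, DMI 3.0 x4: 3.94 GB/s
```

The exact DMI 3.0 result is about 3.938 GB/s, so the "~3.93 GB/s" in the list is a truncated form of the same number. The jump comes from both the higher transfer rate and the much lower coding overhead (about 1.5% for 128b/130b versus 20% for 8b/10b).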

| + | |||

| + | * Memory | ||

| + | ** L2$ | ||

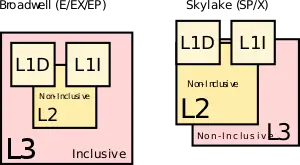

| + | *** Increased to 1 MiB/core (from 256 KiB/core) | ||

| + | *** Latency increased from 12 to 14 cycles | ||

| + | ** L3$ | ||

| + | *** Reduced to 1.375 MiB/core (from 2.5 MiB/core) | ||

| + | *** Now non-inclusive (was inclusive) | ||

| + | ** DRAM | ||

| + | *** hex-channel DDR4-2666 (from quad-channel) | ||

| + | |||

| + | * TLBs | ||

| + | ** ITLB | ||

| + | *** 4 KiB page translations were changed from 4-way to 8-way associative | ||

| + | ** STLB | ||

| + | *** 4 KiB + 2 MiB page translations were changed from 6-way to 12-way associative | ||

| + | ** DMI/PEG are now on a discrete clock domain with BCLK sitting on its own domain with full-range granularity (1 MHz intervals) | ||

| + | * Testability | ||

| + | ** New support for {{intel|Direct Connect Interface}} (DCI), a new debugging transport protocol designed to allow debugging of closed cases (e.g. laptops, embedded) by accessing things such as [[JTAG]] through any [[USB 3]] port. | ||

| + | |||

| + | ==== CPU changes ==== | ||

| + | See {{\\|Skylake (Client)#CPU changes|Skylake (Client) § CPU changes}} | ||

| + | |||

| + | ==== New instructions ==== | ||

| + | {{see also|intel/microarchitectures/skylake_(client)#New instructions|l1=Client Skylake's New instructions}} | ||

| + | Skylake server introduced a number of {{x86|extensions|new instructions}}: | ||

| + | |||

| + | * {{x86|MPX|<code>MPX</code>}} - Memory Protection Extensions | ||

| + | * {{x86|XSAVEC|<code>XSAVEC</code>}} - Save processor extended states with compaction to memory | ||

| + | * {{x86|XSAVES|<code>XSAVES</code>}} - Save processor supervisor-mode extended states to memory. | ||

| + | * {{x86|CLFLUSHOPT|<code>CLFLUSHOPT</code>}} - Flushes and invalidates the cache line containing the memory operand from every level of the cache hierarchy (L1, L2, L3, etc.) | ||

| + | * {{x86|AVX-512|<code>AVX-512</code>}}, specifically: | ||

| + | ** {{x86|AVX512F|<code>AVX512F</code>}} - AVX-512 Foundation | ||

| + | ** {{x86|AVX512CD|<code>AVX512CD</code>}} - AVX-512 Conflict Detection | ||

| + | ** {{x86|AVX512BW|<code>AVX512BW</code>}} - AVX-512 Byte and Word | ||

| + | ** {{x86|AVX512DQ|<code>AVX512DQ</code>}} - AVX-512 Doubleword and Quadword | ||

| + | ** {{x86|AVX512VL|<code>AVX512VL</code>}} - AVX-512 Vector Length | ||

| + | * {{x86|PKU|<code>PKU</code>}} - Memory Protection Keys for Userspace | ||

| + | * {{x86|PCOMMIT|<code>PCOMMIT</code>}} - PCOMMIT instruction | ||

| + | * {{x86|CLWB|<code>CLWB</code>}} - Force cache line write-back without flush | ||
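On Linux, the AVX-512 subsets listed above appear as individual CPU flags, which makes it easy to check whether a machine exposes the full Skylake server set before running code built with <code>-march=skylake-avx512</code>. The sketch below assumes the lowercase flag names the Linux kernel reports in <code>/proc/cpuinfo</code> (<code>avx512f</code>, <code>avx512cd</code>, and so on); the helper names are hypothetical.

```python
import os

# Linux /proc/cpuinfo flag names for the AVX-512 subsets listed above.
SKYLAKE_SERVER_AVX512 = ("avx512f", "avx512cd", "avx512bw", "avx512dq", "avx512vl")


def missing_avx512_subsets(flags_line: str) -> list:
    """Return the Skylake server AVX-512 subsets absent from a space-separated flags string."""
    flags = set(flags_line.split())
    return [name for name in SKYLAKE_SERVER_AVX512 if name not in flags]


def read_cpu_flags(path: str = "/proc/cpuinfo") -> str:
    """Return the flags of the first CPU listed in a Linux cpuinfo file ('' if none)."""
    with open(path) as fh:
        for line in fh:
            if line.startswith("flags"):
                return line.split(":", 1)[1]
    return ""


# Only attempt the live check where a Linux-style cpuinfo file exists.
if os.path.exists("/proc/cpuinfo"):
    missing = missing_avx512_subsets(read_cpu_flags())
    print("all present" if not missing else f"missing: {', '.join(missing)}")
```

Checking the subsets individually matters because later cores add further AVX-512 subsets while earlier many-core parts implement a different mix, so the presence of <code>avx512f</code> alone does not guarantee the Skylake server set.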

| + | |||

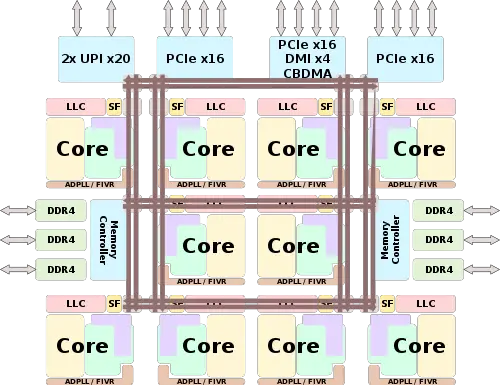

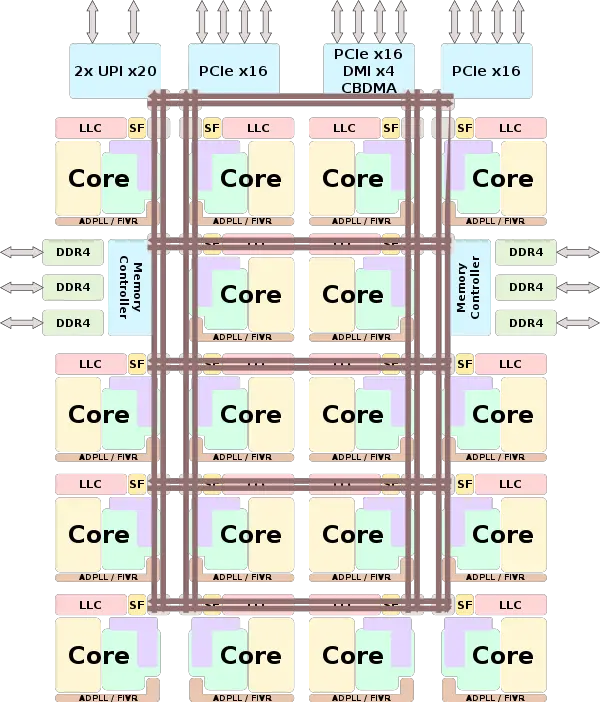

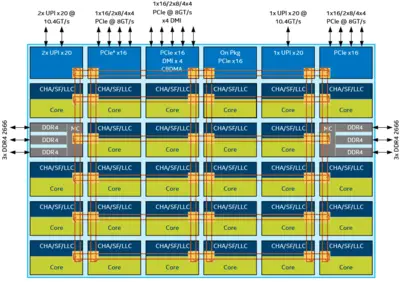

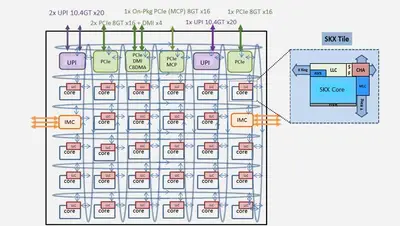

| + | === Block Diagram === | ||

| + | ==== Entire SoC Overview ==== | ||

| + | ===== LCC SoC ===== | ||

| + | :[[File:skylake sp lcc block diagram.svg|500px]] | ||

| + | ===== HCC SoC ===== | ||

| + | :[[File:skylake sp hcc block diagram.svg|600px]] | ||

| + | ===== XCC SoC ===== | ||

| + | :[[File:skylake sp xcc block diagram.svg|800px]] | ||

| + | ===== Individual Core ===== | ||

| + | :[[File:skylake server block diagram.svg|850px]] | ||

| + | |||

| + | === Memory Hierarchy === | ||

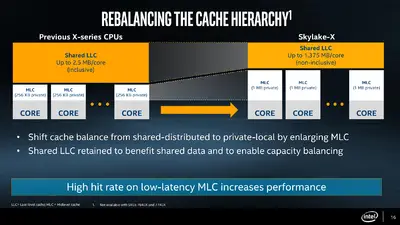

| + | [[File:skylake x memory changes.png|right|400px]] | ||

| + | Some major organizational changes were made to the cache hierarchy in the Skylake server configuration vs {{\\|Broadwell}}/{{\\|Haswell}}. The memory hierarchy for Skylake's server and HEDT processors has been rebalanced. Note that the L3 is now non-inclusive and some of the SRAM from the L3 cache was moved into the private L2 cache. | ||

| + | |||

| + | * Cache | ||

| + | ** L0 µOP cache: | ||

| + | *** 1,536 µOPs/core, 8-way set associative | ||

| + | **** 32 sets, 6-µOP line size | ||

| + | **** statically divided between threads, inclusive with L1I | ||

| + | ** L1I Cache: | ||

| + | *** 32 [[KiB]]/core, 8-way set associative | ||

| + | **** 64 sets, 64 B line size | ||

| + | **** competitively shared by the threads/core | ||

| + | ** L1D Cache: | ||

| + | *** 32 KiB/core, 8-way set associative | ||

| + | *** 64 sets, 64 B line size | ||

| + | *** competitively shared by threads/core | ||

| + | *** 4 cycles for fastest load-to-use (simple pointer accesses) | ||

| + | **** 5 cycles for complex addresses | ||

| + | *** 128 B/cycle load bandwidth | ||

| + | *** 64 B/cycle store bandwidth | ||

| + | *** Write-back policy | ||

| + | ** L2 Cache: | ||

| + | *** 1 MiB/core, 16-way set associative | ||

| + | *** 64 B line size | ||

| + | *** Inclusive | ||

| + | *** 64 B/cycle bandwidth to L1$ | ||

| + | *** Write-back policy | ||

| + | *** 14 cycles latency | ||

| + | ** L3 Cache: | ||

| + | *** 1.375 MiB/core, 11-way set associative, shared across all cores | ||

| + | **** Note that a few models have non-default cache sizes due to disabled cores | ||

| + | *** 2,048 sets, 64 B line size | ||

| + | *** Non-inclusive victim cache | ||

| + | *** Write-back policy | ||

| + | *** 50-70 cycles latency | ||

| + | ** Snoop Filter (SF): | ||

| + | *** 2,048 sets, 12-way set associative | ||

| + | * DRAM | ||

| + | ** 6 channels of DDR4, up to 2666 MT/s | ||

| + | *** RDIMM and LRDIMM | ||

| + | *** bandwidth of 21.33 GB/s per channel | ||

| + | *** aggregated bandwidth of 128 GB/s | ||
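Several figures in the list above are linked by simple arithmetic: a set-associative cache holds sets × ways × line size bytes, and peak DRAM bandwidth is the transfer rate × 8 bytes per 64-bit channel × the number of channels. The sketch below checks the listed numbers against each other. It assumes DDR4-2666 transfers at 2666⅔ MT/s and a 64-bit (8-byte) data bus per channel, ignoring ECC bits.

```python
KIB = 1024
MIB = 1024 * KIB


def cache_capacity(sets: int, ways: int, line_bytes: int = 64) -> int:
    """Capacity in bytes of a set-associative cache: sets x ways x line size."""
    return sets * ways * line_bytes


def cache_sets(capacity: int, ways: int, line_bytes: int = 64) -> int:
    """Number of sets implied by a capacity, associativity and line size."""
    return capacity // (ways * line_bytes)


def dram_bandwidth_gb_s(mt_per_s: float, channels: int, bus_bytes: int = 8) -> float:
    """Peak DRAM bandwidth in GB/s: transfers/s x bytes per transfer x channels."""
    return mt_per_s * 1e6 * bus_bytes * channels / 1e9


l1d = cache_capacity(sets=64, ways=8)           # 32 KiB
l3_slice = cache_capacity(sets=2048, ways=11)   # 1.375 MiB per core
l2_sets = cache_sets(1 * MIB, ways=16)          # 1024 sets
uop_cache = 32 * 8 * 6                          # sets x ways x uOPs per line

ddr4_2666 = 8000 / 3   # assumption: DDR4-2666 runs at 2666 2/3 MT/s
per_channel = dram_bandwidth_gb_s(ddr4_2666, channels=1)
per_socket = dram_bandwidth_gb_s(ddr4_2666, channels=6)
print(l1d // KIB, l3_slice / MIB, l2_sets, uop_cache)
print(f"{per_channel:.2f} GB/s per channel, {per_socket:.1f} GB/s per socket")
# 32 1.375 1024 1536
# 21.33 GB/s per channel, 128.0 GB/s per socket
```

The 11-way, 2,048-set L3 slice is exactly 1.375 MiB, which is why the per-core L3 size is not a power of two.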

| + | |||

| + | Skylake's TLB hierarchy consists of a dedicated L1 TLB for the instruction cache (ITLB) and another one for the data cache (DTLB). Additionally, there is a unified L2 TLB (STLB). | ||

| + | * TLBs: | ||

| + | ** ITLB | ||

| + | *** 4 KiB page translations: | ||

| + | **** 128 entries; 8-way set associative | ||

| + | **** dynamic partitioning | ||

| + | *** 2 MiB / 4 MiB page translations: | ||

| + | **** 8 entries per thread; fully associative | ||

| + | **** Duplicated for each thread | ||

| + | ** DTLB | ||

| + | *** 4 KiB page translations: | ||

| + | **** 64 entries; 4-way set associative | ||

| + | **** fixed partition | ||

| + | *** 2 MiB / 4 MiB page translations: | ||

| + | **** 32 entries; 4-way set associative | ||

| + | **** fixed partition | ||

| + | *** 1 GiB page translations: | ||

| + | **** 4 entries; 4-way set associative | ||

| + | **** fixed partition | ||

| + | ** STLB | ||

| + | *** 4 KiB + 2 MiB page translations: | ||

| + | **** 1536 entries; 12-way set associative. (Note: the STLB is incorrectly reported as "6-way" by CPUID leaf 2 (EAX=02H). Skylake erratum SKL148 recommends that software simply ignore that value.) | ||

| + | **** fixed partition | ||

| + | *** 1 GiB page translations: | ||

| + | **** 16 entries; 4-way set associative | ||

| + | **** fixed partition | ||
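A useful way to read the TLB sizes above is as "reach": the amount of address space that can be translated without a TLB miss, which is entries × page size. The sketch below computes it for a few of the listed structures. Because the STLB's 1,536 entries are shared between 4 KiB and 2 MiB translations, the two STLB figures are alternative upper bounds rather than values that add up, and the sketch ignores the per-thread partitioning noted above.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def tlb_reach(entries: int, page_bytes: int) -> int:
    """Bytes of address space a TLB can map without missing (entries x page size)."""
    return entries * page_bytes


reach = {
    "DTLB, 4 KiB pages": tlb_reach(64, 4 * KIB),
    "STLB, all 4 KiB pages": tlb_reach(1536, 4 * KIB),
    "STLB, all 2 MiB pages": tlb_reach(1536, 2 * MIB),
    "STLB, 1 GiB pages": tlb_reach(16, GIB),
}
for name, size in reach.items():
    print(f"{name}: {size / MIB:g} MiB")
# DTLB, 4 KiB pages: 0.25 MiB
# STLB, all 4 KiB pages: 6 MiB
# STLB, all 2 MiB pages: 3072 MiB
# STLB, 1 GiB pages: 16384 MiB
```

The gap between 6 MiB and 3 GiB of STLB reach is why large pages can matter for workloads with big working sets.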

== Overview == | == Overview == | ||

| − | [[File:skylake | + | [[File:skylake server overview.svg|right|550px]] |

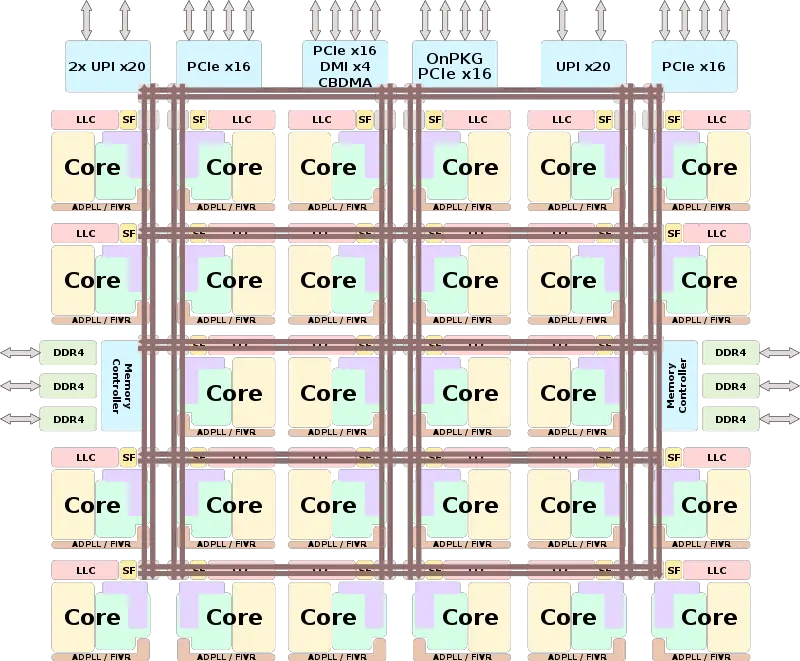

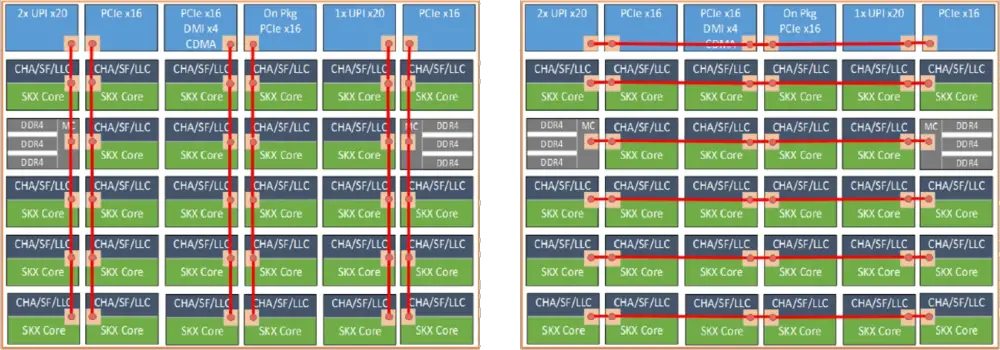

| − | Skylake- | + | The Skylake server architecture marks a significant departure from the previous decade of multi-core system architecture at Intel. Since {{\\|Westmere (server)|Westmere}}, Intel had been using a {{intel|ring bus interconnect}} to link multiple cores together. As Intel continued to add more I/O, increase memory bandwidth, and add more cores, all of which increased data traffic, that architecture started to show its weaknesses. With the Skylake server architecture, the interconnect was entirely re-architected as a 2-dimensional {{intel|mesh interconnect}}. |

| + | |||

| + | A superset model is shown on the right. Skylake-based servers are the first mainstream servers to make use of Intel's new {{intel|mesh interconnect}} architecture, an architecture that was previously explored, experimented with, and enhanced with Intel's {{intel|Phi}} [[many-core processors]]. In this configuration, the cores, caches, and memory controllers are organized in rows and columns, each with dedicated connections running through each of the rows and columns, allowing for the shortest path between any two tiles, reducing latency and improving bandwidth. These processors are offered from [[4 cores]] up to [[28 cores]] with 8 to 56 threads. In addition to the system-level architectural changes, with Skylake Intel now has a separate core architecture for these chips, which incorporates a plethora of new technologies and features, including support for the new {{x86|AVX-512}} instruction set extension. | ||
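To see why a 2-dimensional mesh scales better than a ring, it helps to count hops. The sketch below is a toy model only: it assumes uniform traffic between distinct stops, one hop per link, a single bidirectional ring, and shortest (Manhattan-distance) paths on a grid. It is not a model of Intel's actual ring or mesh routing, and the 16-stop, 4×4 configuration is chosen for illustration rather than taken from a Skylake die.

```python
from itertools import product


def ring_avg_hops(stops: int) -> float:
    """Mean shortest-path hop count between distinct stops on a bidirectional ring."""
    total = 0
    for a in range(stops):
        for b in range(stops):
            d = abs(a - b)
            total += min(d, stops - d)  # travel whichever way round is shorter
    return total / (stops * (stops - 1))


def mesh_avg_hops(rows: int, cols: int) -> float:
    """Mean shortest-path (Manhattan) hop count between distinct tiles of a 2-D mesh."""
    tiles = list(product(range(rows), range(cols)))
    total = sum(abs(r1 - r2) + abs(c1 - c2)
                for (r1, c1), (r2, c2) in product(tiles, repeat=2))
    n = len(tiles)
    return total / (n * (n - 1))


print(f"16-stop ring: {ring_avg_hops(16):.2f} hops, 4x4 mesh: {mesh_avg_hops(4, 4):.2f} hops")
# 16-stop ring: 4.27 hops, 4x4 mesh: 2.67 hops
```

In this model the average ring distance grows roughly in proportion to the number of stops, while the mesh distance grows with the side length of the grid, so the gap widens as more cores are added.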

| + | |||

| + | All models incorporate 6 channels of DDR4 supporting up to 12 DIMMs for a total of 768 GiB (extended models support 1.5 TiB). For I/O, all models incorporate 48 (3x16) lanes of PCIe 3.0. An additional x4 PCIe 3.0 link is reserved exclusively for DMI to the {{intel|Lewisburg|l=chipset}} (LBG) chipset. Select models, specifically those with the ''F'' suffix, have an {{intel|Omni-Path}} Host Fabric Interface (HFI) on-package (see [[#Integrated_Omni-Path|Integrated Omni-Path]]). | ||
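The memory and I/O figures in the paragraph above imply a few per-slot numbers. The sketch below derives them, assuming every one of the 12 slots is populated with identical DIMMs. The per-DIMM sizes are derived here, not quoted from Intel.

```python
CHANNELS = 6
MAX_DIMMS = 12
PCIE_X16_LINKS = 3

dimms_per_channel = MAX_DIMMS // CHANNELS   # two DIMMs per channel
dimm_gib_standard = 768 // MAX_DIMMS        # DIMM size for the 768 GiB total
dimm_gib_extended = 1536 // MAX_DIMMS       # DIMM size for the 1.5 TiB total
pcie_lanes = PCIE_X16_LINKS * 16            # general-purpose PCIe 3.0 lanes

print(dimms_per_channel, dimm_gib_standard, dimm_gib_extended, pcie_lanes)
# 2 64 128 48
```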

| + | |||

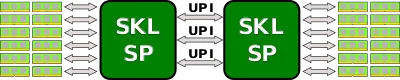

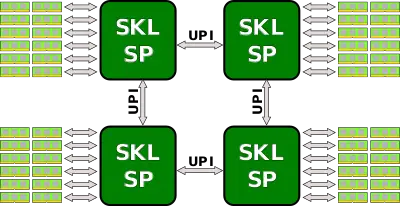

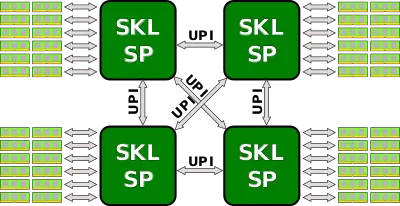

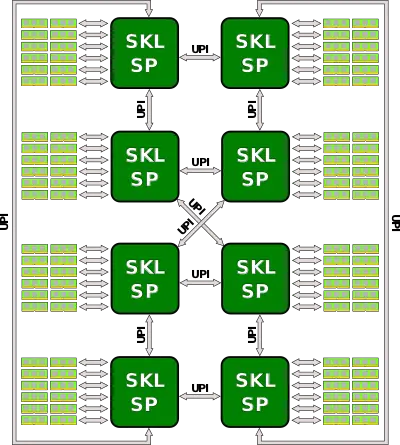

| + | Skylake processors are designed for scalability, supporting 2-way, 4-way, and 8-way multiprocessing through Intel's new {{intel|Ultra Path Interconnect}} (UPI) links, with two to three links being offered (see [[#Scalability|§ Scalability]]). High-end models have node controller support, allowing for even higher-way configurations (e.g., 32-way multiprocessing). | ||

| + | |||

| + | == Core == | ||

| + | === Overview === | ||

| + | Skylake shares most of its development vectors with its predecessor while introducing one new constraint. The overall goals were: | ||

| + | * Performance improvements - the traditional way of milking more performance by increasing the instructions per cycle as well as clock frequency. | ||

| + | * Power efficiency - reduction of power for all functional blocks | ||

| + | * Security enhancements - new security features are implemented in hardware in the core | ||

| + | * Configurability | ||

| + | ==== Configurability ==== | ||

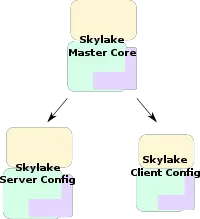

| + | [[File:skylake master core configs.svg|right|200px]] | ||

| + | Intel has been experiencing a growing divergence in functionality over the last several iterations of [[intel/microarchitectures|their microarchitecture]] between their mainstream consumer products and their high-end HPC/server models. Traditionally, Intel has used the exact same core design for everything from their lowest-end value models (e.g. {{intel|Celeron}}) all the way up to the highest-performance enterprise models (e.g. {{intel|Xeon E7}}). While the two have fundamentally different chip architectures, they use the exact same CPU core architecture as the building block. | ||

| + | |||

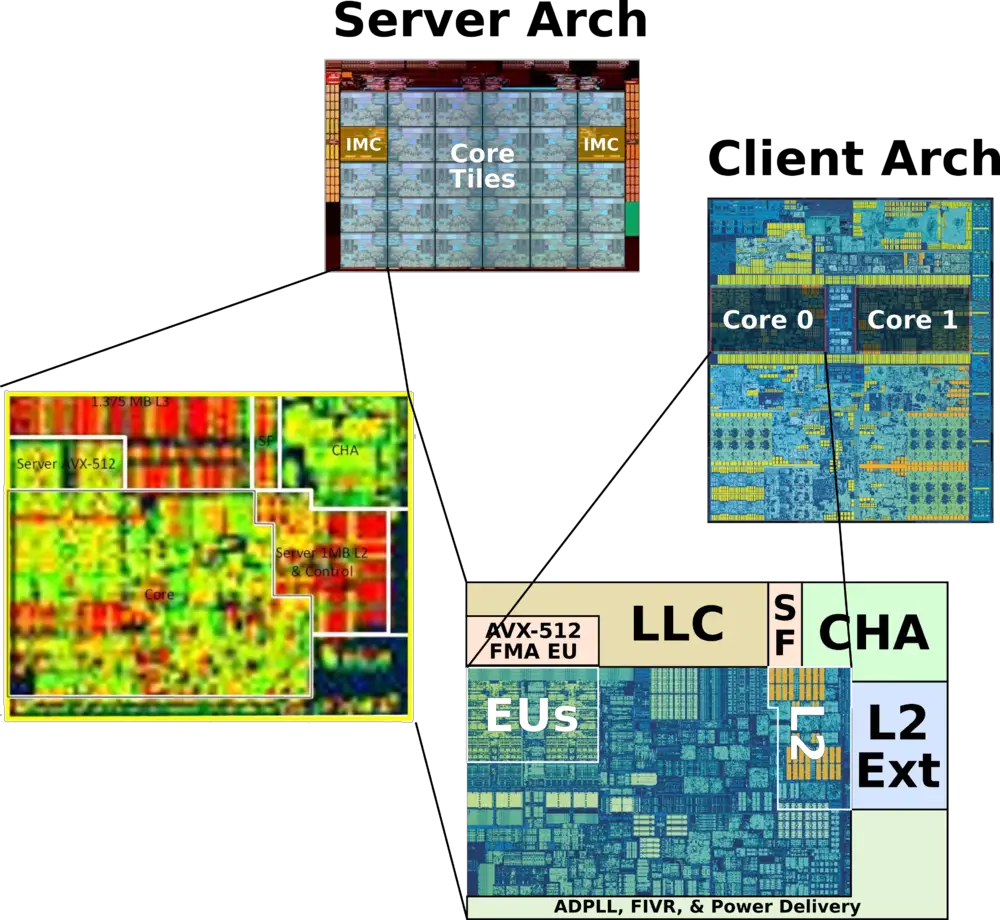

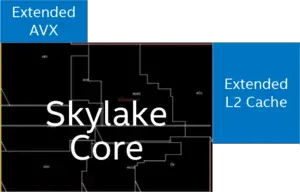

| + | This design philosophy has changed with Skylake. In order to better accommodate the different functionalities of each segment without sacrificing features or making unnecessary compromises, Intel went with a configurable core. The Skylake core is a single development project that produces a master superset core. The project yields two derivatives: one for servers (the substance of this article) and {{\\|skylake (client)|one for clients}}. All mainstream models (from {{intel|Celeron}}/{{intel|Pentium (2009)|Pentium}} all the way up to {{intel|Core i7}}/{{intel|Xeon E3}}) use {{\\|skylake (client)|the client core configuration}}. Server models (e.g. {{intel|Xeon Gold}}/{{intel|Xeon Platinum}}) use the new server configuration instead. | ||

| + | |||

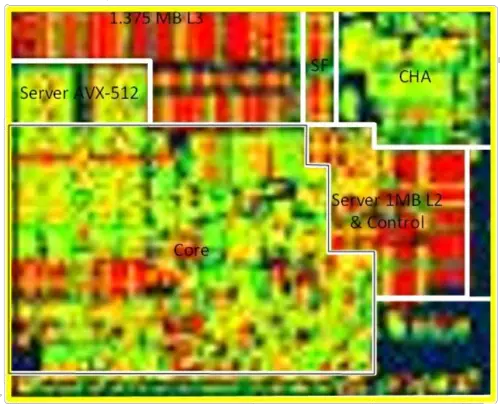

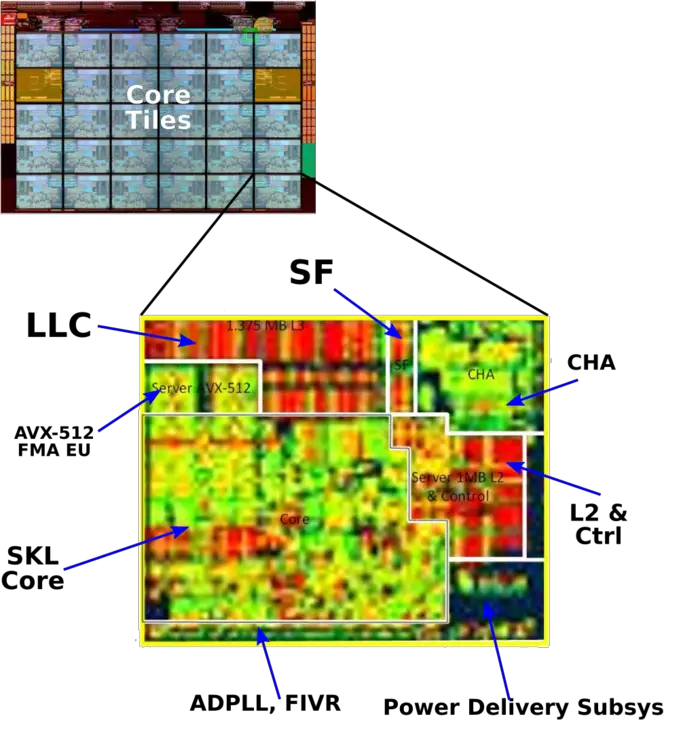

| + | The server core is considerably larger than the client one, featuring [[Advanced Vector Extensions 512]] (AVX-512). Skylake servers support what was formerly called AVX3.2 (AVX512F + AVX512CD + AVX512BW + AVX512DQ + AVX512VL). The server core also incorporates a number of new technologies not found in the client configuration. In addition to the execution units that were added, the cache hierarchy has changed for the server core as well, incorporating a large L2 and a portion of the LLC as well as the caching and home agent and the snoop filter that needs to accommodate the new cache changes. | ||

| + | |||

| + | Below is a visual that helps show how the server core evolved from the client core. | ||

| − | + | :[[File:skylake sp mesh core tile zoom with client shown.png|1000px]] | |

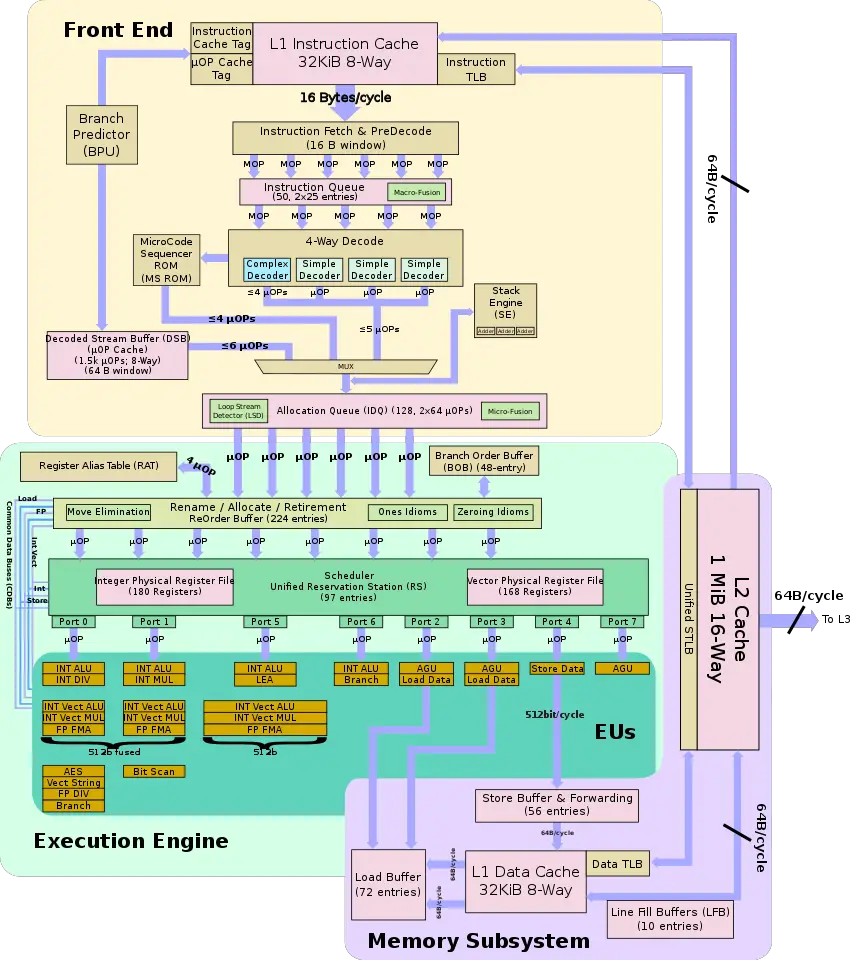

| − | Skylake | + | === Pipeline === |

| + | The Skylake core focuses on extracting performance and reducing power in a number of key ways. Intel builds Skylake on previous microarchitectures, descendants of {{\\|Sandy Bridge}}. To increase overall performance, Intel focused on extracting additional [[instruction parallelism|parallelism]] in the core. | ||

| − | = | + | ==== Front-end ==== |

| − | + | For the most part, with the exception of the LSD, the front-end of the Skylake server core is identical to the client configuration. For in-depth detail of the Skylake front-end see {{\\|skylake_(client)#Front-end|Skylake (client) § Front-end}}. | |

| − | |||

| − | === Front-end === | ||

| − | |||

| − | === Execution engine === | + | The only major difference in the front-end from the client core configuration is that the Loop Stream Detector (LSD) has been disabled. While the exact reason is not known, it might be related to a severe issue that [https://lists.debian.org/debian-devel/2017/06/msg00308.html was experienced by] the OCaml development team. The issue [https://lists.debian.org/debian-devel/2017/06/msg00308.html was patched via microcode] on the client platform; however, this change suggests that the LSD may have been disabled there as well. The exact implications of this are unknown. |

| + | |||

| + | ==== Execution engine ==== | ||

| + | The Skylake server configuration core back-end is identical to the client configuration up to the scheduler. For in-depth detail of the Skylake back-end up to that point, see {{\\|skylake_(client)#Execution engine|Skylake (client) § Execution engine}}. | ||

| + | |||

| + | ===== Scheduler & 512-SIMD addition ===== | ||

[[File:skylake scheduler server.svg|right|500px]] | [[File:skylake scheduler server.svg|right|500px]] | ||

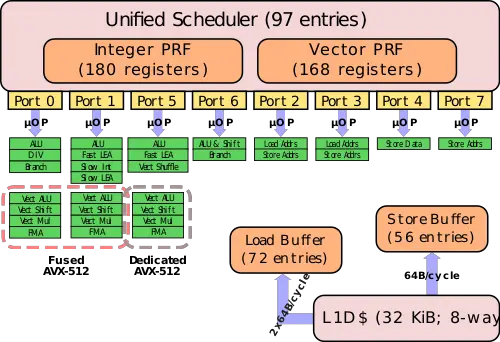

| − | + | The scheduler itself was enlarged by roughly 50%, with up to 97 entries (from 64 in {{\\|Broadwell}}) competitively shared between the two threads. Skylake continues with a unified design; this is in contrast to designs such as [[AMD]]'s {{amd|Zen|l=arch}}, which uses a split design with each scheduler holding different types of µOPs. The scheduler includes the two register files for integers and vectors. It is in those [[register files]] that output operand data is stored. In Skylake, the [[integer]] [[register file]] was also slightly enlarged from 160 entries to 180. |

| − | + | [[File:skylake sp added cach and vpu.png|left|300px]] | |

| + | This is the first implementation to incorporate {{x86|AVX-512}}, a 512-bit [[SIMD]] [[x86]] instruction set extension. AVX-512 operations can take place on every port. For 512-bit wide FMA SIMD operations, Intel introduced two different mechanisms: | ||

| − | In the {{intel|Xeon Gold|high-end}} and {{intel|Xeon Platinum|highest}} performance Xeons, Intel added a second dedicated AVX-512 unit in addition to the fused Port0-1 operations described above. The dedicated unit is situated on Port 5. | + | In the simple implementation, used in the {{intel|Xeon Bronze|entry-level}} and {{intel|Xeon Silver|mid-range}} Xeon servers, AVX-512 fuses Port 0 and Port 1 to form a 512-bit FMA unit. Since each of those two ports is 256 bits wide, an AVX-512 operation that is dispatched by the scheduler to port 0 executes on both ports. Note that unrelated operations can still execute in parallel. For example, an AVX-512 operation and an integer ALU operation may execute in parallel: the AVX-512 operation is dispatched on port 0 and also uses the AVX unit on port 1, while the integer ALU operation executes independently on port 1. |

| + | |||

| + | In the {{intel|Xeon Gold|high-end}} and {{intel|Xeon Platinum|highest}} performance Xeons, Intel added a second dedicated 512-bit wide AVX-512 FMA unit in addition to the fused Port0-1 operations described above. The dedicated unit is situated on Port 5. | ||
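The practical difference between the one-unit and two-unit configurations shows up in theoretical peak throughput. The sketch below computes peak floating-point operations per cycle per core, counting each FMA as a multiply plus an add. It is an upper bound only: it ignores port conflicts, memory bandwidth, and any clock-frequency differences under heavy AVX-512 load.

```python
def peak_flops_per_cycle(fma_units: int, element_bits: int, vector_bits: int = 512) -> int:
    """Peak floating-point operations per cycle per core from 512-bit FMA units."""
    lanes = vector_bits // element_bits   # elements per vector register
    return fma_units * lanes * 2          # each FMA counts as one multiply plus one add


for units in (1, 2):
    print(f"{units} FMA unit(s): {peak_flops_per_cycle(units, 64)} FP64, "
          f"{peak_flops_per_cycle(units, 32)} FP32 FLOPs/cycle")
# 1 FMA unit(s): 16 FP64, 32 FP32 FLOPs/cycle
# 2 FMA unit(s): 32 FP64, 64 FP32 FLOPs/cycle
```

The second unit on Port 5 doubles the per-core peak, which is why the {{intel|Xeon Gold}} 6000 and {{intel|Xeon Platinum}} parts are listed with 2 AVX-512 units in the brands table.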

| − | |||

Physically, Intel added 768 KiB L2 cache and the second AVX-512 VPU externally to the core. | Physically, Intel added 768 KiB L2 cache and the second AVX-512 VPU externally to the core. | ||

| − | [[ | + | {{clear}} |

| + | ====== Scheduler Ports & Execution Units ====== | ||

| + | <table class="wikitable"> | ||

| + | <tr><th colspan="2">Scheduler Ports Designation</th></tr> | ||

| + | <tr><th rowspan="5">Port 0</th><td>Integer/Vector Arithmetic, Multiplication, Logic, Shift, and String ops</td><td rowspan="7">512-bit Vect ALU/Shift/Mul/FMA</td></tr> | ||

| + | <tr><td>[[FP]] Add, [[Multiply]], [[FMA]]</td></tr> | ||

| + | <tr><td>Integer/FP Division and [[Square Root]]</td></tr> | ||

| + | <tr><td>[[AES]] Encryption</td></tr> | ||

| + | <tr><td>Branch2</td></tr> | ||

| + | <tr><th rowspan="2">Port 1</th><td>Integer/Vector Arithmetic, Multiplication, Logic, Shift, and Bit Scanning</td></tr> | ||

| + | <tr><td>[[FP]] Add, [[Multiply]], [[FMA]]</td></tr> | ||

| + | <tr><th rowspan="3">Port 5</th><td>Integer/Vector Arithmetic, Logic</td><td rowspan="3">512-bit Vect ALU/Shift/Mul/FMA</td></tr> | ||

| + | <tr><td>Vector Permute</td></tr> | ||

| + | <tr><td>[[x87]] FP Add, Composite Int, CLMUL</td></tr> | ||

| + | <tr><th rowspan="2">Port 6</th><td>Integer Arithmetic, Logic, Shift</td></tr> | ||

| + | <tr><td>Branch</td></tr> | ||

| + | <tr><th>Port 2</th><td>Load, AGU</td></tr> | ||

| + | <tr><th>Port 3</th><td>Load, AGU</td></tr> | ||

| + | <tr><th>Port 4</th><td>Store, AGU</td></tr> | ||

| + | <tr><th>Port 7</th><td>AGU</td></tr> | ||

| + | </table> | ||

| + | |||

| + | {| class="wikitable collapsible collapsed" | ||

| + | |- | ||

| + | ! colspan="3" | Execution Units | ||

| + | |- | ||

| + | ! Execution Unit !! # of Units !! Instructions | ||

| + | |- | ||

| + | | ALU || 4 || add, and, cmp, or, test, xor, movzx, movsx, mov, (v)movdqu, (v)movdqa, (v)movap*, (v)movup* | ||

| + | |- | ||

| + | | DIV || 1 || divp*, divs*, vdiv*, sqrt*, vsqrt*, rcp*, vrcp*, rsqrt*, idiv | ||

| + | |- | ||

| + | | Shift || 2 || sal, shl, rol, adc, sarx, adcx, adox, etc... | ||

| + | |- | ||

| + | | Shuffle || 1 || (v)shufp*, vperm*, (v)pack*, (v)unpck*, (v)punpck*, (v)pshuf*, (v)pslldq, (v)alignr, (v)pmovzx*, vbroadcast*, (v)psrldq, (v)pblendw | ||

| + | |- | ||

| + | | Slow Int || 1 || mul, imul, bsr, rcl, shld, mulx, pdep, etc... | ||

| + | |- | ||

| + | | Bit Manipulation || 2 || andn, bextr, blsi, blsmsk, bzhi, etc | ||

| + | |- | ||

| + | | FP Mov || 1 || (v)movsd/ss, (v)movd gpr | ||

| + | |- | ||

| + | | SIMD Misc || 1 || STTNI, (v)pclmulqdq, (v)psadw, vector shift count in xmm | ||

| + | |- | ||

| + | | Vec ALU || 3 || (v)pand, (v)por, (v)pxor, (v)movq, (v)movap*, (v)movup*, (v)andp*, (v)orp*, (v)paddb/w/d/q, (v)blendv*, (v)blendp*, (v)pblendd | ||

| + | |- | ||

| + | | Vec Shift || 2 || (v)psllv*, (v)psrlv*, vector shift count in imm8 | ||

| + | |- | ||

| + | | Vec Add || 2 || (v)addp*, (v)cmpp*, (v)max*, (v)min*, (v)padds*, (v)paddus*, (v)psign, (v)pabs, (v)pavgb, (v)pcmpeq*, (v)pmax, (v)cvtps2dq, (v)cvtdq2ps, (v)cvtsd2si, (v)cvtss2si | ||

| + | |- | ||

| + | | Vec Mul || 2 || (v)mul*, (v)pmul*, (v)pmadd* | ||

| + | |- | ||

| + | |colspan="3" | This table was taken verbatim from the Intel manual. Execution unit mappings for {{x86|MMX|MMX instructions}} are not included. | ||

| + | |} | ||

| − | === Memory subsystem === | + | ==== Memory subsystem ==== |

[[File:skylake-sp memory.svg|right|300px]] | [[File:skylake-sp memory.svg|right|300px]] | ||

| + | Skylake's memory subsystem is in charge of load and store requests and their ordering. Since {{\\|Haswell}}, it has been possible to sustain two memory reads (on ports 2 and 3) and one memory write (on port 4) each cycle. Each memory operation can be of any register size up to 512 bits. The Skylake memory subsystem has been improved: the store buffer has been increased from 42 entries in {{\\|Broadwell}} to 56, for a total of 128 simultaneous memory operations in flight, or roughly 60% of all µOPs. Special care was taken to reduce the penalty for page-split loads; previously, scenarios involving page-split loads were thought to be rarer than they actually are. Skylake addresses this by treating page-split loads the same as other split loads, reducing the page-split load penalty to around 5 cycles from 100 cycles in {{\\|Broadwell}}. The average latency to forward a store to a load has also been improved, and stores that miss in the L1$ generate L2$ requests to the next-level cache much earlier in Skylake than before. | ||
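A load is "split" when its bytes straddle a boundary: a cache-line split crosses a 64 B line, and a page split crosses a page boundary, which also requires a second address translation. The sketch below only classifies an access; it does not model the cycle penalties discussed above. It assumes 4 KiB pages, 64 B lines, and an access no larger than a line.

```python
PAGE_BYTES = 4096   # assumption: 4 KiB pages
LINE_BYTES = 64     # 64 B cache lines, as in the cache list above


def crosses(addr: int, size: int, block: int) -> bool:
    """True if the access [addr, addr + size) spans two aligned blocks of `block` bytes."""
    return (addr % block) + size > block


def classify_load(addr: int, size: int) -> str:
    # Every page boundary is also a cache-line boundary, so test the page case first.
    if crosses(addr, size, PAGE_BYTES):
        return "page split"
    if crosses(addr, size, LINE_BYTES):
        return "cache-line split"
    return "no split"


print(classify_load(0x1FF8, 16))  # page split
print(classify_load(0x103C, 8))   # cache-line split
print(classify_load(0x1040, 64))  # no split
```

Wide accesses make splits more likely: a misaligned 64-byte AVX-512 load always crosses a line boundary, so reducing the page-split penalty matters more as vector widths grow.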

| + | |||

| + | The L2 to L1 bandwidth in Skylake is the same as in {{\\|Haswell}}, at 64 bytes per cycle in either direction. Note that only one operation can be done each cycle; i.e., the L1 can either receive data from the L2 or send data to the load/store buffers each cycle, but not both. Bandwidth from the L2$ to the L3$ has also been increased, from 4 cycles/line to 2 cycles/line. <!-- The bandwidth from the level 2 cache to the shared level 3 is 64 bytes per cycle. ?? --> | ||
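A cycles-per-line figure is a throughput measure. With 64 B lines it converts directly to bytes per cycle, as sketched below. The conversion assumes one full 64 B line is moved every N cycles with no other overhead.

```python
LINE_BYTES = 64


def bytes_per_cycle(cycles_per_line: float, line_bytes: int = LINE_BYTES) -> float:
    """Sustained transfer rate implied by moving one cache line every N cycles."""
    return line_bytes / cycles_per_line


before, after = bytes_per_cycle(4), bytes_per_cycle(2)
print(f"{before:.0f} B/cycle -> {after:.0f} B/cycle ({after / before:.0f}x)")
# 16 B/cycle -> 32 B/cycle (2x)
```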

| + | |||

The [[medium level cache]] (MLC) and [[last level cache]] (LLC) was rebalanced. Traditionally, Intel had a 256 KiB [[L2 cache]] which was duplicated along with the L1s over in the LLC which was 2.5 MiB. That is, prior to Skylake, the 256 KiB L2 cache actually took up 512 KiB of space for a total of 2.25 mebibytes effective cache per core. In Skylake Intel doubled the L2 and quadrupled the effective capacity to 1 MiB while decreasing the LLC to 1.375 MiB. The LLC is also now made [[non-inclusive cache|non-inclusive]], i.e., the L2 may or may not be in the L3 (no guarantee is made); what stored where will depend on the particular access pattern of the executing application, the size of code and data accessed, and the inter-core sharing behavior. | The [[medium level cache]] (MLC) and [[last level cache]] (LLC) was rebalanced. Traditionally, Intel had a 256 KiB [[L2 cache]] which was duplicated along with the L1s over in the LLC which was 2.5 MiB. That is, prior to Skylake, the 256 KiB L2 cache actually took up 512 KiB of space for a total of 2.25 mebibytes effective cache per core. In Skylake Intel doubled the L2 and quadrupled the effective capacity to 1 MiB while decreasing the LLC to 1.375 MiB. The LLC is also now made [[non-inclusive cache|non-inclusive]], i.e., the L2 may or may not be in the L3 (no guarantee is made); what stored where will depend on the particular access pattern of the executing application, the size of code and data accessed, and the inter-core sharing behavior. | ||

<!-- | <!-- | ||

[[File:skylake server cache bandwidth.svg|left|200px]]--> | [[File:skylake server cache bandwidth.svg|left|200px]]--> | ||

Having an inclusive L3 makes cache coherence considerably easier to implement. [[Snooping]] only requires checking the L3 cache tags to know if the data is on board and in which core. It also makes passing data around a bit more efficient. It's currently unknown what mechanism Skylake uses to reduce snooping now that the L3 is non-inclusive. In the past, Intel has discussed a couple of additional options they were researching, such as NCID (non-inclusive cache, inclusive directory architecture). It's possible that an NCID is being used in Skylake or a related derivative. These changes also mean that software optimized for data placement in the various caches needs to be revised for the new hierarchy; in particular, where data is not shared, the overall capacity per core can be treated as L2+L3 for a total of 2.375 MiB. | Having an inclusive L3 makes cache coherence considerably easier to implement. [[Snooping]] only requires checking the L3 cache tags to know if the data is on board and in which core. It also makes passing data around a bit more efficient. It's currently unknown what mechanism Skylake uses to reduce snooping now that the L3 is non-inclusive. In the past, Intel has discussed a couple of additional options they were researching, such as NCID (non-inclusive cache, inclusive directory architecture). It's possible that an NCID is being used in Skylake or a related derivative. These changes also mean that software optimized for data placement in the various caches needs to be revised for the new hierarchy; in particular, where data is not shared, the overall capacity per core can be treated as L2+L3 for a total of 2.375 MiB. | ||
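
The L2+L3 figure can be checked with a few lines of arithmetic. This sketch uses the per-core sizes quoted in this article: a 1 MiB L2 and a 1.375 MiB LLC slice. It treats the non-inclusive sum as an upper bound, because a given line may still end up in both levels. The helper name is hypothetical.

```python
L2_KIB = 1024          # 1 MiB private L2 per core
LLC_SLICE_KIB = 1408   # 1.375 MiB LLC slice per core (1.375 * 1024)


def non_inclusive_capacity_kib(l2_kib: int, llc_kib: int) -> int:
    """Upper bound on distinct data per core when the LLC is non-inclusive:
    nothing forces L2 contents to be duplicated in the LLC, so the sizes add."""
    return l2_kib + llc_kib


per_core = non_inclusive_capacity_kib(L2_KIB, LLC_SLICE_KIB)
print(per_core / 1024)       # 2.375 (MiB per core)
print(28 * per_core / 1024)  # 66.5 (MiB across a 28-core die)
```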

| + | <!-- THIS NEEDS DOUBLE CHECKING, INTEL's INFO IS VERY UNCLEAR | ||

| + | With an increase to the L2 which is now 1 MiB, there is a latency penalty of 2 additional cycles (to 14 cycles from 12) but the max bandwidth from the L3 has also doubled accordingly to 64 bytes/cycle (from 32 B/cycle) with a sustainable bandwidth of 52 B/cycle. The L2 cache line size is 64 B and is 16-way set associative.--> | ||

| − | + | == New Technologies == | |

| − | + | ||

| − | -- | + | === Memory Protection Extension (MPX) === |

| − | + | {{main|x86/mpx|l1=Intel's Memory Protection Extension}} | |

| + | '''Memory Protection Extension''' ('''MPX''') is a new [[x86]] {{x86|extension}} that offers a hardware-level [[bound checking]] implementation. This extension allows an application to define memory boundaries for allocated memory areas. The processor can then check all subsequent memory accesses against those boundaries to ensure accesses are not [[out of bound]]. A program that accesses a bound-marked buffer outside its boundaries will generate an exception. | ||
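
The checks that MPX performs in hardware can be modeled in software to show the idea. The sketch below is a plain Python model, not MPX itself. `Bounds` and `BoundRangeError` are hypothetical names. Each bounds pair stores an inclusive lower and upper bound. Every access checks its first byte against the lower bound and its last byte against the upper bound, and a violation raises an error that stands in for the bound-range (#BR) exception.

```python
class BoundRangeError(Exception):
    """Stands in for the bound-range (#BR) exception raised on a violation."""


class Bounds:
    """Hypothetical software model of one bounds pair with inclusive limits."""

    def __init__(self, base: int, size: int):
        self.lower = base
        self.upper = base + size - 1  # last valid byte

    def check(self, addr: int, access_size: int = 1) -> None:
        # Lower-bound check on the first byte, upper-bound check on the last byte
        last = addr + access_size - 1
        if addr < self.lower or last > self.upper:
            raise BoundRangeError(
                f"access {addr:#x}-{last:#x} outside {self.lower:#x}-{self.upper:#x}"
            )


buf = Bounds(base=0x1000, size=16)  # a 16-byte buffer at 0x1000
buf.check(0x1000, 8)                # in bounds
buf.check(0x1008, 8)                # in bounds (ends exactly at 0x100F)
try:
    buf.check(0x100C, 8)            # runs 4 bytes past the end
except BoundRangeError as err:
    print(err)  # access 0x100c-0x1013 outside 0x1000-0x100f
```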

| + | |||

| + | === Key Protection Technology (KPT) === | ||

| + | '''Key Protection Technology''' ('''KPT''') is designed to help secure sensitive private keys in hardware at runtime. KPT augments QuickAssist Technology (QAT) hardware crypto accelerators with run-time storage of private keys using Intel's existing Platform Trust Technology (PTT), thereby allowing high-throughput hardware security acceleration. The QAT accelerators are all integrated onto Intel's new {{intel|Lewisburg|l=chipset}} chipset along with the Converged Security Manageability Engine (CSME), which implements Intel's PTT. The CSME is linked through a private hardware link that is invisible to x86 software and simple hardware probes. | ||

| + | |||

| + | === Memory Protection Keys for Userspace (PKU) === | ||

| + | '''Memory Protection Keys for Userspace''' ('''PKU''', also '''PKEY'''s) is an extension that provides a mechanism for enforcing page-based protections without requiring modification of the page tables when an application changes protection domains. PKU introduces 16 keys by re-purposing the 4 previously ignored bits of the page table entry. | ||
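
The key lookup and permission check can be sketched with plain bit manipulation. This assumes the x86-64 layout, in which the 4-bit protection key occupies bits 62:59 of a page table entry. It also assumes the per-thread PKRU register holds an access-disable (AD) and write-disable (WD) bit pair for each of the 16 keys. Switching protection domains then only requires writing a new PKRU value, which the model represents as a plain integer. The function names are hypothetical. The model ignores supervisor pages and all other page-table permission bits.

```python
PKEY_SHIFT = 59   # protection key in PTE bits 62:59
PKEY_MASK = 0xF   # 4 bits -> 16 keys


def pte_get_pkey(pte: int) -> int:
    return (pte >> PKEY_SHIFT) & PKEY_MASK


def pte_set_pkey(pte: int, key: int) -> int:
    if not 0 <= key <= PKEY_MASK:
        raise ValueError("protection keys range from 0 to 15")
    # Clear bits 62:59, then store the new key; all other PTE bits are kept.
    return (pte & ~(PKEY_MASK << PKEY_SHIFT)) | (key << PKEY_SHIFT)


def pkru_allows(pkru: int, key: int, write: bool) -> bool:
    access_disabled = (pkru >> (2 * key)) & 1     # AD bit for this key
    write_disabled = (pkru >> (2 * key + 1)) & 1  # WD bit for this key
    if access_disabled:
        return False
    return not (write and write_disabled)


pte = pte_set_pkey(0x12345067, key=5)  # tag a (hypothetical) PTE with key 5
print(pte_get_pkey(pte))               # 5
pkru = 1 << (2 * 5 + 1)                # write-disable key 5; page tables untouched
print(pkru_allows(pkru, 5, write=False), pkru_allows(pkru, 5, write=True))  # True False
```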

| + | |||

| + | === Mode-Based Execute (MBE) Control === | ||

| + | '''Mode-Based Execute''' ('''MBE''') is an enhancement to the Extended Page Tables (EPT) that provides a finer level of control over execute permissions. With MBE, the previous Execute Enable (''X'') bit is split into Execute Userspace page (XU) and Execute Supervisor page (XS) permissions. The processor selects which permission applies based on the guest page permission. With proper software support, hypervisors can take advantage of this to ensure the integrity of kernel-level code. | ||
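
Below is a minimal model of the instruction-fetch check. It assumes the EPT entry layout described in Intel's SDM. Bit 2 is the execute bit, which acts as XS when MBE is enabled. Bit 10 is the XU bit, which only matters when MBE is enabled. The `user_mode_address` flag stands in for the guest page permission mentioned above. All names are hypothetical, and read/write permissions are not modeled.

```python
EPT_READ = 1 << 0
EPT_WRITE = 1 << 1
EPT_EXEC = 1 << 2        # "X" without MBE; acts as XS (supervisor execute) with MBE
EPT_EXEC_USER = 1 << 10  # XU (user execute); ignored unless MBE is enabled


def ept_allows_fetch(entry: int, user_mode_address: bool, mbe: bool) -> bool:
    if not mbe:
        # A single execute bit covers both user-mode and supervisor-mode code.
        return bool(entry & EPT_EXEC)
    # With MBE, the guest page's user/supervisor status selects which bit applies.
    bit = EPT_EXEC_USER if user_mode_address else EPT_EXEC
    return bool(entry & bit)


# A hypervisor marks a guest page as executable by supervisor code only.
kernel_code = EPT_READ | EPT_EXEC
print(ept_allows_fetch(kernel_code, user_mode_address=False, mbe=True))  # True
print(ept_allows_fetch(kernel_code, user_mode_address=True, mbe=True))   # False
```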

== Mesh Architecture == | == Mesh Architecture == | ||

| + | {{main|intel/mesh interconnect architecture|l1=Intel's Mesh Interconnect Architecture}} | ||

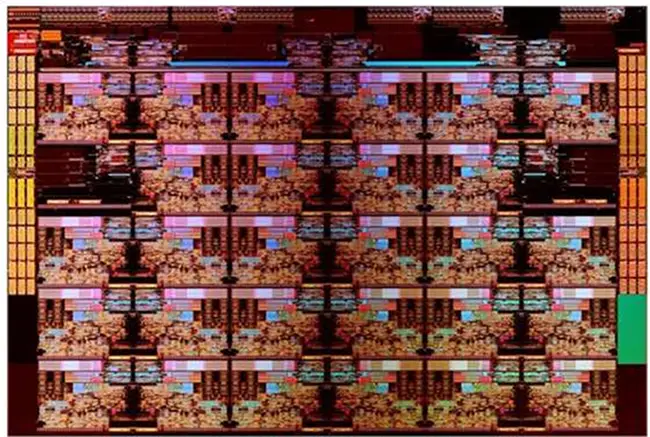

[[File:skylake sp xcc die config.png|right|400px]] | [[File:skylake sp xcc die config.png|right|400px]] | ||

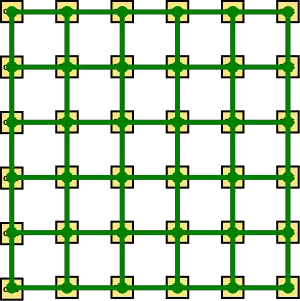

Over the {{intel|microarchitectures|previous number of generations}}, Intel has been adding cores to the die and connecting them via a {{intel|ring architecture}}. This was sufficient until recently. With each generation, the added cores increased the access latency while lowering the available bandwidth per core. Intel mitigated this problem by splitting the die into two halves, each on its own ring. This reduced the hop distance and added bandwidth, but it did not solve the growing fundamental inefficiencies of the ring architecture. | Over the {{intel|microarchitectures|previous number of generations}}, Intel has been adding cores to the die and connecting them via a {{intel|ring architecture}}. This was sufficient until recently. With each generation, the added cores increased the access latency while lowering the available bandwidth per core. Intel mitigated this problem by splitting the die into two halves, each on its own ring. This reduced the hop distance and added bandwidth, but it did not solve the growing fundamental inefficiencies of the ring architecture. | ||

| − | This was completely addressed with the new mesh architecture that is implemented in the Skylake server processors. The mesh | + | This was completely addressed with the new {{intel|mesh architecture}} that is implemented in the Skylake server processors. The mesh consists of a 2-dimensional array of half rings running in the vertical and horizontal directions, which allows communication to take the shortest path to the correct node. The new mesh architecture implements a modular design for the routing resources in order to remove the various bottlenecks. That is, the mesh architecture now integrates the caching agent, the home agent, and the IO subsystem on the mesh interconnect, distributed across all the cores. Each core now has its own associated LLC slice as well as the snoop filter and the Caching and Home Agent (CHA). Additional nodes such as the two memory controllers, the {{intel|Ultra Path Interconnect}} (UPI) nodes, and PCIe are now independent nodes on the mesh as well, and they behave identically to any other node/core in the network. This means that in addition to the performance increase expected from lower core-to-core and core-to-memory latency, there should be a substantial increase in I/O performance. The CHA found on each of the LLC slices maps the addresses being accessed to the specific LLC bank, memory controller, or I/O subsystem. This provides the information required for routing to take place. |

| + | |||

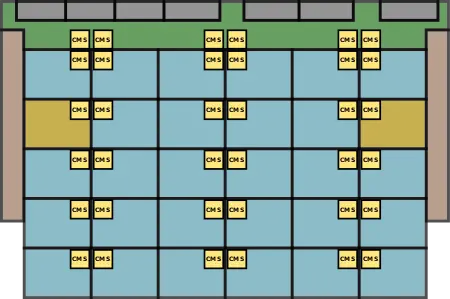

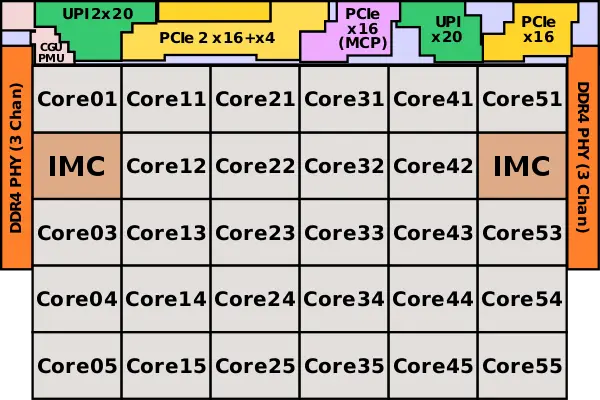

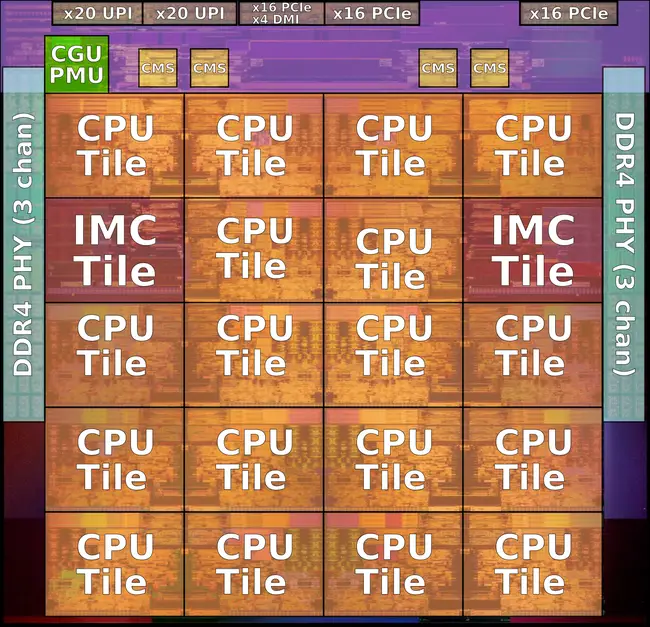

| + | === Organization === | ||

| + | [[File:skylake (server) half rings.png|right|400px]] | ||

| + | Each die has a grid of converged mesh stops (CMS). For example, the XCC die has 36 CMSs. As the name implies, the CMS is a block that effectively interfaces between all the various subsystems and the mesh interconnect. The locations of the CMSs for the XCC die are shown in the diagram below. It should be pointed out that although the CMS appears to be inside the core tiles, most of the mesh is likely routed above the cores, in a similar fashion to how Intel wired the ring interconnect above the caches in order to reduce the die area. | ||

| + | |||

| + | |||

| + | :[[File:skylake server cms units.svg|450px]] | ||

| + | |||

| + | |||

| + | Each core tile interfaces with the mesh via its associated converged mesh stop (CMS). The CMSs at the very top allow the UPI and PCIe links to interface with the mesh. Additionally, the two integrated memory controllers have their own CMSs, which they use to interface with the mesh as well. | ||

| + | |||

| + | Every stop at each tile is directly connected to its immediate four neighbors – north, south, east, and west. | ||

| + | |||

| + | |||

| + | ::[[File:skylake sp cms links.svg|300px]] | ||

| + | |||

| + | |||

| + | Every vertical column of CMSs forms a bi-directional half ring. Similarly, every horizontal row forms a bi-directional half ring. | ||

| + | |||

| + | |||

| + | ::[[File:skylake sp mesh half rings.png|1000px]] | ||

| + | |||

| + | |||

| + | {{clear}} | ||
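
The sketch below illustrates why a grid shortens paths. It counts hops between stops on a grid where each stop links only to its four neighbors, and compares the average with a single bidirectional ring of the same size. It assumes 36 CMSs arranged 6 x 6 and dimension-order routing, that is, along the column's half ring and then along the row's. Intel's actual routing policy and the two-ring layout of earlier dies are not modeled, so the numbers are illustrative only.

```python
from itertools import product


def mesh_hops(src, dst):
    """Hops between two mesh stops given as (row, col): vertical leg, then horizontal."""
    (r1, c1), (r2, c2) = src, dst
    return abs(r1 - r2) + abs(c1 - c2)


def ring_hops(a, b, n):
    """Hops between stops a and b on one bidirectional ring of n stops."""
    d = abs(a - b) % n
    return min(d, n - d)


def average_mesh_hops(rows, cols):
    stops = list(product(range(rows), range(cols)))
    total = sum(mesh_hops(s, d) for s in stops for d in stops if s != d)
    return total / (len(stops) * (len(stops) - 1))


def average_ring_hops(n):
    total = sum(ring_hops(a, b, n) for a in range(n) for b in range(n) if a != b)
    return total / (n * (n - 1))


# 36 stops: a 6 x 6 mesh versus a single 36-stop ring
print(average_mesh_hops(6, 6))          # 4.0
print(round(average_ring_hops(36), 2))  # 9.26
```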

=== Cache Coherency === | === Cache Coherency === | ||

| − | Given the new mesh architecture, new tradeoffs were involved. The new {{intel|UPI}} inter-socket links are a valuable resource that could bottlenecked when flooded with unnecessary cross-socket snoop requests. There's also considerably higher memory bandwidth with Skylake which can impact performance. As a compromise, the previous four snoop modes (no-snoop, early snoop, home snoop, and directory) have been reduced to just directory-base coherency. This also alleviates the implementation | + | Given the new mesh architecture, new tradeoffs were involved. The new {{intel|UPI}} inter-socket links are a valuable resource that could become bottlenecked when flooded with unnecessary cross-socket snoop requests. There's also considerably higher memory bandwidth with Skylake, which can impact performance. As a compromise, the previous four snoop modes (no-snoop, early snoop, home snoop, and directory) have been reduced to just directory-based coherency. This also reduces the implementation complexity, which is already considerable. |

[[File:snc clusters.png|right|350px]] | [[File:snc clusters.png|right|350px]] | ||

| − | It should be pointed out that the directory-base coherency optimizations that were done in previous generations have been furthered improved with Skylake - particularly OSB, {{intel|HitME}} cache, IO directory cache. Skylake maintained support for {{intel|Opportunistic Snoop Broadcast}} (OSB) which allows the network to opportunistically make use of the UPI links when idle or lightly loaded thereby avoiding an expensive memory directory lookup. With the mesh network and distributed CHAs, HitME is now distributed and scales with the CHAs, enhancing the speeding up of cache-to-cache transfers (Those are your migratory cache lines that frequently get transferred between nodes). Specifically for I/O operations, the I/O directory cache (IODC), which was introduced with {{intel|Haswell|l=arch}}, improves stream throughput by eliminating directory reads for InvItoE from | + | It should be pointed out that the directory-based coherency optimizations made in previous generations have been further improved with Skylake, particularly OSB, the {{intel|HitME}} cache, and the IO directory cache. Skylake maintained support for {{intel|Opportunistic Snoop Broadcast}} (OSB), which allows the network to opportunistically make use of the UPI links when they are idle or lightly loaded, thereby avoiding an expensive memory directory lookup. With the mesh network and distributed CHAs, HitME is now distributed and scales with the CHAs, speeding up cache-to-cache transfers (i.e., migratory cache lines that frequently get transferred between nodes). Specifically for I/O operations, the I/O directory cache (IODC), which was introduced with {{intel|Haswell|l=arch}}, improves stream throughput by eliminating directory reads for InvItoE from snoop caching agents. Previously, this was implemented as a 64-entry directory cache to complement the directory in memory. In Skylake, with a distributed CHA at each node, the IODC is implemented as an eight-entry directory cache per CHA. |
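
The sketch below shows why directory-based coherency saves UPI bandwidth compared with broadcast snooping. The directory entry here is a hypothetical full bit-vector of the sockets that may hold a line. Skylake's real in-memory directory state is more compact and is not modeled here. The sketch only shows how a directory reduces the number of cross-socket snoops.

```python
class DirectoryEntry:
    """Hypothetical directory state for one cache line: which sockets may cache it."""

    def __init__(self):
        self.sharers = 0  # bit i set -> socket i may hold a copy

    def record_fill(self, socket):
        self.sharers |= 1 << socket


def snoops_with_directory(entry, requester, sockets):
    # Only sockets the directory lists as possible holders need a snoop.
    return [s for s in range(sockets) if s != requester and (entry.sharers >> s) & 1]


def snoops_with_broadcast(requester, sockets):
    # Without a directory, every other socket must be snooped.
    return [s for s in range(sockets) if s != requester]


line = DirectoryEntry()
line.record_fill(socket=3)  # only socket 3 has read the line
print(snoops_with_directory(line, requester=0, sockets=8))  # [3]
print(len(snoops_with_broadcast(requester=0, sockets=8)))   # 7
```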

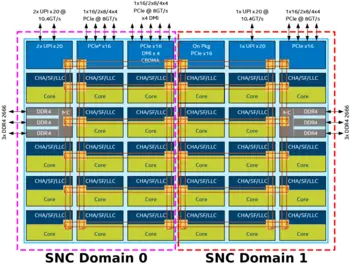

==== Sub-NUMA Clustering ==== | ==== Sub-NUMA Clustering ==== | ||

| − | In previous generations Intel had a feature called {{intel|cluster-on-die}} (COD) which was introduced with {{intel|Haswell|l=arch}}. With Skylake, there's a similar feature called {{intel|sub-NUMA cluster}} (SNC). With a memory controller physically located on each side of the die, SNC allows for the creation of two localized domains with each memory controller belonging to each domain. The processor can then map the addresses from the controller to the distributed home | + | In previous generations, Intel had a feature called {{intel|cluster-on-die}} (COD), which was introduced with {{intel|Haswell|l=arch}}. With Skylake, there's a similar feature called {{intel|sub-NUMA cluster}} (SNC). With a memory controller physically located on each side of the die, SNC allows for the creation of two localized domains, with each memory controller belonging to one domain. The processor can then map the addresses from each controller to the distributed home agents and LLC slices in its domain. This allows executing code to experience lower LLC and memory latency within its domain compared to accesses outside of the domain. |

| − | It should be pointed out that in contrast to COD, SNC has a unique location for every | + | It should be pointed out that, in contrast to COD, SNC gives every address a unique location in the LLC, and a line is never duplicated across LLC banks (previously, COD cache lines could have copies). Additionally, on multiprocessor systems, addresses mapped to memory on remote sockets are still uniformly distributed across all LLC banks, irrespective of the localized SNC domain. |
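
The one-home-per-line property of SNC can be illustrated with a toy address-to-CHA mapping. Everything in this sketch is an assumption. Intel does not disclose its hash, so a simple modulo over 64-byte line numbers stands in for it. Each SNC domain is assumed to own one contiguous physical range, split at a hypothetical `snc_boundary`, and half of the CHAs. The CHA count defaults to 28, as on the XCC die.

```python
LINE_BYTES = 64


def home_cha(addr, snc_boundary, num_chas=28, remote=False):
    """Return the single CHA/LLC slice that owns the line containing `addr`.

    Hypothetical mapping: a modulo over the line number replaces Intel's
    undisclosed hash, and `num_chas` is assumed to be even.
    """
    line = addr // LINE_BYTES
    if remote:
        # Memory homed on another socket: spread over every CHA, ignoring SNC domains.
        return line % num_chas
    half = num_chas // 2
    domain = 0 if addr < snc_boundary else 1  # which memory controller's range
    return domain * half + line % half        # CHAs 0-13 or 14-27


BOUNDARY = 1 << 30  # hypothetical split between the two SNC domains
print(home_cha(0x40, BOUNDARY))             # 1 (domain 0)
print(home_cha(BOUNDARY + 0x40, BOUNDARY))  # 23 (domain 1)
```

Because the function returns exactly one CHA per line, no line can be duplicated across LLC banks. This matches the SNC property described above.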

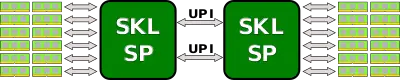

== Scalability == | == Scalability == | ||

{{see also|intel/quickpath interconnect|intel/ultra path interconnect|l1=QuickPath Interconnect|l2=Ultra Path Interconnect}} | {{see also|intel/quickpath interconnect|intel/ultra path interconnect|l1=QuickPath Interconnect|l2=Ultra Path Interconnect}} | ||

| − | In the last couple of generations, Intel has been utilizing {{intel|QuickPath Interconnect}} (QPI) which served as a high-speed point-to-point interconnect. QPI has been replaced the {{intel|Ultra Path Interconnect}} (UPI) which is higher-efficiency coherent interconnect for scalable systems, allowing multiple processors to share a single shared address space. Depending on the exact model, each processor can have | + | In the last couple of generations, Intel has been utilizing {{intel|QuickPath Interconnect}} (QPI), which served as a high-speed point-to-point interconnect. QPI has been replaced by the {{intel|Ultra Path Interconnect}} (UPI), a higher-efficiency coherent interconnect for scalable systems that allows multiple processors to share a single address space. Depending on the exact model, each processor can have either two or three UPI links connecting to the other processors. |

| − | UPI links eliminate some of the scalability | + | UPI links eliminate some of the scalability limitations that surfaced in QPI over the past few microarchitecture iterations. They use a directory-based home snoop coherency protocol and operate at either 10.4 GT/s or 9.6 GT/s. This is quite a bit different from previous generations. In addition to the various improvements made to the protocol layer, {{intel|Skylake SP|l=core}} now implements a distributed CHA that is situated along with the LLC bank on each core. It's in charge of tracking the various requests from the core as well as responding to snoop requests from both local and remote agents. Distributing the home agent was made easier by removing the requirement to preallocate resources at the home agent. This also means that future architectures should be able to scale up well. |

Depending on the exact model, Skylake processors can scale from 2-way all the way up to 8-way multiprocessing. Note that the high-end models that support 8-way multiprocessing always come with three UPI links for this purpose, while the lower-end processors can have either two or three UPI links. Below are the typical configurations for those processors. | Depending on the exact model, Skylake processors can scale from 2-way all the way up to 8-way multiprocessing. Note that the high-end models that support 8-way multiprocessing always come with three UPI links for this purpose, while the lower-end processors can have either two or three UPI links. Below are the typical configurations for those processors. | ||

| Line 186: | Line 553: | ||

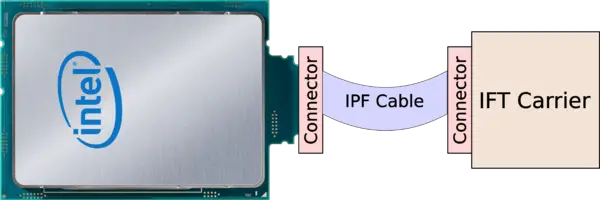

:: [[File:skylake sp with hfi to carrier.png|600px]] | :: [[File:skylake sp with hfi to carrier.png|600px]] | ||

| + | |||

| + | |||

| + | Regardless of the model, the integrated fabric die has a TDP of 8 Watts (note that this value is already included in the model's TDP value). | ||

| + | |||

| + | {{clear}} | ||

| + | |||

| + | == Sockets/Platform == | ||

| + | Both {{intel|Skylake X|l=core}} and {{intel|Skylake SP|l=core}} are two-chip solutions linked together via Intel's standard [[DMI 3.0]] bus interface, which utilizes 4 [[PCIe]] 3.0 lanes (with a transfer rate of 8 GT/s per lane). {{intel|Skylake SP|l=arch}} has additional SMP capabilities, utilizing either 2 or 3 (depending on the model) {{intel|Ultra Path Interconnect}} (UPI) links. | ||

| + | |||

| + | {| class="wikitable" style="text-align: center;" | ||

| + | |- | ||

| + | ! !! Core !! Socket !! Permanent !! Platform !! Chipset !! Chipset Bus !! SMP Interconnect | ||

| + | |- | ||

| + | | [[File:skylake x (back).png|100px|link=intel/cores/skylake_x]] || {{intel|Skylake X|l=core}} || {{intel|LGA-2066}} || rowspan="2" | No || 2-chip || rowspan="2" | {{intel|Lewisburg}} || rowspan="2" | [[DMI 3.0]] || {{tchk|no}} | ||

| + | |- | ||

| + | | || {{intel|Skylake SP|l=core}} || {{intel|LGA-3647}} || 2-chip + 2-8-way SMP || {{intel|Ultra Path Interconnect|UPI}} | ||

| + | |} | ||

| + | |||

| + | === Packages === | ||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! Core !! Die Type !! Package !! Dimensions | ||

| + | |- | ||

| + | | rowspan="3" | {{intel|Skylake SP|l=core}} || LCC || rowspan="3" | {{intel|FCLGA-3647}} || rowspan="3" | 76.16 mm x 56.6 mm | ||

| + | |- | ||

| + | | HCC | ||

| + | |- | ||

| + | | XCC | ||

| + | |- | ||

| + | | rowspan="2" | {{intel|Skylake X|l=core}} || LCC || rowspan="2" | {{intel|FCLGA-2066}} || rowspan="2" | 58.5 mm x 51 mm | ||

| + | |- | ||

| + | | HCC | ||

| + | |} | ||

| + | |||

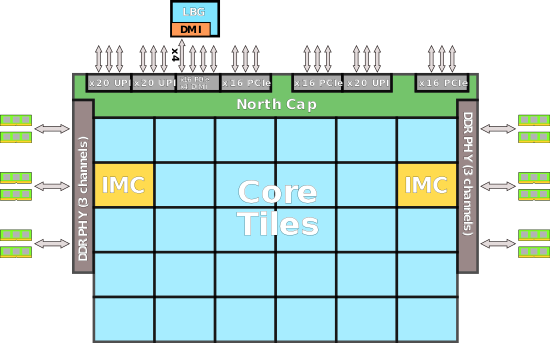

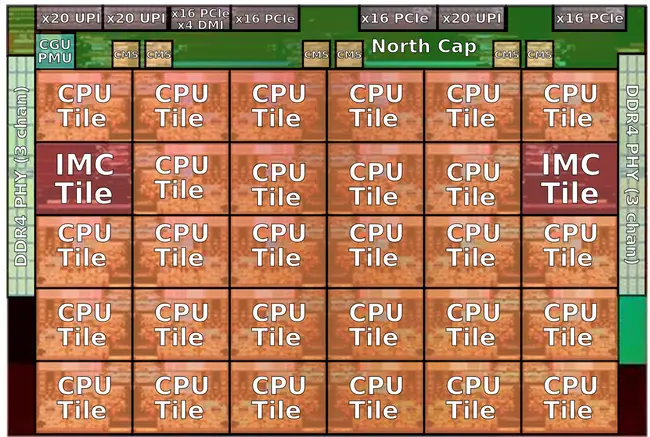

| + | == Floorplan == | ||

| + | [[File:skylake sp major blocks.svg|right|400px]] | ||

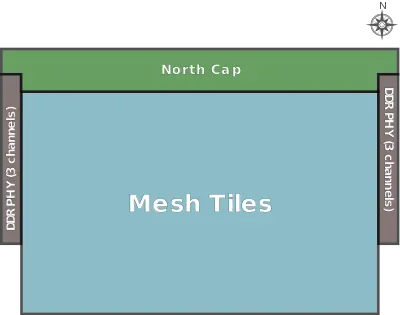

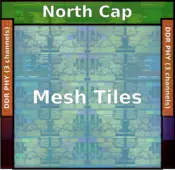

| + | All Skylake server dies consist of three major blocks: | ||

| + | |||

| + | * DDR PHYs | ||

| + | * North Cap | ||

| + | * Mesh Tiles | ||

| + | |||

| + | Those blocks are found in all die configurations and form the base for Intel's highly configurable floorplan. Depending on the market segment and model specification targets, Intel can add and remove rows of tiles. | ||

| + | |||

| + | <div style="text-align: center;"> | ||

| + | <div style="float: left;">'''XCC Die'''<br>[[File:skylake (server) die major blocks (xcc).png|250px]]</div> | ||

| + | <div style="float: left; margin-left: 30px;">'''HCC Die'''<br>[[File:skylake (server) die major blocks (hcc).png|175px]]</div> | ||

| + | </div> | ||

{{clear}} | {{clear}} | ||

| + | === Physical Layout === | ||

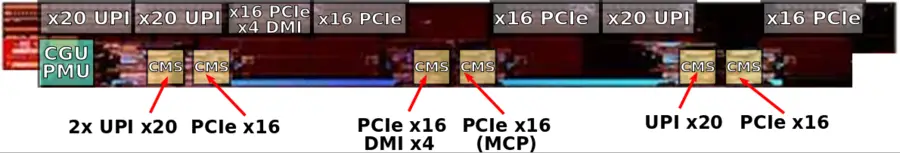

| + | ==== North Cap ==== | ||

| + | The '''North Cap''' at the very top of the die contains all the I/O agents and PHYs as well as serial IP ports and the fuse unit. For the most part, this configuration is the same for all the dies. For the smaller dies, the extras are removed (e.g., the in-package PCIe link is not needed). | ||

| + | |||

| + | At the very top of the North Cap is the I/O connectivity. There are a total of 128 high-speed I/O lanes: 3×16 (48) PCIe lanes operating at 8 GT/s, x4 DMI lanes for hooking up the Lewisburg chipset, 16 on-package PCIe lanes (operating at 2.5/5/8 GT/s), and 3×20 (60) {{intel|Ultra Path Interconnect}} (UPI) lanes operating at 10.4 GT/s for [[multiprocessing]] support. | ||
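
The 128-lane total follows directly from the interfaces listed above. The tally below is a quick check, and the dictionary keys are just descriptive labels.

```python
io_lanes = {
    "PCIe 3.0 (3 x16)": 3 * 16,
    "DMI (x4)": 4,
    "On-package PCIe (x16)": 16,
    "UPI (3 x20)": 3 * 20,
}
total = sum(io_lanes.values())
print(total)  # 128
```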

| + | |||

| + | At the south-west corner of the North Cap are the clock generator unit (CGU) and the Global Power Management Unit (Global PMU). The CGU contains an all-digital (AD) filter phase-locked loop (PLL) and an all-digital uncore PLL. The filter ADPLL generates all the on-die reference clocks used by the core PLLs and one uncore PLL. The power management unit also has its own dedicated all-digital PLL. | ||

| + | |||

| + | At the bottom part of the North Cap are the {{intel|mesh interconnect architecture#Overview|Mesh stops}} for the various I/O to interface with the Mesh. | ||

| + | |||

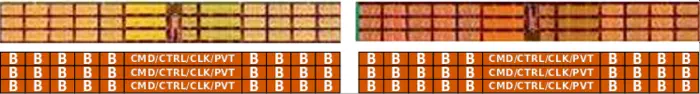

| + | ==== DDR PHYs ==== | ||

| + | There are two DDR4 PHYs, which are identical for all the dies (in the low-end models, the extra channel is simply disabled). They form two independent and identical physical sections of 3 DDR4 channels each, which reside on the east and west edges of the die. Each channel is 72 bits wide (64 data bits plus 8 ECC bits) and supports 2 DIMMs per channel with a data rate of up to 2666 MT/s, for a bandwidth of 21.33 GB/s per channel and an aggregate bandwidth of 128 GB/s across all six channels. RDIMM and LRDIMM are supported. | ||
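
The per-channel and aggregate figures follow from the data rate and the 64-bit data path, since the 8 ECC bits carry no payload. DDR4-2666 actually runs at 2666.67 MT/s (8000/3), which is where 21.33 GB/s comes from. The sketch assumes decimal gigabytes and all six channels populated.

```python
def channel_bandwidth_gbs(mega_transfers_per_s: float, data_bits: int = 64) -> float:
    """Peak bandwidth of one DDR4 channel in GB/s (10**9 bytes per second)."""
    bytes_per_transfer = data_bits // 8  # ECC bits excluded
    return mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


per_channel = channel_bandwidth_gbs(8000 / 3)  # DDR4-2666
print(round(per_channel, 2))                   # 21.33
print(round(6 * per_channel, 1))               # 128.0 (two PHYs x 3 channels)
```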

| + | |||

| + | The location of the PHYs was carefully chosen to ease the package design: it maintains escape routing and pin-out order matching between the CPU and the DIMM slots, which shortens package and PCB routing lengths and improves signal integrity. | ||

| + | |||

| + | ==== Layout ==== | ||

| + | :[[File:skylake (server) die area layout.svg|600px]] | ||

| + | |||

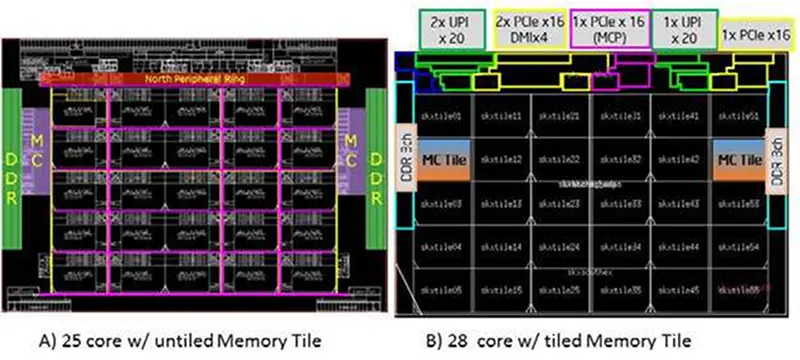

| + | ==== Evolution ==== | ||

| + | The original Skylake large die started out as a 5 by 5 grid of core tiles (25 tiles, 25 cores), as shown on the left side of the image from Intel below. The memory controllers were next to the PHYs on the east and west sides. An additional row was inserted to get to a 5 by 6 grid. Two core tiles, one from each side, were then replaced by the new memory controller modules, which interface with the mesh just like any other core tile. The final die is shown on the right side of the same image. | ||

| + | |||

| + | :[[File:skylaake server layout evoluation.png|800px]] | ||

| + | |||

| + | == Die == | ||

| + | {{see also|intel/microarchitectures/skylake_(client)#Die|l1=Client Skylake's Die}} | ||

| + | [[File:intel xeon skylake sp.jpg|right|300px|thumb|Skylake SP chips and wafer.]] | ||

| + | Skylake server-class and high-end desktop (HEDT) models are built from 3 different dies: | ||

| + | |||

| + | * 12 tiles (3x4), 10-core, Low Core Count (LCC) | ||

| + | * 20 tiles (5x4), 18-core, High Core Count (HCC) | ||

| + | * 30 tiles (5x6), 28-core, Extreme Core Count (XCC) | ||

| + | |||

| + | === North Cap === | ||

| + | '''HCC:''' | ||

| + | |||

| + | :[[File:skylake (server) northcap (hcc).png|700px]] | ||

| + | |||

| + | :[[File:skylake (server) northcap (hcc) (annotated).png|700px]] | ||

| + | |||

| + | '''XCC:''' | ||

| + | |||

| + | :[[File:skylake (server) northcap (xcc).png|900px]] | ||

| + | |||

| + | :[[File:skylake (server) northcap (xcc) (annotated).png|900px]] | ||

| + | |||

| + | |||

| + | === Memory PHYs === | ||

| + | Data bytes are located in the north and south subsections of the channel layout. The command, control, and clock signals, as well as the process, supply voltage, and temperature (PVT) compensation circuitry, are located in the middle section of the channels. | ||

| + | |||

| + | :[[File:skylake sp memory phys (annotated).png|700px]] | ||

| + | |||

| + | === Core Tile === | ||

| + | * ~4.8375 mm x 3.7163 mm | ||

| + | * ~17.978 mm² tile area | ||

| + | |||

| + | :[[File:skylake sp core.png|500px]] | ||

| + | |||

| + | :[[File:skylake sp mesh core tile zoom.png|700px]] | ||

| + | |||

| + | === Low Core Count (LCC) === | ||

| + | * [[14 nm process]] | ||

| + | * 12 metal layers | ||

| + | * ~22.26 mm x ~14.62 mm | ||

| + | * ~325.44 mm² die size | ||

| + | * [[10 cores]] | ||

| + | * 12 tiles (3x4) | ||

| + | |||

| + | |||

| + | : (NOT official die shot, artist's rendering based on the larger die) | ||

| + | : [[File:skylake lcc die shot.jpg|650px]] | ||

| + | |||

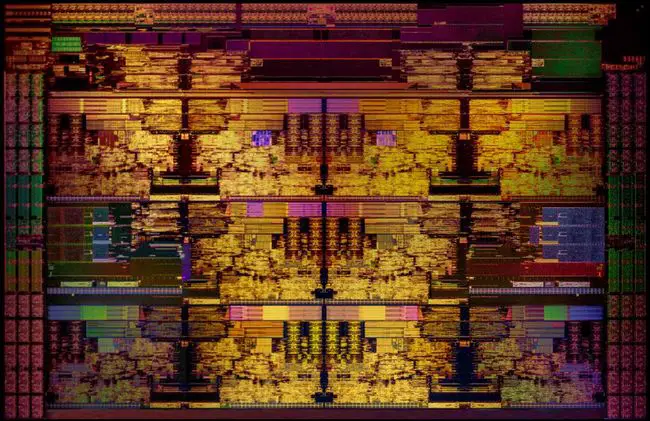

| + | === High Core Count (HCC) === | ||

| + | Die shot of the [[octadeca core]] HEDT {{intel|Skylake X|l=core}} processors. | ||

| + | |||

| + | * [[14 nm process]] | ||

| + | * 13 metal layers | ||

| + | * ~485 mm² die size (estimated) | ||

| + | * [[18 cores]] | ||

| + | * 20 tiles (5x4) | ||

| + | |||

| + | : [[File:skylake (octadeca core).png|650px]] | ||

| + | |||

| + | |||

| + | : [[File:skylake (octadeca core) (annotated).png|650px]] | ||

| + | |||

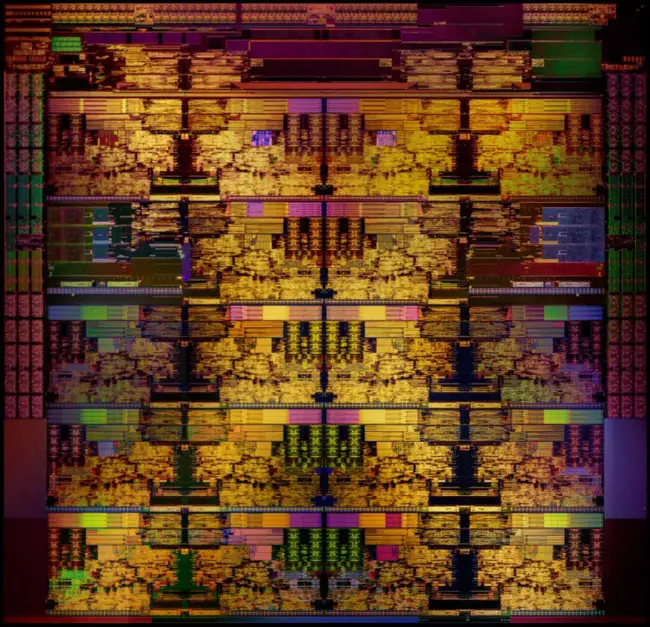

| + | === Extreme Core Count (XCC) === | ||

| + | * [[14 nm process]] | ||

| + | * 13 metal layers | ||

| + | * ~694 mm² die size (estimated) | ||

| + | * [[28 cores]] | ||

| + | * 30 tiles (5x6) | ||

| + | |||

| + | |||

| + | : [[File:skylake-sp hcc die shot.png|class=wikichip_ogimage|650px]] | ||

| + | |||

| + | |||

| + | : [[File:skylake-sp hcc die shot (annotated).png|650px]] | ||

| + | |||

| + | == All Skylake Chips == | ||

| + | <!-- NOTE: | ||

| + | This table is generated automatically from the data in the actual articles. | ||

| + | If a microprocessor is missing from the list, an appropriate article for it needs to be | ||

| + | created and tagged accordingly. | ||

| + | |||

| + | Missing a chip? please dump its name here: https://en.wikichip.org/wiki/WikiChip:wanted_chips | ||

| + | --> | ||

| + | {{comp table start}} | ||

| + | <table class="comptable sortable tc6 tc7 tc14 tc15"> | ||

| + | <tr class="comptable-header"><th> </th><th colspan="24">List of Skylake Processors</th></tr> | ||

| + | <tr class="comptable-header"><th> </th><th colspan="9">Main processor</th><th colspan="2">Frequency/{{intel|Turbo Boost|Turbo}}</th><th>Mem</th><th colspan="7">Major Feature Diff</th></tr> | ||

| + | {{comp table header 1|cols=Launched, Price, Family, Core Name, Cores, Threads, %L2$, %L3$, TDP, %Frequency, %Max Turbo, Max Mem, Turbo, SMT}} | ||

| + | <tr class="comptable-header comptable-header-sep"><th> </th><th colspan="25">[[Uniprocessors]]</th></tr> | ||

| + | {{#ask: [[Category:microprocessor models by intel]] [[instance of::microprocessor]] [[microarchitecture::Skylake (server)]] [[max cpu count::1]] | ||

| + | |?full page name | ||

| + | |?model number | ||

| + | |?first launched | ||

| + | |?release price | ||

| + | |?microprocessor family | ||

| + | |?core name | ||

| + | |?core count | ||

| + | |?thread count | ||

| + | |?l2$ size | ||

| + | |?l3$ size | ||

| + | |?tdp | ||

| + | |?base frequency#GHz | ||

| + | |?turbo frequency (1 core)#GHz | ||

| + | |?max memory#GiB | ||

| + | |?has intel turbo boost technology 2_0 | ||

| + | |?has simultaneous multithreading | ||

| + | |format=template | ||

| + | |template=proc table 3 | ||

| + | |searchlabel= | ||

| + | |sort=microprocessor family, model number | ||

| + | |order=asc,asc | ||

| + | |userparam=16:15 | ||

| + | |mainlabel=- | ||

| + | |limit=200 | ||

| + | }} | ||

| + | <tr class="comptable-header comptable-header-sep"><th> </th><th colspan="25">[[Multiprocessors]] (2-way)</th></tr> | ||

| + | {{#ask: | ||

| + | [[Category:microprocessor models by intel]] [[instance of::microprocessor]] [[microarchitecture::Skylake (server)]] [[max cpu count::2]] | ||

| + | |?full page name | ||

| + | |?model number | ||

| + | |?first launched | ||

| + | |?release price | ||

| + | |?microprocessor family | ||

| + | |?core name | ||

| + | |?core count | ||

| + | |?thread count | ||

| + | |?l2$ size | ||

| + | |?l3$ size | ||

| + | |?tdp | ||

| + | |?base frequency#GHz | ||

| + | |?turbo frequency (1 core)#GHz | ||

| + | |?max memory#GiB | ||

| + | |?has intel turbo boost technology 2_0 | ||

| + | |?has simultaneous multithreading | ||

| + | |format=template | ||

| + | |template=proc table 3 | ||

| + | |searchlabel= | ||

| + | |sort=microprocessor family, model number | ||

| + | |order=asc,asc | ||

| + | |userparam=16:15 | ||

| + | |mainlabel=- | ||

| + | |limit=60 | ||

| + | }} | ||

| + | <tr class="comptable-header comptable-header-sep"><th> </th><th colspan="25">[[Multiprocessors]] (4-way)</th></tr> | ||

| + | {{#ask: | ||

| + | [[Category:microprocessor models by intel]] [[instance of::microprocessor]] [[microarchitecture::Skylake (server)]] [[max cpu count::4]] | ||

| + | |?full page name | ||

| + | |?model number | ||

| + | |?first launched | ||

| + | |?release price | ||

| + | |?microprocessor family | ||

| + | |?core name | ||

| + | |?core count | ||

| + | |?thread count | ||

| + | |?l2$ size | ||

| + | |?l3$ size | ||

| + | |?tdp | ||

| + | |?base frequency#GHz | ||

| + | |?turbo frequency (1 core)#GHz | ||

| + | |?max memory#GiB | ||

| + | |?has intel turbo boost technology 2_0 | ||

| + | |?has simultaneous multithreading | ||

| + | |format=template | ||

| + | |template=proc table 3 | ||

| + | |searchlabel= | ||

| + | |sort=microprocessor family, model number | ||

| + | |order=asc,asc | ||

| + | |userparam=16:15 | ||

| + | |mainlabel=- | ||

| + | |limit=60 | ||

| + | }} | ||

| + | <tr class="comptable-header comptable-header-sep"><th> </th><th colspan="25">[[Multiprocessors]] (8-way)</th></tr> | ||

| + | {{#ask: | ||

| + | [[Category:microprocessor models by intel]] [[instance of::microprocessor]] [[microarchitecture::Skylake (server)]] [[max cpu count::8]] | ||

| + | |?full page name | ||

| + | |?model number | ||

| + | |?first launched | ||

| + | |?release price | ||

| + | |?microprocessor family | ||

| + | |?core name | ||

| + | |?core count | ||

| + | |?thread count | ||

| + | |?l2$ size | ||

| + | |?l3$ size | ||

| + | |?tdp | ||

| + | |?base frequency#GHz | ||

| + | |?turbo frequency (1 core)#GHz | ||

| + | |?max memory#GiB | ||

| + | |?has intel turbo boost technology 2_0 | ||

| + | |?has simultaneous multithreading | ||

| + | |format=template | ||

| + | |template=proc table 3 | ||

| + | |searchlabel= | ||

| + | |sort=microprocessor family, model number | ||

| + | |order=asc,asc | ||

| + | |userparam=16:15 | ||

| + | |mainlabel=- | ||

| + | |limit=60 | ||

| + | }} | ||

| + | {{comp table count|ask=[[Category:microprocessor models by intel]] [[instance of::microprocessor]] [[microarchitecture::Skylake (server)]]}} | ||

| + | </table> | ||

| + | {{comp table end}} | ||

| − | == | + | == References == |

| + | * Intel Unveils Powerful Intel Xeon Scalable Processors, Live Event, July 11, 2017 | ||

| + | * [[:File:intel xeon scalable processor architecture deep dive.pdf|Intel Xeon Scalable Processor Architecture Deep Dive]], Akhilesh Kumar & Malay Trivedi, Skylake-SP CPU & Lewisburg PCH Architects, June 12th, 2017. | ||

| + | * IEEE Hot Chips (HC29) 2017. | ||

| + | * IEEE ISSCC 2018 | ||

| − | * | + | == Documents == |

| + | * [[:File:Intel-Core-X-Series-Processor-Family Product-Information.pdf|New Intel Core X-Series Processor Family]] | ||

| + | * [[:File:intel-xeon-scalable-processors-product-brief.pdf|Intel Xeon (Skylake SP) Processors Product Brief]] | ||

| + | * [[:File:intel-xeon-scalable-processors-overview.pdf|Intel Xeon (Skylake SP) Processors Product Overview]] | ||

| + | * [[:File:intel-skylake-w-overview.pdf|Xeon (Skylake W) Workstations Overview]] | ||

| + | * [[:File:optimal hpc solutions for scalable xeons.pdf|Optimal HPC solutions with Intel Scalable Xeons]] | ||

Latest revision as of 23:59, 5 July 2022


| Skylake (server) µarch | |

| General Info | |

| Arch Type | CPU |

| Designer | Intel |

| Manufacturer | Intel |

| Introduction | May 4, 2017 |

| Process | 14 nm |

| Core Configs | 4, 6, 8, 10, 12, 14, 16, 18, 20, 22, 24, 26, 28 |

| Pipeline | |

| Type | Superscalar |

| OoOE | Yes |

| Speculative | Yes |

| Reg Renaming | Yes |

| Stages | 14-19 |

| Instructions | |

| ISA | x86-64 |

| Extensions | MOVBE, MMX, SSE, SSE2, SSE3, SSSE3, SSE4.1, SSE4.2, POPCNT, AVX, AVX2, AES, PCLMUL, FSGSBASE, RDRND, FMA3, F16C, BMI, BMI2, VT-x, VT-d, TXT, TSX, RDSEED, ADCX, PREFETCHW, CLFLUSHOPT, XSAVE, SGX, MPX, AVX-512 |

| Cache | |

| L1I Cache | 32 KiB/core 8-way set associative |

| L1D Cache | 32 KiB/core 8-way set associative |

| L2 Cache | 1 MiB/core 16-way set associative |

| L3 Cache | 1.375 MiB/core 11-way set associative |

| Cores | |

| Core Names | Skylake X, Skylake W, Skylake SP |

| Succession | |

| Predecessor | Broadwell |

| Successor | Cascade Lake |

| Contemporary | Skylake (client) |

Skylake (SKL) Server Configuration is Intel's successor to Broadwell. It is a microarchitecture for enthusiasts and servers, built on an enhanced 14 nm+ process. Skylake is the "Architecture" phase of Intel's PAO (Process-Architecture-Optimization) model. The microarchitecture was developed by Intel's R&D center in Haifa, Israel.

For desktop enthusiasts, Skylake is branded as Core i7 and Core i9 processors (under the Core X series). For scalable server-class processors, Intel branded it as Xeon Bronze, Xeon Silver, Xeon Gold, and Xeon Platinum.

The Skylake server configuration differs from the client configuration in a number of major ways.


Codenames

- See also: Client Skylake's Codenames

| Core | Abbrev | Platform | Target |
|---|---|---|---|
| Skylake SP | SKL-SP | Purley | Server Scalable Processors |
| Skylake X | SKL-X | Basin Falls | High-end desktops & enthusiasts market |
| Skylake W | SKL-W | Basin Falls | Enterprise/Business workstations |
| Skylake DE | SKL-DE | | Dense server/edge computing |

Brands

- See also: Client Skylake's Brands

With Skylake SP, Intel introduced several new server chip families. With Skylake X, it introduced a new enthusiast family.

| Family | General Description | Cores | HT | AVX | AVX2 | AVX-512 | TBT | ECC |
|---|---|---|---|---|---|---|---|---|
| Core i7 | Enthusiasts/High Performance (X) | 6 - 8 | ✔ | ✔ | ✔ | ✔ | ✔ | ✘ |
| Core i9 | Enthusiasts/High Performance | 10 - 18 | ✔ | ✔ | ✔ | ✔ | ✔ | ✘ |

| Family | General Description | Cores | HT | TBT | AVX-512 | AVX-512 Units | UPI links | Scalability |
|---|---|---|---|---|---|---|---|---|
| Xeon D | Dense servers / edge computing | 4-18 | ✔ | ✔ | ✔ | 1 | ✘ | |
| Xeon W | Business workstations | 4-18 | ✔ | ✔ | ✔ | 2 | ✘ | |
| Xeon Bronze | Entry-level performance / Cost-sensitive | 6 - 8 | ✘ | ✘ | ✔ | 1 | 2 | Up to 2 |
| Xeon Silver | Mid-range performance / Efficient lower power | 4 - 12 | ✔ | ✔ | ✔ | 1 | 2 | Up to 2 |
| Xeon Gold 5000 | High performance | 4 - 14 | ✔ | ✔ | ✔ | 1 | 2 | Up to 4 |
| Xeon Gold 6000 | Higher performance | 6 - 22 | ✔ | ✔ | ✔ | 2 | 3 | Up to 4 |
| Xeon Platinum | Highest performance / flexibility | 4 - 28 | ✔ | ✔ | ✔ | 2 | 3 | Up to 8 |

Release Dates

Skylake-based Core X was introduced in May 2017 while Skylake SP was introduced in July 2017.

Process Technology

- Main article: 14 nm lithography process

Unlike mainstream Skylake models, all Skylake server configuration models are fabricated on Intel's enhanced 14 nm+ process, the same process used by Kaby Lake.

Compatibility

| Vendor | OS | Version | Notes |
|---|---|---|---|
| Microsoft | Windows | Windows Server 2008 | Support |
| | | Windows Server 2008 R2 | |
| | | Windows Server 2012 | |
| | | Windows Server 2012 R2 | |
| | | Windows Server 2016 | |
| Linux | Linux | Kernel 3.19 | Initial Support (MPX support) |
| Apple | macOS | 10.12.3 | iMac Pro |

Compiler support

| Compiler | Arch-Specific | Arch-Favorable |
|---|---|---|
| ICC | -march=skylake-avx512 | -mtune=skylake-avx512 |
| GCC | -march=skylake-avx512 | -mtune=skylake-avx512 |
| LLVM | -march=skylake-avx512 | -mtune=skylake-avx512 |
| Visual Studio | /arch:AVX2 | /tune:skylake |

CPUID

| Core | Extended Family | Family | Extended Model | Model |
|---|---|---|---|---|
| X, SP, DE, W | 0 | 0x6 | 0x5 | 0x5 |
| | Family 6 Model 85 | | | |
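The "Family 6 Model 85" label comes from combining the table's fields. Intel's documented rule for family 0x6 is to prepend the extended model to the base model, which gives 0x55, or 85. The sketch below shows that decoding on a CPUID leaf 1 EAX value. The EAX value is hypothetical: it is built from the table's fields with an assumed stepping of 4. The function name is illustrative only.

```python
def decode_cpuid_signature(eax: int) -> dict:
    """Split a CPUID leaf 1 EAX value into fields and compute the
    display family/model (sketch of the rule in Intel's SDM)."""
    stepping = eax & 0xF
    model = (eax >> 4) & 0xF
    family = (eax >> 8) & 0xF
    ext_model = (eax >> 16) & 0xF
    ext_family = (eax >> 20) & 0xFF

    # The extended family is only added when the base family is 0xF.
    display_family = family + ext_family if family == 0xF else family
    # The extended model is prepended for families 0x6 and 0xF.
    if family in (0x6, 0xF):
        display_model = (ext_model << 4) | model
    else:
        display_model = model
    return {"stepping": stepping, "family": display_family, "model": display_model}


# Hypothetical EAX assembled from the table: ext family 0, ext model 0x5,
# family 0x6, model 0x5, plus an assumed stepping of 4.
eax = (0x0 << 20) | (0x5 << 16) | (0x6 << 8) | (0x5 << 4) | 0x4
sig = decode_cpuid_signature(eax)
print(hex(eax), sig["family"], sig["model"])  # 0x50654 6 85
```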

Architecture

The Skylake server configuration introduces a number of significant changes from both Intel's previous microarchitecture, Broadwell, and the Skylake (client) architecture. Unlike client models, Skylake server and HEDT models still incorporate the fully integrated voltage regulator (FIVR) on-die. These chips also introduce an entirely new multi-core system architecture that replaces the ring topology with a mesh interconnect network.

Key changes from Broadwell

- Improved "14 nm+" process (see Kaby Lake § Process Technology)
- Omni-Path Architecture (OPA)
- Mesh architecture (from ring)
- Sub-NUMA Clustering (SNC) support (replaces the Cluster-on-Die (COD) implementation)
- Chipset
- Core
  - All the changes from Skylake Client (for the full list, see Skylake (Client) § Key changes from Broadwell)
  - Front End
    - LSD is disabled (likely due to a bug; see § Front-end for details)
  - Back-end
    - Port 4 now performs 512b stores (from 256b)
    - Port 0 & Port 1 can now be fused to perform AVX-512
    - Port 5 can now perform full 512b operations (not on all models)
  - Memory Subsystem
    - Larger store buffer (56 entries, up from 42)
    - Page split load penalty reduced 20-fold
    - Larger write-back buffer
    - Store is now 64B/cycle (from 32B/cycle)
    - Load is now 2x64B/cycle (from 2x32B/cycle)
- New Features
  - Adaptive Double Device Data Correction (ADDDC)
- Memory
  - L2$
    - Increased to 1 MiB/core (from 256 KiB/core)
    - Latency increased from 12 to 14 cycles
  - L3$
    - Reduced to 1.375 MiB/core (from 2.5 MiB/core)
    - Now non-inclusive (was inclusive)
  - DRAM
    - Hex-channel DDR4-2666 (from quad-channel)
  - TLBs
    - ITLB
      - 4 KiB page translations changed from 4-way to 8-way associative
    - STLB
      - 4 KiB + 2 MiB page translations changed from 6-way to 12-way associative
  - DMI/PEG are now on a discrete clock domain, with BCLK on its own domain with full-range granularity (1 MHz intervals)
- Testability
  - New support for Direct Connect Interface (DCI), a new debugging transport protocol designed to allow debugging of closed cases (e.g. laptops, embedded) by accessing things such as JTAG through any USB 3 port.
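The wider L1D paths listed under Memory Subsystem convert directly into peak bandwidth at a given core clock, because bytes/cycle × GHz = GB/s. The sketch below does this arithmetic. The 2.0 GHz clock is an arbitrary, hypothetical value chosen only for illustration. The results are theoretical peaks, not measured figures.

```python
# Per-cycle L1D widths from the key-changes list (bytes per cycle, per core).
LOAD_BYTES_PER_CYCLE = 2 * 64  # two 64 B loads per cycle
STORE_BYTES_PER_CYCLE = 64     # one 64 B store per cycle


def l1d_bandwidth_gbs(bytes_per_cycle: int, clock_ghz: float) -> float:
    """Theoretical peak in GB/s (1e9 bytes/s) for one core at clock_ghz."""
    return bytes_per_cycle * clock_ghz


clock = 2.0  # hypothetical core clock in GHz, for illustration only
print(l1d_bandwidth_gbs(LOAD_BYTES_PER_CYCLE, clock))   # 256.0
print(l1d_bandwidth_gbs(STORE_BYTES_PER_CYCLE, clock))  # 128.0
```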

CPU changes

See Skylake (Client) § CPU changes

New instructions

- See also: Client Skylake's New instructions

Skylake server introduced a number of new instructions:

- MPX - Memory Protection Extensions
- XSAVEC - Save processor extended states with compaction to memory
- XSAVES - Save processor supervisor-mode extended states to memory
- CLFLUSHOPT - Flush and invalidate the memory operand and its associated cache line (all of L1/L2/L3 etc.)
- AVX-512, specifically: AVX512F, AVX512CD, AVX512BW, AVX512DQ, AVX512VL
- PKU - Memory Protection Keys for Userspace
- PCOMMIT - PCOMMIT instruction (deprecated by Intel in late 2016)
- CLWB - Force cache line write-back without flush
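On Linux, a quick way to check whether a machine exposes these extensions is to read the `flags` line of `/proc/cpuinfo`. The sketch below assumes the kernel's lowercase flag spellings (for example `avx512f`, `clwb`, `pku`) and checks only a subset of the list above. MPX and PCOMMIT are omitted. The function and constant names are hypothetical helpers, not part of any library.

```python
# Subset of the instructions above, spelled as Linux reports them in /proc/cpuinfo.
SKYLAKE_SERVER_FLAGS = {
    "avx512f", "avx512cd", "avx512bw", "avx512dq", "avx512vl",
    "clflushopt", "clwb", "xsavec", "xsaves", "pku",
}


def cpu_flags(cpuinfo_text: str) -> set:
    """Return the flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def missing_features(cpuinfo_text: str, wanted=SKYLAKE_SERVER_FLAGS) -> set:
    """Flags from `wanted` that do not appear in the cpuinfo text."""
    return set(wanted) - cpu_flags(cpuinfo_text)


# Usage on a real Linux system:
#   with open("/proc/cpuinfo") as f:
#       print(sorted(missing_features(f.read())))
```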

Block Diagram

Entire SoC Overview

LCC SoC

HCC SoC

XCC SoC

Individual Core

Memory Hierarchy

Skylake's server configuration makes major organizational changes to the cache hierarchy relative to Broadwell/Haswell. The memory hierarchy of Skylake's server and HEDT processors has been rebalanced. The L3 is now non-inclusive, and some of the SRAM from the L3 cache was moved into the private L2 cache.
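The per-core figures from the key-changes list show what this rebalancing does to raw capacity. The sketch below simply adds L2 and L3 per core for each design and ignores inclusivity. As a rough interpretation: with Broadwell's inclusive L3, L2 contents are duplicated in the L3, so distinct cached data per core is bounded by the 2.5 MiB L3. With Skylake's non-inclusive L3, up to the full L2 + L3 sum can hold distinct lines.

```python
# Per-core cache capacities in MiB, taken from the key-changes list.
broadwell = {"L2": 0.25, "L3": 2.5}    # 256 KiB L2, 2.5 MiB L3 per core
skylake_sp = {"L2": 1.0, "L3": 1.375}  # 1 MiB L2, 1.375 MiB L3 per core


def total_per_core(caches: dict) -> float:
    """Raw L2 + L3 SRAM per core in MiB, ignoring inclusivity."""
    return caches["L2"] + caches["L3"]


print(total_per_core(broadwell))   # 2.75
print(total_per_core(skylake_sp))  # 2.375
```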

- Cache
  - L0 µOP cache:
    - 1,536 µOPs/core, 8-way set associative
      - 32 sets, 6-µOP line size
      - statically divided between threads, inclusive with L1I
  - L1I Cache:
    - 32 KiB/core, 8-way set associative
      - 64 sets, 64 B line size
      - competitively shared by the threads/core
  - L1D Cache:
    - 32 KiB/core, 8-way set associative
    - 64 sets, 64 B line size
    - competitively shared by threads/core
    - 4 cycles for fastest load-to-use (simple pointer accesses)
    - 5 cycles for complex addresses
    - 128 B/cycle load bandwidth
    - 64 B/cycle store bandwidth
    - Write-back policy
  - L2 Cache:
    - 1 MiB/core, 16-way set associative
    - 64 B line size
    - Inclusive
    - 64 B/cycle bandwidth to L1$
    - Write-back policy
    - 14 cycles latency
  - L3 Cache:
    - 1.375 MiB/core, 11-way set associative, shared across all cores
      - Note that a few models have non-default cache sizes due to disabled cores
      - 2,048 sets, 64 B line size
      - Non-inclusive victim cache
      - Write-back policy
      - 50-70 cycles latency
  - Snoop Filter (SF):
    - 2,048 sets, 12-way set associative

- DRAM
  - 6 channels of DDR4, up to 2666 MT/s
    - RDIMM and LRDIMM
    - bandwidth of 21.33 GB/s per channel
    - aggregated bandwidth of 128 GB/s
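Several numbers in the hierarchy above follow from simple geometry. The set count of a cache is capacity / (ways × line size). Peak DDR4 channel bandwidth is transfer rate × 8 bytes, because DDR4 uses a 64-bit data bus. The sketch below checks the listed set counts and DRAM bandwidths against the listed capacities and speeds. The helper names are illustrative only.

```python
KiB, MiB = 1 << 10, 1 << 20


def num_sets(capacity: int, ways: int, line_size: int = 64) -> int:
    """sets = capacity / (ways * line size); the geometry must divide evenly."""
    sets, rem = divmod(capacity, ways * line_size)
    assert rem == 0, "geometry does not divide evenly"
    return sets


uop_sets = num_sets(1536, ways=8, line_size=6)  # 6 µOPs per line -> 32
l1d_sets = num_sets(32 * KiB, ways=8)           # 64
l2_sets = num_sets(1 * MiB, ways=16)            # 1024
l3_sets = num_sets(int(1.375 * MiB), ways=11)   # per-core slice -> 2048


def channel_bandwidth_gbs(mt_per_s: float, bus_bytes: int = 8) -> float:
    """Peak per-channel bandwidth: MT/s x 8-byte bus = MB/s, / 1000 = GB/s."""
    return mt_per_s * bus_bytes / 1000


per_channel = channel_bandwidth_gbs(2666)  # ~21.33 GB/s
total = 6 * per_channel                    # ~128 GB/s across six channels
print(uop_sets, l1d_sets, l2_sets, l3_sets)  # 32 64 1024 2048
print(round(per_channel, 2), round(total))   # 21.33 128
```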

Skylake's TLB hierarchy consists of a dedicated L1 TLB for the instruction cache (ITLB) and another for the data cache (DTLB), plus a unified L2 TLB (STLB).

- TLBs:
  - ITLB
    - 4 KiB page translations:
      - 128 entries; 8-way set associative
      - dynamic partitioning
    - 2 MiB / 4 MiB page translations:
      - 8 entries per thread; fully associative
      - Duplicated for each thread
  - DTLB
    - 4 KiB page translations:
      - 64 entries; 4-way set associative
      - fixed partition
    - 2 MiB / 4 MiB page translations:
      - 32 entries; 4-way set associative
      - fixed partition
    - 1 GiB page translations:
      - 4 entries; 4-way set associative
      - fixed partition
  - STLB
    - 4 KiB + 2 MiB page translations:
      - 1536 entries; 12-way set associative. (Note: CPUID leaf 2 (EAX=02H) incorrectly reports the STLB as "6-way". Skylake erratum SKL148 recommends that software ignore that value.)
      - fixed partition
    - 1 GiB page translations:
      - 16 entries; 4-way set associative
      - fixed partition
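A useful way to read these entry counts is as TLB reach: the amount of address space covered without a page walk, which is entries × page size. The sketch below computes reach for a few of the listed structures. It assumes every entry is populated. It also assumes the shared 4 KiB + 2 MiB STLB holds only one page size at a time, so it gives upper bounds rather than typical values. The STLB set count follows from 1536 entries / 12 ways.

```python
KiB, MiB, GiB = 1 << 10, 1 << 20, 1 << 30


def tlb_reach(entries: int, page_size: int) -> int:
    """Bytes of address space covered if every entry maps one page."""
    return entries * page_size


dtlb_4k = tlb_reach(64, 4 * KiB)    # 256 KiB
stlb_4k = tlb_reach(1536, 4 * KiB)  # 6 MiB (all entries holding 4 KiB pages)
stlb_2m = tlb_reach(1536, 2 * MiB)  # 3 GiB (all entries holding 2 MiB pages)
stlb_1g = tlb_reach(16, 1 * GiB)    # 16 GiB
stlb_sets = 1536 // 12              # 128 sets

print(dtlb_4k // KiB, stlb_4k // MiB, stlb_2m // GiB, stlb_1g // GiB, stlb_sets)
```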

Overview