
|manufacturer=Intel | |manufacturer=Intel | ||

|introduction=2019 | |introduction=2019 | ||

| + | |phase-out=2021 | ||

|process=10 nm | |process=10 nm | ||

| + | |cores=2 | ||

| + | |cores 2=4 | ||

| + | |cores 3=8 | ||

| + | |cores 4=10 | ||

| + | |cores 5=12 | ||

| + | |cores 6=16 | ||

| + | |cores 7=18 | ||

| + | |cores 8=20 | ||

| + | |cores 9=24 | ||

| + | |cores 10=26 | ||

| + | |cores 11=28 | ||

| + | |cores 12=32 | ||

| + | |cores 13=36 | ||

| + | |cores 14=38 | ||

| + | |cores 15=40 | ||

| + | |type=Superscalar | ||

| + | |oooe=Yes | ||

| + | |speculative=Yes | ||

| + | |renaming=Yes | ||

| + | |stages min=14 | ||

| + | |stages max=19 | ||

|isa=x86-64 | |isa=x86-64 | ||

| + | |extension=MOVBE | ||

| + | |extension 2=MMX | ||

| + | |extension 3=SSE | ||

| + | |extension 4=SSE2 | ||

| + | |extension 5=SSE3 | ||

| + | |extension 6=SSSE3 | ||

| + | |extension 7=SSE4.1 | ||

| + | |extension 8=SSE4.2 | ||

| + | |extension 9=POPCNT | ||

| + | |extension 10=AVX | ||

| + | |extension 11=AVX2 | ||

| + | |extension 12=AES | ||

| + | |extension 13=PCLMUL | ||

| + | |extension 14=FSGSBASE | ||

| + | |extension 15=RDRND | ||

| + | |extension 16=FMA3 | ||

| + | |extension 17=F16C | ||

| + | |extension 18=BMI | ||

| + | |extension 19=BMI2 | ||

| + | |extension 20=VT-x | ||

| + | |extension 21=VT-d | ||

| + | |extension 22=TXT | ||

| + | |extension 23=TSX | ||

| + | |extension 24=RDSEED | ||

| + | |extension 25=ADCX | ||

| + | |extension 26=PREFETCHW | ||

| + | |extension 27=CLFLUSHOPT | ||

| + | |extension 28=XSAVE | ||

| + | |extension 29=SGX | ||

| + | |extension 30=MPX | ||

| + | |extension 31=AVX-512 | ||

| + | |l1i=32 KiB | ||

| + | |l1i per=core | ||

| + | |l1i desc=8-way set associative | ||

| + | |l1d=48 KiB | ||

| + | |l1d per=core | ||

| + | |l1d desc=12-way set associative | ||

| + | |l2=512 KiB | ||

| + | |l2 per=core | ||

| + | |l2 desc=8-way set associative | ||

| + | |l3=2 MiB | ||

| + | |l3 per=core | ||

| + | |l3 desc=16-way set associative | ||

| + | |core name=Spring Hill | ||

| + | |core name 2=Lakefield | ||

| + | |core name 3=Ice Lake (Client) | ||

| + | |core name 4=Ice Lake (Server) | ||

|predecessor=Palm Cove | |predecessor=Palm Cove | ||

|predecessor link=intel/microarchitectures/palm cove | |predecessor link=intel/microarchitectures/palm cove | ||

|successor link=intel/microarchitectures/willow cove | |successor link=intel/microarchitectures/willow cove | ||

}} | }} | ||

| − | '''Sunny Cove''' | + | '''Sunny Cove''' ('''SNC'''), the successor to {{\\|Palm Cove}}, is a high-performance [[10 nm]] [[x86]]-64 core microarchitecture designed by [[Intel]] for an array of server and client products, including {{\\|Ice Lake (Client)}}, {{\\|Ice Lake (Server)}}, {{\\|Lakefield}}, and the Nervana {{nervana|NNP-I}}. The microarchitecture was developed by Intel's R&D Center (IDC) in Haifa, Israel. |

== History == | == History == | ||

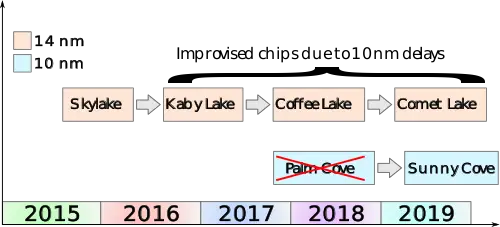

| − | [[File:sunny cove roadmap.png|thumb|right| | + | [[File:sunny cove roadmap.png|thumb|right|200px|Intel Core roadmap]] |

| − | Sunny Cove was originally unveiled by Intel at their 2018 architecture day. Intel originally intended for Sunny Cove to succeed {{\\|Palm Cove}} in late 2017 which | + | Sunny Cove was originally unveiled by Intel at their 2018 Architecture Day. Intel originally intended for Sunny Cove to succeed {{\\|Palm Cove}} in late 2017; Palm Cove was intended to be the first [[10 nm]]-based core and the proper successor to {{\\|Skylake (client)|Skylake}}. Prolonged delays and problems with Intel's [[10 nm process]] resulted in a number of improvised derivatives of {{\\|Skylake (client)|Skylake}}, including {{\\|Kaby Lake}}, {{\\|Coffee Lake}}, and {{\\|Comet Lake}}. For all practical purposes, {{\\|Palm Cove}} was skipped and Intel went directly to Sunny Cove, which debuted in mid-2019. |

:[[File:14nm improv 10 delays.svg|500px]] | :[[File:14nm improv 10 delays.svg|500px]] | ||

== Process Technology == | == Process Technology == | ||

| − | Sunny Cove is designed to take advantage of Intel's | + | Sunny Cove is designed to take advantage of [[intel 10nm|Intel's 10 nm process]]. |

| + | |||

| + | == Implementations == | ||

| + | The Sunny Cove core is integrated into a number of Intel designs. | ||

| + | |||

| + | {| class="wikitable" style="text-align: center;" | ||

| + | | colspan="3" | Chips with Intel Sunny Cove | ||

| + | |- | ||

| + | | Chip || Instances || Notes | ||

| + | |- | ||

| + | | {{\\|Lakefield}} || 1 || Heterogeneous [[pentacore]] | ||

| + | |- | ||

| + | | {{\\|Spring Hill}} || 2 || Used for {{nervana|NNP-I}} [[NPU]]s | ||

| + | |- | ||

| + | | {{\\|Ice Lake (Client)}} || 2-4 || Mobile/Desktop processors | ||

| + | |- | ||

| + | | {{\\|Ice Lake (Server)}} || 40 || Workstation/Server processors | ||

| + | |} | ||

== Architecture == | == Architecture == | ||

| − | === Key changes from {{\\|Palm Cove}}=== | + | |

| − | {{ | + | {| border="0" cellpadding="1" width="100%" |

| + | |- | ||

| + | |width="30%" valign="top" align="left"| | ||

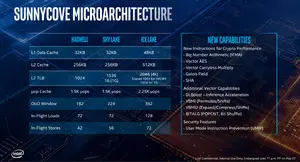

| + | [[File:sunny cove buffer capacities.png|thumb|left|Sunny Cove buffers]] | ||

| + | |width="30%" valign="top" align="left"| | ||

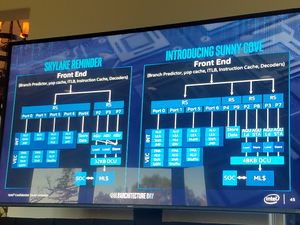

| + | [[File:skylake - sunny cove changes block.jpg|thumb|left|Skylake to Sunny Cove changes]] | ||

| + | |width="30%" valign="top" align="left"| | ||

| + | [[File:sunny cove enhancements.jpg|thumb|left|Sunny Cove enhancements]] | ||

| + | |} | ||

| + | |||

| + | === Key changes from {{\\|Palm Cove}}/{{\\|Skylake}}=== | ||

| + | {| border="0" cellpadding="5" width="100%" | ||

| + | |- | ||

| + | |width="50%" valign="top" align="left"| | ||

| + | * Performance | ||

| + | ** [[IPC]] uplift ([[Intel]] self-reported average 18-20% IPC across proxy <br>benchmarks such as [[SPEC CPU2006]]/[[SPEC CPU2017]]) | ||

| + | * Front-end | ||

| + | ** 1.5x larger µOP cache (2.3K entries, up from 1536) | ||

| + | ** Smarter [[prefetchers]] | ||

| + | ** Improved [[branch predictor]] | ||

| + | ** ITLB | ||

| + | *** 2x more 2 MiB page entries (16 entries, up from 8) | ||

| + | ** Larger IDQ (70 µOPs, up from 64) | ||

| + | ** LSD can detect up to 70 µOP loops (up from 64) | ||

| + | * Back-end | ||

| + | ** Wider allocation (5-way, up from 4-way in {{\\|Skylake}}) | ||

| + | ** Wider decoding with an additional simple decoder <br>(from a 4-wide decoder with 3 simple + 1 complex in {{\\|Skylake}} <br>to a 5-wide decoder with 4 simple + 1 complex in {{\\|Sunny Cove}}) | ||

| + | ** Delivery throughput remains 6 µOPs/cycle, same as {{\\|Skylake}} | ||

| + | ** 1.6x larger ROB (352, up from 224 entries) | ||

| + | ** Scheduler | ||

| + | *** 1.65x larger scheduler (160-entry, up from 97 entries) | ||

| + | *** Larger dispatch (10-way, up from 8-way) | ||

| + | ** 1.55x larger integer register file (280-entry, up from 180) | ||

| + | ** 1.33x larger vector register file (224-entry, up from 168) | ||

| + | ** Distributed scheduling queues (4 scheduling queues, up from 2) | ||

| + | *** New dedicated queue for store data | ||

| + | *** Replaced 2 generic AGUs with two load AGUs | ||

| + | *** Load/store pairs have dedicated queues | ||

| + | **** New paired store capabilities | ||

| + | |width="50%" valign="top" align="left"| | ||

| + | * Execution Engine | ||

| + | ** Execution ports rebalanced | ||

| + | ** 2x store data ports (up from 1) | ||

| + | ** 2x store address AGUs (up from 1) | ||

| + | * Memory subsystem | ||

| + | ** Data Cache | ||

| + | *** DTLB now split into separate load and store TLBs | ||

| + | *** Load | ||

| + | **** DTLB 4 KiB TLB competitively shared (from fixed partitioning) | ||

| + | **** DTLB 2 MiB / 4 MiB TLB competitively shared (from fixed partitioning) | ||

| + | **** 2x larger DTLB 1 GiB page entries (8-entry, up from 4) | ||

| + | *** Store | ||

| + | **** New DTLB for stores | ||

| + | **** 16-entry, all page sizes | ||

| + | ** STLB | ||

| + | *** Single unified TLB for all page sizes (from 4 KiB+2/4 MiB and separate 1 GiB) | ||

| + | *** STLB uses dynamic partitioning (from fixed partitioning) | ||

| + | ** LSU | ||

| + | *** 1.8x more inflight loads (128, up from 72 entries) | ||

| + | *** 1.3x more inflight stores (72, up from 56 entries) | ||

| + | ** 1.5x larger L1 data cache (48 KiB, up from 32 KiB) | ||

| + | ** 2x larger L2 cache (512 KiB, up from 256 KiB) | ||

| + | *** Larger STLBs | ||

| + | **** 1.33x larger 4 KiB table (2048 entries, up from 1536) | ||

| + | ** 5-Level Paging | ||

| + | *** Larger virtual addresses (57 bits, up from 48 bits) | ||

| + | *** Significantly larger virtual address space (128 PiB, up from 256 TiB) | ||

| + | |} | ||
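The virtual address figures above follow directly from the paging arithmetic. The sketch below is illustrative only and assumes standard x86-64 paging with 4 KiB pages, a 12-bit page offset, and 9 index bits (512 entries) per paging-structure level; the names `va_bits` and `split_va` are hypothetical.

```python
from typing import List, Tuple

PAGE_OFFSET_BITS = 12   # 4 KiB pages (assumption for this sketch)
INDEX_BITS = 9          # 512 entries per paging-structure level
LEVELS = 5              # 5-level paging


def va_bits(levels: int) -> int:
    """Virtual-address bits translated by `levels` levels of 4 KiB paging."""
    return PAGE_OFFSET_BITS + levels * INDEX_BITS


def split_va(va: int, levels: int = LEVELS) -> Tuple[List[int], int]:
    """Split a virtual address into per-level table indices (top level
    first) and the byte offset within the 4 KiB page."""
    offset = va & ((1 << PAGE_OFFSET_BITS) - 1)
    indices = []
    for level in range(levels - 1, -1, -1):
        shift = PAGE_OFFSET_BITS + level * INDEX_BITS
        indices.append((va >> shift) & ((1 << INDEX_BITS) - 1))
    return indices, offset


if __name__ == "__main__":
    print(va_bits(4), va_bits(5))                  # 48 57
    print(2 ** va_bits(4) // 2 ** 40, "TiB")       # 256 TiB
    print(2 ** va_bits(5) // 2 ** 50, "PiB")       # 128 PiB
    print(split_va((1 << 48) | (3 << 12) | 0x2A))  # ([1, 0, 0, 0, 3], 42)
```

Adding one 9-bit level is what moves the address width from 48 to 57 bits, multiplying the addressable space by 512.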

| + | |||

| + | ==== New instructions ==== | ||

| + | Sunny Cove introduced a number of {{x86|extensions|new instructions}}: | ||

| + | {| border="0" cellpadding="5" width="100%" | ||

| + | |- | ||

| + | |width="50%" valign="top" align="left"| | ||

| + | * {{x86|SHA|<code>SHA</code>}} - [[Hardware acceleration]] for SHA hashing operations | ||

| + | * {{x86|RDPID|<code>RDPID</code>}} - Read Processor ID | ||

| + | * Additional {{x86|AVX-512}} extensions: | ||

| + | ** {{x86|AVX512VPOPCNTDQ|<code>AVX512VPOPCNTDQ</code>}} - AVX-512 Vector Population Count <br>Doubleword and Quadword | ||

| + | ** {{x86|AVX512VNNI|<code>AVX512VNNI</code>}} - AVX-512 Vector Neural Network Instructions | ||

| + | ** {{x86|AVX512GFNI|<code>AVX512GFNI</code>}} - AVX-512 Galois Field New Instructions | ||

| + | ** {{x86|AVX512VAES|<code>AVX512VAES</code>}} - AVX-512 Vector AES | ||

| + | ** {{x86|AVX512VBMI2|<code>AVX512VBMI2</code>}} - AVX-512 Vector Bit Manipulation, Version 2 | ||

| + | ** {{x86|AVX512BITALG|<code>AVX512BITALG</code>}} - AVX-512 Bit Algorithms | ||

| + | ** {{x86|AVX512VPCLMULQDQ|<code>AVX512VPCLMULQDQ</code>}} - AVX-512 Vector Carry-less Multiply | ||

| + | |width="50%" valign="top" align="left"| | ||

| + | * {{x86|CLWB|<code>CLWB</code>}} - Force cache line write-back without flush | ||

| + | * {{x86|SSE_GFNI|<code>SSE_GFNI</code>}} - SSE-based Galois Field New Instructions | ||

| + | * {{x86|AVX_GFNI|<code>AVX_GFNI</code>}} - AVX-based Galois Field New Instructions | ||

| + | * Split Lock Detection - detects split locks and raises an exception | ||

| + | * Fast Short REP MOV | ||

| + | Only on server parts ({{\\|Ice Lake (Server)}}): | ||

| + | * {{x86|TME|<code>TME</code>}} - Total Memory Encryption | ||

| + | * {{x86|PCONFIG|<code>PCONFIG</code>}} - Platform Configuration | ||

| + | * {{x86|WBNOINVD|<code>WBNOINVD</code>}} - Write-back and do not invalidate cache | ||

| + | * {{x86|ENCLV|<code>ENCLV</code>}} - SGX oversubscription instructions | ||

| + | |} | ||

| + | |||

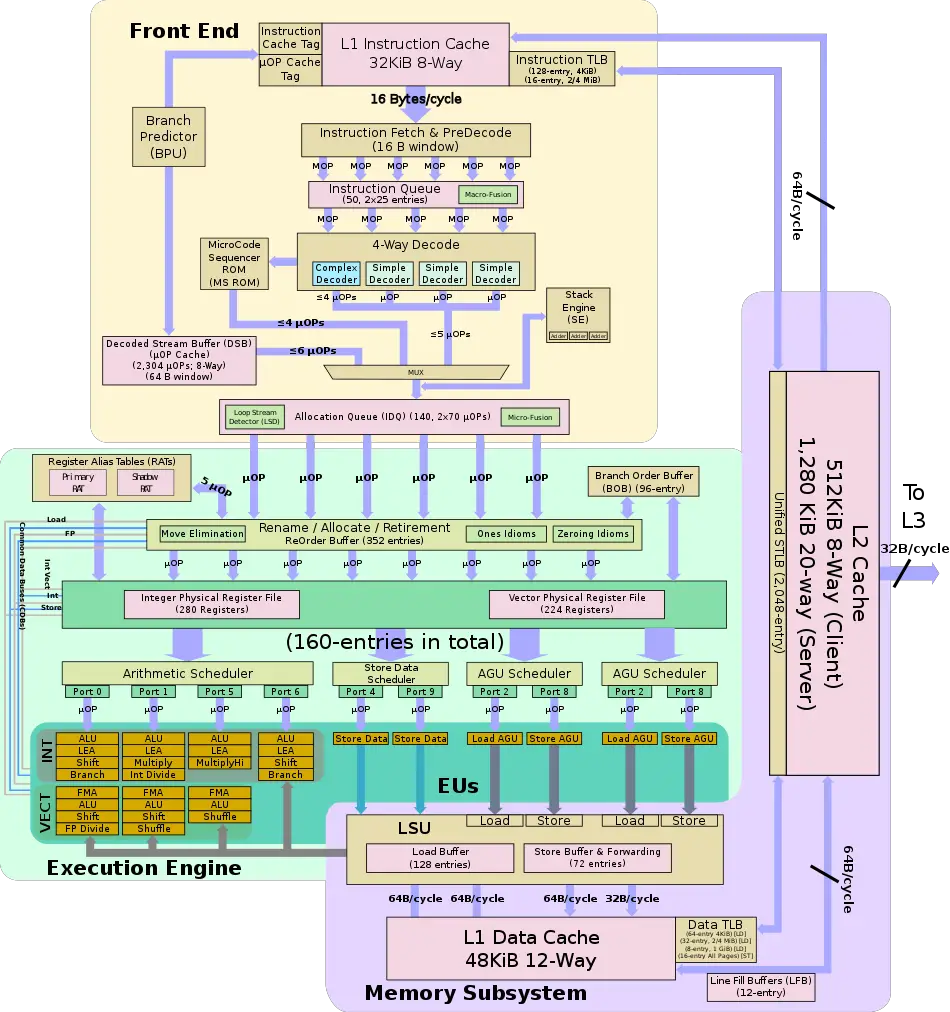

| + | === Block diagram === | ||

| + | :[[File:sunny cove block diagram.svg|950px]] | ||

| + | |||

| + | === Memory Hierarchy === | ||

| + | The {{\\|Sunny Cove}} TLB hierarchy consists of a dedicated L1 TLB for the instruction cache (ITLB) and another for the data cache (DTLB). Additionally, there is a unified L2 TLB (STLB). | ||

| + | {| border="0" cellpadding="5" width="100%" | ||

| + | |- | ||

| + | |width="45%" valign="top" align="left"| | ||

| + | :; Cache | ||

| + | * L0 µOP cache: | ||

| + | ** 2,304 µOPs, 8-way set associative | ||

| + | *** 48 sets, 6-µOP line size | ||

| + | *** statically divided between threads, per core, inclusive with L1I | ||

| + | * L1I Cache: | ||

| + | ** 32 [[KiB]], 8-way set associative | ||

| + | *** 64 sets, 64 B line size | ||

| + | *** shared by the two threads, per core | ||

| + | * L1D Cache: | ||

| + | ** 48 KiB, 12-way set associative | ||

| + | ** 64 sets, 64 B line size | ||

| + | ** shared by the two threads, per core | ||

| + | ** 4 cycles for fastest load-to-use (simple pointer accesses) | ||

| + | *** 5 cycles for complex addresses | ||

| + | ** Bandwidth | ||

| + | *** 2x 64 B/cycle load + 1x64 B/cycle store | ||

| + | *** OR 2x32 B/cycle store | ||

| + | ** Write-back policy | ||

| + | * L2 Cache: | ||

| + | ** Client | ||

| + | *** Unified, 512 KiB, 8-way set associative | ||

| + | *** 1024 sets, 64 B line size | ||

| + | ** Server | ||

| + | *** Unified, 1,280 KiB, 20-way set associative | ||

| + | *** 1024 sets, 64 B line size | ||

| + | ** Non-inclusive | ||

| + | ** 13 cycles for fastest load-to-use | ||

| + | ** 64 B/cycle bandwidth to L1$ | ||

| + | ** Write-back policy | ||

| + | |width="55%" valign="top" align="left"| | ||

| + | :; TLBs: | ||

| + | * ITLB | ||

| + | ** 4 KiB page translations: | ||

| + | *** 128 entries; 8-way set associative | ||

| + | *** dynamic partitioning | ||

| + | ** 2 MiB / 4 MiB page translations: | ||

| + | *** 16 entries per thread; fully associative | ||

| + | *** Duplicated for each thread | ||

| + | * DTLB | ||

| + | ** Load | ||

| + | *** 4 KiB page translations: | ||

| + | **** 64 entries; 4-way set associative | ||

| + | **** competitively shared | ||

| + | *** 2 MiB / 4 MiB page translations: | ||

| + | **** 32 entries; 4-way set associative | ||

| + | **** competitively shared | ||

| + | *** 1 GiB page translations: | ||

| + | **** 8 entries; 8-way set associative | ||

| + | **** competitively partitioned | ||

| + | ** Store | ||

| + | *** All pages: | ||

| + | **** 16 entries; 16-way set associative | ||

| + | **** competitively partitioned | ||

| + | * STLB | ||

| + | ** All pages: | ||

| + | *** 2,048 entries; 16-way set associative | ||

| + | *** Partitioning: | ||

| + | **** 4 KiB pages can use all 2,048 entries | ||

| + | **** 2/4 MiB pages can use 1,024 entries (8-way sets), shared with 4 KiB | ||

| + | **** 1 GiB pages can use 1,024 entries (8-way sets), shared with 4 KiB pages | ||

| + | |} | ||
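The set counts listed above can be cross-checked from capacity, associativity, and line size (sets = capacity / (ways × line size)). This is a minimal sketch; the `set_index` helper assumes plain modulo indexing on the line address, which is an illustrative simplification rather than a documented Sunny Cove detail.

```python
KIB = 1024
LINE_BYTES = 64  # all caches listed above use 64 B lines


def num_sets(capacity_bytes: int, ways: int, line_bytes: int = LINE_BYTES) -> int:
    """sets = capacity / (ways * line size); must divide evenly."""
    sets, rem = divmod(capacity_bytes, ways * line_bytes)
    if rem:
        raise ValueError("capacity is not a whole number of sets")
    return sets


def set_index(addr: int, sets: int, line_bytes: int = LINE_BYTES) -> int:
    """Set selected by an address, assuming plain modulo indexing
    (an illustrative simplification)."""
    return (addr // line_bytes) % sets


CACHES = {
    "L1I": (32 * KIB, 8),
    "L1D": (48 * KIB, 12),
    "L2 (client)": (512 * KIB, 8),
    "L2 (server)": (1280 * KIB, 20),
}

if __name__ == "__main__":
    for name, (capacity, ways) in CACHES.items():
        print(name, num_sets(capacity, ways), "sets")
    # L0 µOP cache: 48 sets x 8 ways x 6 µOPs per line
    print("µOP cache:", 48 * 8 * 6, "µOPs")
```

The L1D grows from 32 KiB to 48 KiB by going from 8 to 12 ways while keeping 64 sets, and the server L2 likewise keeps 1024 sets while widening to 20 ways.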

== Overview == | == Overview == | ||

| − | Sunny Cove is Intel's | + | Sunny Cove is Intel's microarchitecture for their [[big core|big CPU core]]; it succeeds {{\\|Palm Cove}} (and effectively the {{\\|Skylake (client)|Skylake}} series of derivatives) in a number of client and server chips. Sunny Cove is incorporated into numerous Intel chips, including {{\\|Lakefield}}, {{\\|Ice Lake (Client)}}, and {{\\|Ice Lake (Server)}}, as well as the [[Nervana]] {{nervana|NNP}} accelerator. Sunny Cove introduces a large set of enhancements that improve the performance of both legacy and new code through the extraction of additional parallelism as well as new features. These include a deeper [[out-of-order]] window, a wider execution back-end, higher load-store bandwidth, lower effective access latencies, and bigger caches. |

| + | |||

| + | == Pipeline == | ||

| + | Like its predecessors, Sunny Cove focuses on extracting performance and reducing power through a number of key ways. Intel builds Sunny Cove on previous microarchitectures, descendants of {{\\|Sandy Bridge}}. For the core to increase the overall performance, Intel focused on extracting additional parallelism. | ||

| + | |||

| + | ==== Broad Overview ==== | ||

| + | At a 5,000 foot view, Sunny Cove represents the logical evolution from {{\\|Skylake}} and {{\\|Haswell}}. Despite some significant differences from the previous microarchitecture, the overall design is fundamentally the same and is best seen as a set of enhancements over {{\\|Skylake}} rather than a complete change. | ||

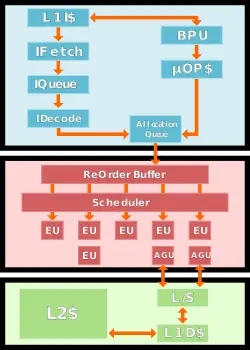

| + | [[File:intel common arch post ucache.svg|left|250px]] | ||

| + | The pipeline can be broken down into three areas: the front-end, back-end or execution engine, and the memory subsystem. The goal of the [[front-end]] is to feed the back-end with a sufficient stream of operations which it gets by [[decoding instructions]] coming from memory. The front-end has two major pathways: the [[µOPs cache]] path and the legacy path. The legacy path is the traditional path whereby variable-length [[x86]] instructions are fetched from the [[level 1 instruction cache]], queued, and consequently get decoded into simpler, fixed-length [[µOPs]]. The alternative and much more desired path is the µOPs cache path whereby a [[cache]] containing already decoded µOPs receives a hit allowing the µOPs to be sent directly to the decode queue. | ||

| + | |||

| + | Regardless of which path an instruction ends up taking, it will eventually arrive at the instruction decode queue (IDQ). The IDQ represents the end of the front-end and the [[in-order]] part of the machine and the start of the execution engine, which operates [[out-of-order]]. | ||

| + | |||

| + | In the back-end, the micro-operations visit the [[reorder buffer]]. It is there that register allocation, renaming, and [[instruction retired|retiring]] take place. At this stage a number of other optimizations are also done. From the reorder buffer, µOPs are sent to the unified scheduler. The scheduler has a number of exit ports, each wired to a set of different execution units. Some units can perform basic ALU operations, others can do multiplication and division, with some units capable of more complex operations such as various vector operations. The scheduler is effectively in charge of queuing the µOPs on the appropriate port so they can be executed by the appropriate unit. | ||

| + | |||

| + | Some µOPs deal with memory access (e.g. [[instruction load|load]] & [[instruction store|store]]). Those will be sent on dedicated scheduler ports that can perform those memory operations. Store operations go to the store buffer which is also capable of performing forwarding when needed. Likewise, Load operations come from the load buffer. Sunny Cove features a dedicated 48 KiB level 1 data cache and a dedicated 32 KiB level 1 instruction cache. It also features a core-private 512 KiB L2 cache that is shared by both of the L1 caches. | ||

| + | |||

| + | Each core enjoys a slice of a third level of cache that is shared by all the cores. For {{\\|Ice Lake (Client)}}, which incorporates Sunny Cove cores, there are either [[two cores]] or [[four cores]] connected together on a single chip. | ||

| + | {{clear}} | ||

| + | |||

| + | ==== Front-end ==== | ||

| + | The front-end is tasked with fetching the complex [[x86]] instructions from memory, decoding them, and delivering them to the execution units. In other words, the front-end needs to be able to consistently deliver enough [[µOPs]] from the instruction code stream to keep the back-end busy. When the back-end is not being fully utilized, the core is not reaching its full performance. A poorly performing front-end will translate directly to a poorly performing core. This challenge is further complicated by various redirections such as branches and the complex nature of the [[x86]] instructions themselves. | ||

| + | |||

| + | ===== Fetch & pre-decoding ===== | ||

| + | On their first pass, instructions should have already been prefetched from the [[L2 cache]] and into the [[L1 cache]]. The L1 is a 32 [[KiB]], 8-way set associative cache, identical in size and organization to {{intel|microarchitectures|previous generations}}. Sunny Cove fetching is done on a 16-byte fetch window, a window size that has not changed in a number of generations: up to 16 bytes of code can be fetched each cycle. Note that the fetcher is shared evenly between the two threads so that each thread gets every other cycle. At this point the instructions are still [[macro-ops]] (i.e. variable-length [[x86]] architectural instructions). Instructions are brought into the pre-decode buffer for initial preparation. | ||

| + | |||

| + | [[File:sunny cove fetch.svg|left|300px]] | ||

| + | |||

| + | [[x86]] instructions are complex, variable length, have inconsistent encoding, and may contain multiple operations. At the pre-decode buffer, the instruction boundaries get detected and marked. This is a fairly difficult task because each instruction can vary from a single byte all the way up to fifteen. Moreover, determining the length requires inspecting a couple of bytes of the instruction. In addition to boundary marking, prefixes are also decoded and checked for various properties such as branches. As with previous microarchitectures, the pre-decoder has a [[throughput]] of 6 [[macro-ops]] per cycle or until all 16 bytes are consumed, whichever happens first. Note that the pre-decoder will not load a new 16-byte block until the previous block has been fully exhausted. For example, suppose a new chunk was loaded, resulting in 7 instructions. In the first cycle, 6 instructions will be processed and a whole second cycle will be wasted for that last instruction, producing a throughput of only 3.5 instructions per cycle, considerably less than optimal. Likewise, if the 16-byte block resulted in just 4 instructions with 1 byte of the 5th instruction received, the first 4 instructions will be processed in the first cycle and a second cycle will be required for the last instruction, producing an average throughput of 2.5 instructions per cycle. Note that there is a special case for {{x86|length-changing prefix|length-changing prefixes}} (LCPs), which incur additional pre-decoding costs. Instructions in real code are often shorter than 4 bytes, which usually results in a good rate. | ||
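The two throughput examples above can be reproduced with a simple model in which each 16-byte block costs ceil(n / 6) cycles for the n instructions handled out of it. This is a sketch under that assumption only: it ignores LCP penalties and fetch stalls, and the function names are hypothetical.

```python
import math

PREDECODE_WIDTH = 6  # macro-ops pre-decoded per cycle


def predecode_cycles(instrs_per_block):
    """Cycles spent pre-decoding a sequence of 16-byte blocks.

    instrs_per_block[i] is how many instructions are handled out of block i.
    A new block is not loaded until the current one is exhausted, so each
    block costs ceil(n / 6) cycles regardless of leftover slots.
    """
    return sum(math.ceil(n / PREDECODE_WIDTH) for n in instrs_per_block)


def predecode_ipc(instrs_per_block):
    """Average instructions pre-decoded per cycle."""
    return sum(instrs_per_block) / predecode_cycles(instrs_per_block)


if __name__ == "__main__":
    print(predecode_ipc([7]))     # 3.5: 6 in cycle 1, 1 in cycle 2
    print(predecode_ipc([4, 1]))  # 2.5: the straddling 5th needs its own cycle
```

The second call treats the instruction that straddles the block boundary as the only one handled in the second cycle, as in the example in the text.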

| + | |||

| + | All of this works along with the branch prediction unit, which attempts to guess the flow of instructions. In Sunny Cove, the [[branch predictor]] has also been improved, though Intel has not disclosed the details of those improvements. | ||

| + | ====== Instruction Queue & MOP-Fusion ====== | ||

| + | {| style="border: 1px solid gray; float: right; margin: 10px; padding: 5px" | ||

| + | | [[MOP-Fusion]] Example: | ||

| + | |- | ||

| + | | | ||

| + | <pre>cmp eax, [mem] | ||

| + | jne loop</pre> | ||

| + | | '''→''' | ||

| + | | <pre>cmpjne eax, [mem], loop</pre> | ||

| + | |} | ||

| + | {{see also|macro-operation fusion|l1=Macro-Operation Fusion}} | ||

| + | The pre-decoded instructions are delivered to the Instruction Queue (IQ). In {{\\|Broadwell}}, the Instruction Queue was increased to 25 entries, duplicated for each thread (i.e. 50 total entries). It's unclear whether that has changed with Sunny Cove. One key optimization the instruction queue does is [[macro-op fusion]]. Sunny Cove can fuse two [[macro-ops]] into a single complex one in a number of cases. In cases where a {{x86|test}} or {{x86|compare}} instruction followed by a conditional jump is detected, the pair is converted into a single compare-and-branch instruction. Those fused instructions remain fused throughout the entire pipeline and get executed as a single operation by the branch unit, thereby saving bandwidth everywhere. Only one such fusion can be performed during each cycle. | ||
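A rough model of the fusion rule described above: a CMP or TEST immediately followed by a conditional jump becomes one macro-op, with at most one fusion per cycle. The per-cycle group width of 5 and the string-based instruction format are assumptions made for illustration; real fusion eligibility also depends on operand types and the specific condition code, which this sketch ignores.

```python
FUSIBLE_FIRST = {"cmp", "test"}


def mnemonic(instr):
    return instr.split()[0].lower()


def is_cond_jump(instr):
    m = mnemonic(instr)
    return m.startswith("j") and m != "jmp"  # JMP is unconditional


def fuse_stream(instrs, width=5):
    """Group instructions into cycles of up to `width` macro-ops, fusing at
    most one CMP/TEST + conditional-jump pair per cycle."""
    cycles, i = [], 0
    while i < len(instrs):
        group, fused = [], False
        while i < len(instrs) and len(group) < width:
            cur = instrs[i]
            nxt = instrs[i + 1] if i + 1 < len(instrs) else None
            if (not fused and nxt is not None
                    and mnemonic(cur) in FUSIBLE_FIRST and is_cond_jump(nxt)):
                group.append(f"{cur} + {nxt}")  # one fused macro-op
                fused = True
                i += 2
            else:
                group.append(cur)
                i += 1
        cycles.append(group)
    return cycles


if __name__ == "__main__":
    print(fuse_stream(["cmp eax, [mem]", "jne loop"]))
    # [['cmp eax, [mem] + jne loop']]
```

When two fusible pairs land in the same cycle, only the first is fused and the second pair passes through as two separate macro-ops.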

| + | |||

| + | ===== Decoding ===== | ||

| + | [[File:sunny cove decode.svg|right|425px]] | ||

| + | Up to five (3 + 2 fused or up to 5 unfused) pre-decoded instructions are sent to the decoders each cycle. Like the fetchers, the decoders alternate between the two threads each cycle. Decoders read in [[macro-operations]] and emit regular, fixed-length [[µOPs]]. The five decoders are asymmetric; the first one, Decoder 0, is a [[complex decoder]] while the other four are [[simple decoders]]. A simple decoder is capable of translating instructions that emit a single fused-[[µOP]]. By contrast, a [[complex decoder]] can decode anywhere from one to four fused-µOPs. Overall, up to 5 simple instructions can be decoded each cycle, with lesser amounts if the complex decoder needs to emit additional µOPs; i.e., for each additional µOP the complex decoder needs to emit, one less simple decoder can operate. | ||
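The decoder allocation rule can be sketched as follows. For illustration, it assumes that the first instruction of each cycle always lands on Decoder 0 and that any instruction needing more than one fused µOP must wait for Decoder 0 in a later cycle; `decode_cycles` is a hypothetical name.

```python
SIMPLE_DECODERS = 4      # Decoders 1-4
COMPLEX_MAX_UOPS = 4     # Decoder 0 emits 1 to 4 fused µOPs


def decode_cycles(uop_counts):
    """Group instructions (given as fused-µOP counts, in program order) into
    decode cycles. Each extra µOP emitted by Decoder 0 disables one simple
    decoder, and simple decoders only accept single-µOP instructions."""
    cycles, i = [], 0
    while i < len(uop_counts):
        first = uop_counts[i]
        if not 1 <= first <= COMPLEX_MAX_UOPS:
            raise ValueError("instructions above 4 µOPs go through the MSROM")
        group = [first]
        i += 1
        free_simple = SIMPLE_DECODERS - (first - 1)
        while i < len(uop_counts) and free_simple > 0 and uop_counts[i] == 1:
            group.append(1)
            free_simple -= 1
            i += 1
        cycles.append(group)
    return cycles


if __name__ == "__main__":
    print(decode_cycles([3, 1, 1, 1]))  # [[3, 1, 1], [1]]
```

A 3-µOP instruction on Decoder 0 leaves only two simple decoders active, so the fourth instruction spills into the next cycle.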

| + | |||

| + | ====== MSROM & Stack Engine ====== | ||

| + | Some instructions are too complex even for the complex decoder. Instructions that transform into more than four µOPs detour through the [[microcode sequencer]] (MS) ROM. When that happens, up to 4 µOPs/cycle are emitted until the microcode sequencer is done. During that time, the decoders are disabled. | ||
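The routing described above can be summarized in a few lines. This is a hedged sketch: it classifies instructions by fused-µOP count only and assumes the MSROM emits a full 4 µOPs every cycle until it is done; the function names are hypothetical.

```python
import math

COMPLEX_MAX_UOPS = 4   # Decoder 0 handles 1 to 4 fused µOPs
MSROM_RATE = 4         # µOPs per cycle from the microcode sequencer


def decode_path(fused_uops):
    """Which path an instruction takes, by its fused-µOP count."""
    if fused_uops < 1:
        raise ValueError("an instruction emits at least one µOP")
    if fused_uops == 1:
        return "any decoder"
    if fused_uops <= COMPLEX_MAX_UOPS:
        return "complex decoder"
    return "MSROM"


def msrom_cycles(fused_uops):
    """Cycles the MSROM needs, during which the decoders are idle."""
    return math.ceil(fused_uops / MSROM_RATE)


if __name__ == "__main__":
    for n in (1, 3, 10):
        print(n, decode_path(n), msrom_cycles(n) if n > COMPLEX_MAX_UOPS else 0)
```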

| + | |||

| + | [[x86]] has dedicated [[stack machine]] operations. Instructions such as <code>{{x86|PUSH}}</code>, <code>{{x86|POP}}</code>, as well as <code>{{x86|CALL}}</code>, and <code>{{x86|RET}}</code> all operate on the [[stack pointer]] (<code>{{x86|ESP}}</code>). Without any specialized hardware, such operations would need to be sent to the back-end for execution using the general purpose ALUs, using up some of the bandwidth and utilizing scheduler and execution unit resources. Since {{\\|Pentium M}}, Intel has been making use of a [[Stack Engine]]. The Stack Engine has a set of three dedicated adders it uses to perform and eliminate the stack-updating µOPs (i.e. it is capable of handling three additions per cycle). An instruction such as <code>{{x86|PUSH}}</code> is translated into a store and a subtraction of 4 from <code>{{x86|ESP}}</code>. The subtraction in this case will be done by the Stack Engine. The Stack Engine sits after the [[instruction decode|decoders]] and monitors the µOP stream as it passes by. Incoming stack-modifying operations are caught by the Stack Engine, relieving the pipeline of stack-pointer-modifying µOPs. In other words, it's cheaper and faster to calculate stack pointer targets at the Stack Engine than it is to send those operations down the pipeline to be done by the execution units (i.e., general purpose ALUs). | ||
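A minimal sketch of the Stack Engine idea: stack-pointer arithmetic is folded into a running offset right after decode, so only the memory µOPs continue down the pipeline. The 4-byte slot follows the 32-bit <code>ESP</code> example above (64-bit code would use 8-byte <code>RSP</code> slots); the sketch ignores any synchronization needed when software reads the stack pointer directly, and all names are hypothetical.

```python
def run_stack_engine(ops, slot=4):
    """Track PUSH/POP stack-pointer updates as a running offset.

    ops: sequence of "push" / "pop".
    Returns (memory µOPs sent down the pipeline,
             number of stack-pointer add/sub µOPs eliminated,
             pending offset relative to the back-end's ESP value).
    """
    offset = 0
    memory_uops = []
    eliminated = 0
    for op in ops:
        if op == "push":
            offset -= slot                   # ESP -= slot, done by the Stack Engine
            memory_uops.append(f"store [esp{offset:+d}]")
        elif op == "pop":
            memory_uops.append(f"load [esp{offset:+d}]")
            offset += slot                   # ESP += slot, done by the Stack Engine
        else:
            raise ValueError(f"unsupported op: {op}")
        eliminated += 1                      # no ALU µOP reaches the back-end
    return memory_uops, eliminated, offset


if __name__ == "__main__":
    print(run_stack_engine(["push", "push", "pop"]))
    # (['store [esp-4]', 'store [esp-8]', 'load [esp-8]'], 3, -4)
```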

| + | |||

| + | ===== µOP cache ===== | ||

| + | {{see also|intel/microarchitectures/sandy_bridge_(client)#New_.C2.B5OP_cache_.26_x86_tax|l1=Sandy Bridge § New µOP cache}} | ||

| + | [[File:sunny cove ucache.svg|right|400px]] | ||

| + | Decoding the variable-length, inconsistent, and complex [[x86]] instructions is a nontrivial task. It's also expensive in terms of performance and power. Therefore, the best way for the pipeline to avoid those costs is to simply not decode the instructions. This is the job of the [[µOP cache]] or the Decoded Stream Buffer (DSB). Sunny Cove's µOP cache is organized similarly to all previous generations since its introduction in {{\\|Sandy Bridge}}; however, its size has increased. Sunny Cove increased the cache by 1.5x, from 1.5K µOPs in {{\\|Skylake}} to over 2.3K. The cache is organized into 48 sets of 8 cache lines, with each line holding up to 6 µOPs, for a total of 2,304 µOPs. As with {{\\|Skylake}}, the µOP cache operates on 64-byte fetch windows. The micro-operation cache is competitively shared between the two threads and can also hold pointers to the microcode. The µOP cache has an average hit rate of 80% or greater. | ||

| + | |||

| + | A hit in the µOP cache allows up to 6 µOPs (i.e., an entire line) per cycle to be sent directly to the Instruction Decode Queue (IDQ), bypassing all the pre-decoding and decoding that would otherwise have to be done. Whereas the legacy decode path works in 16-byte instruction fetch windows, the µOP cache has no such restriction and can deliver 6 µOPs/cycle corresponding to the much bigger 64-byte window. The higher µOP bandwidth greatly increases the number of µOPs that the back-end can take advantage of in the [[out-of-order]] part of the machine. To better exploit this, Sunny Cove increased the [[#Renaming & Allocation|rename and retire]] width to 5 µOPs/cycle, one more than {{\\|Skylake}}, raising the absolute ceiling rate of the out-of-order engine. | ||

| + | |||

| + | ===== Allocation Queue ===== | ||

| + | The emitted µOPs from the decoders are sent directly to the Allocation Queue (AQ) or Instruction Decode Queue (IDQ). The Allocation Queue acts as the interface between the front-end ([[in-order]]) and the back-end ([[out-of-order]]). Like {{\\|Skylake}}, the IDQ is no longer competitively shared; it's partitioned between two active threads. Sunny Cove's Allocation Queue increased from 64 µOPs per thread to 70, for a total of 140 entries, roughly 10% more than {{\\|Skylake}}. The queue's purpose is effectively to help absorb [[bubbles]] which may be introduced in the front-end, ensuring that a steady stream of 6 µOPs is delivered each cycle. | ||

| + | |||

| + | ====== µOP-Fusion & LSD ====== | ||

| + | The IDQ does a number of additional optimizations as it queues instructions. The Loop Stream Detector (LSD) is a mechanism inside the IDQ capable of detecting loops that fit in the IDQ and locking them down. That is, the LSD can stream the same sequence of µOPs directly from the IDQ continuously without any additional [[instruction fetch|fetching]], [[instruction decode|decoding]], or utilizing additional caches or resources. Streaming continues indefinitely until reaching a branch [[mis-prediction]]. Note that while the LSD is active, the rest of the front-end is effectively disabled. | ||

| + | |||

| + | The LSD in Sunny Cove can take advantage of the larger IDQ, detecting loops of up to 70 µOPs per thread. The LSD is particularly beneficial for many common algorithms found in many programs (e.g., tight loops, intensive calculation loops, searches). | ||

| + | |||

| + | ==== Execution engine ==== | ||

| + | [[File:sunny cove rob.svg|right|450px]] | ||

| + | Sunny Cove's back-end or execution engine deals with the execution of [[out-of-order]] operations. Much of the design is inherited from previous architectures such as {{\\|Skylake}} but has been widened to explore more [[instruction-level parallelism]] opportunities. From the allocation queue, instructions are sent to the [[Reorder Buffer]] (ROB) at the rate of up to 6 fused-µOPs each cycle, similar to {{\\|Skylake}}'s. | ||

| + | |||

| + | ===== Renaming & Allocation ===== | ||

| + | Like the front-end, the [[Reorder Buffer]] has been significantly enlarged, by roughly 57%, and now has a capacity of 352 entries, 128 entries more than {{\\|Skylake}}. Since each ROB entry holds complete µOPs, in practice 352 entries might be equivalent to as much as 525 µOPs depending on the code being executed (e.g. fused load/stores). It is at this stage that [[architectural registers]] are mapped onto the underlying [[physical registers]]. Other additional bookkeeping tasks are also done at this point, such as allocating resources for stores and loads, and determining all possible scheduler ports. Register renaming is also controlled by the [[Register Alias Table]] (RAT), which is used to mark where the data we depend on is coming from (after all, that value too came from an instruction that has previously been renamed). In {{intel|microarchitectures|previous microarchitectures}}, the RAT could handle 4 µOPs each cycle. In Sunny Cove this has been increased to five, a 25% increase in out-of-order allocation bandwidth. Sunny Cove can now rename any five registers per cycle, including the same register renamed five times in a single cycle. Note that the ROB still operates on fused µOPs; therefore 5 µOPs can effectively be as high as 10 µOPs. | ||

| + | |||

| + | It should be noted that there are no special costs involved in splitting up fused µOPs before execution or [[retirement]] and the two fused µOPs only occupy a single entry in the ROB. | ||
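Register renaming through the RAT can be illustrated with a toy model: each destination gets a fresh physical register from a free list, and sources read the current mapping, so the same architectural register can be renamed several times within one 5-wide group. This is a simplified sketch; it does not model freeing registers at retirement, and the class and function names are hypothetical.

```python
from collections import deque


class RenameTable:
    """Toy register alias table (RAT): architectural -> physical register."""

    def __init__(self, arch_regs, num_phys):
        # Each architectural register starts mapped to its own physical register.
        self.map = {reg: i for i, reg in enumerate(arch_regs)}
        self.free = deque(range(len(arch_regs), num_phys))

    def rename(self, dst, srcs):
        """Read source mappings, then give the destination a fresh register."""
        phys_srcs = [self.map[s] for s in srcs]
        if not self.free:
            raise RuntimeError("no free physical register: allocation stalls")
        phys_dst = self.free.popleft()
        self.map[dst] = phys_dst
        return phys_dst, phys_srcs


def rename_group(rat, uops, width=5):
    """Rename up to `width` (dst, srcs) µOPs in program order in one cycle."""
    if len(uops) > width:
        raise ValueError("more µOPs than the rename width")
    return [rat.rename(dst, srcs) for dst, srcs in uops]


if __name__ == "__main__":
    rat = RenameTable(["rax", "rbx"], num_phys=280)  # 280-entry integer PRF
    # Five dependent updates of rax: each reads the previous rename.
    print(rename_group(rat, [("rax", ["rax"])] * 5))
    # [(2, [0]), (3, [2]), (4, [3]), (5, [4]), (6, [5])]
```

Because sources are read before the destination is remapped, each µOP in the group correctly depends on the physical register written by the one before it.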

| + | |||

| + | Since Sunny Cove performs [[speculative execution]], it can speculate incorrectly. When this happens, the architectural state is invalidated and as such needs to be rolled back to the last known valid state. {{intel|microarchitectures|previous microarchitectures}} had a 48-entry [[Branch Order Buffer]] (BOB) that keeps track of those states for this very purpose. It's unknown whether that has changed with Sunny Cove. | ||

| + | |||

| + | ===== Optimizations ===== | ||

| + | Sunny Cove performs a number of optimizations before µOPs enter the out-of-order and renaming part of the pipeline. Three of those optimizations are [[Move Elimination]], [[Zeroing Idioms]], and [[Ones Idioms]]. Move elimination removes register-to-register moves (including chained moves) prior to bookkeeping at the ROB, so those µOPs consume no execution resources at all. Eliminated moves have zero latency and are entirely removed from the pipeline. This optimization does not always succeed; when it fails, the operands were simply not ready. On average, it is almost always successful (upward of 85% in most cases). Move elimination works on all 32- and 64-bit GP integer registers as well as all 128- and 256-bit vector registers. | ||

| + | {| style="border: 1px solid gray; float: right; margin: 10px; padding: 5px; width: 350px;" | ||

| + | | [[Zeroing Idiom]] Example: | ||

| + | |- | ||

| + | | <pre>xor eax, eax</pre> | ||

| + | |- | ||

| + | | Not only does this instruction get eliminated at the ROB, but it's actually encoded as just 2 bytes <code>31 C0</code> vs the 5 bytes for <code>{{x86|mov}} {{x86|eax}}, 0x0</code> which is encoded as <code>b8 00 00 00 00</code>. | ||

| + | |} | ||

| + | There are some exceptions that Sunny Cove will not optimize, most dealing with [[signedness]]. [[sign extension|Sign-extended]] moves cannot be eliminated, and neither can zero-extended moves from 16-bit to 32/64-bit registers (note that 8-bit to 32/64-bit works). Likewise, in the other direction, no moves to 8/16-bit registers can be eliminated. A move of a register to itself is never eliminated. | ||

| + | |||

| + | When instructions use registers that are independent of their prior values, another optimization opportunity can be exploited. A second common optimization performed in Sunny Cove around the same time is [[Zeroing Idioms]] elimination. A number of common zeroing idioms are recognized and eliminated in much the same way as move eliminations are performed. Sunny Cove recognizes instructions such as <code>{{x86|XOR}}</code>, <code>{{x86|PXOR}}</code>, and <code>{{x86|XORPS}}</code> as zeroing idioms when the [[source operand|source]] and [[destination operand|destination]] operands are the same. Those optimizations are done during renaming, at the same rate as renaming (up to 5 µOPs per cycle), and the register is simply set to zero. | ||

| + | |||

| + | The [[ones idioms|ones idiom]] is another dependency-breaking idiom that can be optimized. With any of the various {{x86|PCMPEQ|PCMPEQx}} packed-compare instructions, comparing a register with itself always sets all bits to one. In those cases, while the µOP still has to be executed, the instruction may be scheduled as soon as possible because the current state of the register need not be known. | ||

| + | |||

| + | ===== Scheduler ===== | ||

| + | [[File:sunny cove scheduler.svg|right|500px]] | ||

| + | The scheduler size itself has likely increased with Sunny Cove, although its exact capacity was not disclosed. In {{\\|Skylake}}, Intel increased the scheduler by 50%, from 64 to 97 entries; it is therefore reasonable to expect Sunny Cove's to exceed 125 entries. Those entries are competitively shared between the two threads. Sunny Cove continues with a unified design, in contrast to designs such as [[AMD]]'s {{amd|Zen|l=arch}}, which use split schedulers, each holding different types of µOPs. The scheduler includes the two register files for integers and vectors. It's in those [[register files]] that output operand data is stored. In Skylake, the [[integer]] [[register file]] was 180 entries deep. It's unknown whether, and by how much, that has changed in Sunny Cove. | ||

| + | |||

| + | At this point, µOPs are no longer fused and will be dispatched to the execution units independently. The scheduler holds the µOPs while they wait to be executed. A µOP may be waiting because an operand has not arrived (e.g., it is being fetched from memory or is still being calculated by another µOP) or because the execution unit it needs is busy. Once the µOP is ready, it is dispatched through its designated port. Each cycle, the scheduler sends the oldest ready µOP to each of the ten ports. | ||

| + | |||

| + | The scheduler on Sunny Cove was enlarged further with two additional ports that deal with memory operations, making it 25% wider than {{\\|Skylake}}: up to 10 operations may be dispatched each cycle. On the arithmetic side of the execution engine, the four workhorse ports were augmented with more functionality. On the vector side, Sunny Cove retains the three FMAs and ALUs. One of the key changes here is the addition of a new shuffle unit on Port 1 for moving data within a register. | ||

| + | |||

| + | ====== Scheduler Ports & Execution Units ====== | ||

| + | <table class="wikitable"> | ||

| + | <tr><th colspan="2">Scheduler Ports Designation</th></tr> | ||

| + | <tr><th rowspan="5">Port 0</th><td>Integer/Vector Arithmetic, Multiplication, Logic, Shift, and String ops</td></tr> | ||

| + | <tr><td>[[FP]] Add, [[Multiply]], [[FMA]]</td></tr> | ||

| + | <tr><td>Integer/FP Division and [[Square Root]]</td></tr> | ||

| + | <tr><td>[[AES]] Encryption</td></tr> | ||

| + | <tr><td>Branch 2</td></tr> | ||

| + | <tr><th rowspan="2">Port 1</th><td>Integer/Vector Arithmetic, Multiplication, Logic, Shift, and Bit Scanning</td></tr> | ||

| + | <tr><td>[[FP]] Add, [[Multiply]], [[FMA]]</td></tr> | ||

| + | <tr><th rowspan="3">Port 5</th><td>Integer/Vector Arithmetic, Logic</td></tr> | ||

| + | <tr><td>Vector Permute</td></tr> | ||

| + | <tr><td>[[x87]] FP Add, Composite Int, CLMUL</td></tr> | ||

| + | <tr><th rowspan="2">Port 6</th><td>Integer Arithmetic, Logic, Shift</td></tr> | ||

| + | <tr><td>Branch</td></tr> | ||

| + | <tr><th>Port 2</th><td>Load AGU</td></tr> | ||

| + | <tr><th>Port 3</th><td>Load AGU</td></tr> | ||

| + | <tr><th>Port 4</th><td>Store Data</td></tr> | ||

| + | <tr><th>Port 7</th><td>Store AGU</td></tr> | ||

| + | <tr><th>Port 8</th><td>Store AGU</td></tr> | ||

| + | <tr><th>Port 9</th><td>Store Data</td></tr> | ||

| + | </table> | ||

| + | |||

| + | {| class="wikitable collapsible collapsed" | ||

| + | |- | ||

| + | ! colspan="3" | Execution Units | ||

| + | |- | ||

| + | ! Execution Unit !! # of Units !! Instructions | ||

| + | |- | ||

| + | | ALU || 4 || add, and, cmp, or, test, xor, movzx, movsx, mov, (v)movdqu, (v)movdqa, (v)movap*, (v)movup* | ||

| + | |- | ||

| + | | SHFT || 2 || sal, shl, rol, adc, sarx, adcx, adox, etc. | ||

| + | |- | ||

| + | | Slow Int || 1 || mul, imul, bsr, rcl, shld, mulx, pdep, etc. | ||

| + | |- | ||

| + | | BM || 2 || andn, bextr, blsi, blsmsk, bzhi, etc. | ||

| + | |- | ||

| + | | Vec ALU || 3 || (v)pand, (v)por, (v)pxor, (v)movq, (v)movq, (v)movap*, (v)movup*, (v)andp*, (v)orp*, (v)paddb/w/d/q, (v)blendv*, (v)blendp*, (v)pblendd | ||

| + | |- | ||

| + | | Vec_Shft || 2 || (v)psllv*, (v)psrlv*, vector shift count in imm8 | ||

| + | |- | ||

| + | | Vec Add || 2 || (v)addp*, (v)cmpp*, (v)max*, (v)min*, (v)padds*, (v)paddus*, (v)psign, (v)pabs, (v)pavgb, (v)pcmpeq*, (v)pmax, (v)cvtps2dq, (v)cvtdq2ps, (v)cvtsd2si, (v)cvtss2si | ||

| + | |- | ||

| + | | Shuffle || 2 || (v)shufp*, vperm*, (v)pack*, (v)unpck*, (v)punpck*, (v)pshuf*, (v)pslldq, (v)alignr, (v)pmovzx*, vbroadcast*, (v)pslldq, (v)psrldq, (v)pblendw | ||

| + | |- | ||

| + | | Vec Mul || 2 || (v)mul*, (v)pmul*, (v)pmadd* | ||

| + | |- | ||

| + | | SIMD Misc || 1 || STTNI, (v)pclmulqdq, (v)psadw, vector shift count in xmm | ||

| + | |- | ||

| + | | FP Mov || 1 || (v)movsd/ss, (v)movd gpr | ||

| + | |- | ||

| + | | DIVIDE || 1 || divp*, divs*, vdiv*, sqrt*, vsqrt*, rcp*, vrcp*, rsqrt*, idiv | ||

| + | |- | ||

| + | |- | ||

| + | |colspan="3" | This table was taken verbatim from the Intel manual. Execution unit mapping to {{x86|MMX|MMX instructions}} are not included. | ||

| + | |} | ||

| + | |||

| + | ===== Retirement ===== | ||

| + | Once a µOP executes, or in the case of fused µOPs both µOPs have executed, they can be [[retired]]. Retirement happens [[in-order]] and releases any used resources such as those used to keep track in the [[reorder buffer]]. With retirement/allocation increasing from four to five in Sunny Cove, it's now possible to retire 5 instructions per cycle (5 unfused or 7 with fused ops). | ||

| + | |||

| + | == Die == | ||

| + | === Core === | ||

| + | * [[Intel 10 nm process]] | ||

| + | * Core from an {{\\|Ice Lake (client)}} SoC | ||

| + | * ~6.91 mm² die size | ||

| + | ** ~3.5 mm x ~1.97 mm | ||

| + | |||

| + | :[[File:ice lake die core.png|400px]] | ||

| + | |||

| + | |||

| + | :[[File:ice lake die core (annotated).png|400px]] | ||

| + | |||

| + | |||

| + | :[[File:ice lake die core 2.png|500px]] | ||

| + | |||

| + | |||

| + | |||

| + | * [[Intel 10 nm process]] | ||

| + | * Core from an {{\\|Ice Lake (server)}} SoC | ||

| + | |||

| + | :[[File:ice lake core die.png|200px]] | ||

| + | |||

| + | === Core group === | ||

| + | * [[Intel 10 nm process]] | ||

| + | * Quad-core from an {{\\|Ice Lake (client)|Ice Lake}} SoC | ||

| + | * ~30.73 mm² die size | ||

| + | ** ~7.86 mm x ~3.91 mm | ||

| + | |||

| + | |||

| + | :[[File:ice lake die core group.png|class=wikichip_ogimage|700px]] | ||

| + | |||

| + | |||

| + | :[[File:ice lake die core group (annotated).png|700px]] | ||

| + | |||

| + | |||

| + | :[[File:ice lake die core group 2.png|800px]] | ||

== Bibliography == | == Bibliography == | ||

* Intel Architecture Day 2018, December 11, 2018 | * Intel Architecture Day 2018, December 11, 2018 | ||

Latest revision as of 20:33, 17 November 2025


| Sunny Cove µarch | |

| General Info | |

| Arch Type | CPU |

| Designer | Intel |

| Manufacturer | Intel |

| Introduction | 2019 |

| Phase-out | 2021 |

| Process | 10 nm |

| Core Configs | 2, 4, 8, 10, 12, 16, 18, 20, 24, 26, 28, 32, 36, 38, 40 |

| Pipeline | |

| Type | Superscalar |

| OoOE | Yes |

| Speculative | Yes |

| Reg Renaming | Yes |

| Stages | 14-19 |

| Instructions | |

| ISA | x86-64 |

| Extensions | MOVBE, MMX, SSE, SSE2, SSE3, SSSE3, SSE4.1, SSE4.2, POPCNT, AVX, AVX2, AES, PCLMUL, FSGSBASE, RDRND, FMA3, F16C, BMI, BMI2, VT-x, VT-d, TXT, TSX, RDSEED, ADCX, PREFETCHW, CLFLUSHOPT, XSAVE, SGX, MPX, AVX-512 |

| Cache | |

| L1I Cache | 32 KiB/core 8-way set associative |

| L1D Cache | 48 KiB/core 12-way set associative |

| L2 Cache | 512 KiB/core 8-way set associative |

| L3 Cache | 2 MiB/core 16-way set associative |

| Cores | |

| Core Names | Spring Hill, Lakefield, Ice Lake (Client), Ice Lake (Server) |

| Succession | |

Sunny Cove (SNC), the successor to Palm Cove, is a high-performance 10 nm x86-64 core microarchitecture designed by Intel for an array of server and client products, including Ice Lake (Client), Ice Lake (Server), Lakefield, and the Nervana NNP-I. The microarchitecture was developed by Intel's R&D Center (IDC) in Haifa, Israel.


History

Sunny Cove was originally unveiled by Intel at its 2018 Architecture Day. Intel originally intended for Sunny Cove to succeed Palm Cove in late 2017; Palm Cove was intended to be the first 10 nm-based core and the proper successor to Skylake. Prolonged delays and problems with Intel's 10 nm process resulted in a number of improvised derivatives of Skylake, including Kaby Lake, Coffee Lake, and Comet Lake. For all practical purposes, Palm Cove was skipped and Intel went directly to Sunny Cove, which debuted in mid-2019.

Process Technology

Sunny Cove is designed to take advantage of Intel's 10 nm process.

Implementations

The Sunny Cove core is integrated into a number of Intel designs.

Chips with Intel Sunny Cove

| Chip | Instances | Notes |
|---|---|---|
| Lakefield | 1 | Heterogeneous pentacore |
| Spring Hill | 2 | Used for NNP-I NPUs |
| Ice Lake (Client) | 2-4 | Mobile/Desktop processors |
| Ice Lake (Server) | 40 | Workstation/Server processors |

Architecture

Key changes from Palm Cove/Skylake

New instructions

Sunny Cove introduced a number of new instructions:

Only on server parts (Ice Lake (Server)):

Block diagram

Memory Hierarchy

Sunny Cove's TLB hierarchy consists of a dedicated L1 TLB for the instruction cache (ITLB) and another for the data cache (DTLB). Additionally, there is a unified L2 TLB (STLB).

Overview

Sunny Cove is Intel's microarchitecture for its big CPU core, incorporated into a number of client and server chips that succeed Palm Cove (and, effectively, the Skylake series of derivatives). Those chips include Lakefield, Ice Lake (Client), and Ice Lake (Server), as well as the Nervana NNP accelerator. Sunny Cove introduces a large set of enhancements that improve the performance of both legacy and new code through the extraction of parallelism as well as through new features. Those include a deeper out-of-order window, a wider execution back-end, higher load-store bandwidth, lower effective access latencies, and bigger caches.

Pipeline

Like its predecessors, Sunny Cove focuses on extracting performance and reducing power in a number of key ways. Intel built Sunny Cove on its previous microarchitectures, the descendants of Sandy Bridge. To increase the core's overall performance, Intel focused on extracting additional parallelism.

Broad Overview

From a 5,000-foot view, Sunny Cove represents the logical evolution of Skylake and Haswell. Despite some significant differences from the previous microarchitecture, the overall design is fundamentally the same and can be seen as a set of enhancements over Skylake rather than a complete change.

The pipeline can be broken down into three areas: the front-end, the back-end or execution engine, and the memory subsystem. The goal of the front-end is to feed the back-end with a sufficient stream of operations, which it obtains by decoding instructions coming from memory. The front-end has two major pathways: the µOP cache path and the legacy path. The legacy path is the traditional path whereby variable-length x86 instructions are fetched from the level 1 instruction cache, queued, and then decoded into simpler, fixed-length µOPs. The alternative and much more desirable path is the µOP cache path, in which a hit in a cache of already-decoded µOPs allows those µOPs to be sent directly to the decode queue.

Regardless of which path an instruction ends up taking, it will eventually arrive at the instruction decode queue (IDQ). The IDQ marks the end of the front-end and of the in-order part of the machine, and the start of the execution engine, which operates out-of-order.

In the back-end, the micro-operations visit the reorder buffer. This is where register allocation, renaming, and retirement take place. A number of other optimizations are also done at this stage. From the reorder buffer, µOPs are sent to the unified scheduler. The scheduler has a number of exit ports, each wired to a different set of execution units. Some units perform basic ALU operations, others perform multiplication and division, and some are capable of more complex operations such as various vector operations. The scheduler is effectively in charge of queuing the µOPs on the appropriate port so they can be executed by the appropriate unit.

Some µOPs deal with memory access (e.g., loads and stores). Those are sent on dedicated scheduler ports that perform the memory operations. Store operations go to the store buffer, which is also capable of performing store-to-load forwarding when needed. Likewise, load operations come from the load buffer. Sunny Cove features a dedicated 48 KiB level 1 data cache and a dedicated 32 KiB level 1 instruction cache. It also features a core-private 512 KiB L2 cache that is shared by both of the L1 caches.

Each core enjoys a slice of a third level of cache that is shared by all the cores. In Ice Lake (Client), which incorporates Sunny Cove cores, either two or four cores are connected together on a single chip.

Front-end

The front-end is tasked with fetching the complex x86 instructions from memory, decoding them, and delivering them to the execution units. In other words, the front-end needs to consistently deliver enough µOPs from the instruction code stream to keep the back-end busy. When the back-end is not being fully utilized, the core is not reaching its full performance, so a poorly performing front-end translates directly into a poorly performing core. This challenge is further complicated by various redirections, such as branches, and by the complex nature of the x86 instructions themselves.

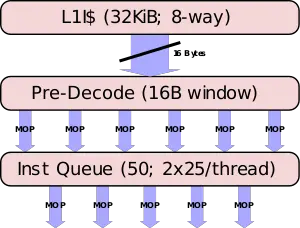

Fetch & pre-decoding

On their first pass, instructions should have already been prefetched from the L2 cache into the L1 cache. The L1 is a 32 KiB, 8-way set associative cache, identical in size and organization to previous generations. Sunny Cove fetches on a 16-byte fetch window, a window size that has not changed in a number of generations. Up to 16 bytes of code can be fetched each cycle. Note that the fetcher is shared evenly between the two threads so that each thread gets every other cycle. At this point the instructions are still macro-ops (i.e., variable-length x86 architectural instructions). Instructions are brought into the pre-decode buffer for initial preparation.

x86 instructions are complex, variable length, have inconsistent encoding, and may contain multiple operations. At the pre-decode buffer, instruction boundaries are detected and marked. This is a fairly difficult task because each instruction can vary from a single byte all the way up to fifteen bytes. Moreover, determining the length requires inspecting a couple of bytes of the instruction. In addition to boundary marking, prefixes are also decoded and checked for various properties such as branches. As with previous microarchitectures, the pre-decoder has a throughput of 6 macro-ops per cycle or until all 16 bytes are consumed, whichever happens first. Note that the pre-decoder will not load a new 16-byte block until the previous block has been fully exhausted. For example, suppose a new chunk was loaded, resulting in 7 instructions. In the first cycle, 6 instructions will be processed, and a whole second cycle will be spent on that last instruction. This produces the much lower throughput of 3.5 instructions per cycle, which is considerably less than optimal. Likewise, if the 16-byte block resulted in just 4 instructions with 1 byte of the 5th instruction received, the first 4 instructions will be processed in the first cycle and a second cycle will be required for the last instruction, producing an average throughput of 2.5 instructions per cycle. Note that there is a special case for length-changing prefixes (LCPs), which incur additional pre-decoding costs. Real instructions are often shorter than 4 bytes, which usually results in a good rate.
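The block-exhaustion rule described above can be captured in a few lines. This is a minimal sketch, assuming each 16-byte block is summarized only by how many instructions the pre-decoder finishes in it and ignoring LCP penalties; the function names are hypothetical. Treating the straddling 5th instruction of the second example as a one-instruction block reproduces the 2.5 figure.

```python
import math

MAX_PER_CYCLE = 6  # pre-decoder limit per cycle, per the text


def predecode_cycles(instrs_per_block):
    """Cycles needed to pre-decode a run of 16-byte blocks.

    Each entry is the number of instructions finished in that block. A new
    block is not loaded until the current one is exhausted, so every block
    costs at least one cycle.
    """
    return sum(max(1, math.ceil(n / MAX_PER_CYCLE)) for n in instrs_per_block)


def predecode_ipc(instrs_per_block):
    """Average instructions pre-decoded per cycle over the whole run."""
    return sum(instrs_per_block) / predecode_cycles(instrs_per_block)


# 7 instructions in one block: 6 in the first cycle, 1 in the second.
print(predecode_ipc([7]))     # 3.5
# 4 whole instructions, then a cycle spent on the straddling 5th one.
print(predecode_ipc([4, 1]))  # 2.5
```

Rounding each block up to whole cycles is what makes a 7-instruction block cost as much as a 12-instruction one in this model.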

All of this works along with the branch prediction unit, which attempts to guess the flow of instructions. In Sunny Cove, the branch predictor has also been improved, although Intel did not disclose the specific improvements.

Instruction Queue & MOP-Fusion

MOP-Fusion Example: `cmp eax, [mem]` followed by `jne loop` → `cmpjne eax, [mem], loop`

- See also: Macro-Operation Fusion

The pre-decoded instructions are delivered to the Instruction Queue (IQ). In Broadwell, the Instruction Queue was increased to 25 entries duplicated for each thread (i.e., 50 entries in total). It's unclear whether that has changed with Sunny Cove. One key optimization the instruction queue performs is macro-op fusion: Sunny Cove can fuse two macro-ops into a single complex one in a number of cases. Where a test or compare instruction followed by a conditional jump is detected, the pair is converted into a single compare-and-branch instruction. Those fused instructions remain fused throughout the entire pipeline and are executed as a single operation by the branch unit, saving bandwidth everywhere. Only one such fusion can be performed each cycle.
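The fusion rule above can be sketched as a pass over one cycle's worth of instructions. This is a simplified model, not Intel's actual eligibility logic: instructions are hypothetical `(mnemonic, operands)` tuples, only the test/compare + conditional-jump pairs named in the text are recognized, and the fused mnemonic simply concatenates the two names to mirror the `cmpjne` example.

```python
# Only the pairs the text names: a test/compare followed by a conditional jump.
FUSIBLE_FIRST = {"cmp", "test"}
CONDITIONAL_JUMPS = {"je", "jne", "jz", "jnz", "jl", "jle", "jg", "jge",
                     "jb", "jbe", "ja", "jae", "js", "jns"}


def macro_fuse(group):
    """Fuse at most one cmp/test + jcc pair within one cycle's instructions.

    group: list of (mnemonic, operands) tuples in program order.
    Returns a new list in which the first eligible pair, if any, is merged
    into a single (fused_mnemonic, operands) entry.
    """
    out, fused, i = [], False, 0
    while i < len(group):
        mnem, ops = group[i]
        nxt = group[i + 1] if i + 1 < len(group) else None
        if (not fused and nxt is not None
                and mnem in FUSIBLE_FIRST and nxt[0] in CONDITIONAL_JUMPS):
            out.append((mnem + nxt[0], f"{ops}, {nxt[1]}"))
            fused = True  # only one fusion per cycle
            i += 2
        else:
            out.append((mnem, ops))
            i += 1
    return out


print(macro_fuse([("cmp", "eax, [mem]"), ("jne", "loop")]))
# [('cmpjne', 'eax, [mem], loop')]
```

How instructions are grouped into cycles is left to the caller; a second eligible pair in the same group stays unfused.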

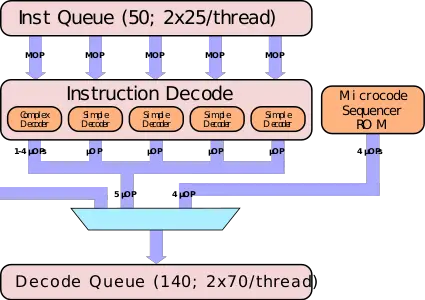

Decoding

Up to five (3 + 2 fused or up to 5 unfused) pre-decoded instructions are sent to the decoders each cycle. Like the fetchers, the decoders alternate between the two threads each cycle. Decoders read in macro-operations and emit regular, fixed-length µOPs. The five decoders are asymmetric; the first one, Decoder 0, is a complex decoder while the other four are simple decoders. A simple decoder is capable of translating instructions that emit a single fused-µOP. By contrast, a complex decoder can decode anywhere from one to four fused-µOPs. Overall, up to 5 simple instructions can be decoded each cycle, with lesser amounts if the complex decoder needs to emit additional µOPs: for each additional µOP the complex decoder emits, one fewer simple decoder can operate.

MSROM & Stack Engine

Some instructions are too complex to be decoded even by the complex decoder. An instruction that transforms into more than four µOPs detours through the microcode sequencer (MS) ROM. When that happens, up to 4 µOPs/cycle are emitted until the microcode sequencer is done. During that time, the decoders are disabled.

x86 has dedicated stack machine operations. Instructions such as PUSH, POP, CALL, and RET all operate on the stack pointer (ESP). Without any specialized hardware, such operations would need to be sent to the back-end for execution using the general-purpose ALUs, using up some of the bandwidth and consuming scheduler and execution unit resources. Since the Pentium M, Intel has been making use of a Stack Engine. The Stack Engine has a set of three dedicated adders it uses to perform and eliminate the stack-updating µOPs (i.e., it can handle three additions per cycle). Instructions such as PUSH are translated into a store and a subtraction of 4 from ESP; the subtraction in this case is done by the Stack Engine. The Stack Engine sits after the decoders and monitors the µOP stream as it passes by, catching incoming stack-modifying operations. This relieves the pipeline of stack-pointer-modifying µOPs. In other words, it's cheaper and faster to calculate stack pointer targets at the Stack Engine than it is to send those operations down the pipeline to be done by the execution units (i.e., general-purpose ALUs).
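The idea of absorbing the ESP arithmetic before the back-end can be sketched as an offset tracker. This is a simplified model assuming 32-bit PUSH/POP only (so ±4 bytes); `stack_engine` is a hypothetical name, and the sketch does not model the three-adds-per-cycle limit or what happens when other instructions read ESP directly. Only the memory µOPs are emitted; the pointer updates never reach an ALU.

```python
def stack_engine(ops, esp):
    """Track PUSH/POP pointer updates outside the back-end.

    ops: sequence of "push" / "pop" (32-bit operands, so 4 bytes each).
    esp: ESP value when tracking starts.
    Returns (memory µOPs sent to the back-end, final ESP, additions absorbed).
    Addresses are written as the starting ESP plus the tracked offset.
    """
    offset = 0
    uops = []
    absorbed = 0
    for op in ops:
        if op == "push":
            offset -= 4  # the subtraction happens here, not in an ALU
            uops.append(f"store [esp{offset:+d}]")
        elif op == "pop":
            uops.append(f"load [esp{offset:+d}]")
            offset += 4
        else:
            raise ValueError(f"unsupported op: {op}")
        absorbed += 1
    return uops, esp + offset, absorbed


uops, final_esp, absorbed = stack_engine(["push", "push", "pop"], 0x1000)
print(uops)            # ['store [esp-4]', 'store [esp-8]', 'load [esp-8]']
print(hex(final_esp))  # 0xffc
print(absorbed)        # 3
```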

µOP cache

- See also: Sandy Bridge § New µOP cache

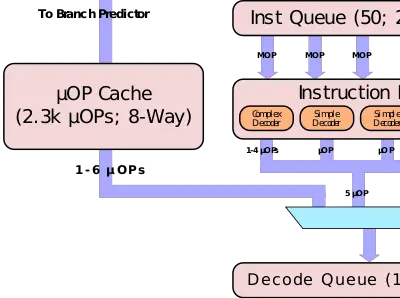

Decoding the variable-length, inconsistent, and complex x86 instructions is a nontrivial task. It's also expensive in terms of performance and power. Therefore, the best way for the pipeline to avoid those costs is to simply not decode the instructions. This is the job of the µOP cache, or Decoded Stream Buffer (DSB). Sunny Cove's µOP cache is organized similarly to all previous generations since its introduction in Sandy Bridge; however, its size has increased. Sunny Cove increased the cache by 1.5x, from 1.5K µOPs in Skylake to over 2.3K. The cache is organized into 48 sets of 8 cache lines, with each line holding up to 6 µOPs, for a total of 2,304 µOPs. As with Skylake, the µOP cache operates on 64-byte fetch windows. The micro-operation cache is competitively shared between the two threads and can also hold pointers to the microcode. The µOP cache has an average hit rate of 80% or greater.
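The capacity figures above follow directly from the cache geometry. The short check below assumes "1.5K" for Skylake means 1,536 µOPs; the constant names are illustrative.

```python
SETS = 48
WAYS = 8            # cache lines per set
UOPS_PER_LINE = 6

sunny_cove_capacity = SETS * WAYS * UOPS_PER_LINE
print(sunny_cove_capacity)  # 2304

# Assumption: "1.5K" for Skylake is taken to mean 1,536 µOPs.
SKYLAKE_CAPACITY = 1536
print(sunny_cove_capacity / SKYLAKE_CAPACITY)  # 1.5
```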

A hit in the µOP cache allows up to 6 µOPs (i.e., an entire line) per cycle to be sent directly to the Instruction Decode Queue (IDQ), bypassing all the pre-decoding and decoding that would otherwise have to be done. Whereas the legacy decode path works in 16-byte instruction fetch windows, the µOP cache has no such restriction and can deliver 6 µOPs/cycle corresponding to the much bigger 64-byte window. The higher µOP bandwidth greatly increases the number of µOPs the back-end can take advantage of in the out-of-order part of the machine. To better exploit this, Sunny Cove increased the rename and retire width to 5 µOPs/cycle, one more than Skylake, raising the absolute ceiling rate of the out-of-order engine.

Allocation Queue

The µOPs emitted by the decoders are sent directly to the Allocation Queue (AQ), also called the Instruction Decode Queue (IDQ). The Allocation Queue acts as the interface between the front-end (in-order) and the back-end (out-of-order). Like Skylake, the IDQ is no longer competitively shared; it's partitioned between the two active threads. Sunny Cove's Allocation Queue increased from 64 µOPs per thread to 70, for a total of 140 entries, roughly 10% more than Skylake. The queue's purpose is effectively to help absorb bubbles that may be introduced in the front-end, ensuring that a steady stream of 6 µOPs is delivered each cycle.

µOP-Fusion & LSD

The IDQ does a number of additional optimizations as it queues instructions. The Loop Stream Detector (LSD) is a mechanism inside the IDQ capable of detecting loops that fit in the IDQ and lock them down. That is, the LSD can stream the same sequence of µOPs directly from the IDQ continuously without any additional fetching, decoding, or utilizing additional caches or resources. Streaming continues indefinitely until reaching a branch mis-prediction. Note that while the LSD is active, the rest of the front-end is effectively disabled.

The LSD in Sunny Cove can take advantage of the larger IDQ; it is capable of detecting loops of up to 70 µOPs per thread. The LSD is particularly effective for many common algorithms found in many programs (e.g., tight loops, intensive calculation loops, searches, etc.).
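The LSD behavior described above can be sketched as a capacity check plus a replay loop. This is a toy model under stated assumptions: the function names are hypothetical, the body is a list of µOP labels, and a fixed iteration count stands in for "until the loop-exit branch mispredicts".

```python
IDQ_ENTRIES_PER_THREAD = 70  # Sunny Cove, per the text (Skylake: 64)


def lsd_can_lock(loop_uops):
    """True if a loop body of this many µOPs fits in one thread's IDQ share."""
    return 0 < loop_uops <= IDQ_ENTRIES_PER_THREAD


def lsd_stream(loop_body, iterations):
    """Replay a locked loop body from the queue without fetching or decoding.

    iterations stands in for "until the loop-exit branch mispredicts".
    """
    if not lsd_can_lock(len(loop_body)):
        raise ValueError("loop too large for the LSD; the normal front-end is used")
    stream = []
    for _ in range(iterations):
        stream.extend(loop_body)
    return stream


print(lsd_can_lock(64), lsd_can_lock(70), lsd_can_lock(71))  # True True False
print(lsd_stream(["load", "add", "cmpjne"], 2))
```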

Execution engine

Sunny Cove's back-end or execution engine deals with the execution of out-of-order operations. Much of the design is inherited from previous architectures such as Skylake but has been widened to explore more instruction-level parallelism opportunities. From the allocation queue, instructions are sent to the Reorder Buffer (ROB) at a rate of up to 6 fused-µOPs each cycle, similar to Skylake.

Renaming & Allocation

Like the front-end, the Reorder Buffer has been significantly enlarged, by roughly 60%, to 352 entries, 128 entries more than Skylake. Since each ROB entry holds complete µOPs, in practice 352 entries might be equivalent to as many as 525 µOPs depending on the code being executed (e.g., fused loads/stores). It is at this stage that architectural registers are mapped onto the underlying physical registers. Other bookkeeping tasks are also done at this point, such as allocating resources for stores and loads and determining all possible scheduler ports. Register renaming is controlled by the Register Alias Table (RAT), which is used to mark where the data a µOP depends on is coming from (that value, in turn, may have come from an instruction that was itself previously renamed). In previous microarchitectures, the RAT could handle 4 µOPs each cycle. In Sunny Cove this has been increased to five, a 25% increase in out-of-order allocation capability. Sunny Cove can rename any five registers per cycle, including the same register renamed five times in a single cycle. Note that the ROB still operates on fused µOPs, therefore 5 µOPs can effectively be as high as 10 µOPs.

It should be noted that there are no special costs involved in splitting up fused µOPs before execution or retirement and the two fused µOPs only occupy a single entry in the ROB.

Since Sunny Cove performs speculative execution, it can speculate incorrectly. When this happens, the architectural state is invalidated and needs to be rolled back to the last known valid state. Previous microarchitectures had a 48-entry Branch Order Buffer (BOB) that keeps track of those states for this very purpose. It's unknown whether that has changed with Sunny Cove.
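One way to picture the rollback described above is a RAT that snapshots its mapping at each branch. This is a conceptual sketch, not Intel's implementation: the class and method names are hypothetical, `max_checkpoints` borrows the 48-entry BOB of earlier cores (Sunny Cove's figure is unknown), and treating a full buffer as an allocation stall is an assumption.

```python
class CheckpointedRAT:
    """Register alias table with per-branch snapshots for misprediction recovery."""

    def __init__(self, rat, max_checkpoints=48):
        self.rat = dict(rat)
        self.max_checkpoints = max_checkpoints
        self.checkpoints = []  # (branch_id, snapshot) in program order

    def on_branch(self, branch_id):
        """Snapshot the mapping as a branch is renamed."""
        if len(self.checkpoints) >= self.max_checkpoints:
            raise RuntimeError("no free checkpoint: allocation would stall (assumed)")
        self.checkpoints.append((branch_id, dict(self.rat)))

    def on_resolve(self, branch_id):
        """Correctly predicted branch: its snapshot is no longer needed."""
        self.checkpoints = [c for c in self.checkpoints if c[0] != branch_id]

    def on_mispredict(self, branch_id):
        """Restore the mapping taken at the branch and drop younger snapshots."""
        for i, (bid, snapshot) in enumerate(self.checkpoints):
            if bid == branch_id:
                self.rat = dict(snapshot)
                del self.checkpoints[i:]
                return
        raise KeyError(branch_id)


crat = CheckpointedRAT({"eax": "p0"})
crat.on_branch("b1")
crat.rat["eax"] = "p7"  # speculative rename after the branch
crat.on_mispredict("b1")
print(crat.rat)  # {'eax': 'p0'}
```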

Optimizations

Sunny Cove performs a number of optimizations before µOPs enter the out-of-order and renaming part of the pipeline. Three of those optimizations are Move Elimination, Zeroing Idioms, and Ones Idioms. Move elimination removes register-to-register moves (including chained moves) prior to bookkeeping at the ROB, so those µOPs consume no execution resources at all. Eliminated moves have zero latency and are entirely removed from the pipeline. This optimization does not always succeed; when it fails, the operands were simply not ready. On average, it is almost always successful (upward of 85% in most cases). Move elimination works on all 32- and 64-bit GP integer registers as well as all 128- and 256-bit vector registers.

Zeroing Idiom Example: `xor eax, eax`

Not only does this instruction get eliminated at the ROB, but it's also encoded in just 2 bytes (`31 C0`) versus the 5 bytes for `mov eax, 0x0`, which is encoded as `b8 00 00 00 00`.

There are some exceptions that Sunny Cove will not optimize, most dealing with signedness. Sign-extended moves cannot be eliminated, and neither can zero-extended moves from 16-bit to 32/64-bit registers (note that 8-bit to 32/64-bit works). Likewise, in the other direction, no moves to 8/16-bit registers can be eliminated. A move of a register to itself is never eliminated.
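The eligibility rules above can be collected into one predicate. This is a sketch with hypothetical parameters: `kind` is one of `"mov"`, `"movzx"`, or `"movsx"`, and widths of 128/256 stand for vector-register moves. A `True` result only means the renamer may try, since the text notes elimination does not always succeed at run time.

```python
def move_elimination_candidate(kind, dst_bits, src_bits, same_register):
    """Apply the listed eligibility rules to a register-to-register move.

    kind: "mov" (plain copy), "movzx" (zero-extend) or "movsx" (sign-extend).
    Returns False when the move can never be eliminated.
    """
    if same_register:
        return False  # a move of a register to itself
    if kind == "movsx":
        return False  # sign-extended moves
    if dst_bits in (8, 16):
        return False  # moves into 8/16-bit registers
    if kind == "movzx":
        return src_bits == 8 and dst_bits in (32, 64)  # 16 -> 32/64 excluded
    if kind == "mov":
        return src_bits == dst_bits and dst_bits in (32, 64, 128, 256)
    raise ValueError(f"unknown move kind: {kind}")


print(move_elimination_candidate("mov", 64, 64, False))    # True
print(move_elimination_candidate("movzx", 32, 16, False))  # False
print(move_elimination_candidate("movzx", 32, 8, False))   # True
```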

When instructions use registers that are independent of their prior values, another optimization opportunity can be exploited. A second common optimization performed in Sunny Cove around the same time is Zeroing Idioms elimination. A number of common zeroing idioms are recognized and eliminated in much the same way as move eliminations are performed. Sunny Cove recognizes instructions such as XOR, PXOR, and XORPS as zeroing idioms when the source and destination operands are the same. Those optimizations are done during renaming, at the same rate as renaming (up to 5 µOPs per cycle), and the register is simply set to zero.

The ones idiom is another dependency-breaking idiom that can be optimized. With any of the various PCMPEQx packed-compare instructions, comparing a register with itself always sets all bits to one. In those cases, while the µOP still has to be executed, the instruction may be scheduled as soon as possible because the current state of the register need not be known.
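The two dependency-breaking idioms can be told apart with a small classifier. This sketch recognizes only the examples named above (XOR, PXOR, XORPS, and the PCMPEQx family); the hardware's full list is not given in the text, and the function name and return convention are hypothetical.

```python
ZEROING = {"xor", "pxor", "xorps"}                     # examples named in the text
ONES = {"pcmpeqb", "pcmpeqw", "pcmpeqd", "pcmpeqq"}    # the PCMPEQx family


def classify_idiom(mnemonic, dst, src):
    """Classify a two-operand instruction as a dependency-breaking idiom.

    Returns (idiom, needs_execution): idiom is "zero", "ones" or None.
    Either idiom ignores the register's old value, so no dependency on it
    is recorded; only the ones idiom still needs an execution unit.
    """
    m = mnemonic.lower()
    if dst != src:
        return None, True
    if m in ZEROING:
        return "zero", False  # register simply set to zero at rename
    if m in ONES:
        return "ones", True   # executes, but can be scheduled immediately
    return None, True


print(classify_idiom("xor", "eax", "eax"))       # ('zero', False)
print(classify_idiom("pcmpeqd", "xmm1", "xmm1"))  # ('ones', True)
```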

Scheduler

The scheduler size itself has likely increased with Sunny Cove, although its exact capacity was not disclosed. In Skylake, Intel increased the scheduler by 50%, from 64 to 97 entries; it is therefore reasonable to expect Sunny Cove's to exceed 125 entries. Those entries are competitively shared between the two threads. Sunny Cove continues with a unified design, in contrast to designs such as AMD's Zen, which use split schedulers, each holding different types of µOPs. The scheduler includes the two register files for integers and vectors. It's in those register files that output operand data is stored. In Skylake, the integer register file was 180 entries deep. It's unknown whether, and by how much, that has changed in Sunny Cove.

At this point, µOPs are no longer fused and will be dispatched to the execution units independently. The scheduler holds the µOPs while they wait to be executed. A µOP may be waiting because an operand has not arrived (e.g., it is being fetched from memory or is still being calculated by another µOP) or because the execution unit it needs is busy. Once the µOP is ready, it is dispatched through its designated port. Each cycle, the scheduler sends the oldest ready µOP to each of the ten ports.

Sunny Cove's scheduler has been widened further with two additional ports that handle memory operations. This makes it 25% wider than Skylake's: up to 10 µOPs may be dispatched each cycle, versus 8. On the arithmetic side of the execution engine, the four workhorse ports were augmented with more functionality. On the vector side, Sunny Cove retains the three FMAs and ALUs. One of the key changes here is the addition of a new shuffle unit on Port 1 for moving data within a register.

Scheduler Ports & Execution Units

Scheduler Ports Designation

| Port | Designation |
|---|---|
| Port 0 | Integer/Vector Arithmetic, Multiplication, Logic, Shift, and String ops; FP Add, Multiply, FMA; Integer/FP Division and Square Root; AES Encryption; Branch2 |
| Port 1 | Integer/Vector Arithmetic, Multiplication, Logic, Shift, and Bit Scanning; FP Add, Multiply, FMA |
| Port 5 | Integer/Vector Arithmetic, Logic; Vector Permute; x87 FP Add, Composite Int, CLMUL |
| Port 6 | Integer Arithmetic, Logic, Shift; Branch |
| Port 2 | Load AGU |
| Port 3 | Load AGU |
| Port 4 | Store Data |
| Port 7 | Store AGU |
| Port 8 | Store AGU |
| Port 9 | Store Data |
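For quick what-if analysis, the port designations can be encoded as data. The minimal sketch below transcribes the port table into a dictionary and reports which ports mention a given capability. The substring matching and the names `PORTS` and `ports_for` are illustrative choices, not an Intel interface. Counting the memory-related ports gives six: two load AGUs, two store AGUs, and two store-data ports.

```python
# Port designations transcribed from the scheduler port table.
PORTS = {
    0: "Integer/Vector Arithmetic, Multiplication, Logic, Shift, and String ops; "
       "FP Add, Multiply, FMA; Integer/FP Division and Square Root; AES Encryption; Branch2",
    1: "Integer/Vector Arithmetic, Multiplication, Logic, Shift, and Bit Scanning; "
       "FP Add, Multiply, FMA",
    5: "Integer/Vector Arithmetic, Logic; Vector Permute; x87 FP Add, Composite Int, CLMUL",
    6: "Integer Arithmetic, Logic, Shift; Branch",
    2: "Load AGU",
    3: "Load AGU",
    4: "Store Data",
    7: "Store AGU",
    8: "Store AGU",
    9: "Store Data",
}


def ports_for(capability):
    """Ports whose designation mentions `capability` (case-insensitive substring)."""
    needle = capability.lower()
    return sorted(port for port, desc in PORTS.items() if needle in desc.lower())


memory_ports = sorted(ports_for("load agu") + ports_for("store"))
print(ports_for("fma"))   # [0, 1]
print(memory_ports)       # [2, 3, 4, 7, 8, 9]
```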

Execution Units

| Execution Unit | # of Units | Instructions |
|---|---|---|
| ALU | 4 | add, and, cmp, or, test, xor, movzx, movsx, mov, (v)movdqu, (v)movdqa, (v)movap*, (v)movup* |
| SHFT | 2 | sal, shl, rol, adc, sarx, adcx, adox, etc. |
| Slow Int | 1 | mul, imul, bsr, rcl, shld, mulx, pdep, etc. |
| BM | 2 | andn, bextr, blsi, blsmsk, bzhi, etc. |
| Vec ALU | 3 | (v)pand, (v)por, (v)pxor, (v)movq, (v)movq, (v)movap*, (v)movup*, (v)andp*, (v)orp*, (v)paddb/w/d/q, (v)blendv*, (v)blendp*, (v)pblendd |
| Vec_Shft | 2 | (v)psllv*, (v)psrlv*, vector shift count in imm8 |
| Vec Add | 2 | (v)addp*, (v)cmpp*, (v)max*, (v)min*, (v)padds*, (v)paddus*, (v)psign, (v)pabs, (v)pavgb, (v)pcmpeq*, (v)pmax, (v)cvtps2dq, (v)cvtdq2ps, (v)cvtsd2si, (v)cvtss2si |
| Shuffle | 2 | (v)shufp*, vperm*, (v)pack*, (v)unpck*, (v)punpck*, (v)pshuf*, (v)pslldq, (v)alignr, (v)pmovzx*, vbroadcast*, (v)pslldq, (v)psrldq, (v)pblendw |
| Vec Mul | 2 | (v)mul*, (v)pmul*, (v)pmadd* |
| SIMD Misc | 1 | STTNI, (v)pclmulqdq, (v)psadw, vector shift count in xmm |
| FP Mov | 1 | (v)movsd/ss, (v)movd gpr |
| DIVIDE | 1 | divp*, divs*, vdiv*, sqrt*, vsqrt*, rcp*, vrcp*, rsqrt*, idiv |

This table was taken verbatim from the Intel manual. Execution unit mapping for MMX instructions is not included.
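The execution-unit table can also be turned into a lookup that follows its notation. In that notation, `(v)` marks an optional VEX `v` prefix and `*` is a wildcard. The sketch below covers only a subset of the rows, chosen for illustration, and matches patterns with Python's standard `fnmatch.fnmatchcase`. It returns the first matching unit, so row order matters: plain `mul` must hit Slow Int before it reaches the `(v)mul*` pattern of Vec Mul. The helpers `expand` and `unit_for` are hypothetical names. This only illustrates how to read the table and is no substitute for Intel's documentation.

```python
from fnmatch import fnmatchcase

# Subset of the execution-unit table; patterns copied from its rows.
UNIT_PATTERNS = [
    ("ALU", ["add", "and", "cmp", "or", "test", "xor", "movzx", "movsx", "mov"]),
    ("Slow Int", ["mul", "imul", "bsr", "rcl", "shld", "mulx", "pdep"]),
    ("BM", ["andn", "bextr", "blsi", "blsmsk", "bzhi"]),
    ("Vec Add", ["(v)addp*", "(v)cmpp*", "(v)max*", "(v)min*"]),
    ("Shuffle", ["(v)shufp*", "vperm*", "(v)pack*", "(v)unpck*"]),
    ("Vec Mul", ["(v)mul*", "(v)pmul*", "(v)pmadd*"]),
    ("DIVIDE", ["divp*", "divs*", "vdiv*", "sqrt*", "vsqrt*", "idiv"]),
]


def expand(pattern):
    """'(v)addp*' -> ['addp*', 'vaddp*']; other patterns are returned unchanged."""
    if pattern.startswith("(v)"):
        base = pattern[3:]
        return [base, "v" + base]
    return [pattern]


def unit_for(mnemonic):
    """Name of the first unit whose pattern matches `mnemonic`, or None."""
    name = mnemonic.lower()
    for unit, patterns in UNIT_PATTERNS:
        for pattern in patterns:
            if any(fnmatchcase(name, p) for p in expand(pattern)):
                return unit
    return None


for m in ("vaddps", "mul", "vmulpd", "pdep", "vpermd", "sqrtsd"):
    print(m, "->", unit_for(m))
```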

Retirement

Once a µOP has executed, or in the case of fused µOPs once both µOPs have executed, it can be retired. Retirement happens in order and releases the resources the µOP held, such as its entry in the reorder buffer. With retirement/allocation widened from four to five in Sunny Cove, it's now possible to retire 5 instructions per cycle (5 unfused or 7 with fused ops).
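A toy model shows the effect of the wider retirement stage. The sketch below makes two simplifying assumptions: every reorder-buffer entry is a single unfused µOP, and a µOP may retire in the same cycle it finishes executing. `retire_schedule` is a hypothetical helper. The model enforces the two rules described above: retirement is in order, and at most `width` µOPs retire per cycle (5 for Sunny Cove, 4 for Skylake).

```python
from collections import deque


def retire_schedule(done_cycles, width=5):
    """Cycle in which each µOP retires, given the cycle it finished executing.

    done_cycles[i] belongs to the i-th µOP in program order. Retirement is in
    order: a finished µOP cannot retire past an older unfinished one, and at
    most `width` µOPs retire per cycle.
    """
    rob = deque(enumerate(done_cycles))  # toy reorder buffer, oldest on the left
    retired_at = [None] * len(done_cycles)
    cycle = 0
    while rob:
        retired_now = 0
        while rob and retired_now < width and rob[0][1] <= cycle:
            seq, _ = rob.popleft()
            retired_at[seq] = cycle
            retired_now += 1
        cycle += 1
    return retired_at


print(retire_schedule([0] * 7))            # [0, 0, 0, 0, 0, 1, 1]
print(retire_schedule([0] * 7, width=4))   # [0, 0, 0, 0, 1, 1, 1]
print(retire_schedule([3, 0, 0]))          # [3, 3, 3]
```

The last call shows why in-order retirement matters. The two younger µOPs finish in cycle 0 but cannot retire until the oldest one completes.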

Die

Core

- Intel 10 nm process

- Core from an Ice Lake (client) SoC

- ~6.91 mm² die size

- ~3.5 mm x ~1.97 mm

- Intel 10 nm process

- Core from an Ice Lake (server) SoC

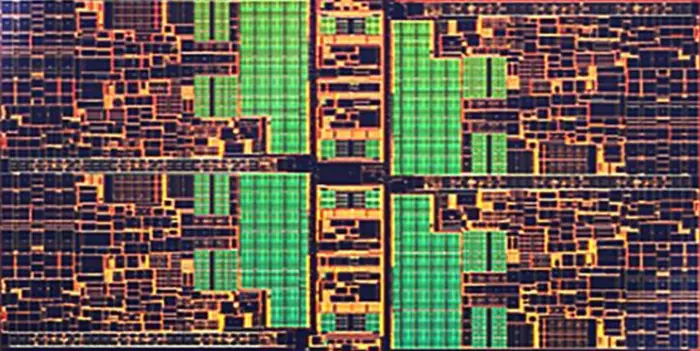

Core group

- Intel 10 nm process

- Quad-core from an Ice Lake SoC

- ~30.73 mm² die size

- ~7.86 mm x ~3.91 mm

Bibliography

- Intel Architecture Day 2018, December 11, 2018

| Property | Value |
|---|---|
| codename | Sunny Cove |
| core count | 2, 4, 8, 10, 12, 16, 18, 20, 24, 26, 28, 32, 36, 38 and 40 |
| designer | Intel |
| first launched | 2019 |
| full page name | intel/microarchitectures/sunny cove |
| instance of | microarchitecture |
| instruction set architecture | x86-64 |
| manufacturer | Intel |
| microarchitecture type | CPU |
| name | Sunny Cove |
| phase-out | 2021 |
| pipeline stages (max) | 19 |
| pipeline stages (min) | 14 |
| process | 10 nm (0.01 μm, 1.0e-5 mm) |