
| CHA µarch | |
|---|---|
| General Info | |
| Arch Type | CPU |
| Designer | Centaur Technology |
| Manufacturer | TSMC |
| Process | 16 nm |
| Core Configs | 8 |
| Instructions | |
| ISA | x86-64 |

CHA is a 16-nanometer x86 SoC microarchitecture designed by Centaur Technology for the server market.


Process technology


Architecture

Key changes from CN


Block Diagram

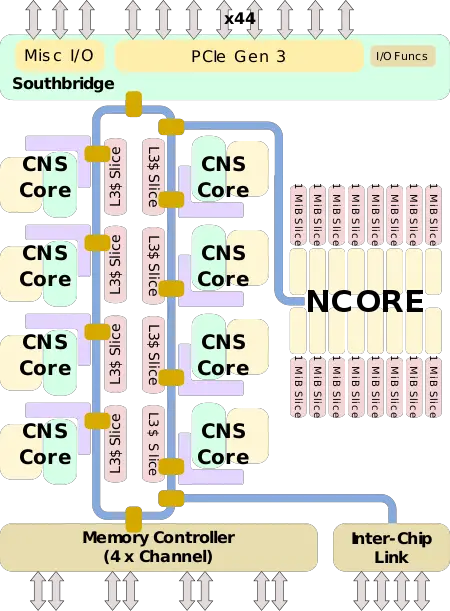

CHA SoC

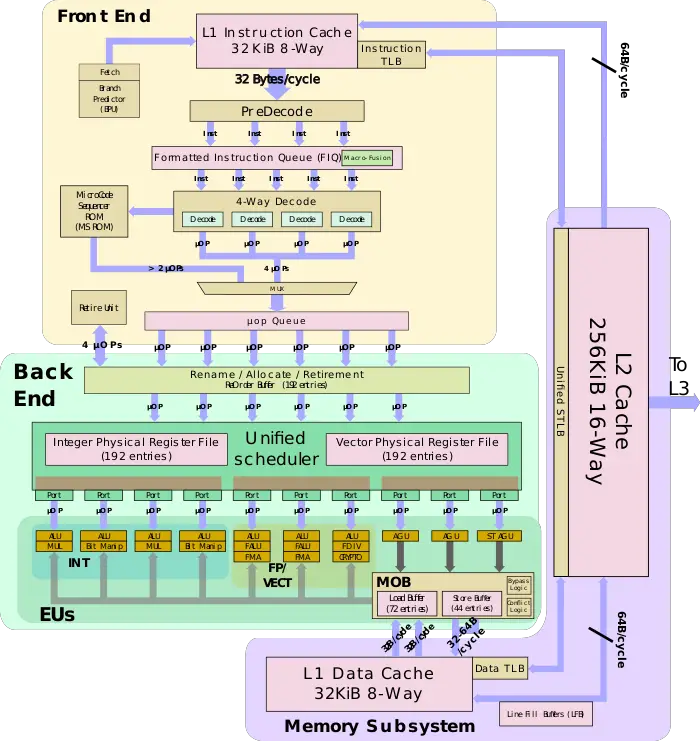

CNS Core

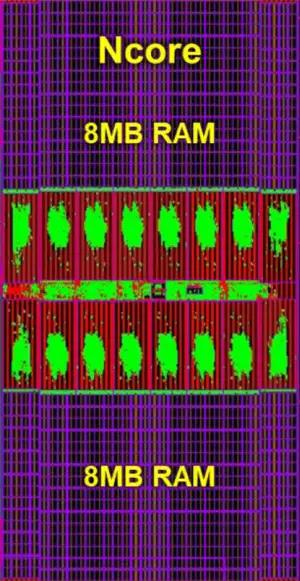

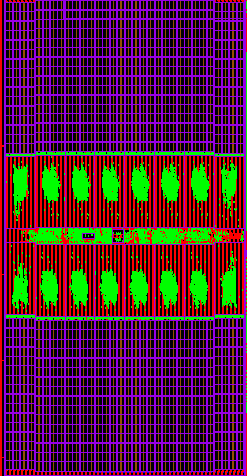

NCORE NPU

Overview

CHA is an x86 SoC designed by Centaur for the server, edge, and AI markets. Fabricated on TSMC's 16 nm process, the chip integrates eight new high-performance x86 "CNS" cores, interconnected by a ring, along with a high-performance "NCORE" neural processor. It is the first server x86 chip to integrate an AI accelerator on-die, and it is designed to reduce the need for separate AI acceleration hardware, simplifying the platform and lowering its cost.

The CHA SoC integrates both the southbridge and the northbridge on-die. The chip supports up to quad-channel DDR4 memory and up to 44 PCIe Gen 3 lanes. CHA can also link directly to a second CHA SoC in a two-way multiprocessing configuration.

Ring

CHA interconnects all of its on-chip components through a bidirectional ring. There is a dedicated ring stop for each of the eight CNS cores, attached at that core's L3 cache slice, plus one for the NCORE NPU, one for the southbridge, and one for the memory controller.
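Because a message can travel on either of the two opposing rings, the distance between two stops is the shorter of the two directions around the ring. The sketch below counts the eleven stops listed above and computes that minimum hop count. The physical order of the stops is not given in the text, so `RING_STOPS` is a hypothetical ordering, and the one-hop-per-cycle assumption follows the article's description of the ring.

```python
# Hypothetical stop order: the article lists the stops (eight CNS cores at their
# L3 slices, NCORE, southbridge, memory controller) but not their physical order.
RING_STOPS = [f"core{i}" for i in range(8)] + ["ncore", "southbridge", "memctrl"]


def hops(src: str, dst: str, stops: list[str] = RING_STOPS) -> int:
    """Minimum number of hops between two stops on a bidirectional ring.

    Traffic may use either of the two opposing unidirectional rings, so the
    shorter of the clockwise and counter-clockwise distances is taken.
    Assuming one hop per cycle, this is also the transfer latency in cycles.
    """
    n = len(stops)
    a, b = stops.index(src), stops.index(dst)
    clockwise = (b - a) % n
    return min(clockwise, n - clockwise)


if __name__ == "__main__":
    print(len(RING_STOPS))             # 11 ring stops
    print(hops("core0", "memctrl"))    # 1: shorter to go the other way around
    print(hops("core0", "core5"))      # 5
```

With eleven stops, no two stops are ever more than five hops apart, which is the main latency benefit of running traffic in both directions.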

The ring consists of two opposing unidirectional rings, each 512 bits wide. Every cycle, data moves from one ring stop to the next. The ring runs at the same clock frequency as the cores; at 2.5 GHz, it has a peak theoretical bidirectional bandwidth of 2.56 Tb/s (320 GB/s).
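The peak figure follows directly from the ring width, the number of rings, and the clock. The short calculation below reproduces it, assuming one 512-bit transfer per ring per cycle at the 2.5 GHz clock used in the text; the function name is illustrative only.

```python
RING_WIDTH_BITS = 512   # width of each unidirectional ring
NUM_RINGS = 2           # two opposing rings form the bidirectional ring
CLOCK_HZ = 2.5e9        # the ring runs at the core clock; 2.5 GHz as in the text


def ring_peak_bandwidth(width_bits=RING_WIDTH_BITS, rings=NUM_RINGS, clock_hz=CLOCK_HZ):
    """Return (bits/s, bytes/s) per ring link, both directions combined,
    assuming one full-width transfer per ring per cycle."""
    bits_per_s = width_bits * rings * clock_hz
    return bits_per_s, bits_per_s / 8


bits, bytes_ = ring_peak_bandwidth()
print(f"{bits / 1e12:.2f} Tb/s = {bytes_ / 1e9:.0f} GB/s")  # 2.56 Tb/s = 320 GB/s
```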

CNS Core


NCORE NPU

The AI accelerator coprocessor sits on the same ring as the rest of the chip, with its own dedicated ring stop. NCORE has two DMA channels capable of reading from and writing to the L3 cache slices, DRAM, and, in theory, I/O. Its architecture differs considerably from many of the dedicated neural processors developed by various startups: NCORE is an extremely wide 32,768-bit VLIW SIMD coprocessor. It is programmable and can control up to 4K lanes of logic each cycle, running at the same clock frequency as the CPU cores.
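A 32,768-bit vector divided into byte-wide lanes gives exactly the 4,096 ("4K") lanes mentioned above, which also equals 16 slices of 256 bytes each. The sketch below checks that arithmetic and models one SIMD operation applied to every lane at once. The byte-wide lane size, the contiguous lane-to-slice mapping, and the wrap-around add are assumptions for illustration; they are not NCORE's documented instruction semantics.

```python
VECTOR_BITS = 32_768               # NCORE SIMD width from the text
LANE_BITS = 8                      # assumption: byte-wide lanes ("4K lanes")
LANES = VECTOR_BITS // LANE_BITS   # 4,096
SLICES = 16                        # compute slices in the full CHA configuration
SLICE_BYTES = 256                  # width of each slice's SIMD unit

# The full vector is exactly the 16 slices laid side by side.
assert LANES == SLICES * SLICE_BYTES


def slice_of(lane: int) -> int:
    """Hypothetical mapping: each slice owns a contiguous group of 256 lanes."""
    return lane // SLICE_BYTES


def simd_add_u8(a: list[int], b: list[int]) -> list[int]:
    """Illustrative SIMD op: lane-wise 8-bit wrap-around add over all lanes."""
    if len(a) != LANES or len(b) != LANES:
        raise ValueError(f"operands must have {LANES} lanes")
    return [(x + y) & 0xFF for x, y in zip(a, b)]


a = [i % 256 for i in range(LANES)]
b = [200] * LANES
c = simd_add_u8(a, b)
print(LANES, slice_of(0), slice_of(4095))  # 4096 0 15
print(c[0], c[100])                        # 200 44
```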

Instructions are delivered to NCORE over the ring and stored in a centralized instruction unit, which incorporates a 12 KiB instruction cache and a 4 KiB instruction ROM. Each cycle, a single 128-bit instruction is fetched, decoded, and executed by a sequencer that simultaneously controls all the compute slices and memory. The instruction ROM holds validation code as well as commonly used functions. The sequencer incorporates a loop counter, various special registers, sixteen address registers, and dedicated hardware for the various addressing modes and auto-increment operations.
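With fixed 128-bit instructions, the 12 KiB instruction cache holds 768 instructions and the 4 KiB ROM holds 256. The sketch below computes those capacities and then gives a toy model of how a loop counter plus auto-incrementing address registers can step through memory rows without extra address arithmetic. `ToySequencer`, its `next_row`/`run_loop` methods, and the wrap-around at 2,048 rows (the per-bank row count from the slice description) are hypothetical; this is not NCORE's actual ISA.

```python
INSTR_BITS = 128            # one 128-bit instruction fetched per cycle
ICACHE_BYTES = 12 * 1024    # instruction cache
IROM_BYTES = 4 * 1024       # instruction ROM
ROWS = 2048                 # rows per NCORE RAM bank (from the slice description)


def instr_capacity(nbytes: int, instr_bits: int = INSTR_BITS) -> int:
    """Number of fixed-width instructions that fit in `nbytes` of storage."""
    return nbytes * 8 // instr_bits


class ToySequencer:
    """Hypothetical model of sequencer address generation.

    Holds sixteen address registers and a loop counter. Each access returns
    the row held in a register and then post-increments it, wrapping at ROWS.
    """

    NUM_ADDR_REGS = 16

    def __init__(self) -> None:
        self.addr = [0] * self.NUM_ADDR_REGS
        self.loop_count = 0

    def next_row(self, reg: int, step: int = 1) -> int:
        row = self.addr[reg]
        self.addr[reg] = (row + step) % ROWS  # post-increment with wrap-around
        return row

    def run_loop(self, iterations: int, reg: int, step: int = 1) -> list[int]:
        """Issue one access per iteration while counting the loop counter down."""
        rows = []
        self.loop_count = iterations
        while self.loop_count > 0:
            rows.append(self.next_row(reg, step))
            self.loop_count -= 1
        return rows


print(instr_capacity(ICACHE_BYTES), instr_capacity(IROM_BYTES))  # 768 256
seq = ToySequencer()
seq.addr[3] = 2044
print(seq.run_loop(4, reg=3, step=2))  # [2044, 2046, 0, 2]
```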

NCORE is built from small compute units called slices, an approach chosen to allow for future reconfigurability. The full CHA configuration has 16 slices. Each slice is a 256-byte-wide SIMD unit accompanied by its own slice of SRAM. This memory consists of two banks, D-RAM and W-RAM, each made up of 2,048 rows that are 256 bytes wide. Both RAMs use 64-bit ECC protection. With 1 MiB of memory per slice, NCORE has a total of 16 MiB of SRAM. In step with the compute, one bank can be written and both banks can be read each cycle. In both cases, the operation covers the full 4,096-byte vector, giving NCORE a peak theoretical bandwidth at 2.5 GHz of 10.2 TB/s per bank and 20.4 TB/s in total.
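The capacity and bandwidth figures can be derived from the row count, row width, bank count, and slice count. The calculation below assumes NCORE runs at the same 2.5 GHz clock used for the ring; it yields 10.24 and 20.48 TB/s, which the text rounds down to 10.2 and 20.4 TB/s.

```python
ROWS_PER_BANK = 2048
ROW_BYTES = 256
BANKS_PER_SLICE = 2        # D-RAM and W-RAM
SLICES = 16
CLOCK_HZ = 2.5e9           # assumption: NCORE at the 2.5 GHz core clock

bank_bytes = ROWS_PER_BANK * ROW_BYTES        # 524,288 B = 512 KiB
slice_bytes = bank_bytes * BANKS_PER_SLICE    # 1 MiB per slice
total_bytes = slice_bytes * SLICES            # 16 MiB across all slices

vector_bytes = ROW_BYTES * SLICES             # 4,096 B accessed per bank per cycle
per_bank_bw = vector_bytes * CLOCK_HZ         # bytes/s for one bank
both_banks_bw = per_bank_bw * BANKS_PER_SLICE # both banks read in the same cycle

print(bank_bytes // 1024, "KiB per bank")
print(slice_bytes // 2**20, "MiB per slice;", total_bytes // 2**20, "MiB total")
print(f"{per_bank_bw / 1e12:.2f} TB/s per bank, {both_banks_bw / 1e12:.2f} TB/s total")
```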

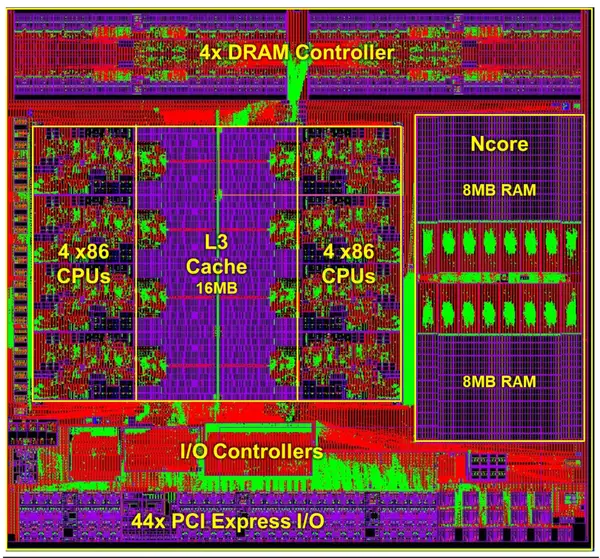

Die

SoC

- TSMC 16 nm process

- 195 mm²

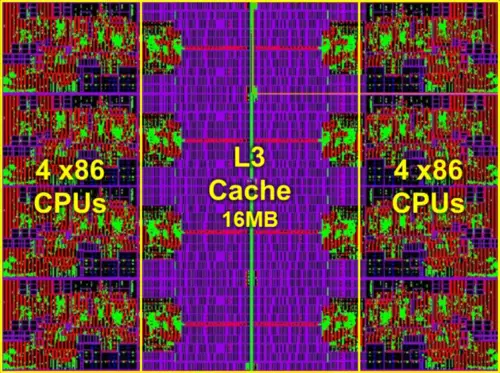

Core group

NCORE


