(→Implementation) |

(Added FP16 and an instruction list.) |

||

| (23 intermediate revisions by 7 users not shown) | |||

| Line 1: | Line 1: | ||

| − | {{x86 title|AVX-512}}{{x86 isa main}} | + | {{x86 title|Advanced Vector Extensions 512 (AVX-512)}}{{x86 isa main}} |

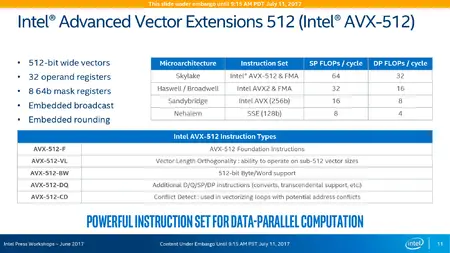

| − | '''AVX-512''' is collective name for a number of {{arch|512}} [[SIMD]] [[x86]] [[instruction set]] extensions. The {{x86|extensions}} | + | '''Advanced Vector Extensions 512''' ('''AVX-512''') is the collective name for a number of {{arch|512}} [[SIMD]] [[x86]] [[instruction set]] extensions. The {{x86|extensions}} were formally introduced by [[Intel]] in July [[2013]], with the first general-purpose microprocessors implementing them introduced in July [[2017]]. |

== Overview == | == Overview == | ||

AVX-512 is a set of {{arch|512}} [[SIMD]] extensions that allow programs to pack sixteen [[single-precision]] or eight [[double-precision]] [[floating-point]] numbers, or eight 64-bit or sixteen 32-bit integers, within 512-bit vectors. The extensions provide double the computation capability of {{x86|AVX}}/{{x86|AVX2}}. | AVX-512 is a set of {{arch|512}} [[SIMD]] extensions that allow programs to pack sixteen [[single-precision]] or eight [[double-precision]] [[floating-point]] numbers, or eight 64-bit or sixteen 32-bit integers, within 512-bit vectors. The extensions provide double the computation capability of {{x86|AVX}}/{{x86|AVX2}}. | ||

| − | + | ; '''AVX512F''' - {{x86|AVX512F|'''AVX-512 Foundation'''}} | |

| + | : Base of the 512-bit SIMD instruction extensions, providing a comprehensive set of features for most HPC and enterprise applications. AVX-512 Foundation is the natural extension of AVX/AVX2, encoded using the {{x86|EVEX}} prefix, which builds on the existing {{x86|VEX}} prefix. Any processor that implements any portion of the AVX-512 extensions MUST implement AVX512F. | ||

| − | + | ; '''AVX512CD''' - {{x86|AVX512CD|'''AVX-512 Conflict Detection Instructions'''}} | |

| + | : Enables vectorization of loops with possible address conflict. | ||

| − | + | ; '''AVX512PF''' - {{x86|AVX512PF|'''AVX-512 Prefetch Instructions'''}} | |

| + | : Adds new prefetch instructions matching the gather/scatter instructions introduced by AVX512F. | ||

| − | + | ; '''AVX512ER''' - {{x86|AVX512ER|'''AVX-512 Exponential and Reciprocal Instructions'''}} ('''ERI''') | |

| + | : Doubles the precision of the RCP, RSQRT and EXP2 approximation instructions from 14 to 28 bits. | ||

| − | + | ; '''AVX512BW''' - {{x86|AVX512BW|'''AVX-512 Byte and Word Instructions'''}} | |

| + | : Adds new and supplemental 8-bit and 16-bit integer instructions, mostly promoting legacy AVX and AVX-512 instructions. | ||

| − | + | ; '''AVX512DQ''' - {{x86|AVX512DQ|'''AVX-512 Doubleword and Quadword Instructions'''}} | |

| + | : Adds new and supplemental 32-bit and 64-bit integer and floating point instructions. | ||

| − | + | ; '''AVX512VL''' - '''AVX-512 Vector Length''' | |

| + | : Adds vector length orthogonality. This is not an extension so much as a feature flag indicating that the supported AVX-512 instructions operating on 512-bit vectors can also operate on 128- and 256-bit vectors like {{x86|SSE}} and {{x86|AVX}} instructions. | ||

| − | + | ; '''AVX512_IFMA''' - {{x86|AVX512_IFMA|'''AVX-512 Integer Fused Multiply-Add'''}} | |

| + | : Fused multiply-add of 52-bit integers. | ||

| − | + | ; '''AVX512_VBMI''' - {{x86|AVX512_VBMI|'''AVX-512 Vector Bit Manipulation Instructions'''}} | |

| + | : Byte permutation instructions, including one shifting unaligned bytes. | ||

| − | + | ; '''AVX512_VBMI2''' - {{x86|AVX512_VBMI2|'''AVX-512 Vector Bit Manipulation Instructions 2'''}} | |

| + | : Bytewise compress/expand instructions and bitwise funnel shifts. | ||

| − | + | ; '''AVX512_BITALG''' - {{x86|AVX512_BITALG|'''AVX-512 Bit Algorithms'''}} | |

| + | : Parallel population count in bytes or words and a bit shuffle instruction. | ||

| − | + | ; '''AVX512_VPOPCNTDQ''' - {{x86|AVX512_VPOPCNTDQ|'''AVX-512 Vector Population Count Doubleword and Quadword'''}} | |

| + | : Parallel population count in doublewords or quadwords. | ||

| + | |||

| + | ; '''AVX512_4FMAPS''' - {{x86|AVX512_4FMAPS|'''AVX-512 Fused Multiply-Accumulate Packed Single Precision'''}} | ||

| + | : Floating-point single precision multiply-accumulate, four iterations, instructions for deep learning. | ||

| + | |||

| + | ; '''AVX512_4VNNIW''' - {{x86|AVX512_4VNNIW|'''AVX-512 Vector Neural Network Instructions Word Variable Precision'''}} | ||

| + | : 16-bit integer multiply-accumulate, four iterations, instructions for deep learning. | ||

| + | |||

| + | ; '''AVX512_FP16''' - {{x86|AVX512_FP16|'''AVX-512 FP16 Instructions'''}} | ||

| + | : Adds support for 16-bit half precision floating point values, promoting nearly all floating point instructions introduced by AVX512F. | ||

| + | |||

| + | ; '''AVX512_BF16''' - {{x86|AVX512_BF16|'''AVX-512 BFloat16 Instructions'''}} | ||

| + | : {{link|BFloat16}} multiply-add and conversion instructions for deep learning. | ||

| + | |||

| + | ; '''AVX512_VNNI''' - {{x86|AVX512_VNNI|'''AVX-512 Vector Neural Network Instructions'''}} | ||

| + | : 8- and 16-bit multiply-add instructions for deep learning. | ||

| + | |||

| + | ; '''AVX512_VP2INTERSECT''' - {{x86|AVX512_VP2INTERSECT|'''AVX-512 Intersect Instructions'''}} | ||

| + | : ... | ||

| + | |||

| + | The following extensions add instructions in AVX-512, AVX, and (in the case of GFNI) SSE versions and may be available on x86 CPUs without AVX-512 or even AVX support. | ||

| + | |||

| + | ; '''VAES''' - {{x86|VAES|'''Vector AES Instructions'''}} | ||

| + | : Parallel {{wp|Advanced Encryption Standard|AES}} decryption and encryption instructions. Expands the earlier AESNI extension, which adds SSE and AVX versions of these instructions operating on 128-bit vectors, with support for 256- and 512-bit vectors and AVX-512 features. | ||

| + | |||

| + | ; '''VPCLMULQDQ''' - {{x86|VPCLMULQDQ|'''Vector Carry-Less Multiplication of Quadwords'''}} | ||

| + | : Expands the earlier {{x86|PCLMULQDQ}} extension, which adds SSE and AVX instructions performing this operation on 128-bit vectors, with support for 256- and 512-bit vectors and AVX-512 features. | ||

| + | |||

| + | ; '''GFNI''' - {{x86|GFNI|'''Galois Field New Instructions'''}} | ||

| + | : Adds Galois Field transformation instructions. | ||

| + | |||

| + | Note that, | ||

| + | |||

| + | * Formerly, the term '''AVX3.1''' referred to <code>F + CD + ER + PF</code>. | ||

| + | * Formerly, the term '''AVX3.2''' referred to <code>F + CD + BW + DQ + VL</code>. | ||

| + | |||

| + | == Registers == | ||

| + | AVX-512 defines 32 512-bit vector registers ZMM0 ... ZMM31. They are shared by integer and floating point vector instructions and can contain 64 bytes, or 32 16-bit words, or 16 doublewords, or 8 quadwords, or 32 half precision, 16 single precision, 16 {{link|BFloat16}}, or 8 double precision IEEE 754 floating point values. Whether integer values are interpreted as signed or unsigned depends on the instruction. As x86 is a little-endian architecture, elements are numbered from zero beginning at the least significant byte of the register, and vectors are stored in memory LSB to MSB regardless of vector size and element type. Some instructions group elements in lanes. A pair of single precision values in the second 64-bit lane, for instance, refers to bits 64 ... 95 and 96 ... 127 of the register, again counting from the least significant bit. | ||
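
The element numbering described above can be illustrated with a minimal Python sketch. The helper name `element_bit_range` and the register content are hypothetical; the code only models the bit arithmetic and does not touch real registers.

```python
def element_bit_range(index, element_bits):
    """Return (lowest_bit, highest_bit) of element `index` in a vector register.

    Elements are numbered from zero, starting at the least significant bit.
    """
    low = index * element_bits
    return low, low + element_bits - 1


# The pair of single precision values in the second 64-bit lane
# are elements 2 and 3 of the register.
print(element_bit_range(2, 32))  # (64, 95)
print(element_bit_range(3, 32))  # (96, 127)

# A 512-bit register holds 64 bytes. Little-endian storage places the
# least significant byte at the lowest memory address.
register_value = 0x0102  # hypothetical register content
memory_image = register_value.to_bytes(64, "little")
print(memory_image[0], memory_image[1])  # 2 1
```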

| + | |||

| + | The earlier AVX extension, which supports only 128- and 256-bit vectors, similarly defines 16 256-bit vector registers YMM0 ... YMM15. These are aliased to the lower half of the registers ZMM0 ... ZMM15. The still earlier SSE extension defines 16 128-bit vector registers XMM0 ... XMM15. These are aliased to the lowest quarter of the registers ZMM0 ... ZMM15. That means AVX-512 instructions can access the results of AVX and SSE instructions and to some degree vice versa. MMX instructions, the oldest vector instructions on x86 processors, use different registers. The full set of registers is only available in x86-64 mode. In other modes SSE, AVX, and AVX-512 instructions can only access the first eight registers. | ||

| + | |||

| + | AVX-512 instructions generally operate on 512-bit vectors. Often variants operating on 128- and 256-bit vectors are also available, and sometimes only a 128-bit variant. Shorter vectors are stored towards the LSB of the vector registers. Accordingly, in assembler code the vector size is implied by the register names XMM, YMM, and ZMM. AVX-512 instructions can access 32 registers under each of these names. | ||

| + | |||

| + | Use of 128- and 256-bit vectors can reduce memory traffic and improve performance on implementations which complete 512-bit instructions as two or even four internal operations. This also explains why many horizontal operations are confined to 128-bit lanes. Motivations for such implementations include a reduction of execution resources due to die size or complexity constraints, or performance optimizations where execution resources are dynamically disabled when power or thermal limits are reached, instead of downclocking the CPU core, which would also slow down non-critical instructions. If AVX-512 features are not needed, shorter equivalent AVX instructions may also improve instruction cache utilization. | ||

| + | |||

| + | == Write masking == | ||

| + | Most AVX-512 instructions support write masking. That means they can write individual elements in the destination vector unconditionally, leave them unchanged, or zero them if the corresponding bit in a mask register supplied as an additional source operand is zero. The masking mode is encoded in the instruction opcode. AVX-512 defines 8 64-bit mask registers K0 ... K7. Masks can vary in width from 1 to 64 bits depending on the instruction (e.g. some operating on a single vector element) and the number of elements in the vector. They are likewise stored towards the LSB of the mask registers. | ||

| + | |||

| + | One application of write masking is predication. In vectorized code conditional operations on individual elements are not possible. A solution is to form predicates by comparing elements and to execute the conditional operations regardless of outcome, but skip the writes for results where the predicate is false. This was already possible with MMX compare and bitwise logical instructions; write masking improves code density by blending results as a side effect. Another application is operations on arrays which do not match the vector sizes supported by AVX-512 or which are inconveniently aligned. | ||
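
A minimal Python sketch of the two masking modes and of a predicated operation follows. It models vectors as lists and the mask as an integer; the function name `apply_write_mask` is hypothetical and not part of any real API.

```python
def apply_write_mask(old_dest, result, mask, zeroing=False):
    """Blend `result` into `old_dest` element by element.

    Bit i of `mask` selects element i. Where the bit is 1 the new result is
    written. Where it is 0 the old element is kept (merge masking) or
    replaced by zero (zero masking).
    """
    out = []
    for i, (old, new) in enumerate(zip(old_dest, result)):
        if (mask >> i) & 1:
            out.append(new)
        else:
            out.append(0 if zeroing else old)
    return out


# Predicated operation: add 10 only to the negative elements.
dest = [5, -3, 7, -1]
predicate = sum(1 << i for i, x in enumerate(dest) if x < 0)  # bits 1 and 3 set
added = [x + 10 for x in dest]  # computed for all elements regardless of outcome

print(apply_write_mask(dest, added, predicate))                # [5, 7, 7, 9]
print(apply_write_mask(dest, added, predicate, zeroing=True))  # [0, 7, 0, 9]
```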

| + | |||

| + | == Memory fault suppression == | ||

| + | AVX-512 supports memory fault suppression. A memory fault occurs, for instance, if a vector loaded from memory crosses a page boundary and one of the pages is unreadable because no physical memory was allocated to that virtual address. Programmers and compilers can avoid this by aligning vectors, but a vectorized function that receives pointers as parameters would otherwise need code handling these edge cases at runtime. With memory fault suppression it can simply mask out the bytes it is not supposed to load from or store to memory, and no exceptions are generated for those bytes. | ||
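
The idea can be sketched in Python under simplifying assumptions: a list stands in for readable memory, and an `IndexError` stands in for a page fault. The helpers `masked_load` and `tail_mask` and the vector width of 8 elements are hypothetical, chosen only for illustration.

```python
def masked_load(memory, base, mask, num_elements):
    """Load `num_elements` elements starting at `base`, skipping masked-out ones.

    Indexing past the end of `memory` raises IndexError, which stands in for
    a page fault. Elements whose mask bit is 0 are never accessed and read
    as zero, so they cannot fault.
    """
    return [
        memory[base + i] if (mask >> i) & 1 else 0
        for i in range(num_elements)
    ]


def tail_mask(remaining, num_elements):
    """Mask selecting the first min(remaining, num_elements) elements."""
    return (1 << min(remaining, num_elements)) - 1


memory = list(range(100, 110))  # only 10 readable elements
width = 8                       # hypothetical vector width in elements

# Second iteration: 2 elements remain, so a full 8-element load would fault.
mask = tail_mask(len(memory) - 8, width)
print(bin(mask))                            # 0b11
print(masked_load(memory, 8, mask, width))  # [108, 109, 0, 0, 0, 0, 0, 0]
```

Calling `masked_load(memory, 8, 0xFF, width)` with all mask bits set raises `IndexError` in this model, which corresponds to the fault an unmasked load would trigger.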

| + | |||

| + | == Integer instructions == | ||

| + | Common aspects: | ||

| + | * Parallel operations are performed on the corresponding elements of the destination and source operands. | ||

| + | * A "word" is 16 bits wide, a "doubleword" 32 bits, a "quadword" 64 bits. | ||

| + | * Signed saturation: min(max(-2<sup>W-1</sup>, x), 2<sup>W-1</sup> - 1), | ||

| + | * Unsigned saturation: min(max(0, x), 2<sup>W</sup> - 1), where W is the destination data type width in bits. | ||

| + | * Except as noted, the destination operand and the first of two source operands are vector registers. | ||

| + | * If the destination is a vector register and the vector size is less than 512 bits, AVX and AVX-512 instructions zero the unused higher bits to avoid a dependency on earlier instructions writing those bits. If the destination is a mask register, mask bits left unused due to the vector and element size are cleared. | ||

| + | * Except as noted the second or only source operand can be | ||

| + | ** a vector register, | ||

| + | ** a vector in memory, | ||

| + | ** or a single element in memory broadcast to all elements in the vector. The broadcast option is limited to AVX-512 instructions operating on doublewords or quadwords. | ||

| + | * Some instructions use an immediate value as an additional operand, a byte which is part of the opcode. | ||
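
The saturation formulas in the list above can be written out as a short Python sketch. The function names are hypothetical; `truncate` models the non-saturating case, in which only the low W bits of the result are kept.

```python
def saturate_signed(x, width):
    """Clamp x to the range of a signed integer with `width` bits."""
    return min(max(-(1 << (width - 1)), x), (1 << (width - 1)) - 1)


def saturate_unsigned(x, width):
    """Clamp x to the range of an unsigned integer with `width` bits."""
    return min(max(0, x), (1 << width) - 1)


def truncate(x, width):
    """Keep only the low `width` bits (wrap-around, no saturation)."""
    return x & ((1 << width) - 1)


# Byte-sized results (W = 8) under the three modes:
print(truncate(200 + 100, 8))           # 44
print(saturate_unsigned(200 + 100, 8))  # 255
print(saturate_signed(100 + 100, 8))    # 127
print(saturate_signed(-100 - 100, 8))   # -128
print(saturate_unsigned(50 - 100, 8))   # 0
```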

| + | |||

| + | The table below lists all AVX-512 instructions operating on integer values. The columns on the right show the x86 extension which introduced the instruction, broken down by instruction encoding and supported vector size in bits. For brevity mnemonics are abbreviated: "(V)PMULLW" means PMULLW (MMX/SSE variant) and VPMULLW (AVX/AVX-512). "P(MIN/MAX)(SD/UD)" means PMINSD, PMINUD, PMAXSD, and PMAXUD, instructions computing the minimum or maximum of signed or unsigned doublewords. | ||
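
The abbreviation scheme can be made concrete with a small Python sketch that expands an abbreviated mnemonic into the individual mnemonics. It assumes that a parenthesized group without a slash, such as `(V)`, is optional, and that an empty alternative, as in `(/S/US)`, stands for no suffix. The function name `expand_mnemonic` is hypothetical.

```python
import itertools
import re


def expand_mnemonic(pattern):
    """Expand an abbreviated mnemonic such as "P(MIN/MAX)(SD/UD)"."""
    # re.split with a capturing group alternates literal text and group bodies.
    parts = re.split(r"\(([^()]*)\)", pattern)
    choices = []
    for i, part in enumerate(parts):
        if i % 2 == 0:
            choices.append([part])      # literal text between groups
        else:
            alternatives = part.split("/")
            if len(alternatives) == 1:  # "(V)" means: with or without V
                alternatives = ["", alternatives[0]]
            choices.append(alternatives)
    return ["".join(combo) for combo in itertools.product(*choices)]


print(expand_mnemonic("(V)PMULLW"))
# ['PMULLW', 'VPMULLW']
print(expand_mnemonic("P(MIN/MAX)(SD/UD)"))
# ['PMINSD', 'PMINUD', 'PMAXSD', 'PMAXUD']
```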

| + | |||

| + | {| class="wikitable" | ||

| + | ! style="width:100%" rowspan="2" | Instruction | ||

| + | ! MMX !! SSE !! colspan="2" | AVX !! colspan="3" | AVX-512 | ||

| + | |- | ||

| + | ! 64 !! 128 !! 128 !! 256 !! 128 !! 256 !! 512 | ||

| + | |||

| + | |- | ||

| + | | <code>(V)P(ADD/SUB)(/S/US)(B/W)</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>{{vanchor|(V)PADD(D/Q)|VPADDD}}</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>(V)PSUB(D/Q)</code> | ||

| + | | SSE2 || SSE2 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel addition or subtraction (source1 - source2) of bytes, words, doublewords, or quadwords. The result is truncated (), or stored with signed (S) or unsigned saturation (US). | ||

| + | |||

| + | |- | ||

| + | | <code>(V)P(MIN/MAX)(SB/UW)</code> | ||

| + | | - || SSE4_1 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>(V)P(MIN/MAX)(UB/SW)</code> | ||

| + | | SSE || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>(V)P(MIN/MAX)(SD/UD)</code> | ||

| + | | - || SSE4_1 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>(V)P(MIN/MAX)(SQ/UQ)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel minimum or maximum of signed or unsigned bytes, words, doublewords, or quadwords. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PABS(B/W)</code> | ||

| + | | SSSE3 || SSSE3 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>(V)PABSD</code> | ||

| + | | SSSE3 || SSSE3 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>VPABSQ</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel absolute value of signed bytes, words, doublewords, or quadwords. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PMULLW</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>(V)PMULLD</code>, <code>(V)PMULDQ</code> | ||

| + | | - || SSE4_1 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>(V)PMULLQ</code> | ||

| + | | - || - || - || - || DQ+VL || DQ+VL || DQ | ||

| + | |- | ||

| + | | colspan="8" | Parallel multiplication of words (<code>PMULLW</code>), doublewords (<code>PMULLD</code>), or quadwords (<code>PMULLQ</code>). These instructions store the lower half of the product in the corresponding words, doublewords, or quadwords of the destination vector. <code>PMULDQ</code> instead multiplies the signed doublewords in the lower half of each 64-bit lane of the source operands and stores the 64-bit quadword product in the same lane of the destination vector. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PMULHW</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>(V)PMULHUW</code> | ||

| + | | SSE || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>(V)PMULHRSW</code> | ||

| + | | SSSE3 || SSSE3 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | colspan="8" | Parallel multiplication of signed words (<code>PMULHW</code>, <code>PMULHRSW</code>) or unsigned words (<code>PMULHUW</code>). <code>PMULHW</code> and <code>PMULHUW</code> store the upper half of the 32-bit product in the corresponding words of the destination vector. <code>PMULHRSW</code> instead scales and rounds the product and stores the low 16 bits of: | ||

| + | |||

| + | dest = (source1 * source2 + 2<sup>14</sup>) >> 15 | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PMULUDQ</code> | ||

| + | | SSE2 || SSE2 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel multiplication of unsigned doublewords. The instruction reads only the doublewords in the lower half of each 64-bit lane of the source operands and stores the 64-bit quadword product in the same lane of the destination vector. | ||

| + | |||

| + | |- | ||

| + | | <code>{{vanchor|(V)PMADDWD|VPMADDWD}}</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | colspan="8" | Multiplies the corresponding signed words of the source operands, adds the 32-bit products from the even and odd lanes, and stores the results in the doublewords of the destination vector. The results overflow (wrap around to -2<sup>31</sup>) if all four inputs are -32768. Broadcasting is not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PMADDUBSW</code> | ||

| + | | - || SSSE3 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | colspan="8" | Multiplies the ''unsigned'' bytes in the first source operand with the corresponding ''signed'' bytes in the second source operand, adds the 16-bit products from the even and odd lanes, and stores the results with signed saturation in the 16-bit words of the destination vector. | ||

| + | |||

| + | |- | ||

| + | | <code>VPMADD52(L/H)UQ</code> | ||

| + | | - || - || AVX-IFMA || AVX-IFMA || IFMA+VL || IFMA+VL || IFMA | ||

| + | |- | ||

| + | | colspan="8" | Parallel multiply-accumulate. The instructions multiply the 52 least significant bits in corresponding unsigned quadwords of the source operands, then add the lower (L) or upper (H) half of the 104-bit product, zero-extended from 52 to 64 bits, to the corresponding quadword of the destination vector. | ||

| + | |||

| + | |- | ||

| + | | <code>VP4DPWSSD(/S)</code> | ||

| + | | - || - || - || - || - || - || 4VNNIW | ||

| + | |- | ||

| + | | colspan="8" | | ||

| + | Dot product of signed 16-bit words, accumulated in doublewords, four iterations. | ||

| + | |||

| + | These instructions use two 512-bit vector operands. The first one is a vector register, the second one is obtained by reading a 128-bit vector from memory and broadcasting it to the four 128-bit lanes of a 512-bit vector. The instructions multiply the 32 corresponding signed words in the source operands, then add the signed 32-bit products from the even lanes, odd lanes, and the 16 signed doublewords in the 512-bit destination vector register and store the sums in the destination. Finally the instructions increment the number of the source register by one modulo four, and repeat these operations three more times, reading four vector registers total in a 4-aligned block, e.g. ZMM12 ... ZMM15. Exceptions can occur in each iteration. Write masking is supported. | ||

| + | |||

| + | <code>VP4DPWSSD</code> can be replaced by 16 <code>{{x86|avx-512#VPDPWSSD|VPDPWSSD}}</code> instructions (from the later {{x86|AVX512_VNNI}} extension) working on 128-bit vectors, or four 512-bit instructions and a memory load with broadcast. | ||

| + | |||

| + | <code>VP4DPWSSDS</code> performs the same operations except the 33-bit intermediate sum is stored in the destination with signed saturation: | ||

| + | |||

| + | dest = min(max(-2<sup>31</sup>, even + odd + dest), 2<sup>31</sup> - 1) | ||

| + | |||

| + | Its VNNI equivalent is <code>{{x86|avx-512#VPDPWSSD|VPDPWSSDS}}</code>. | ||

| + | |||

| + | |- | ||

| + | | <code>VPDPBUSD(/S)</code> | ||

| + | | - || - || AVX-VNNI || AVX-VNNI || VNNI+VL || VNNI+VL || VNNI | ||

| + | |- | ||

| + | | colspan="8" | ... | ||

| + | |||

| + | |- | ||

| + | | <code>{{vanchor|VPDPWSSD}}(/S)</code> | ||

| + | | - || - || AVX-VNNI || AVX-VNNI || VNNI+VL || VNNI+VL || VNNI | ||

| + | |- | ||

| + | | colspan="8" | Dot product of signed words, accumulated in doublewords. The instructions multiply the corresponding signed words of the source operands, add the signed 32-bit products from the even lanes, odd lanes, and the signed doublewords of the destination vector and store the sums in the destination. <code>VPDPWSSDS</code> performs the same operation except the 33-bit intermediate sum is stored with signed saturation: | ||

| + | |||

| + | dest = min(max(-2<sup>31</sup>, even + odd + dest), 2<sup>31</sup> - 1) | ||

| + | |||

| + | <code>VPDPWSSD</code> fuses two older instructions <code>{{link|#VPMADDWD}}</code> which computes the products and even/odd sums, and <code>{{link|#VPADDD}}</code> to accumulate the results. <code>VPDPWSSDS</code> cannot be easily replaced because <code>VPMADDWD</code> overflows if all four inputs are equal to -32768 and there is no instruction to add doublewords with saturation. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PCMP(EQ/GT)(B/W)</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>(V)PCMP(EQ/GT)D</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>(V)PCMPEQQ</code> | ||

| + | | - || SSE4_1 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>(V)PCMPGTQ</code> | ||

| + | | - || SSE4_2 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel compare with predicate equal or greater-than of the signed bytes, words, doublewords, or quadwords in the first and second source operand. The MMX, SSE, and AVX versions store the result, -1 = true or 0 = false, in the corresponding elements of the destination, a vector register. These can be used as masks in bitwise logical operations to emulate predicated vector instructions. The AVX-512 versions set the corresponding bits in the destination mask register to 1 = true or 0 = false. | ||

| + | |||

| + | |- | ||

| + | | <code>VPCMP(B/UB/W/UW)</code> | ||

| + | | - || - || - || - || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>VPCMP(D/UD/Q/UQ)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel compare operation of the signed or unsigned bytes, words, doublewords, or quadwords in the first and second source operand. One of 8 operations (EQ, LT, LE, F, NEQ, NLT, NLE, T) is selected by an immediate byte. The instructions set the corresponding bits in the destination mask register to 1 = true or 0 = false. The instructions support write masking which performs a bitwise 'and' on the destination using a second mask register. | ||

| + | |||

| + | |- | ||

| + | | <code>VPTESTM(B/W)</code>, <code>VPTESTNM(B/W)</code> | ||

| + | | - || - || - || - || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>VPTESTM(D/Q)</code>, <code>VPTESTNM(D/Q)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | These instructions perform a parallel bitwise 'and' on the bytes, words, doublewords, or quadwords of the source operands, then set the corresponding bit in the destination mask register to 1 if the result for that element is non-zero (TESTM) or zero (TESTNM). | ||

| + | |||

| + | |- | ||

| + | | <code>(V)P(AND/ANDN/OR/XOR)(/D/Q)</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel bitwise logical operations. The <code>ANDN</code> operation is (not source1) and source2. The AVX-512 versions operate on doublewords or quadwords; the distinction is necessary because these instructions support write masking, which observes the number of elements in the vector. | ||

| + | |||

| + | |- | ||

| + | | <code>VPTERNLOG(D/Q)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel bitwise ternary logical operations on doublewords or quadwords. These instructions concatenate the corresponding bits of the destination, first, and second source operand into a 3-bit index which selects a bit from an 8-bit lookup table provided by an immediate byte, and store it in the corresponding bit of the destination. In other words they can perform any bitwise boolean operation with up to three inputs. The data type distinction is necessary because these instructions support write masking which observes the number of elements in the vector. | ||

| + | |||

| + | |- | ||

| + | | <code>VPOPCNT(B/W)</code> | ||

| + | | - || - || - || - || BITALG+VL || BITALG+VL || BITALG | ||

| + | |- | ||

| + | | <code>VPOPCNT(D/Q)</code> | ||

| + | | - || - || - || - || VPOPC+VL || VPOPC+VL || VPOPC | ||

| + | |- | ||

| + | | colspan="8" | Parallel count of the number of set bits in bytes, words, doublewords, or quadwords. | ||

| + | |||

| + | |- | ||

| + | | <code>VPLZCNT(D/Q)</code> | ||

| + | | - || - || - || - || CD+VL || CD+VL || CD | ||

| + | |- | ||

| + | | colspan="8" | Parallel count of the number of leading (most significant) zero bits in doublewords or quadwords. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PS(LL/RA/RL)W</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>(V)PS(LL/RA/RL)(D/Q)</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel bitwise left shift (PSLL) or logical (unsigned) right shift (PSRL) or arithmetic (signed) right shift (PSRA) of the words, doublewords, or quadwords in the first or only source operand. The amount can be a constant provided by an immediate byte, or a single value provided by a second source operand, specifically the lowest quadword of a vector register or a 128-bit vector in memory. If the shift amount is greater than or equal to the element size in bits the destination element is zeroed (PSLL, PSRL) or set to -1 or 0 (PSRA). Broadcasting is supported by the constant amount AVX-512 instruction variants operating on doublewords or quadwords. | ||

| + | |||

| + | |- | ||

| + | | <code>VPS(LL/RA/RL)VW</code> | ||

| + | | - || - || - || - || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>VPS(LL/RL)V(D/Q)</code> | ||

| + | | - || - || AVX2 || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>VPSRAVD</code> | ||

| + | | - || - || AVX2 || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>VPSRAVQ</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel bitwise left shift (VPSLL) or logical (unsigned) right shift (VPSRL) or arithmetic (signed) right shift (VPSRA) of the words, doublewords, or quadwords in the first source operand by a per-element variable amount taken from the corresponding element of the second source operand. If the shift amount is greater than or equal to the element size in bits the destination element is zeroed (VPSLL, VPSRL) or set to -1 or 0 (VPSRA). | ||

| + | |||

| + | |- | ||

| + | | <code>VPRO(L/R)(/V)(D/Q)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel bitwise rotate left or right of the doublewords or quadwords in the first or only source operand by a constant () or per-element variable number of bits (V) modulo element width in bits. The instructions take a constant amount from an immediate byte, a variable amount from the corresponding element of a second source operand. | ||

| + | |||

| + | |- | ||

| + | | <code>VPSH(L/R)D(W/D/Q)</code> | ||

| + | | - || - || - || - || VBMI2+VL || VBMI2+VL || VBMI2 | ||

| + | |- | ||

| + | | colspan="8" | Parallel bitwise funnel shift left or right by a constant amount. The instructions concatenate each word, doubleword, or quadword of the first and second source operand, perform a bitwise logical left or right shift by a constant amount modulo element width in bits taken from an immediate byte, and store the upper (L) or lower (R) half of the result in the corresponding elements of the destination vector. | ||

| + | |||

| + | |- | ||

| + | | <code>VPSH(L/R)DV(W/D/Q)</code> | ||

| + | | - || - || - || - || VBMI2+VL || VBMI2+VL || VBMI2 | ||

| + | |- | ||

| + | | colspan="8" | Parallel bitwise funnel shift left or right by a per-element variable amount. The instructions concatenate each word, doubleword, or quadword of the ''destination and first source operand'', perform a bitwise logical left or right shift by a variable amount modulo element width in bits taken from the corresponding element of the second source operand, and store the upper (L) or lower (R) half of the result in the corresponding elements of the destination vector. | ||

| + | |||

| + | |- | ||

| + | | <code>VPMULTISHIFTQB</code> | ||

| + | | - || - || - || - || VBMI+VL || VBMI+VL || VBMI | ||

| + | |- | ||

| + | | colspan="8" | | ||

| + | Copies 8 consecutive bits from the second source operand into each byte of the destination vector using bit indices. For each destination byte the instruction obtains an index from the corresponding byte of the first source operand. The operation is confined to 64-bit quadwords so the indices can only address bits in the same 64-bit lane as the index and destination byte. The instruction increments the index for each bit modulo 64. In other words the operation for each destination byte is: | ||

| + | |||

| + | dest.byte[i] = bitwise_rotate_right(source2.quadword[i / 8], source1.byte[i] and 63) and 255 | ||

| + | |||

| + | The destination and first source operand is a vector register. The second source operand can be a vector register, a vector in memory, or one quadword broadcast to all 64-bit lanes of the vector. Write masking is supported with quadword granularity. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PS(LL/RL)DQ</code> | ||

| + | | - || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | colspan="8" | Parallel ''bytewise'' shift left or right of double quadwords (128-bit lanes) by a constant amount, shifting in zero bytes. The amount is taken from an immediate byte. If it is greater than 15 the instructions zero the destination element. Broadcasting and write masking are not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PALIGNR</code> | ||

| + | | SSSE3 || SSSE3 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | colspan="8" | Parallel ''bytewise'' funnel shift right by a constant amount, shifting in zero bytes. The instruction concatenates the data in corresponding 128-bit lanes of the first and second source operand, shifts the 256-bit intermediate values right by a constant amount times 8 and stores the lower half of the results in the corresponding 128-bit lanes of the destination. The amount is taken from an immediate byte. Broadcasting is not supported. Write masking is supported with byte granularity. | ||

| + | |||

| + | |- | ||

| + | | <code>VALIGN(D/Q)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Element-wise funnel shift right by a constant amount, shifting in zeroed doublewords or quadwords. The instruction concatenates the first and second source operand, performs a bitwise logical right shift by a constant amount times 32 (<code>VALIGND</code>) or 64 (<code>VALIGNQ</code>), and stores the lower half of the vector in the destination vector register. The amount, modulo number of elements in the destination vector, is taken from an immediate byte. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)AESDEC</code>, <code>(V)AESDECLAST</code>,<br/> <code>(V)AESENC</code>, <code>(V)AESENCLAST</code> | ||

| + | | - || AESNI || AESNI+AVX || VAES || VAES+VL || VAES+VL || VAES+F | ||

| + | |- | ||

| + | | colspan="8" | Performs one round, or the last round, of an {{wp|Advanced Encryption Standard|AES}} decryption or encryption flow. ... Broadcasting and write masking are not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PCLMULQDQ</code> | ||

| + | | - || PCLM || PCLM+AVX || VPCLM || VPCLM+VL || VPCLM+VL || VPCLM+F | ||

| + | |- | ||

| + | | colspan="8" | Carry-less multiplication of quadwords. ... Broadcasting and write masking are not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>GF2P8AFFINEQB</code> | ||

| + | | - || GFNI || GFNI+AVX || GFNI+AVX || GFNI+VL || GFNI+VL || GFNI+F | ||

| + | |- | ||

| + | | colspan="8" | ... | ||

| + | |- | ||

| + | | <code>GF2P8AFFINEINVQB</code> | ||

| + | | - || GFNI || GFNI+AVX || GFNI+AVX || GFNI+VL || GFNI+VL || GFNI+F | ||

| + | |- | ||

| + | | colspan="8" | ... | ||

| + | |- | ||

| + | | <code>GF2P8MULB</code> | ||

| + | | - || GFNI || GFNI+AVX || GFNI+AVX || GFNI+VL || GFNI+VL || GFNI+F | ||

| + | |- | ||

| + | | colspan="8" | ... | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PAVG(B/W)</code> | ||

| + | | SSE || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | colspan="8" | Parallel average of unsigned bytes or words with rounding: | ||

| + | |||

| + | dest = (source1 + source2 + 1) >> 1 | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PSADBW</code> | ||

| + | | SSE || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | colspan="8" | Computes the absolute difference of the corresponding unsigned bytes in the source operands, adds the eight results from each 64-bit lane and stores the sum in the corresponding 64-bit quadword of the destination vector. Write masking is not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>VDBPSADBW</code> | ||

| + | | - || - || - || - || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | colspan="8" | ... | ||

| + | |||

| + | |- | ||

| + | | <code>VPMOV(B/W)2M</code> | ||

| + | | - || - || - || - || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>VPMOV(D/Q)2M</code> | ||

| + | | - || - || - || - || DQ+VL || DQ+VL || DQ | ||

| + | |- | ||

| + | | colspan="8" | These instructions set the bits in a mask register, copying the most significant bit in the corresponding byte, word, doubleword, or quadword of the source vector in a vector register. | ||

| + | |||

| + | |- | ||

| + | | <code>VPMOVM2(B/W)</code> | ||

| + | | - || - || - || - || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>VPMOVM2(D/Q)</code> | ||

| + | | - || - || - || - || DQ+VL || DQ+VL || DQ | ||

| + | |- | ||

| + | | colspan="8" | These instructions set the bits in each byte, word, doubleword, or quadword of the destination vector in a vector register to all ones or zeros, copying the corresponding bit in a mask register. | ||

| + | |||

| + | |- | ||

| + | | <code>VPMOV(/S/US)WB</code> | ||

| + | | - || - || - || - || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>VPMOV(/S/US)(DB/DW/QB/QW/QD)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel down conversion of words, doublewords, or quadwords to bytes, words, or doublewords. The instructions truncate the input (mnemonic without infix) or convert it with signed (S) or unsigned (US) saturation. The destination operand is a vector register or a vector in memory. In the former case the instructions zero unused higher elements. The source operand is a vector register. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PMOV(SX/ZX)BW</code> | ||

| + | | - || SSE4_1 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>(V)PMOV(SX/ZX)(BD/BQ/WD/WQ/DQ)</code> | ||

| + | | - || SSE4_1 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel sign or zero extend of bytes, words, or doublewords to words, doublewords, or quadwords. The BW, WD, and DQ instruction variants read only the lower half of the source operand, the BD, WQ variants the lowest quarter, and the BQ variant the lowest one-eighth. The source operand can be a vector register or a vector in memory of the size above. Broadcasting is not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)MOVDQU</code>, <code>(V)MOVDQA</code> | ||

| + | | - || SSE2 || AVX || AVX || - || - || - | ||

| + | |- | ||

| + | | <code>VMOVDQU(8/16)</code> | ||

| + | | - || - || - || - || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>VMOVDQU(32/64)</code>, <code>VMOVDQA(32/64)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | These instructions copy a vector of 8-bit bytes, 16-bit words, 32-bit doublewords, or 64-bit quadwords between vector registers, or from a vector register to memory or vice versa. The data type distinction is necessary because the AVX-512 versions of these instructions support write masking which observes the number of elements in the vector. For the <code>MOVDQA</code> ("move aligned") versions the memory address must be a multiple of the vector size in bytes or an exception is generated. Broadcasting is not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)MOVNTDQA</code> | ||

| + | | - || SSE4_1 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Loads a vector from memory into a vector register with a non-temporal hint, i.e. a hint that it is not beneficial to load the data into the cache hierarchy. The memory address must be a multiple of the vector size in bytes or an exception is generated. Broadcasting and write masking are not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)MOVNTDQ</code> | ||

| + | | - || SSE2 || AVX || AVX || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Stores a vector from a vector register in memory with a non-temporal hint, i.e. a hint that it is not beneficial to perform a write-allocate and/or insert the data in the cache hierarchy. Write masking is not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)MOV(D/Q)</code> | ||

| + | | MMX || SSE2 || AVX || - || F || - || - | ||

| + | |- | ||

| + | | colspan="8" | Copies a doubleword or quadword, the lowest element in a vector register, to a general purpose register or vice versa. If the destination is a vector register the instructions zero the higher elements of the vector. If the element is a doubleword and the destination a 64-bit GPR they zero the upper half. Write masking is not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>VMOVW</code> | ||

| + | | - || - || - || - || FP16 || - || - | ||

| + | |- | ||

| + | | colspan="8" | Copies a 16-bit word, the lowest element in a vector register, to a general purpose register or vice versa. Unused higher bits in the destination register are zeroed. Write masking is not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>VPSCATTER(D/Q)(D/Q)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | ... | ||

| + | |||

| + | |- | ||

| + | | <code>VPGATHER(D/Q)(D/Q)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | ... | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PACKSSWB</code>, <code>(V)PACKSSDW</code>, <code>(V)PACKUSWB</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>(V)PACKUSDW</code> | ||

| + | | - || SSE4_1 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | colspan="8" | These instructions pack the signed words (WB) or doublewords (DW) in the source operands into the bytes or words, respectively, of the destination vector with signed (SS) or unsigned saturation (US). They interleave the results, packing data from the first source operand into the even 64-bit lanes of the destination and data from the second source operand into the odd lanes. Broadcasting is not supported by the WB variants. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PUNPCK(L/H)(BW/WD)</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>(V)PUNPCK(L/H)DQ</code> | ||

| + | | MMX || SSE2 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>(V)PUNPCK(L/H)QDQ</code> | ||

| + | | - || SSE2 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | These instructions interleave the bytes, words, doublewords, or quadwords of the first and second source operand. In order to fit the data into the destination vector <code>PUNPCKL</code> reads only the elements in the even 64-bit lanes, <code>PUNPCKH</code> only the odd 64-bit lanes of the source operands. Broadcasting is not supported by the BW and WD variants. | ||

| + | |||

| + | |- | ||

| + | | <code>VPEXTRB</code> | ||

| + | | - || SSE4_1 || AVX || - || BW || - || - | ||

| + | |- | ||

| + | | <code>VPEXTRW</code> | ||

| + | | SSE || SSE2/SSE4_1 || AVX || - || BW || - || - | ||

| + | |- | ||

| + | | <code>VPEXTR(D/Q)</code> | ||

| + | | - || SSE4_1 || AVX || - || DQ || - || - | ||

| + | |- | ||

| + | | colspan="8" | These instructions extract a byte, word, doubleword, or quadword using a constant index to select an element from the lowest 128-bit lane of a source vector register and store it in a general purpose register or in memory. A memory destination is not supported by the MMX version of <code>VPEXTRW</code> and by the SSE version introduced by the {{x86|SSE2}} extension. The index is provided by an immediate byte. If the destination register is wider than the element the instructions zero the unused higher bits. | ||

| + | |||

| + | |- | ||

| + | | <code>VEXTRACTI32X4</code> | ||

| + | | - || - || - || - || - || F+VL || F | ||

| + | |- | ||

| + | | <code>VEXTRACTI32X8</code> | ||

| + | | - || - || - || - || - || - || DQ | ||

| + | |- | ||

| + | | <code>VEXTRACTI64X2</code> | ||

| + | | - || - || - || - || - || DQ+VL || DQ | ||

| + | |- | ||

| + | | <code>VEXTRACTI64X4</code> | ||

| + | | - || - || - || - || - || - || F | ||

| + | |- | ||

| + | | <code>VEXTRACTI128</code> | ||

| + | | - || - || - || AVX2 || - || - || - | ||

| + | |- | ||

| + | | colspan="8" | These instructions extract four (I32X4) or eight (I32X8) doublewords, or two (I64X2) or four (I64X4) quadwords, or one block of 128 bits (I128), from a lane of that width (e.g. 128 bits for I32X4) of the source operand selected by a constant index and store the data in memory, or in the lowest lane of a vector register. Higher lanes of the destination register are zeroed. The index is provided by an immediate byte. Broadcasting is not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>VPINSRB</code> | ||

| + | | - || SSE4_1 || AVX || - || BW || - || - | ||

| + | |- | ||

| + | | <code>VPINSRW</code> | ||

| + | | SSE || SSE2 || AVX || - || BW || - || - | ||

| + | |- | ||

| + | | <code>VPINSR(D/Q)</code> | ||

| + | | - || SSE4_1 || AVX || - || DQ || - || - | ||

| + | |- | ||

| + | | colspan="8" | Inserts a byte, word, doubleword, or quadword taken from the lowest bits of a general purpose register or from memory, into the lowest 128-bit lane of the destination vector register using a constant index to select the element. The index is provided by an immediate byte. Write masking is not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>VINSERTI32X4</code> | ||

| + | | - || - || - || - || - || F+VL || F | ||

| + | |- | ||

| + | | <code>VINSERTI32X8</code> | ||

| + | | - || - || - || - || - || - || DQ | ||

| + | |- | ||

| + | | <code>VINSERTI64X2</code> | ||

| + | | - || - || - || - || - || DQ+VL || DQ | ||

| + | |- | ||

| + | | <code>VINSERTI64X4</code> | ||

| + | | - || - || - || - || - || - || F | ||

| + | |- | ||

| + | | <code>VINSERTI128</code> | ||

| + | | - || - || - || AVX2 || - || - || - | ||

| + | |- | ||

| + | | colspan="8" | These instructions insert four (I32X4) or eight (I32X8) doublewords, or two (I64X2) or four (I64X4) quadwords, or one block of 128 bits (I128), in a lane of that width (e.g. 128 bits for I32X4) of the destination vector selected by a constant index provided by an immediate byte. They load this data from memory, or the lowest lane of a vector register specified by the second source operand, and data for the remaining lanes of the destination vector from the corresponding lanes of the first source operand, a vector register. Broadcasting is not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PSHUFB</code> | ||

| + | | SSSE3 || SSSE3 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | colspan="8" | Shuffles the bytes of the first source operand using variable indices. For each byte of the destination vector the instruction obtains a source index modulo 16 from the corresponding byte of the second source operand. If the most significant bit of the index byte is set the instruction zeros the destination byte instead. The indices can only address a source byte in the same 128-bit lane as the destination byte. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PSHUF(L/H)W</code> | ||

| + | | - || SSE2 || AVX || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | colspan="8" | These instructions shuffle the words of the source operand using constant indices. For each element of the destination vector, a 2-bit index selects an element in the same 64-bit lane of the source operand. For each 64-bit lane the same four indices are taken from an immediate byte. The <code>PSHUFLW</code> instruction writes only the even 64-bit lanes of the destination vector and leaves the remaining lanes unchanged, <code>PSHUFHW</code> writes the odd lanes. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)PSHUFD</code> | ||

| + | | - || SSE2 || AVX || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Shuffles the doublewords of the source operand using constant indices. For each element of the destination vector, a 2-bit index selects an element in the same 128-bit lane of the source operand. For each 128-bit lane the same four indices are taken from an immediate byte. | ||

| + | |||

| + | |- | ||

| + | | <code>VSHUFI32X4</code> | ||

| + | | - || - || - || - || - || F+VL || F | ||

| + | |- | ||

| + | | <code>VSHUFI64X2</code> | ||

| + | | - || - || - || - || - || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Shuffles a 128-bit wide group of four doublewords or two quadwords of the source operands using constant indices. For each 128-bit destination lane an index selects one 128-bit lane of the source operands. The indices for even destination lanes can only address lanes in the first source operand, those for odd destination lanes only lanes in the second source operand. Instruction variants operating on 256-bit vectors use two 1-bit indices, those operating on 512-bit vectors four 2-bit indices, always taken from an immediate byte. | ||

| + | |||

| + | |- | ||

| + | | <code>VPSHUFBITQMB</code> | ||

| + | | - || - || - || - || BITALG+VL || BITALG+VL || BITALG | ||

| + | |- | ||

| + | | colspan="8" | Shuffles the bits in the first source operand, a vector register, using bit indices. For each bit of the 16/32/64-bit mask in a destination mask register, the instruction obtains a source index modulo 64 from the corresponding byte in a second source operand, a 128/256/512-bit vector in a vector register or in memory. The operation is confined to quadwords so the indices can only select a bit from the same 64-bit lane where the index byte resides. For instance bytes 8 ... 15 can address bits 64 ... 127. The instruction supports write masking which means it optionally performs a bitwise 'and' on the destination using a second mask register. | ||

| + | |||

| + | |- | ||

| + | | <code>VPERMB</code> | ||

| + | | - || - || - || - || VBMI+VL || VBMI+VL || VBMI | ||

| + | |- | ||

| + | | <code>VPERM(W/D)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Permutes the bytes, words, or doublewords of the second source operand using element indices. For each element of the destination vector the instruction obtains a source index, modulo vector size in elements, from the corresponding element of the first source operand. | ||

| + | |||

| + | |- | ||

| + | | <code>VPERMQ</code> | ||

| + | | - || - || - || AVX2 || - || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Permutes the quadwords of a source operand within a 256-bit lane using constant or variable source element indices. For each destination element the constant index instruction variant obtains a 2-bit index from an immediate byte, and uses the same four indices in each 256-bit lane. The destination operand is a vector register. The source operand can be a vector register, a vector in memory, or one quadword in memory broadcast to all elements of the vector. | ||

| + | |||

| + | The variable index variant permutes the elements of a ''second'' source operand. For each destination element it obtains a 2-bit source index from the corresponding quadword of the first source operand. The destination and first source operand is a vector register. The second source operand can be a vector register, a vector in memory, or one quadword in memory broadcast to all elements of the vector. | ||

| + | |||

| + | |- | ||

| + | | <code>VPERM(I/T)2B</code> | ||

| + | | - || - || - || - || VBMI+VL || VBMI+VL || VBMI | ||

| + | |- | ||

| + | | <code>VPERM(I/T)2W</code> | ||

| + | | - || - || - || - || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>VPERM(I/T)2(D/Q)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | The "I" instruction variant concatenates the second and first source operand and permutes their elements using element indices. For each element of the destination vector it obtains a source index, modulo twice the vector size in elements, from the byte, word, doubleword, or quadword in this lane and overwrites it.<br/>The "T" variant concatenates the second source and destination operand, and obtains the source indices from the first source operand. In other words the instructions perform the same operation, one overwriting the indices, the other one half of the data table. The destination and first source operand is a vector register. The second source operand can be a vector register, a vector in memory, or a single value broadcast to all elements of the vector if the element is 32 or 64 bits wide. | ||

| + | |||

| + | |- | ||

| + | | <code>VPCOMPRESS(B/W)</code> | ||

| + | | - || - || - || - || VBMI2+VL || VBMI2+VL || VBMI2 | ||

| + | |- | ||

| + | | <code>VPCOMPRESS(D/Q)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | These instructions copy bytes, words, doublewords, or quadwords from a vector register to memory or another vector register. They copy each element in the source vector if the corresponding bit in a mask register is set, and only then increment the memory address or destination register element number for the next store. If the destination is a register, the remaining elements are left unchanged or zeroed depending on the instruction variant. | ||

| + | |||

| + | |- | ||

| + | | <code>VPEXPAND(B/W)</code> | ||

| + | | - || - || - || - || VBMI2+VL || VBMI2+VL || VBMI2 | ||

| + | |- | ||

| + | | <code>VPEXPAND(D/Q)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | These instructions copy bytes, words, doublewords, or quadwords from memory or a vector register to another vector register. They load each element of the destination register, if the corresponding bit in a mask register is set, from the source and only then increment the memory address or source register element number for the next load. Destination elements where the mask bit is cleared are left unchanged or zeroed depending on the instruction variant. | ||

| + | |||

| + | |- | ||

| + | | <code>{{vanchor|VPBLENDM}}(B/W)</code> | ||

| + | | - || - || - || - || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>VPBLENDM(D/Q)</code> | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | colspan="8" | Parallel blend of the bytes, words, doublewords, or quadwords in the source operands. For each element in the destination vector the corresponding bit in a mask register selects the element in the first source operand (0) or second source operand (1). If no mask operand is provided the default is all ones. "Zero-masking" variants of the instructions zero the destination elements where the mask bit is 0. In other words these instructions are equivalent to copying the first source operand to the destination, then the second source operand with regular write masking. | ||

| + | |||

| + | |- | ||

| + | | <code>VPBROADCAST(B/W)</code> from VR or memory | ||

| + | | - || - || AVX2 || AVX2 || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>VPBROADCAST(B/W)</code> from GPR | ||

| + | | - || - || - || - || BW+VL || BW+VL || BW | ||

| + | |- | ||

| + | | <code>VPBROADCAST(D/Q)</code> from VR or memory | ||

| + | | - || - || AVX2 || AVX2 || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>VPBROADCAST(D/Q)</code> from GPR | ||

| + | | - || - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>VBROADCASTI32X2</code> from VR or memory | ||

| + | | - || - || - || - || DQ+VL || DQ+VL || DQ | ||

| + | |- | ||

| + | | <code>VBROADCASTI32X4</code> from VR or memory | ||

| + | | - || - || - || - || - || F+VL || F | ||

| + | |- | ||

| + | | <code>VBROADCASTI32X8</code> from VR or memory | ||

| + | | - || - || - || - || - || - || DQ | ||

| + | |- | ||

| + | | <code>VBROADCASTI64X2</code> from VR or memory | ||

| + | | - || - || - || - || - || DQ+VL || DQ | ||

| + | |- | ||

| + | | <code>VBROADCASTI64X4</code> from VR or memory | ||

| + | | - || - || - || - || - || - || F | ||

| + | |- | ||

| + | | <code>VBROADCASTI128</code> from memory | ||

| + | | - || - || - || AVX2 || - || - || - | ||

| + | |- | ||

| + | | colspan="8" | These instructions broadcast one byte, or one word, or one (D), two (I32X2), four (I32X4), or eight (I32X8) doublewords, or one (Q), two (I64X2), or four (I64X4) quadwords, or one block of 128 bits (I128), from the lowest lane of that width (e.g. 64 bits for I32X2) of a source vector register, or from memory, or from a general purpose register to all lanes of that width in the destination vector. The AVX-512 instructions support write masking with byte, word, doubleword, or quadword granularity. | ||

| + | |||

| + | |- | ||

| + | | <code>VPBROADCASTM(B2Q/W2D)</code> | ||

| + | | - || - || - || - || CD+VL || CD+VL || CD | ||

| + | |- | ||

| + | | colspan="8" | These instructions broadcast the lowest byte or word in a mask register to all doublewords or quadwords of the destination vector in a vector register. Write masking is not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>VP2INTERSECT(D/Q)</code> | ||

| + | | - || - || - || - || VP2IN+VL || VP2IN+VL || VP2IN+F | ||

| + | |- | ||

| + | | colspan="8" | ... | ||

| + | |||

| + | |- | ||

| + | | <code>VPCONFLICT(D/Q)</code> | ||

| + | | - || - || - || - || CD+VL || CD+VL || CD | ||

| + | |- | ||

| + | | colspan="8" | ... | ||

| + | |||

| + | |- | ||

| + | | colspan="8" | Total: 310<!-- Counts mnemonics of instructions with EVEX encoding, ignores different vector sizes and operand options. --> | ||

| + | |} | ||
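The compress and expand rows of the table above can be modelled in a few lines. The following is a minimal Python sketch under stated assumptions, not a definitive implementation: vectors are plain lists with index 0 as the lowest element, the mask is an integer whose bit ''i'' belongs to element ''i'', only register destinations are modelled, and the names `compress` and `expand` are hypothetical helpers chosen for illustration.

```python
def compress(src, mask, dest, zeroing=False):
    """Model of a VPCOMPRESS-style operation with a register destination."""
    # Start from the old destination (merge masking) or from zeros (zero masking).
    out = [0] * len(dest) if zeroing else list(dest)
    j = 0  # next destination element to write
    for i, elem in enumerate(src):
        if (mask >> i) & 1:
            out[j] = elem  # selected elements are stored contiguously
            j += 1
    return out


def expand(src, mask, dest, zeroing=False):
    """Model of a VPEXPAND-style operation with a register source."""
    out = []
    j = 0  # next source element to read
    for i in range(len(dest)):
        if (mask >> i) & 1:
            out.append(src[j])  # consecutive source elements fill selected slots
            j += 1
        else:
            out.append(0 if zeroing else dest[i])
    return out


if __name__ == "__main__":
    src = [10, 11, 12, 13, 14, 15, 16, 17]
    mask = 0b10100110  # selects elements 1, 2, 5, and 7
    packed = compress(src, mask, [-1] * 8)
    print(packed)
    print(expand(packed, mask, [-1] * 8, zeroing=True))
```

In this model expand is the inverse of compress for the selected elements: expanding the compressed vector with the same mask returns each selected element to its original position.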

| + | |||
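The funnel shifts described for <code>(V)PALIGNR</code> and <code>VALIGN(D/Q)</code> in the table above can be sketched the same way. This is an illustrative Python model only: vectors are lists with index 0 as the lowest element, write masking is omitted, and the function names `palignr` and `valign` are hypothetical.

```python
def palignr(src1, src2, imm, lane_bytes=16):
    """Bytewise funnel shift right within each 128-bit lane.

    src1 supplies the upper half of each intermediate value, src2 the lower
    half. Zero bytes are shifted in from the top.
    """
    out = []
    for base in range(0, len(src1), lane_bytes):
        concat = list(src2[base:base + lane_bytes]) + list(src1[base:base + lane_bytes])
        concat += [0] * 256  # enough zero bytes for any 8-bit immediate
        out.extend(concat[imm:imm + lane_bytes])
    return out


def valign(src1, src2, imm):
    """Element-wise funnel shift right across the whole vector."""
    n = len(src1)
    shift = imm % n  # the amount is taken modulo the element count
    concat = list(src2) + list(src1)  # src1 is the upper half
    return concat[shift:shift + n]


if __name__ == "__main__":
    hi = list(range(100, 116))
    lo = list(range(16))
    print(palignr(hi, lo, 4))
    print(valign(list(range(8, 16)), list(range(8)), 3))
```

Because `valign` reduces the shift amount modulo the element count, every result element comes from one of the two sources in this model, whereas `palignr` can return zero bytes once the immediate exceeds 16.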

| + | == Floating point instructions == | ||

| + | Common aspects: | ||

| + | * Parallel operations are performed on the corresponding elements of the destination and source operands. | ||

| + | * "Packed" instructions with mnemonics ending in PH/PS/PD write all elements of the destination vector. "Scalar" instructions ending in SH/SS/SD write only the lowest element and leave the higher elements unchanged. | ||

| + | * Except as noted the destination operand and the first of two source operands is a vector register. | ||

| + | * If the destination is a vector register and the vector size is less than 512 bits AVX and AVX-512 instructions zero the unused higher bits to avoid a dependency on earlier instructions writing those bits. | ||

| + | * Except as noted the second or only source operand can be | ||

| + | ** a vector register, | ||

| + | ** a vector in memory for "packed" instructions, | ||

| + | ** a single element in memory for "scalar" instructions, | ||

| + | ** or a single element in memory broadcast to all elements in the vector for "packed" AVX-512 instructions. | ||

| + | * Some instructions use an immediate value as an additional operand, a byte which is part of the instruction encoding. | ||
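The common aspects listed above can be illustrated with a small Python model. It is a sketch under simplifying assumptions rather than a description of any implementation: vectors are lists with index 0 as the lowest element, the scalar form is modelled in its destructive two-operand shape (destination equals first source), and the helper names `packed`, `scalar`, and `zero_extend` are hypothetical.

```python
import operator


def packed(op, src1, src2):
    """Packed form: every destination element is written.

    A non-list src2 models a single element in memory broadcast to all elements.
    """
    if not isinstance(src2, (list, tuple)):
        src2 = [src2] * len(src1)
    return [op(a, b) for a, b in zip(src1, src2)]


def scalar(op, dest, src2):
    """Scalar form: only the lowest element is written, the rest are kept."""
    return [op(dest[0], src2)] + list(dest[1:])


def zero_extend(vec, register_elems):
    """Zero the unused higher elements when the vector is shorter than the register."""
    return list(vec) + [0.0] * (register_elems - len(vec))


if __name__ == "__main__":
    print(packed(operator.add, [1.0, 2.0, 3.0, 4.0], 0.5))
    print(scalar(operator.mul, [2.0, 7.0, 8.0, 9.0], 3.0))
    print(zero_extend([1.0, 2.0], 4))
```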

| + | |||

| + | Some floating point instructions which merely copy data or perform bitwise logical operations duplicate the functionality of integer instructions. AVX-512 implementations may actually execute vector integer and floating point instructions in separate execution units. Mixing those instructions is not advisable: results are transferred between the units automatically, but the transfer may incur a delay. | ||

| + | |||

| + | The table below lists all AVX-512 instructions operating on floating point values. The columns on the right show the x86 extension which introduced the instruction, broken down by instruction encoding and supported vector size in bits. For brevity "SSE/SSE2" means the single precision variant of the instruction was introduced by the {{x86|SSE}} extension, the double precision variant by the {{x86|SSE2}} extension. Similarly "F/FP16" means the single and double precision variants were introduced by the AVX512F (Foundation) extension, the half precision variant by the {{x86|AVX512_FP16}} extension. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | ! style="width:100%" rowspan="2" | Instruction | ||

| + | ! SSE !! colspan="2" | AVX !! colspan="3" | AVX-512 | ||

| + | |- | ||

| + | ! 128 !! 128 !! 256 !! 128 !! 256 !! 512 | ||

| + | |||

| + | |- | ||

| + | | <code>(V)(ADD/{{vanchor|DIV}}/MAX/MIN/MUL/SUB)(PS/PD)</code> | ||

| + | | SSE/SSE2 || AVX || AVX || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>(V)(ADD/DIV/MAX/MIN/MUL/SUB)(SS/SD)</code> | ||

| + | | SSE/SSE2 || AVX || - || F || - || - | ||

| + | |- | ||

| + | | <code>V(ADD/DIV/MAX/MIN/MUL/SUB)PH</code> | ||

| + | | - || - || - || FP16+VL || FP16+VL || FP16 | ||

| + | |- | ||

| + | | <code>V(ADD/DIV/MAX/MIN/MUL/SUB)SH</code> | ||

| + | | - || - || - || FP16 || - || - | ||

| + | |- | ||

| + | | colspan="7" | Parallel addition, division (source1 / source2), maximum, minimum, multiplication, or subtraction (source1 - source2), with desired rounding if applicable, of half, single, or double precision values. | ||

| + | |||

| + | |- | ||

| + | | <code>VRANGE(PS/PD)</code> | ||

| + | | - || - || - || DQ+VL || DQ+VL || DQ | ||

| + | |- | ||

| + | | <code>VRANGE(SD/SS)</code> | ||

| + | | - || - || - || DQ || - || - | ||

| + | |- | ||

| + | | colspan="7" | | ||

| + | These instructions perform a parallel minimum or maximum operation on single or double precision values, either on their original or absolute values. They optionally change the sign of all results to positive or negative, or copy the sign of the corresponding element in the first source operand. The operation is selected by an immediate byte. | ||

| + | |||

| + | A saturation operation like min(max(-limit, value), +limit) for instance can be expressed as minimum of absolute values with sign copying. | ||

| + | |||

| + | |- | ||

| + | | <code>VF(/N)(MADD/MSUB)(132/213/231)(PS/PD)</code>,<br/><code>VF(MADDSUB/MSUBADD)(132/213/231)(PS/PD)</code> | ||

| + | | - || FMA || FMA || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>VF(/N)(MADD/MSUB)(132/213/231)(SS/SD)</code> | ||

| + | | - || FMA || - || F || - || - | ||

| + | |- | ||

| + | | <code>VF(/N)(MADD/MSUB)(132/213/231)PH</code>,<br/><code>VF(MADDSUB/MSUBADD)(132/213/231)PH</code> | ||

| + | | - || - || - || FP16+VL || FP16+VL || FP16 | ||

| + | |- | ||

| + | | <code>VF(/N)(MADD/MSUB)(132/213/231)SH</code> | ||

| + | | - || - || - || FP16 || - || - | ||

| + | |- | ||

| + | | colspan="7" | Parallel fused multiply-add of half, single, or double precision values. These instructions require three source operands; the first source operand is also the destination operand. The numbers in the mnemonic specify the operand order, e.g. 132 means src1 = src1 * src3 + src2. The operations are: | ||

| + | |||

| + | {| | ||

| + | | VFMADD || d = a * b + c | ||

| + | |- | ||

| + | | VFMSUB || d = a * b - c | ||

| + | |- | ||

| + | | VFMADDSUB || d[even] = a[even] * b[even] - c[even]<br/> d[odd] = a[odd] * b[odd] + c[odd] | ||

| + | |- | ||

| + | | VFMSUBADD || d[even] = a[even] * b[even] + c[even]<br/> d[odd] = a[odd] * b[odd] - c[odd] | ||

| + | |- | ||

| + | | VFNMADD || d = - a * b + c | ||

| + | |- | ||

| + | | VFNMSUB || d = - a * b - c | ||

| + | |} | ||

| + | |||

| + | |- | ||

| + | | <code>VF(/C)MADDC(PH/SH)</code>,<br/><code>VF(/C)MULC(PH/SH)</code> | ||

| + | | - || - || - || FP16+VL || FP16+VL || FP16 | ||

| + | |- | ||

| + | | colspan="7" | Parallel complex multiplication of half precision pairs. The instructions multiply corresponding pairs of half precision values in the first and second source operand: | ||

| + | |||

| + | <code>VFMADDC</code>, <code>VFMULC</code>:<br/> | ||

| + | temp[0] = source1[0] * source2[0] - source1[1] * source2[1]<br/> | ||

| + | temp[1] = source1[1] * source2[0] + source1[0] * source2[1] | ||

| + | |||

| + | <code>VFCMADDC</code>, <code>VFCMULC</code> (multiplication by the complex conjugate of the second source):<br/> | ||

| + | temp[0] = source1[0] * source2[0] + source1[1] * source2[1]<br/> | ||

| + | temp[1] = source1[1] * source2[0] - source1[0] * source2[1] | ||

| + | |||

| + | The <code>MUL</code> instructions store the result in the corresponding pair of half precision values of the destination, the <code>MADD</code> instructions add the result to this pair. The "packed" instructions (PH) write all elements of the destination vector, the "scalar" instructions (SH) only the lowest pair and leave the higher elements unchanged. Write masking is supported with 32-bit granularity. | ||

| + | |||

| + | |- | ||

| + | | <code>V4F(/N)MADD(PS/SS)</code> | ||

| + | | - || - || - || - || - || 4FMAPS | ||

| + | |- | ||

| + | | colspan="7" | | ||

| + | Parallel fused multiply-accumulate of single precision values, four iterations. | ||

| + | |||

| + | In each iteration the instructions source 16 multiplicands from a 512-bit vector register, and one multiplier from memory which is broadcast to all 16 elements of a second vector. They add the 16 products and the 16 values in the corresponding elements of the 512-bit destination register, round the sums as desired, and store them in the destination. Finally the instructions increment the number of the source register by one modulo four, and the memory address by four bytes. Exceptions can occur in each iteration. Write masking is supported. | ||

| + | |||

| + | In total these instructions perform 64 multiply-accumulate operations, reading 64 single precision multiplicands from four source registers in a 4-aligned block, e.g. ZMM12 ... ZMM15, four single precision multipliers consecutive in memory, and accumulate 16 single precision results four times, also rounding four times. | ||

| + | |||

| + | <code>V4FNMADD</code> performs the same operation as <code>V4FMADD</code> except this instruction also negates the product. | ||

| + | |||

| + | The "packed" variants (PS) perform the operations above, the "scalar" variants (SS) yield only a single result in the lowest element of the 128-bit destination vector, leaving the three higher elements unchanged. As usual if the vector size is less than 512 bits the instructions zero the unused higher bits in the destination register to avoid a dependency on earlier instructions writing those bits. | ||

| + | |||

| + | In total the "scalar" instructions sequentially perform four multiply-accumulate operations, read a single precision multiplicand from four source registers, four single precision multipliers from memory, and accumulate one single precision result four times in the destination register, also rounding four times. | ||
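The four iterations of the packed form can be modelled in a few lines of Python. This is only an illustrative sketch: the names <code>v4fmadd_ps</code> and <code>to_f32</code> and the list-based registers are assumptions made here, write masking and exceptions are left out, and rounding through a double only approximates a true fused single precision operation.

```python
import struct

def to_f32(x):
    """Round a Python float to the nearest single precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def v4fmadd_ps(dest, src_block, multipliers, negate=False):
    """Model of the packed operation (hypothetical helper, not an intrinsic).

    dest and each register in src_block hold 16 elements;
    multipliers holds the four consecutive values read from memory.
    """
    assert len(src_block) == 4 and len(multipliers) == 4
    acc = list(dest)
    for reg, m in zip(src_block, multipliers):   # one iteration per source register
        for j in range(16):
            product = reg[j] * m                 # multiplier is broadcast to all lanes
            if negate:                           # V4FNMADD negates the product
                product = -product
            acc[j] = to_f32(acc[j] + product)    # round once per iteration
    return acc

# Four registers holding 1, 2, 3, 4 in every lane, multipliers 1, 2, 3, 4.
block = [[float(i + 1)] * 16 for i in range(4)]
result = v4fmadd_ps([0.0] * 16, block, [1.0, 2.0, 3.0, 4.0])
```

With these inputs every lane accumulates 1·1 + 2·2 + 3·3 + 4·4.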

| + | |||

| + | |- | ||

| + | | <code>VDPBF16PS</code> | ||

| + | | - || - || - || BF16+VL || BF16+VL || BF16 | ||

| + | |- | ||

| + | | colspan="7" | Dot product of {{link|BFloat16}} values, accumulated in single precision elements. The instruction multiplies the corresponding BF16 values of the source operands, converted to single precision, then adds the products from the even lanes, odd lanes, and the single precision values in the destination operand and stores the sums in the destination. | ||

| + | |||

| + | |- | ||

| + | | <code>V{{vanchor|RCP}}14(PS/PD)</code> | ||

| + | | - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>VRCP28(PS/PD)</code> | ||

| + | | - || - || - || - || - || ER | ||

| + | |- | ||

| + | | <code>VRCPPH</code> | ||

| + | | - || - || - || FP16+VL || FP16+VL || FP16 | ||

| + | |- | ||

| + | | <code>VRCP14(SS/SD)</code> | ||

| + | | - || - || - || F || - || - | ||

| + | |- | ||

| + | | <code>VRCP28(SS/SD)</code> | ||

| + | | - || - || - || ER || - || - | ||

| + | |- | ||

| + | | <code>VRCPSH</code> | ||

| + | | - || - || - || FP16 || - || - | ||

| + | |- | ||

| + | | colspan="8" | Parallel approximate reciprocal of half, single, or double precision values. The maximum relative error is less than 2<sup>-14</sup> or 2<sup>-28</sup> (RCP28), see Intel's [https://www.intel.com/content/www/us/en/developer/articles/code-sample/reference-implementations-for-ia-approximation-instructions-vrcp14-vrsqrt14-vrcp28-vrsqrt28-vexp2.html reference implementation] for exact values. | ||

| + | |||

| + | Multiplication by a reciprocal can be faster than the <code>{{link|#DIV}}</code> instruction which computes full precision results. More precise results can be achieved using the {{wp|Division_algorithm#Newton%E2%80%93Raphson_division|Newton-Raphson}} method. For examples see <ref name="Intel-248966-*">{{cite techdoc|title=Intel® 64 and IA-32 Architectures Optimization Reference Manual|url=https://www.intel.com/content/www/us/en/developer/articles/technical/intel-sdm.html|publ=Intel|pid=248966|rev=046|date=2023-01}}</ref>. | ||
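As a rough illustration of the Newton-Raphson refinement mentioned above, the following Python sketch applies one iteration to a deliberately imprecise reciprocal. The function <code>approx_rcp</code> is a hypothetical stand-in for a low-precision estimate (it is not how the hardware computes its result), and the chosen error of 2<sup>-15</sup> is an assumption for the example.

```python
def approx_rcp(x, rel_err=2.0 ** -15):
    """Hypothetical stand-in for a low-precision reciprocal estimate."""
    return (1.0 / x) * (1.0 + rel_err)

def refine_rcp(x, y):
    """One Newton-Raphson step for y ~ 1/x: y' = y * (2 - x * y).

    If x * y = 1 + e, then x * y' = 1 - e**2, so the relative
    error is roughly squared by each step.
    """
    return y * (2.0 - x * y)

x = 3.0
y0 = approx_rcp(x)          # initial estimate
y1 = refine_rcp(x, y0)      # refined estimate, uses only multiply and subtract
err0 = abs(x * y0 - 1.0)    # relative error before refinement
err1 = abs(x * y1 - 1.0)    # relative error after refinement
```

The refinement step needs no division, which is why it pairs well with an approximate reciprocal instruction.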

| + | |||

| + | |- | ||

| + | | <code>(V){{vanchor|SQRT}}(PS/PD)</code> | ||

| + | | SSE/SSE2 || AVX || AVX || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>VSQRTPH</code> | ||

| + | | - || - || - || FP16+VL || FP16+VL || FP16 | ||

| + | |- | ||

| + | | <code>(V)SQRT(SS/SD)</code> | ||

| + | | SSE/SSE2 || AVX || - || F || - || - | ||

| + | |- | ||

| + | | <code>VSQRTSH</code> | ||

| + | | - || - || - || FP16 || - || - | ||

| + | |- | ||

| + | | colspan="7" | Parallel square root with desired rounding of half, single, or double precision values. | ||

| + | |||

| + | |- | ||

| + | | <code>V{{vanchor|RSQRT}}14(PS/PD)</code> | ||

| + | | - || - || - || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>VRSQRT28(PS/PD)</code> | ||

| + | | - || - || - || - || - || ER | ||

| + | |- | ||

| + | | <code>VRSQRTPH</code> | ||

| + | | - || - || - || FP16+VL || FP16+VL || FP16 | ||

| + | |- | ||

| + | | <code>VRSQRT14(SS/SD)</code> | ||

| + | | - || - || - || F || - || - | ||

| + | |- | ||

| + | | <code>VRSQRT28(SS/SD)</code> | ||

| + | | - || - || - || ER || - || - | ||

| + | |- | ||

| + | | <code>VRSQRTSH</code> | ||

| + | | - || - || - || FP16 || - || - | ||

| + | |- | ||

| + | | colspan="8" | Parallel approximate reciprocal of the square root of half, single, or double precision values. The maximum relative error is less than 2<sup>-14</sup> or 2<sup>-28</sup> (RSQRT28), see Intel's [https://www.intel.com/content/www/us/en/developer/articles/code-sample/reference-implementations-for-ia-approximation-instructions-vrcp14-vrsqrt14-vrcp28-vrsqrt28-vexp2.html reference implementation] for exact values. | ||

| + | |||

| + | Multiplication by a reciprocal square root can be faster than the <code>{{link|#SQRT}}</code> and <code>{{link|#DIV}}</code> instructions, which compute full precision results. A <code>RSQRT</code> followed by <code>{{link|#RCP}}</code> can also be faster than <code>SQRT</code>. More precise reciprocal square roots can be computed using {{wp|Methods_of_computing_square_roots#Iterative_methods_for_reciprocal_square_roots|Newton's method}}, and more precise square roots by Taylor series expansion, both avoiding divisions. For examples see <ref name="Intel-248966-*"/>. | ||
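A minimal Python sketch of one Newton step for the reciprocal square root follows. The function <code>approx_rsqrt</code> is a hypothetical stand-in for a low-precision estimate with an assumed relative error of 2<sup>-15</sup>. Note that the square root is obtained here simply as x · rsqrt(x), not by the Taylor series expansion mentioned above.

```python
import math

def approx_rsqrt(x, rel_err=2.0 ** -15):
    """Hypothetical stand-in for a low-precision 1/sqrt(x) estimate."""
    return (1.0 / math.sqrt(x)) * (1.0 + rel_err)

def refine_rsqrt(x, y):
    """One Newton step for y ~ 1/sqrt(x): y' = y * (1.5 - 0.5 * x * y * y)."""
    return y * (1.5 - 0.5 * x * y * y)

x = 2.0
y0 = approx_rsqrt(x)
y1 = refine_rsqrt(x, y0)                 # only multiplications and a subtraction
rel_err = abs(y1 * math.sqrt(x) - 1.0)   # relative error of the refined estimate
root = x * y1                            # sqrt(x) = x * (1/sqrt(x)), no division
```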

| + | |||

| + | |- | ||

| + | | <code>VEXP2(PS/PD)</code> | ||

| + | | - || - || - || - || - || ER | ||

| + | |- | ||

| + | | colspan="7" | Parallel approximation of 2<sup>x</sup> of single or double precision values. The maximum relative error is less than 2<sup>-23</sup>, see Intel's [https://www.intel.com/content/www/us/en/developer/articles/code-sample/reference-implementations-for-ia-approximation-instructions-vrcp14-vrsqrt14-vrcp28-vrsqrt28-vexp2.html reference implementation] for exact values. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)CMP*(PS/PD)</code> | ||

| + | | SSE/SSE2 || AVX || AVX || F+VL || F+VL || F | ||

| + | |- | ||

| + | | <code>(V)CMP*(SS/SD)</code> | ||

| + | | SSE/SSE2 || AVX || - || F || - || - | ||

| + | |- | ||

| + | | <code>VCMP*PH</code> | ||

| + | | - || - || - || FP16+VL || FP16+VL || FP16 | ||

| + | |- | ||

| + | | <code>VCMP*SH</code> | ||

| + | | - || - || - || FP16 || - || - | ||

| + | |- | ||

| + | | colspan="7" | Parallel compare operation of the half, single, or double precision values in the first and second source operand. One of 32 operations (CMPEQ - equal, CMPLT - less than, CMPEQ_UO - equal unordered, ...) is selected by an immediate byte. The results, 1 = true or 0 = false, are stored in the destination. | ||

| + | |||

| + | For AVX-512 versions of the instructions the destination is a mask register with bits corresponding to the source elements. The "scalar" instructions (SH/SS/SD) set only the lowest bit. Unused bits due to the vector and element size are zeroed. The instructions support write masking which performs a bitwise 'and' on the destination using a second mask register. | ||

| + | |||

| + | For SSE/AVX versions the negated results (-1 or 0) are stored in a vector of doublewords (PS/SS) or quadwords (PD/SD) corresponding to the source elements. These can be used as masks in bitwise logical operations to emulate predicated vector instructions. | ||
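The use of SSE/AVX compare results as masks can be sketched in Python on lists of single precision values. All function names below are illustrative assumptions; the point is only that an all-ones or all-zero doubleword per element lets 'and', 'andn', and 'or' select between two sources, here to form an element-wise maximum.

```python
import struct

def f32_bits(x):
    """Bit pattern of a single precision value as an unsigned 32-bit integer."""
    return struct.unpack("<I", struct.pack("<f", x))[0]

def bits_f32(b):
    """Single precision value for a 32-bit pattern."""
    return struct.unpack("<f", struct.pack("<I", b))[0]

def cmplt_ps(a, b):
    """Per element: all ones (-1 as a signed doubleword) if a < b, else 0."""
    return [0xFFFFFFFF if x < y else 0 for x, y in zip(a, b)]

def select(mask, if_true, if_false):
    """(mask and if_true) or ((not mask) and if_false), element by element."""
    out = []
    for m, t, f in zip(mask, if_true, if_false):
        bits = (m & f32_bits(t)) | (~m & 0xFFFFFFFF & f32_bits(f))
        out.append(bits_f32(bits))
    return out

a = [1.0, 5.0, -2.0, 0.5]
b = [2.0, 3.0, -2.0, 4.0]
mask = cmplt_ps(a, b)
maximum = select(mask, b, a)   # take b where a < b, otherwise a
```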

| + | |||

| + | |- | ||

| + | | <code>(V)(/U)COMI(SS/SD)</code> | ||

| + | | SSE/SSE2 || AVX || - || F || - || - | ||

| + | |- | ||

| + | | <code>V(/U)COMISH</code> | ||

| + | | - || - || - || FP16 || - || - | ||

| + | |- | ||

| + | | colspan="7" | Compares the half, single, or double precision values in the lowest element of the first and second source operand and stores the result in the {{abbr|ZF|Zero Flag}} (equal), {{abbr|CF|Carry Flag}} (less than), and {{abbr|PF|Parity Flag}} (unordered) for branch instructions. UCOMI instructions perform an unordered compare and generate an exception only if a source operand is a {{abbr|SNaN}}; COMI instructions also do so for {{abbr|QNaN}}s. Broadcasting and write masking are not supported. | ||

| + | |||

| + | |- | ||

| + | | <code>VFPCLASS(PH/PS/PD)</code> | ||

| + | | - || - || - || DQ/FP16+VL || DQ/FP16+VL || DQ/FP16 | ||

| + | |- | ||

| + | | <code>VFPCLASS(SH/SS/SD)</code> | ||

| + | | - || - || - || DQ/FP16 || - || - | ||

| + | |- | ||

| + | | colspan="7" | These instructions test if the half, single, or double precision values in the source operand belong to certain classes and set the bit corresponding to each element in the destination mask register to 1 = true or 0 = false. The "packed" instructions (PH/PS/PD) operate on all elements, the "scalar" instructions (SH/SS/SD) only on the lowest element and set a single mask bit. Unused higher bits of the 64-bit mask register are cleared. The instructions support write masking which means they optionally perform a bitwise 'and' on the destination using a second mask register. The class is selected by an immediate byte and can be: {{abbr|QNaN}}, +0, -0, +∞, -∞, denormal, negative, {{abbr|SNaN}}, or any combination. | ||

| + | |||

| + | |- | ||

| + | | <code>(V)(AND/ANDN/OR/XOR)(PS/PD)</code> | ||

| + | | SSE/SSE2 || AVX || AVX || DQ+VL || DQ+VL || DQ | ||

| + | |- | ||

| + | | colspan="7" | Parallel bitwise logical operations on single or double precision values. There are no "scalar" variants operating on a single element. The <code>ANDN</code> operation is (not source1) and source2. The precision distinction is necessary because all these instructions support write masking which observes the number of elements in the vector. | ||

| + | |||

| + | |- | ||

| + | | <code>VRNDSCALE(PH/PS/PD)</code> | ||

| + | | - || - || - || F/FP16+VL || F/FP16+VL || F/FP16 | ||

| + | |- | ||

| + | | <code>VRNDSCALE(SH/SS/SD)</code> | ||

| + | | - || - || - || F/FP16 || - || - | ||

| + | |- | ||

| + | | colspan="8" | Parallel rounding to a given number of fractional bits on half, single, or double precision values. The operation is | ||

| + | |||

| + | dest = round(2<sup>M</sup> * source) * 2<sup>-M</sup> | ||

| + | |||

| + | with desired rounding mode and M a constant in range 0 ... 15. | ||

| + | |||

| + | |- | ||

| + | | <code>VREDUCE(PH/PS/PD)</code> | ||

| + | | - || - || - || DQ/FP16+VL || DQ/FP16+VL || DQ/FP16 | ||

| + | |- | ||

| + | | <code>VREDUCE(SH/SS/SD)</code> | ||

| + | | - || - || - || DQ/FP16 || - || - | ||

| + | |- | ||

| + | | colspan="8" | | ||

| + | Parallel reduce transformation on half, single, or double precision values. The operation is | ||

| + | |||

| + | dest = source - round(2<sup>M</sup> * source) * 2<sup>-M</sup> | ||

| + | |||

| + | with desired rounding mode and M a constant in range 0 ... 15. These instructions can be used to accelerate transcendental functions. | ||
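The reduce formula, and the rounding to M fractional bits it is built on, can be sketched in Python for a single element. The names <code>rndscale</code> and <code>vreduce</code> are chosen here for illustration; the sketch assumes round-to-nearest-even (Python's <code>round</code>) and finite inputs, and does not model the other rounding modes or special values.

```python
import math

def rndscale(x, m):
    """round(2**m * x) * 2**-m, i.e. x rounded to m fractional bits.

    math.ldexp scales by a power of two exactly; round() rounds
    halfway cases to the nearest even integer.
    """
    return math.ldexp(float(round(math.ldexp(x, m))), -m)

def vreduce(x, m):
    """x minus its value rounded to m fractional bits (the reduced argument)."""
    return x - rndscale(x, m)

rounded = rndscale(3.14159, 2)    # nearest multiple of 1/4
remainder = vreduce(0.8125, 1)    # distance from the nearest multiple of 1/2
```

The small remainder is what makes the operation useful for transcendental functions: a polynomial only has to be accurate on the reduced range.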

| + | |||

| + | |- | ||

| + | | <code>VSCALEF(PH/PS/PD)</code> | ||

| + | | - || - || - || F/FP16+VL || F/FP16+VL || F/FP16 | ||

| + | |- | ||

| + | | <code>VSCALEF(SH/SS/SD)</code> | ||

| + | | - || - || - || F/FP16 || - || - | ||

| + | |- | ||

| + | | colspan="8" | Parallel scale operation on half, single, or double precision values. The operation is: | ||

| + | |||

| + | dest = source1 * 2<sup>floor(source2)</sup> | ||
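For a single element the scale formula can be written directly in Python. This sketch assumes finite inputs; the name <code>scalef</code> is illustrative, and special cases (NaN, infinities, denormals) are not modelled.

```python
import math

def scalef(source1, source2):
    """source1 * 2**floor(source2); ldexp scales by a power of two exactly."""
    return math.ldexp(source1, math.floor(source2))

up = scalef(3.0, 2.7)      # floor(2.7) = 2, so 3 * 4
down = scalef(1.0, -0.5)   # floor(-0.5) = -1, so 1 * 0.5
```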

| + | |||

| + | |- | ||