Revision as of 02:04, 12 June 2019

Neural Network Processors (NNP) are a family of neural processors designed by Intel Nervana for both inference and training.


Overview

Neural network processors (NNP) are a family of neural processors designed by Intel for the acceleration of artificial intelligence workloads. The design originated at Nervana before the company was acquired by Intel. Intel productized the chips starting with the second-generation designs.

The NNP family comprises two separate series - NNP-I for inference and NNP-L for training.

Learning (NNP-L)

Lake Crest

- Main article: Lake Crest µarch

The first generation of NNPs was based on the Lake Crest microarchitecture. Manufactured on TSMC's 28 nm process, these chips were never productized. Samples were used to gather customer feedback, and the design mostly served as a software development vehicle for its successor.

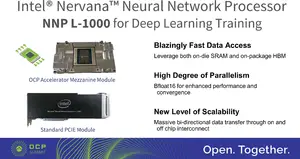

NNP L-1000 (Spring Crest)

- Main article: Spring Crest µarch

Second-generation NNP-Ls are branded as the NNP L-1000 series and are the first chips in the family to be productized. Fabricated on TSMC's 16 nm process and based on the Spring Crest microarchitecture, these chips feature a number of enhancements and refinements over the prior generation, including a shift from Flexpoint to Bfloat16. Intel claims that these chips deliver about 3-4x the training performance of the first generation. They come with 32 GiB of memory in four HBM2 stacks and are packaged in two form factors: a PCIe x16 Gen 3 card and an OCP Accelerator Module (OAM).
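To make the Flexpoint-to-Bfloat16 shift more concrete, the following is a minimal Python sketch of the two number formats in general; it is not Intel's hardware or software implementation. Bfloat16 keeps float32's sign bit and 8-bit exponent but only 7 explicit mantissa bits, so every value carries its own exponent. Flexpoint, as published by Nervana, instead stores a tensor as 16-bit integers that share a single exponent. The helper names (`to_bfloat16_bits`, `from_bfloat16_bits`, `flexpoint_quantize`, `flexpoint_dequantize`) are hypothetical, round-to-nearest-even is an assumed rounding mode, and the Flexpoint sketch ignores the limited width of the shared exponent and Nervana's scheme for managing it across training steps.

```python
import math
import struct


# --- Bfloat16: every value keeps its own 8-bit exponent ----------------------

def to_bfloat16_bits(x: float) -> int:
    """Round x to bfloat16 (assumed round-to-nearest-even), return 16-bit pattern.

    Bfloat16 is the upper half of an IEEE 754 float32: 1 sign bit, the same
    8 exponent bits, and the top 7 of float32's 23 mantissa bits.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # float32 bit pattern
    if math.isnan(x):
        # Force a quiet-NaN bit so rounding cannot turn a NaN into infinity.
        return (bits >> 16) | 0x0040
    lsb = (bits >> 16) & 1          # lowest bit that survives truncation
    bits += 0x7FFF + lsb            # round half to even on the dropped 16 bits
    return (bits >> 16) & 0xFFFF


def from_bfloat16_bits(b: int) -> float:
    """Widen a bfloat16 pattern to a float; exact, just zero-pad the mantissa."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


# --- Flexpoint-style: one shared exponent for a whole tensor (hypothetical) --

def flexpoint_quantize(values, mantissa_bits=16):
    """Encode values as signed ints times 2**exp, with exp shared by all values."""
    max_int = 2 ** (mantissa_bits - 1) - 1      # 32767 for 16-bit mantissas
    peak = max(abs(v) for v in values)
    if peak == 0:
        return [0] * len(values), 0
    # Smallest exponent that lets the largest magnitude fit in max_int.
    exp = math.ceil(math.log2(peak / max_int))
    ints = [round(v / 2.0 ** exp) for v in values]
    while max(abs(i) for i in ints) > max_int:  # guard against log2 rounding
        exp += 1
        ints = [round(v / 2.0 ** exp) for v in values]
    return ints, exp


def flexpoint_dequantize(ints, exp):
    """Decode shared-exponent integers back to floats."""
    return [i * 2.0 ** exp for i in ints]


if __name__ == "__main__":
    print(from_bfloat16_bits(to_bfloat16_bits(math.pi)))  # 3.140625
    ints, exp = flexpoint_quantize([1000.0, 0.001])
    print(ints, exp)                                      # [32000, 0] -5
    print(flexpoint_dequantize(ints, exp))                # [1000.0, 0.0]
```

In the demo, the shared exponent chosen for the peak value 1000.0 is too coarse for 0.001, which underflows to zero, while bfloat16 represents both values with a relative error of at most 2^-8. This illustrates a general trade-off between the formats: a per-value exponent tolerates a wide dynamic range within one tensor, whereas a shared exponent spends nearly all 16 bits on precision.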

Inference (NNP-I)

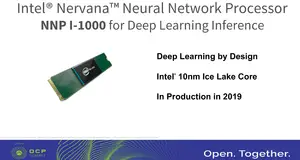

NNP I-1000 Series

- Main article: Spring Hill µarch

The NNP I-1000 series is Intel's first line of chips designed specifically to accelerate inference workloads. Fabricated on Intel's 10 nm process, these chips are based on the Spring Hill microarchitecture and incorporate Intel's Sunny Cove core. They come in an M.2 form factor.

See also

| Property | Value |
|---|---|
| designer | Intel |
| first announced | May 23, 2018 |
| first launched | 2019 |
| full page name | nervana/nnp |
| instance of | integrated circuit family |
| main designer | Intel |
| manufacturer | Intel and TSMC |
| name | NNP |
| package | PCIe x16 Gen 3 Card, OCP OAM and M.2 |
| process | 28 nm (0.028 μm, 2.8e-5 mm), 16 nm (0.016 μm, 1.6e-5 mm) and 10 nm (0.01 μm, 1.0e-5 mm) |
| technology | CMOS |