
| − | {{title|Summit (OLCF-4)}} | + | {{sc title|Summit (OLCF-4)}} |

| − | '''Summit''' ('''OLCF-4''') is a 200-petaFLOP [[supercomputer]] operating by the [[DoE]] [[Oak Ridge National Laboratory]]. Summit was officially unveiled on June 8, 2018 as the fastest supercomputer in the world, overtaking {{sc|Sunway TaihuLight}}. | + | {{supercomputer |

| + | |name=Summit | ||

| + | |logo=olcf-4 summit logo.png | ||

| + | |image=ornl summit front shot.jpg | ||

| + | |sponsor=U.S. Department of Energy | ||

| + | |designer=IBM | ||

| + | |designer 2=Nvidia | ||

| + | |operator=Oak Ridge National Laboratory | ||

| + | |introduction=June 8, 2018 | ||

| + | |peak dpflops=200 petaFLOPS | ||

| + | |price=$200,000,000 | ||

| + | |predecessor=Titan | ||

| + | |predecessor link=supercomputers/titan | ||

| + | |successor=Frontier | ||

| + | |successor link=supercomputers/frontier | ||

| + | }} | ||

| + | '''Summit''' ('''OLCF-4''') is {{\\|Titan|Titan's}} successor, a 200-petaFLOP [[supercomputer]] operated by the [[DoE]] [[Oak Ridge National Laboratory]]. Summit was officially unveiled on June 8, 2018 as the fastest supercomputer in the world, overtaking the {{sc|Sunway TaihuLight}}. Summit is expected to be succeeded by {{\\|Frontier}} in 2021. | ||

== History == | == History == | ||

| − | |||

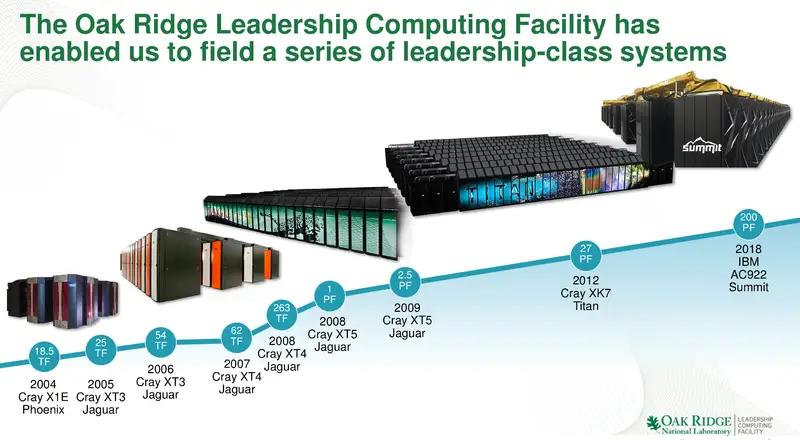

Summit is one of three systems as part of the Collaboration of Oak Ridge, Argonne, and Lawrence Livermore Labs (CORAL) procurement program. Research and planning started in [[2012]] with initial system delivery arriving in late [[2017]]. The full system arrived in early [[2018]] and the system was officially unveiled on June 8, 2018. Summit is estimated to have cost around $200 million as part of the CORAL procurement program. | Summit is one of three systems as part of the Collaboration of Oak Ridge, Argonne, and Lawrence Livermore Labs (CORAL) procurement program. Research and planning started in [[2012]] with initial system delivery arriving in late [[2017]]. The full system arrived in early [[2018]] and the system was officially unveiled on June 8, 2018. Summit is estimated to have cost around $200 million as part of the CORAL procurement program. | ||

| + | |||

| + | :[[File:ornl-summit-historical-roadmap.png|800px]] | ||

== Overview == | == Overview == | ||

Summit was designed to deliver 5-10x improvement in performance for real big science workload performance over {{\\|Titan}}. Compared to {{\\|Titan}} which had 18,688 nodes ([[AMD]] {{amd|Opteron}} + [[Nvidia]] {{nvidia|Kepler|l=arch}}) with a 9 MW power consumption, Summit slightly increased the power consumption to 13 MW, reduced the number of nodes to only 4,608, but tenfold the peak theoretical performance from 27 petaFLOPS to around 225 PF. | Summit was designed to deliver 5-10x improvement in performance for real big science workload performance over {{\\|Titan}}. Compared to {{\\|Titan}} which had 18,688 nodes ([[AMD]] {{amd|Opteron}} + [[Nvidia]] {{nvidia|Kepler|l=arch}}) with a 9 MW power consumption, Summit slightly increased the power consumption to 13 MW, reduced the number of nodes to only 4,608, but tenfold the peak theoretical performance from 27 petaFLOPS to around 225 PF. | ||

| + | Summit has over 200 [[petaFLOPS]] of theoretical compute power and over 3 AI exaFLOPS for AI workloads. | ||

| + | |||

| + | <table class="wikitable"> | ||

| + | <tr><th></th><th colspan="2">Components</th><th> </th><th> </th><th colspan="3">System</th></tr> | ||

| + | <tr><th>Processor</th><td>CPU</td><td>GPU</td><td> </td><th>Rack</th><td>Compute Racks</td><td>Storage Racks</td><td>Switch Racks</td></tr> | ||

| + | <tr><th>Type</th><td>{{ibm|POWER9|l=arch}}</td><td>{{nvidia|Volta|V100|l=arch}}</td><td> </td><th>Type</th><td>AC922</td><td>SSC (4 ESS GL4)</td><td>[[Mellanox]] [[IB EDR]]</td></tr> | ||

| + | <tr><th>Count</th><td>9,216<br>2 × 18 x 256</td><td>27,648<br>6 × 18 x 256</td><td> </td><th>Count</th><td>256 Racks × 18 Nodes</td><td>40 Racks × 8 Servers</td><td>18 Racks</td></tr> | ||

| + | <tr><th>Peak FLOPS</th><td>9.96 PF</td><td>215.7 PF</td><td> </td><th>Power</th><td>59 kW</td><td>38 kW</td><td></td></tr> | ||

| + | <tr><th>Peak AI FLOPS</th><td></td><td>3.456 EF</td><td> </td><td></td><td colspan="3" style="text-align: center;">13 MW (Total System)</td></tr> | ||

| + | </table> | ||

| + | |||

| + | Summit has over 10 [[petabytes]] of memory. | ||

| + | |||

| + | <table class="wikitable"> | ||

| + | <tr><th colspan="4">Summit Total Memory</th></tr> | ||

| + | <tr><th>Type</th><td>[[DDR4]]</td><td>[[HBM2]]</td><td>[[NVMe]]</td></tr> | ||

| + | <tr><th>Node</th><td>512 GiB</td><td>96 GiB</td><td>1.6 TB</td></tr> | ||

| + | <tr><th>Summit</th><td>2.25 PiB</td><td>432 TiB</td><td>7.37 PB</td></tr> | ||

| + | </table> | ||

| + | |||

| + | == Architecture == | ||

| + | === System === | ||

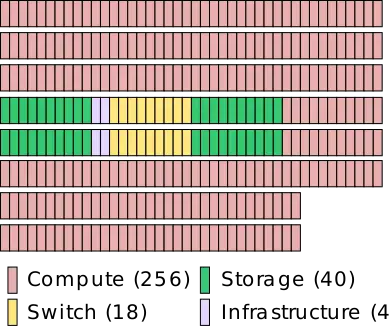

| + | Weighing over 340 tons, Summit takes up 5,600 sq. ft. of floor space at [[Oak Ridge National Laboratory]]. Summit consists of 256 compute racks, 40 storage racks, 18 switching director racks, and 4 infrastructure racks. Servers are linked via [[Mellanox]] [[IB EDR]] [[interconnect]] in a three-level non-blocking fat-tree topology. | ||

| + | |||

| + | :[[File:summit-floorplan.svg|390px]] | ||

| + | |||

| + | |||

| + | :[[File:ornl summit open rack.jpg|700px]] | ||

| + | |||

| + | === Compute Rack === | ||

| + | [[File:summit supercomputer compute rack.jpg|right|300px]] | ||

| + | Each of Summit's 256 Compute Racks consists of 18 Compute Nodes along with a Mellanox IB EDR for a non-blocking fat-tree interconnect topology (actually appears to be pruned 3-level fat-trees). With 18 nodes, each rack has 9 TiB of DDR4 memory and another 1.7 TiB of HBM2 memory for a total of 10.7 TiB of memory. A rack has a 59 kW max power and a total of 864 TF/s of peak compute power (ORNL reports 775 TF/s). | ||

| + | |||

| + | === Compute Node === | ||

| + | The basic compute node is the Power Systems AC922 (Accelerated Computing), formerly codenamed ''Witherspoon''. The AC922 comes in a 19-inch 2U rack-mount case. | ||

| + | |||

| + | :[[File:ornl-summit-node.png|800px]] | ||

| + | |||

| + | Each node has two 2200W power supplies, 4 PCIe Gen 4 slots, and a BMC card. There are two 22-core POWER9 processors per node, each with 8 DIMMs. For the Summit supercomputer, there are 8 32-GiB DDR4-2666 DIMMs for a total of 256 GiB and 170.7 GB/s of aggregated memory bandwidth per socket. There are three V100 GPUs per POWER9 socket. Those use the SXM2 form factor and come with 16 GiB of HBM2 memory for a total of 48 GiB of HBM2 and 2.7 TBps of aggregated bandwidth per socket. | ||

| + | |||

| + | :[[File:summit compute node (annotated).png|800px]] | ||

| + | |||

| + | ==== Socket ==== | ||

| + | Since [[IBM]] {{ibm|POWER9|l=arch}} processors have native on-die [[NVLink]] connectivity, they are connected directly to the CPUs. The {{ibm|POWER9|l=arch}} processor has six [[NVLink 2.0]] Bricks which are divided into three groups of two Bricks. Since [[NVLink 2.0]] has bumped the signaling rate to 25 GT/s, two Bricks allow for 100 GB/s of bandwidth between the CPU and GPU. In addition to everything else, there are x48 PCIe Gen 4 lanes for I/O. | ||

| + | |||

| + | The {{nvidia|Volta|l=arch}} GPUs have 6 [[NVLink 2.0]] Bricks which are divided into three groups. One group is used for the CPU while the other two groups interconnect every GPU to every other GPU. As with the GPU-CPU link, the aggregated bandwidth between two GPUs is also 100 GB/s. | ||

| + | |||

| + | :[[File:summit single-socket.svg|500px]] | ||

| + | |||

| + | |||

| + | <table class="wikitable"> | ||

| + | <tr><th colspan="5">Single-socket Capabilities</th></tr> | ||

| + | <tr><th>Processor</th><td>POWER9</td><td>V100</td></tr> | ||

| + | <tr><th>Count</th><td>1</td><td>3</td></tr> | ||

| + | <tr><th>FLOPS (SP)</th><td>1.081 TFLOPS<br>22 × 49.12 GFLOPs</td><td>47.1 TFLOPS<br>3 × 15.7 TFLOPs</td></tr> | ||

| + | <tr><th>FLOPS (DP)</th><td>540.3 GFLOPs<br>22 × 24.56 GFLOPs</td><td>23.4 TFLOPS<br>3 × 7.8 TFLOPs</td></tr> | ||

| + | <tr><th>AI FLOPS</th><td>-</td><td>375 TFLOPS<br>3 × 125 TFLOPs</td></tr> | ||

| + | <tr><th>Memory</th><td>256 GiB (DDR4)<br>8 × 32 GiB</td><td>48 GiB (HBM2)<br>3 × 16 GiB</td></tr> | ||

| + | <tr><th>Bandwidth</th><td>170.7 GB/s<br>8 × 21.33 GB/s</td><td>900 GB/s/GPU</td></tr> | ||

| + | </table> | ||

| + | |||

| + | ==== Full-node ==== | ||

| + | There are two sockets per node. Communication between the two {{ibm|POWER9|l=arch}} processors is done over IBM’s {{ibm|X Bus}}. The {{ibm|X Bus}} is a 4-byte 16 GT/s link providing 64 GB/s of bidirectional bandwidth. A node has four [[PCIe Gen 4.0]] slots consisting of two x16 (with [[CAPI]] support), a single x8 (also with CAPI support), and a single x4 slot. One of the x16 comes from one CPU, the other comes from the second. The x8 is configurable from either one of the CPUs and the last x4 slot comes from the second CPU only. The rest of the [[PCIe]] lanes used for various I/O applications (PEX, [[USB]], [[BMC]], and 1 Gbps [[Ethernet]]). | ||

| + | |||

| + | The node has a Mellanox InfiniBand ConnectX5 ([[IB EDR]]) [[network interface card|NIC]] installed which supports 100 Gbps of bi-directional traffic. This card sits on a [[PCIe Gen4]] x8 shared slot which directly connects x8 lanes to each of the two processors. With 12.5 GB/s per port (25 GB/s peak bandwidth) there is higher bandwidth of 16 GB/s per x8 lane (32 GB/s peak aggregated bandwidth) to the CPU. This enables each CPU to have direct access to the [[InfiniBand]] card, reducing bottlenecks with higher bandwidth. | ||

| + | |||

| + | :[[File:summit single-node.svg|700px]] | ||

| + | |||

| + | Each POWER9 processor operates at 3.07 GHz and supports simultaneous execution of two vector single-precision operations. In other words, each core can execute 16 single-precision floating-point operations per cycle. At 3.07 GHz, this works out to 49.12 gigaFLOPS of peak theoretical performance per core. A full node has a little under 1.1 teraFLOPS (DP) of peak performance from the CPUs and around 47 teraFLOPS (DP) from the GPUs. Note that there is a slight discrepancy between our numbers and ORNL’s. Buddy Bland, OLCF Program Director, informed us that their peak performance for Summit only include the GPU’s peak performance numbers because that’s what most of the FP-intensive code will use to achieve the highest performance. In theory, if we were to include everything, Summit actually has a higher peak performance of around ~220 petaFLOPS. | ||

| + | |||

| + | Each node also has a 1.6 TB NVMe flash adapter and a Mellanox InfiniBand EDR NIC. | ||

| + | |||

| + | <table class="wikitable"> | ||

| + | <tr><th colspan="5">Full Node Capabilities</th></tr> | ||

| + | <tr><th>Processor</th><td>POWER9</td><td>V100</td></tr> | ||

| + | <tr><th>Count</th><td>2</td><td>6</td></tr> | ||

| + | <tr><th>FLOPS (SP)</th><td>2.161 TFLOPS<br>2 × 22 × 49.12 GFLOPs</td><td>94.2 TFLOPs<br>6 × 15.7 TFLOPs</td></tr> | ||

| + | <tr><th>FLOPS (DP)</th><td>1.081 TFLOPS<br>2 × 22 × 24.56 GFLOPs</td><td>46.8 TFLOPS<br>6 × 7.8 TFLOPs</td></tr> | ||

| + | <tr><th>AI FLOPS</th><td>-</td><td>750 TFLOPS<br>6 × 125 TFLOPs</td></tr> | ||

| + | <tr><th>Memory</th><td>512 GiB (DDR4)<br>16 × 32 GiB</td><td>96 GiB (HBM2)<br>6 × 16 GiB</td></tr> | ||

| + | <tr><th>Bandwidth</th><td>341.33 GB/s<br>16 × 21.33 GB/s</td><td>900 GB/s/GPU</td></tr> | ||

| + | </table> | ||

| + | |||

| + | == Documents == | ||

| + | * [[:File:ornl summit node infographic.pdf|Summit Compute Node Infographic]] | ||

| + | |||

| + | == Bibliography == | ||

| + | * Schor, D (2018, June). ''[https://fuse.wikichip.org/news/1351/ornls-200-petaflops-summit-supercomputer-has-arrived-to-become-worlds-fastest/ ORNL’s 200-petaFLOPS Summit Supercomputer Has Arrived, To Become World’s Fastest]''. | ||

| + | * ORNL. (personal communication, 2018, June). | ||

| + | * ORNL. (2018, June). ''[https://www.olcf.ornl.gov/summit/ Summit - Oak Ridge National Laboratory]''. | ||

[[category:supercomputers]] | [[category:supercomputers]] | ||

Latest revision as of 20:39, 21 July 2019

| Summit | |
|---|---|
| General Info | |
| Sponsors | U.S. Department of Energy |
| Designers | IBM, Nvidia |
| Operators | Oak Ridge National Laboratory |
| Introduction | June 8, 2018 |
| Peak FLOPS | 200 petaFLOPS |
| Price | $200,000,000 |
| Succession | Predecessor: Titan; successor: Frontier |

Summit (OLCF-4) is Titan's successor, a 200-petaFLOPS supercomputer operated by the DoE's Oak Ridge National Laboratory. Summit was officially unveiled on June 8, 2018 as the fastest supercomputer in the world, overtaking the Sunway TaihuLight. Summit is expected to be succeeded by Frontier in 2021.

History

Summit is one of three systems procured under the Collaboration of Oak Ridge, Argonne, and Lawrence Livermore Labs (CORAL) program. Research and planning started in 2012, with initial system delivery in late 2017. The full system arrived in early 2018 and was officially unveiled on June 8, 2018. Summit is estimated to have cost around $200 million.

Overview

Summit was designed to deliver a 5-10x performance improvement over Titan on real big-science workloads. Titan had 18,688 nodes (AMD Opteron + Nvidia Kepler) and consumed 9 MW. Summit raises power consumption to 13 MW and cuts the node count to only 4,608, yet increases the peak theoretical performance roughly eightfold, from 27 petaFLOPS to around 225 petaFLOPS.

Summit has over 200 petaFLOPS of theoretical compute power and over 3 exaFLOPS of peak performance for AI workloads.

| | Components | | | | System | | |
|---|---|---|---|---|---|---|---|
| Processor | CPU | GPU | | Rack | Compute Racks | Storage Racks | Switch Racks |
| Type | POWER9 | V100 | | Type | AC922 | SSC (4 ESS GL4) | Mellanox IB EDR |
| Count | 9,216 (2 × 18 × 256) | 27,648 (6 × 18 × 256) | | Count | 256 racks × 18 nodes | 40 racks × 8 servers | 18 racks |
| Peak FLOPS | 9.96 PF (SP) | 215.7 PF (DP) | | Power | 59 kW | 38 kW | |
| Peak AI FLOPS | | 3.456 EF | | | 13 MW (total system) | | |

Summit has over 10 petabytes of memory.

| Summit Total Memory | | | |
|---|---|---|---|
| Type | DDR4 | HBM2 | NVMe |
| Node | 512 GiB | 96 GiB | 1.6 TB |
| Summit | 2.25 PiB (2.53 PB) | 432 TiB (475 TB) | 7.37 PB |
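The system-wide totals follow from multiplying the per-node capacities by Summit's 4,608 nodes. The short Python sketch below is illustrative only (the variable names are ours). It prints each total in both binary and decimal units, since GiB/TiB/PiB and GB/TB/PB are easy to conflate, and it assumes 1.6 TB of NVMe per node, the figure quoted for the node's flash adapter.

```python
# Per-node capacities multiplied out to the full system.
NODES = 4608                          # 256 compute racks x 18 nodes

GIB, TIB, PIB = 2**30, 2**40, 2**50   # binary units, in bytes
TB, PB = 10**12, 10**15               # decimal units, in bytes

ddr4_bytes = NODES * 512 * GIB        # 512 GiB of DDR4 per node
hbm2_bytes = NODES * 96 * GIB         # 96 GiB of HBM2 per node
nvme_bytes = NODES * 1600 * 10**9     # 1.6 TB of NVMe per node (assumed decimal TB)

total_bytes = ddr4_bytes + hbm2_bytes + nvme_bytes

print(f"DDR4:  {ddr4_bytes / PIB:.2f} PiB = {ddr4_bytes / PB:.2f} PB")
print(f"HBM2:  {hbm2_bytes / TIB:.0f} TiB = {hbm2_bytes / TB:.0f} TB")
print(f"NVMe:  {nvme_bytes / PB:.2f} PB")
print(f"Total: {total_bytes / PB:.2f} PB")
```

The combined total comes out a little above 10 PB, consistent with the "over 10 petabytes" figure.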

Architecture

System

Weighing over 340 tons, Summit takes up 5,600 sq. ft. of floor space at Oak Ridge National Laboratory. Summit consists of 256 compute racks, 40 storage racks, 18 switching director racks, and 4 infrastructure racks. Servers are linked via Mellanox IB EDR interconnect in a three-level non-blocking fat-tree topology.

Compute Rack

Each of Summit's 256 compute racks consists of 18 compute nodes along with Mellanox IB EDR switching for a non-blocking fat-tree interconnect topology (in practice, this appears to be a pruned three-level fat tree). With 18 nodes, each rack has 9 TiB of DDR4 memory and another 1.7 TiB of HBM2 memory, for a total of 10.7 TiB of memory. A rack has a maximum power draw of 59 kW and a total of 864 TF/s of peak compute power (ORNL reports 775 TF/s).
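As a rough check of the rack-level figures, the sketch below multiplies out the per-node values: 512 GiB of DDR4 and 96 GiB of HBM2 per node, plus the double-precision peaks listed for a full node (2 × 22 cores at 24.56 GFLOPS and 6 GPUs at 7.8 TFLOPS). The constant names are ours and the calculation is only illustrative.

```python
NODES_PER_RACK = 18

# Memory per node, in GiB
DDR4_GIB_PER_NODE = 512
HBM2_GIB_PER_NODE = 96

# Peak double-precision throughput per node, in TFLOPS
CPU_DP_TFLOPS_PER_NODE = 2 * 22 * 24.56 / 1000   # 2 sockets x 22 cores x 24.56 GFLOPS
GPU_DP_TFLOPS_PER_NODE = 6 * 7.8                 # 6 GPUs x 7.8 TFLOPS

rack_ddr4_tib = NODES_PER_RACK * DDR4_GIB_PER_NODE / 1024
rack_hbm2_tib = NODES_PER_RACK * HBM2_GIB_PER_NODE / 1024
rack_dp_tflops = NODES_PER_RACK * (CPU_DP_TFLOPS_PER_NODE + GPU_DP_TFLOPS_PER_NODE)

print(f"DDR4 per rack: {rack_ddr4_tib:.1f} TiB")
print(f"HBM2 per rack: {rack_hbm2_tib:.1f} TiB")
print(f"Peak DP per rack: {rack_dp_tflops:.0f} TFLOPS")
```

The compute result lands near 862 TFLOPS, slightly under the 864 TF/s quoted above. The quoted value would match a node figure rounded up to 48 TFLOPS, though that is our guess rather than something the source states.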

Compute Node

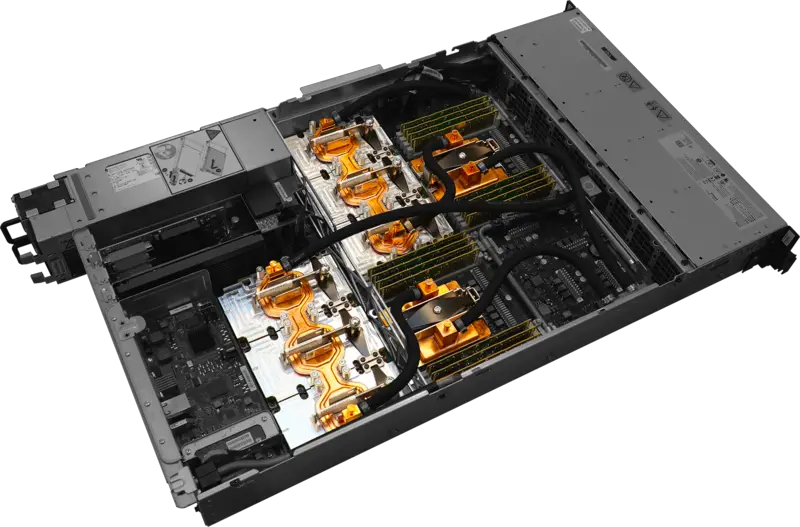

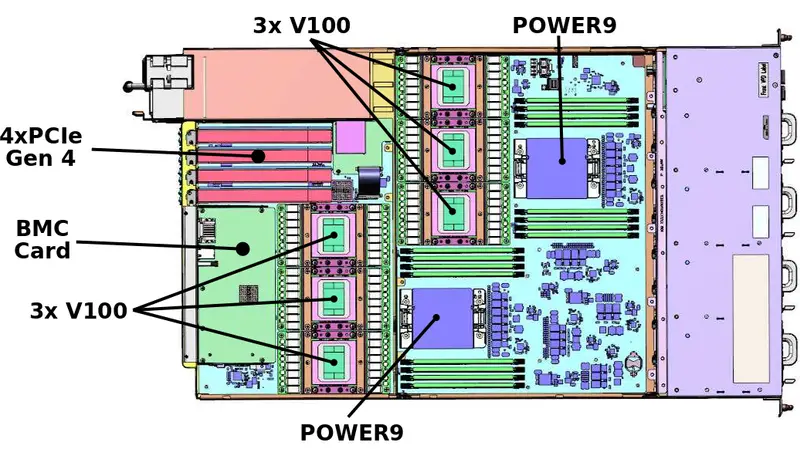

The basic compute node is the Power Systems AC922 (Accelerated Computing), formerly codenamed Witherspoon. The AC922 comes in a 19-inch 2U rack-mount case.

Each node has two 2200 W power supplies, four PCIe Gen 4 slots, and a BMC card. There are two 22-core POWER9 processors per node, each with eight DIMMs. On Summit, each socket is populated with eight 32 GiB DDR4-2666 DIMMs for a total of 256 GiB and 170.7 GB/s of aggregate memory bandwidth. There are three V100 GPUs per POWER9 socket. They use the SXM2 form factor and each comes with 16 GiB of HBM2 memory, for a total of 48 GiB of HBM2 and 2.7 TB/s of aggregate bandwidth per socket.

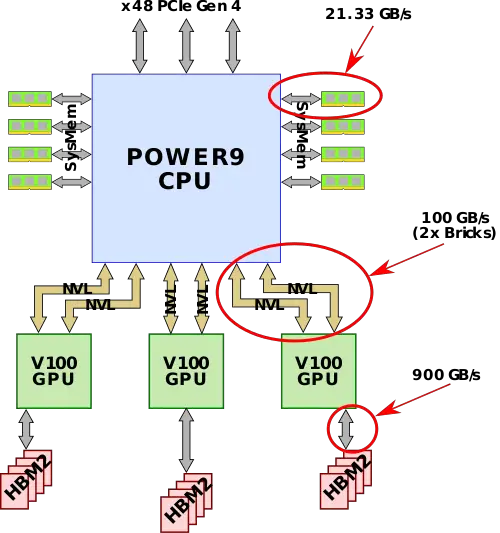

Socket

Because IBM POWER9 processors have native on-die NVLink connectivity, the GPUs are connected directly to the CPUs. Each POWER9 processor has six NVLink 2.0 Bricks, divided into three groups of two Bricks, one group per GPU. With NVLink 2.0 raising the signaling rate to 25 GT/s, two Bricks provide 100 GB/s of bandwidth between the CPU and each GPU. In addition, there are 48 PCIe Gen 4 lanes for I/O.

The Volta GPUs also have six NVLink 2.0 Bricks, divided into three groups. One group connects to the CPU, while the other two groups connect the GPU to each of the other two GPUs on the same socket. As with the GPU-CPU link, the aggregate bandwidth between two GPUs is 100 GB/s.
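The 100 GB/s figure can be reproduced from the signaling rate. The sketch below assumes each Brick carries 8 lanes per direction, which is not stated in the text above, and that the quoted figures are bidirectional. The function and constant names are ours.

```python
LANES_PER_BRICK = 8    # assumption: lanes per direction in one Brick
GBITS_PER_LANE = 25    # 25 GT/s signaling, one bit per transfer per lane

def link_bandwidth_gbs(bricks):
    """Return (per-direction, bidirectional) bandwidth in GB/s for a group of Bricks."""
    per_direction = bricks * LANES_PER_BRICK * GBITS_PER_LANE / 8  # bits -> bytes
    return per_direction, 2 * per_direction

one_way, both_ways = link_bandwidth_gbs(2)
print(f"Two Bricks: {one_way:.0f} GB/s per direction, {both_ways:.0f} GB/s bidirectional")

_, all_links = link_bandwidth_gbs(6)
print(f"All six Bricks on one device: {all_links:.0f} GB/s bidirectional")
```

Under these assumptions, a two-Brick group gives 50 GB/s in each direction, which matches the 100 GB/s quoted for both the CPU-GPU and GPU-GPU links.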

| Single-socket Capabilities | | |
|---|---|---|
| Processor | POWER9 | V100 |
| Count | 1 | 3 |
| FLOPS (SP) | 1.081 TFLOPS (22 × 49.12 GFLOPS) | 47.1 TFLOPS (3 × 15.7 TFLOPS) |
| FLOPS (DP) | 540.3 GFLOPS (22 × 24.56 GFLOPS) | 23.4 TFLOPS (3 × 7.8 TFLOPS) |
| AI FLOPS | - | 375 TFLOPS (3 × 125 TFLOPS) |
| Memory | 256 GiB (DDR4; 8 × 32 GiB) | 48 GiB (HBM2; 3 × 16 GiB) |
| Bandwidth | 170.7 GB/s (8 × 21.33 GB/s) | 900 GB/s per GPU |

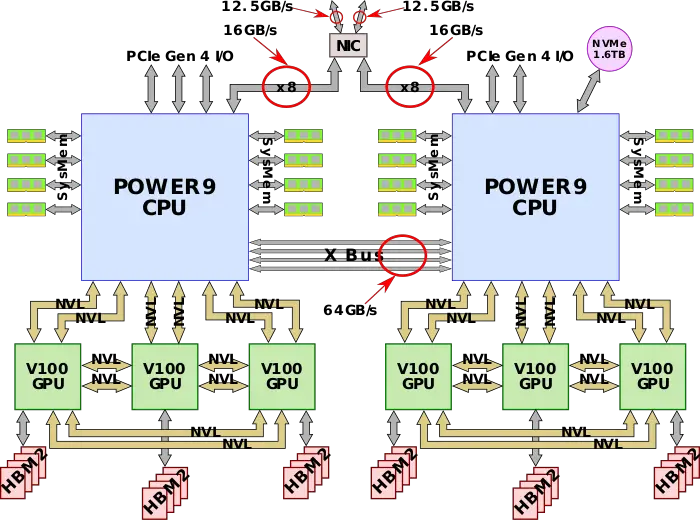

Full-node

There are two sockets per node. The two POWER9 processors communicate over IBM’s X Bus, a 4-byte, 16 GT/s link providing 64 GB/s of bidirectional bandwidth. A node has four PCIe Gen 4 slots: two x16 (with CAPI support), one x8 (also with CAPI support), and one x4. Each CPU drives one of the x16 slots. The x8 slot is configurable from either CPU, and the x4 slot comes from the second CPU only. The remaining PCIe lanes are used for various I/O functions (PEX, USB, BMC, and 1 Gbps Ethernet).

The node has a Mellanox InfiniBand ConnectX5 (IB EDR) NIC installed, which supports 100 Gbps of bidirectional traffic. The card sits in a shared PCIe Gen 4 x8 slot that connects eight lanes directly to each of the two processors. At 12.5 GB/s per port (25 GB/s peak), the network side stays below the 16 GB/s available on each x8 link (32 GB/s peak aggregate) to the CPUs. This gives each CPU direct access to the InfiniBand card and keeps the PCIe connection from becoming a bottleneck.
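The comparison between the NIC ports and the PCIe links can be sketched as below. This is an illustration under stated assumptions: the card is taken to have two EDR ports (implied by the 12.5 GB/s per port and 25 GB/s peak figures), and PCIe Gen 4 is modeled as 16 GT/s per lane with 128b/130b encoding. The function names are ours.

```python
def edr_port_gbs():
    """One IB EDR port: 100 Gb/s converted to GB/s."""
    return 100 / 8

def pcie_gen4_gbs(lanes, encoded=True):
    """Per-direction PCIe Gen 4 bandwidth in GB/s for a given lane count.

    With encoded=True, the 128b/130b line-encoding overhead is applied.
    """
    raw = 16 * lanes / 8          # 16 GT/s per lane, 8 bits per byte
    return raw * 128 / 130 if encoded else raw

PORTS = 2  # assumption: dual-port card

nic_peak = PORTS * edr_port_gbs()
pcie_per_cpu = pcie_gen4_gbs(8, encoded=False)
pcie_total = 2 * pcie_per_cpu

print(f"NIC: {edr_port_gbs():.1f} GB/s per port, {nic_peak:.1f} GB/s peak")
print(f"PCIe: {pcie_per_cpu:.1f} GB/s per x8 link, {pcie_total:.1f} GB/s aggregate")
print(f"PCIe x8 after 128b/130b encoding: {pcie_gen4_gbs(8):.2f} GB/s")
```

Even after encoding overhead, each x8 link retains more bandwidth than a single EDR port delivers, so the headroom described above still holds.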

Each POWER9 processor operates at 3.07 GHz, and each core supports simultaneous execution of two vector single-precision operations. In other words, each core can execute 16 single-precision floating-point operations per cycle. At 3.07 GHz, this works out to 49.12 gigaFLOPS of peak theoretical single-precision performance per core, or 24.56 gigaFLOPS in double precision. A full node has a little under 1.1 teraFLOPS (DP) of peak performance from the CPUs and around 47 teraFLOPS (DP) from the GPUs. Note that there is a slight discrepancy between our numbers and ORNL’s. Buddy Bland, OLCF Program Director, informed us that their peak performance figure for Summit includes only the GPUs’ peak performance, because that is what most FP-intensive code will use to achieve the highest performance. In theory, if everything is included, Summit has a higher peak performance of around 220 petaFLOPS.
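The chain from per-core throughput to the system-wide peak can be written out as a short calculation. The sketch below uses only figures given in this article (3.07 GHz, 16 SP operations per cycle, 22 cores per socket, 7.8 TFLOPS DP per V100, 4,608 nodes) and assumes double-precision CPU throughput is half the single-precision rate, as the capability tables indicate. The variable names are ours.

```python
CLOCK_GHZ = 3.07
SP_FLOPS_PER_CYCLE = 16      # per core
CORES_PER_SOCKET = 22
SOCKETS_PER_NODE = 2
GPUS_PER_NODE = 6
V100_DP_TFLOPS = 7.8
NODES = 4608

# Per-core peaks in GFLOPS
core_sp_gflops = CLOCK_GHZ * SP_FLOPS_PER_CYCLE
core_dp_gflops = core_sp_gflops / 2          # assumption: DP is half of SP

# Per-node double-precision peaks in TFLOPS
node_cpu_dp_tflops = SOCKETS_PER_NODE * CORES_PER_SOCKET * core_dp_gflops / 1000
node_gpu_dp_tflops = GPUS_PER_NODE * V100_DP_TFLOPS

# System-wide double-precision peaks in PFLOPS
system_cpu_pf = NODES * node_cpu_dp_tflops / 1000
system_gpu_pf = NODES * node_gpu_dp_tflops / 1000
system_total_pf = system_cpu_pf + system_gpu_pf

print(f"Core: {core_sp_gflops:.2f} GFLOPS SP, {core_dp_gflops:.2f} GFLOPS DP")
print(f"Node: {node_cpu_dp_tflops:.3f} TFLOPS (CPUs) + {node_gpu_dp_tflops:.1f} TFLOPS (GPUs)")
print(f"System: {system_cpu_pf:.2f} PF (CPUs) + {system_gpu_pf:.1f} PF (GPUs) "
      f"= {system_total_pf:.1f} PF")
```

The GPUs alone account for roughly 215.7 PF, and adding the CPUs' double-precision contribution brings the total to about 220 PF.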

Each node also has a 1.6 TB NVMe flash adapter and a Mellanox InfiniBand EDR NIC.

| Full Node Capabilities | | |
|---|---|---|
| Processor | POWER9 | V100 |
| Count | 2 | 6 |
| FLOPS (SP) | 2.161 TFLOPS (2 × 22 × 49.12 GFLOPS) | 94.2 TFLOPS (6 × 15.7 TFLOPS) |
| FLOPS (DP) | 1.081 TFLOPS (2 × 22 × 24.56 GFLOPS) | 46.8 TFLOPS (6 × 7.8 TFLOPS) |
| AI FLOPS | - | 750 TFLOPS (6 × 125 TFLOPS) |
| Memory | 512 GiB (DDR4; 16 × 32 GiB) | 96 GiB (HBM2; 6 × 16 GiB) |
| Bandwidth | 341.33 GB/s (16 × 21.33 GB/s) | 900 GB/s per GPU |

Documents

- Summit Compute Node Infographic

Bibliography

- Schor, D. (2018, June). ORNL’s 200-petaFLOPS Summit Supercomputer Has Arrived, To Become World’s Fastest.

- ORNL. (personal communication, 2018, June).

- ORNL. (2018, June). Summit - Oak Ridge National Laboratory.
