(→Scalable Data Fabric (SDF): added ogimage) |

(→IFOP) |

||

| (27 intermediate revisions by 9 users not shown) | |||

| Line 1: | Line 1: | ||

| − | {{amd title|Infinity Fabric (IF)}} | + | {{amd title|Infinity Fabric (IF)}}{{interconnect arch}} |

| − | '''Infinity Fabric''' ('''IF''') is a system [[interconnect architecture]] that facilitates data and control transmission | + | '''Infinity Fabric''' ('''IF''') is a proprietary system [[interconnect architecture]] that facilitates data and control transmission across all linked components. This architecture is utilized by [[AMD]]'s recent microarchitectures for both CPU (i.e., {{amd|Zen|l=arch}}) and graphics (e.g., {{amd|Vega|l=arch}}), and any other additional accelerators they might add in the future. The fabric was first announced and detailed in April 2017 by Mark Papermaster, AMD's SVP and CTO. |

== Overview == | == Overview == | ||

| + | [[File:amd infinity fabric.svg|left|250px]] | ||

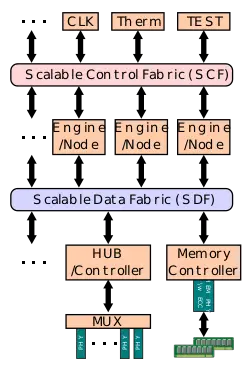

The Infinity Fabric consists of two separate communication planes - Infinity '''Scalable Data Fabric''' ('''SDF''') and the Infinity '''Scalable Control Fabric''' ('''SCF'''). The SDF is the primary means by which data flows around the system between endpoints (e.g. [[NUMA node]]s, [[PHY]]s). The SDF might have dozens of connecting points hooking together things such as [[PCIe]] PHYs, [[memory controller]]s, USB hub, and the various computing and execution units. The SDF is a [[superset]] of what was previously [[HyperTransport]]. The SCF is a complementary plane that handles the transmission of the many miscellaneous system control signals - this includes things such as thermal and power management, tests, security, and 3rd party IP. With those two planes, AMD can efficiently scale up many of the basic computing blocks. | The Infinity Fabric consists of two separate communication planes - Infinity '''Scalable Data Fabric''' ('''SDF''') and the Infinity '''Scalable Control Fabric''' ('''SCF'''). The SDF is the primary means by which data flows around the system between endpoints (e.g. [[NUMA node]]s, [[PHY]]s). The SDF might have dozens of connecting points hooking together things such as [[PCIe]] PHYs, [[memory controller]]s, USB hub, and the various computing and execution units. The SDF is a [[superset]] of what was previously [[HyperTransport]]. The SCF is a complementary plane that handles the transmission of the many miscellaneous system control signals - this includes things such as thermal and power management, tests, security, and 3rd party IP. With those two planes, AMD can efficiently scale up many of the basic computing blocks. | ||

| − | + | {{clear}} | |

== Scalable Data Fabric (SDF) == | == Scalable Data Fabric (SDF) == | ||

[[File:amd zeppelin sdf plane block.svg|class=wikichip_ogimage|400px|right]] | [[File:amd zeppelin sdf plane block.svg|class=wikichip_ogimage|400px|right]] | ||

| Line 11: | Line 12: | ||

In the case of AMD's processors based on the {{amd|Zeppelin}} SoC and the {{amd|Zen|Zen core|l=arch}}, the block diagram of the SDF is shown on the right. The two {{amd|CPU Complex|CCX's}} are directly connected to the SDF plane using the '''Cache-Coherent Master''' ('''CCM''') which provides the mechanism for coherent data transports between cores. There is also a single '''I/O Master/Slave''' (IOMS) interface for the I/O Hub communication. The Hub contains two [[PCIe]] controllers, a [[SATA]] controller, the [[USB]] controllers, [[Ethernet]] controller, and the [[southbridge]]. From an operational point of view, the IOMS and the CCMs are actually the only interfaces that are capable of making DRAM requests. | In the case of AMD's processors based on the {{amd|Zeppelin}} SoC and the {{amd|Zen|Zen core|l=arch}}, the block diagram of the SDF is shown on the right. The two {{amd|CPU Complex|CCX's}} are directly connected to the SDF plane using the '''Cache-Coherent Master''' ('''CCM''') which provides the mechanism for coherent data transports between cores. There is also a single '''I/O Master/Slave''' (IOMS) interface for the I/O Hub communication. The Hub contains two [[PCIe]] controllers, a [[SATA]] controller, the [[USB]] controllers, [[Ethernet]] controller, and the [[southbridge]]. From an operational point of view, the IOMS and the CCMs are actually the only interfaces that are capable of making DRAM requests. | ||

| − | The DRAM is attached to the DDR4 interface which is attached to the Unified Memory Controller (UMC). There are two Unified Memory Controllers (UMC) for each of the DDR channels which are also directly connected to the SDF. | + | The DRAM is attached to the DDR4 interface which is attached to the Unified Memory Controller (UMC). There are two Unified Memory Controllers (UMC) for each of the DDR channels which are also directly connected to the SDF. It's worth noting that all SDF components run at the DRAM's MEMCLK frequency. For example, a system using DDR4-2133 would have the entire SDF plane operating at 1066 MHz. This is a fundamental design choice made by AMD in order to eliminate clock-domain latency. |

| + | |||

| + | === CAKE === | ||

| + | The workhorse mechanism that interfaces between the SDF and the various SerDes that link both multiple [[dies]] together and multiple chips together is the CAKE. The '''Coherent AMD socKet Extender''' ('''CAKE''') module encodes local SDF requests onto 128-bit serialized packets each cycle and ships them over any SerDes interface. Responses are also decoded by the CAKE back to the SDF. As with everything else that is attached to the SDF, the CAKEs operate at DRAM’s MEMCLK frequency in order to eliminate clock-domain crossing latency. | ||

| + | |||

| + | === SerDes === | ||

| + | [[File:amd if-ifop-serdes.png|right|thumb|IFOP SerDes]] | ||

| + | The Infinity Scalable Data Fabric (SDF) employs two different types of [[SerDes]] links - '''Infinity Fabric On-Package''' ('''IFOP''') and '''Infinity Fabric InterSocket''' ('''IFIS'''). | ||

| + | |||

| + | ==== IFOP ==== | ||

| + | The '''Infinity Fabric On-Package''' ('''IFOP''') SerDes deal with die-to-die communication in the same package. AMD designed a fairly straightforward custom SerDes suitable for short in-package trace lengths which can achieve a power efficiency of roughly 2 pJ/b. This was done by using a 32-bit low-swing [[single-ended]] data transmission with differential clocking which consumes roughly half the power of an equivalent differential drive. They utilize a zero-power driver state from the TX/RX impedance termination to the ground while the driver pull-up is disabled. This allows transmitting zeros with less power than transmitting ones which is also leveraged when the link is idle. Additionally [[inversion encoding]] was used to save another 10% average power per bit. | ||

| + | |||

| + | Due to the performance sensitivity of the on-package links, the IFOP links are over-provisioned by about a factor of two relative to DDR4 channel bandwidth for mixed read/write traffic. They are bidirectional links and a CRC is transmitted along with every cycle of data. The IFOP SerDes do four transfers per CAKE clock. | ||

| + | |||

| + | |||

| + | :[[File:amd if ifop link example.svg|600px]] | ||

| + | |||

| + | |||

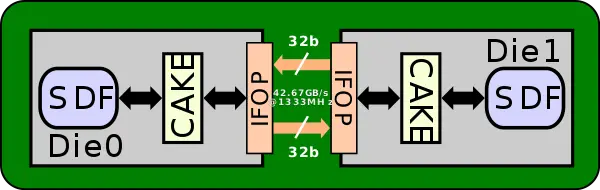

| + | Since the CAKEs operate at the same frequency as the DRAM's MEMCLK frequency, the bandwidth is fully dependent on that. For a system using DDR4-2666 DIMMs, this means the CAKEs will be operating at 1333.33 MHz meaning the IFOPs will have a bi-directional bandwidth of 42.667 GB/s (= 16B per clock per direction). | ||

| + | |||

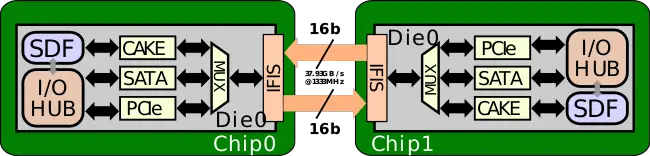

| + | ==== IFIS ==== | ||

| + | '''Infinity Fabric InterSocket''' ('''IFIS''') SerDes are the second type which are used for package-to-package communications such as in two-way [[multiprocessing]]. The IFIS were designed so they could [[multiplexed]] with other protocols such as [[PCIe]] and [[SATA]]. They operate on TX/RX 16 [[differential]] data lanes at roughly 11 pJ/b. Those links are also aligned with the [[package]] [[pinout]] of standard PCIe lanes. Because they are 16-bit wide they run at 8 transfers per CAKE clock. Compared to the IFOP, the IFIS links have 8/9 of the bandwidth. | ||

| − | == Scalable Control Fabric ( | + | |

| − | The Infinity Scalable Control Fabric (SCF) is the control communication plane of the Infinity Fabric. | + | :[[File:amd if ifis link example.svg|650px]] |

| + | |||

| + | |||

| + | For a system using DDR4-2666 DIMMs, the CAKEs will be operating at 1333.33 MHz meaning the IFIS will have a bidirectional bandwidth of 37.926 GB/s. | ||

| + | |||

| + | == Scalable Control Fabric (SCF) == | ||

| + | {{see also|amd/microarchitectures/zen#System_Management_Unit|l1=Zen § System Management Unit}} | ||

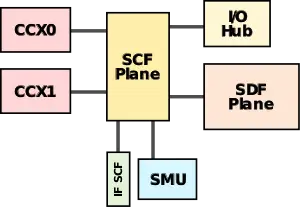

| + | [[File:zeppelin scf plane block.svg|right|300px]] | ||

| + | The Infinity '''Scalable Control Fabric''' ('''SCF''') is the control communication plane of the Infinity Fabric. The SCF connects the System Management Unit (SMU) to all the various components. The SCF has its own dedicated IFIS SerDes that allows the SCF of multiple chips within a system to talk to each other. The SCF also extends to the dies on a second socket in multi-way [[multiprocessing]] configurations. | ||

| + | |||

| + | {{clear}} | ||

| + | |||

| + | == Implementations == | ||

| + | === Zen === | ||

| + | {{main|amd/microarchitectures/zen|l1=Zen}} | ||

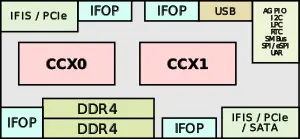

| + | The Zen cores are incorporated into AMD's {{amd|Zeppelin}} which is designed to scale from a single-die configuration all the way to a 4-die multi-chip package. Each Zeppelin consists of four IFOPs SerDes and two IFISs SerDes as described above. | ||

| + | |||

| + | :[[File:amd zeppelin basic block.svg|300px]] | ||

| + | |||

| + | |||

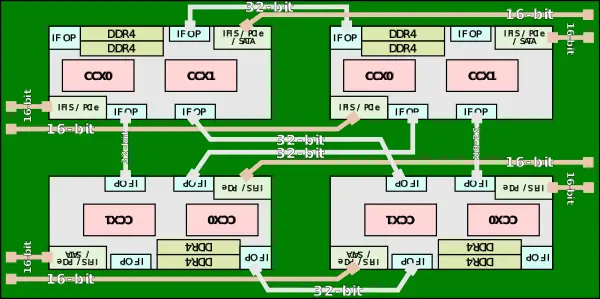

| + | In a four-die multi-chip package, such as in the case of {{amd|EPYC}}, two of the dies are rotated 180 degrees with each die being linked directly to all the other dies in the package. Assuming the system is using DDR4-2666 (i.e., DRAM's MEMCLK is 1333.33 MHz), each of the die-to-die links have a bandwidth of 42.667 GB/s and a total system bisectional bandwidth of 170.667 GB/s. | ||

| + | |||

| + | |||

| + | :[[File:amd zeppelin basic block 4-dies.svg|600px]] | ||

| + | |||

| + | == See also == | ||

| + | * [https://fuse.wikichip.org/news/1064/isscc-2018-amds-zeppelin-multi-chip-routing-and-packaging/ ISSCC 2018: AMD’s Zeppelin; Multi-chip routing and packaging], WikiChip Fuse | ||

== References == | == References == | ||

* AMD Infinity Fabric introduction by Mark Papermaster, April 6, 2017 | * AMD Infinity Fabric introduction by Mark Papermaster, April 6, 2017 | ||

* AMD EPYC Tech Day, June 20, 2017 | * AMD EPYC Tech Day, June 20, 2017 | ||

| − | * ISSCC 2018 | + | * IEEE ISSCC 2018 |

| + | |||

| + | [[category:amd]][[category:interconnect architectures]] | ||

Latest revision as of 20:50, 18 August 2020

Infinity Fabric (IF) is a proprietary system interconnect architecture that facilitates data and control transmission across all linked components. This architecture is utilized by AMD's recent microarchitectures for both CPU (i.e., Zen) and graphics (e.g., Vega), as well as any additional accelerators AMD might add in the future. The fabric was first announced and detailed in April 2017 by Mark Papermaster, AMD's SVP and CTO.

Overview

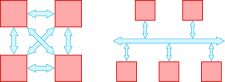

The Infinity Fabric consists of two separate communication planes: the Infinity Scalable Data Fabric (SDF) and the Infinity Scalable Control Fabric (SCF). The SDF is the primary means by which data flows around the system between endpoints (e.g. NUMA nodes, PHYs). The SDF might have dozens of connecting points hooking together things such as PCIe PHYs, memory controllers, USB hubs, and the various computing and execution units. The SDF is a superset of what was previously HyperTransport. The SCF is a complementary plane that handles the transmission of the many miscellaneous system control signals, including thermal and power management, tests, security, and third-party IP. With those two planes, AMD can efficiently scale up many of the basic computing blocks.

Scalable Data Fabric (SDF)

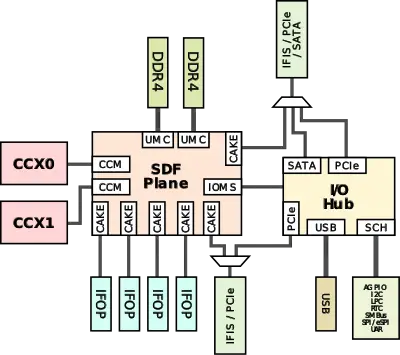

The Infinity Scalable Data Fabric (SDF) is the data communication plane of the Infinity Fabric. All data to and from the cores and the other peripherals (e.g. memory controller and I/O hub) is routed through the SDF. A key feature of the coherent data fabric is that it's not limited to a single die and can extend over multiple dies in an MCP as well as multiple sockets over PCIe links (possibly even across independent systems, although that's speculation). There's also no constraint on the topology of the nodes connected over the fabric: communication can be done directly node-to-node, by island-hopping in a bus topology, or as a mesh topology system.

In the case of AMD's processors based on the Zeppelin SoC and the Zen core, the block diagram of the SDF is shown on the right. The two CCXs are directly connected to the SDF plane using the Cache-Coherent Master (CCM), which provides the mechanism for coherent data transport between cores. There is also a single I/O Master/Slave (IOMS) interface for communication with the I/O Hub. The Hub contains two PCIe controllers, a SATA controller, the USB controllers, an Ethernet controller, and the southbridge. From an operational point of view, the IOMS and the CCMs are the only interfaces capable of making DRAM requests.

The DRAM is attached to the DDR4 interface, which is in turn attached to a Unified Memory Controller (UMC). There are two UMCs, one for each DDR channel, and both are directly connected to the SDF. All SDF components run at the DRAM's MEMCLK frequency. For example, a system using DDR4-2133 would have the entire SDF plane operating at 1066 MHz. This is a fundamental design choice made by AMD in order to eliminate clock-domain crossing latency.
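The relationship between the DDR4 speed grade and the SDF clock can be sketched in a few lines of Python. This is only an illustration of the arithmetic in the paragraph above; the function name `memclk_mhz` is hypothetical, and the only assumption is that MEMCLK is half the DDR transfer rate, since DDR moves data on both clock edges.

```python
def memclk_mhz(ddr_rate_mts):
    """Return MEMCLK in MHz for a DDR data rate given in MT/s.

    DDR memory transfers data on both edges of the clock, so the
    clock frequency is half of the transfer rate.
    """
    return ddr_rate_mts / 2.0


# The SDF plane (and everything attached to it) follows MEMCLK.
for rate in (2133, 2400, 2666):
    print(f"DDR4-{rate}: SDF clock is roughly {memclk_mhz(rate):.1f} MHz")
```

Note that speed grades are rounded names: DDR4-2666 is nominally 2666.67 MT/s, which is why the text quotes 1333.33 MHz for it.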

CAKE

The CAKE is the workhorse mechanism that interfaces between the SDF and the various SerDes that link multiple dies together and multiple chips together. The Coherent AMD socKet Extender (CAKE) module encodes local SDF requests onto 128-bit serialized packets each cycle and ships them over any SerDes interface. Responses are likewise decoded by the CAKE back onto the SDF. As with everything else attached to the SDF, the CAKEs operate at the DRAM's MEMCLK frequency in order to eliminate clock-domain crossing latency.

SerDes

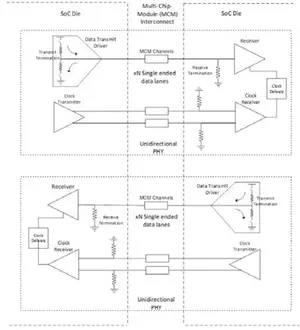

The Infinity Scalable Data Fabric (SDF) employs two different types of SerDes links: Infinity Fabric On-Package (IFOP) and Infinity Fabric InterSocket (IFIS).

IFOP

The Infinity Fabric On-Package (IFOP) SerDes deal with die-to-die communication within the same package. AMD designed a fairly straightforward custom SerDes suited to short in-package trace lengths, which achieves a power efficiency of roughly 2 pJ/b. This was done by using 32-bit low-swing single-ended data transmission with differential clocking, which consumes roughly half the power of an equivalent differential drive. The links utilize a zero-power driver state from the TX/RX impedance termination to ground while the driver pull-up is disabled. This allows zeros to be transmitted with less power than ones, which is also leveraged when the link is idle. Additionally, inversion encoding was used to save another 10% average power per bit.
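To illustrate why inversion encoding helps when zeros are cheaper to transmit than ones, here is a minimal sketch of a generic bus-invert scheme. It is an assumption for illustration only: the source does not describe AMD's actual encoding, and the names `encode`, `decode`, and the single per-word invert flag are hypothetical. The idea is that any 32-bit word with more ones than zeros is sent complemented, along with a flag telling the receiver to flip it back.

```python
WIDTH = 32                    # data width of the link, per the text
MASK = (1 << WIDTH) - 1


def encode(word):
    """Return (payload, invert_flag) with at most WIDTH // 2 ones in payload.

    Generic bus-invert coding (illustrative, not AMD's documented scheme):
    if the word has more ones than zeros, send its complement instead.
    """
    word &= MASK
    ones = bin(word).count("1")
    if ones > WIDTH // 2:
        return (~word) & MASK, 1
    return word, 0


def decode(payload, invert_flag):
    """Recover the original word from the payload and the invert flag."""
    payload &= MASK
    return (~payload) & MASK if invert_flag else payload


# Round-trip check on a few sample words.
for sample in (0x00000000, 0xFFFFFFFF, 0x0000FFFF, 0xF0F0F0FF):
    payload, flag = encode(sample)
    assert decode(payload, flag) == sample
    print(f"{sample:#010x} -> payload {payload:#010x}, invert={flag}")
```

With this kind of scheme the worst case drops from 32 ones per word to 16 ones plus the flag bit, which is the sort of saving that matters when a one costs more energy than a zero.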

Due to the performance sensitivity of the on-package links, the IFOP links are over-provisioned by about a factor of two relative to DDR4 channel bandwidth for mixed read/write traffic. They are bidirectional links and a CRC is transmitted along with every cycle of data. The IFOP SerDes do four transfers per CAKE clock.

Since the CAKEs operate at the DRAM's MEMCLK frequency, the link bandwidth depends entirely on the memory speed. With four 32-bit transfers per CAKE clock, each IFOP link moves 16 B per clock per direction. For a system using DDR4-2666 DIMMs, the CAKEs operate at 1333.33 MHz, giving the IFOPs a bidirectional bandwidth of 42.667 GB/s.
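The IFOP figure above follows directly from the link width, the number of transfers per CAKE clock, and MEMCLK. The short Python sketch below reproduces that arithmetic; the function name and parameter names are hypothetical, and the defaults are the values quoted in the text.

```python
def ifop_bandwidth_gbs(memclk_mhz, width_bits=32, transfers_per_clock=4):
    """Return (per_direction, bidirectional) IFOP bandwidth in GB/s.

    width_bits and transfers_per_clock default to the values given in
    the text: a 32-bit link doing four transfers per CAKE clock.
    """
    bytes_per_clock = width_bits * transfers_per_clock / 8   # 16 B
    per_direction = bytes_per_clock * memclk_mhz / 1000.0    # MB/s -> GB/s
    return per_direction, 2 * per_direction


# DDR4-2666: MEMCLK is 1333.33 MHz (4000/3 exactly).
one_way, both_ways = ifop_bandwidth_gbs(4000 / 3)
print(f"per direction: {one_way:.3f} GB/s, bidirectional: {both_ways:.3f} GB/s")
```

Passing a different MEMCLK shows how directly the link bandwidth scales with memory speed.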

IFIS

Infinity Fabric InterSocket (IFIS) SerDes are the second type, used for package-to-package communication such as in two-way multiprocessing. The IFIS were designed so that they could be multiplexed with other protocols such as PCIe and SATA. They operate on 16 differential data lanes in each direction (TX/RX) at roughly 11 pJ/b. Those links are also aligned with the package pinout of standard PCIe lanes. Because they are 16 bits wide, they run at 8 transfers per CAKE clock. Compared to the IFOP, the IFIS links have 8/9 of the bandwidth.

For a system using DDR4-2666 DIMMs, the CAKEs will be operating at 1333.33 MHz meaning the IFIS will have a bidirectional bandwidth of 37.926 GB/s.
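The IFIS number can be checked the same way. In the sketch below, 16 lanes at 8 transfers per CAKE clock give the same raw 16 B per clock as an IFOP link, and the 8/9 ratio stated in the text is applied as a plain scaling factor. The source does not spell out where the 8/9 comes from, so the `ratio` parameter is simply the quoted figure, and the function name is hypothetical.

```python
def ifis_bandwidth_gbs(memclk_mhz, lanes=16, transfers_per_clock=8, ratio=8 / 9):
    """Return the bidirectional IFIS bandwidth in GB/s.

    lanes and transfers_per_clock are the values from the text; ratio is
    the stated IFIS-to-IFOP bandwidth ratio, applied here as-is.
    """
    raw_bytes_per_clock = lanes * transfers_per_clock / 8        # 16 B
    raw_bidirectional = 2 * raw_bytes_per_clock * memclk_mhz / 1000.0
    return raw_bidirectional * ratio


# DDR4-2666: MEMCLK is 1333.33 MHz (4000/3 exactly).
print(f"IFIS bidirectional: {ifis_bandwidth_gbs(4000 / 3):.3f} GB/s")
```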

Scalable Control Fabric (SCF)

- See also: Zen § System Management Unit

The Infinity Scalable Control Fabric (SCF) is the control communication plane of the Infinity Fabric. The SCF connects the System Management Unit (SMU) to all the various components. The SCF has its own dedicated IFIS SerDes that allows the SCF of multiple chips within a system to talk to each other. The SCF also extends to the dies on a second socket in multi-way multiprocessing configurations.

Implementations

Zen

- Main article: Zen

The Zen cores are incorporated into AMD's Zeppelin SoC, which is designed to scale from a single-die configuration all the way to a 4-die multi-chip package. Each Zeppelin includes four IFOP SerDes and two IFIS SerDes, as described above.

In a four-die multi-chip package, such as in the case of EPYC, two of the dies are rotated 180 degrees, with each die linked directly to all the other dies in the package. Assuming the system is using DDR4-2666 (i.e., the DRAM's MEMCLK is 1333.33 MHz), each die-to-die link has a bandwidth of 42.667 GB/s, for a total system bisection bandwidth of 170.667 GB/s.
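The bisection figure can be reproduced by counting the links that cross a cut splitting the package into two halves. The sketch below models the four dies as a fully connected graph (as the text describes), enumerates every even split, and reports the smallest crossing bandwidth. The function name is hypothetical and the per-link bandwidth is the DDR4-2666 figure from the text.

```python
from itertools import combinations


def bisection_bandwidth_gbs(num_dies, link_gbs):
    """Return the bisection bandwidth of a fully connected set of dies.

    Every pair of dies is assumed to share one link of link_gbs GB/s.
    The result is the minimum total bandwidth crossing any cut that
    splits the dies into two equal halves.
    """
    dies = range(num_dies)
    links = list(combinations(dies, 2))       # one link per die pair
    best = None
    for half in combinations(dies, num_dies // 2):
        side = set(half)
        crossing = sum(1 for a, b in links if (a in side) != (b in side))
        bandwidth = crossing * link_gbs
        best = bandwidth if best is None else min(best, bandwidth)
    return best


# Four dies, 42.667 GB/s per IFOP link (128/3 GB/s exactly at DDR4-2666).
print(f"{bisection_bandwidth_gbs(4, 128 / 3):.3f} GB/s")
```

For four fully connected dies there are six links in total, and any even split cuts four of them, which is where the 4 x 42.667 GB/s total comes from.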

See also

- ISSCC 2018: AMD’s Zeppelin; Multi-chip routing and packaging, WikiChip Fuse

References

- AMD Infinity Fabric introduction by Mark Papermaster, April 6, 2017

- AMD EPYC Tech Day, June 20, 2017

- IEEE ISSCC 2018