| (23 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| − | {{nervana title|NNP}} | + | {{nervana title|Neural Network Processors (NNP)}} |

{{ic family | {{ic family | ||

| title = NNP | | title = NNP | ||

| Line 29: | Line 29: | ||

| predecessor = | | predecessor = | ||

| predecessor link = | | predecessor link = | ||

| − | | successor = | + | | successor = Habana HL-Series |

| − | | successor link = | + | | successor link = habana/hl |

}} | }} | ||

| − | '''Neural Network Processors''' ('''NNP''') | + | '''Neural Network Processors''' ('''NNP''') is a family of [[neural processors]] designed by [[Intel Nervana]] for both [[inference]] and [[training]]. |

| + | |||

| + | The NNP family was discontinued on January 31, 2020, in favor of the [[Habana]] {{habana|HL}} series. | ||

== Overview == | == Overview == | ||

| − | Neural network processors (NNP) | + | Neural network processors (NNP) is a family of [[neural processors]] designed by [[Intel]] for the [[acceleration]] of [[artificial intelligence]] workloads. The name and original architecture originated with the [[Nervana]] startup prior to its acquisition by [[Intel]] in [[2016]]. Although the first product was announced in 2017, it never made it past customer sampling and ultimately served as a learning product. Intel productized the chips starting with its second-generation designs in late 2019. |

| + | |||

| + | The NNP family comprises two separate series: '''NNP-I''' for [[inference]] and '''NNP-T''' for [[training]]. The two series use entirely different architectures. The training chips are direct descendants of Nervana's original ASIC design. They come in PCIe and [[OCP OAM|OAM]] form factors with high TDPs, designed for maximum performance in the data center and in workstations. The NNP-I inference chips, by contrast, are the product of Intel IDC and are architecturally very different from the training chips. They use Intel's low-power client SoC as the base SoC and build the AI architecture on top of it. The inference chips use low-power PCIe, M.2, and ruler form factors designed for servers, workstations, and embedded applications. | ||

| + | |||

| + | On January 31, 2020, Intel announced that it had discontinued the Nervana NNP product line in favor of the unified architecture it had acquired from [[Habana Labs]] a month earlier. | ||

| + | |||

| + | === Codenames === | ||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! Introduction || Type || Microarchitecture || Process | ||

| + | |- | ||

| + | | 2017<sup>1</sup> || [[Training]] || {{nervana|Lake Crest|l=arch}} || [[TSMC 28 nm|28 nm]] | ||

| + | |- | ||

| + | | 2019 || Training || {{nervana|Spring Crest|l=arch}} || [[TSMC 16 nm|16 nm]] | ||

| + | |- | ||

| + | | 2019 || [[Inference]] || {{nervana|Spring Hill|l=arch}} || [[Intel 10 nm|10 nm]] | ||

| + | |- style="text-decoration:line-through" | ||

| + | | 2020 || Training+CPU || {{nervana|Knights Crest|l=arch}} || ? | ||

| + | |} | ||

| − | + | 1 - Only sampled | |

| − | == | + | == Training (NNP-T) == |

=== Lake Crest === | === Lake Crest === | ||

{{main|nervana/microarchitectures/lake_crest|l1=Lake Crest µarch}} | {{main|nervana/microarchitectures/lake_crest|l1=Lake Crest µarch}} | ||

The first generation of NNPs were based on the {{nervana|Lake Crest|Lake Crest microarchitecture|l=arch}}. Manufactured on [[TSMC]]'s [[28 nm process]], those chips were never productized. Samples were used for customer feedback and the design mostly served as a software development vehicle for their follow-up design. | The first generation of NNPs were based on the {{nervana|Lake Crest|Lake Crest microarchitecture|l=arch}}. Manufactured on [[TSMC]]'s [[28 nm process]], those chips were never productized. Samples were used for customer feedback and the design mostly served as a software development vehicle for their follow-up design. | ||

| − | === | + | === T-1000 Series (Spring Crest) === |

| + | [[File:nnp-l-1000 announcement.png|thumb|right|NNP T-1000]] | ||

{{main|nervana/microarchitectures/spring_crest|l1=Spring Crest µarch}} | {{main|nervana/microarchitectures/spring_crest|l1=Spring Crest µarch}} | ||

| − | + | Launched in late 2019, the second-generation NNP-Ts are branded as the NNP T-1000 series and are the first chips to be productized. Fabricated on [[TSMC]]'s [[16 nm process]] and based on the {{nervana|Spring Crest|Spring Crest microarchitecture|l=arch}}, those chips feature a number of enhancements and refinements over the prior generation, including a shift from [[Flexpoint]] to [[Bfloat16]] and a considerable performance uplift. Intel claims that these chips have about 3-4x the training performance of the first generation. All NNP-T 1000 chips come with 32 GiB of [[HBM2]] in four stacks in a [[CoWoS]] package and come in two form factors: a [[PCIe Gen 3]] card and an [[OCP OAM]] [[accelerator card]]. |

| + | [[File:spring_crest_ocp_board_(front).png|right|thumb|NNP-T 1400 [[OAM Module]].]] | ||

| + | |||

| + | * '''Proc''' [[16 nm process]] | ||

| + | * '''Mem''' 32 GiB, HBM2-2400 | ||

| + | * '''TDP''' 300-400 W (150-250 W typical power) | ||

| + | * '''Perf''' 108 TOPS ([[bfloat16]]) | ||

| + | |||

| + | <!-- NOTE: | ||

| + | This table is generated automatically from the data in the actual articles. | ||

| + | If a microprocessor is missing from the list, an appropriate article for it needs to be | ||

| + | created and tagged accordingly. | ||

| + | |||

| + | Missing a chip? please dump its name here: https://en.wikichip.org/wiki/WikiChip:wanted_chips | ||

| + | --> | ||

| + | {{comp table start}} | ||

| + | <table class="comptable sortable tc4"> | ||

| + | {{comp table header|main|7:List of NNP-T 1000-based Processors}} | ||

| + | {{comp table header|main|5:Main processor|1:Performance}} | ||

| + | {{comp table header|cols|Launched|TDP|EUs|Frequency|[[HBM2]]|Peak Perf ([[bfloat16]])}} | ||

| + | {{#ask: [[Category:microprocessor models by intel]] [[microarchitecture::Spring Crest]] | ||

| + | |?full page name | ||

| + | |?model number | ||

| + | |?first launched | ||

| + | |?tdp | ||

| + | |?core count | ||

| + | |?base frequency#MHz | ||

| + | |?max memory#GiB | ||

| + | |?peak flops (half-precision)#TFLOPS | ||

| + | |format=template | ||

| + | |template=proc table 3 | ||

| + | |userparam=8 | ||

| + | |mainlabel=- | ||

| + | }} | ||

| + | {{comp table count|ask=[[Category:microprocessor models by intel]] [[microarchitecture::Spring Crest]]}} | ||

| + | </table> | ||

| + | {{comp table end}} | ||

| + | |||

| + | ==== POD Reference Design ==== | ||

| + | [[File:ai hw summit supermicro ref pod rack.jpeg|right|thumb|POD Rack]] | ||

| + | Along with the launch of the NNP-T 1000 series, Intel also introduced the POD reference design. The design targets large scale-out systems for processing very large neural networks. The POD reference design features 10 racks with 6 nodes per rack. Each node features eight interconnected OAM cards, for a total of 480 NNP-Ts per system. | ||

| − | + | :[[File:ai hw summit supermicro ref pod.jpeg|500px]] | |

== Inference (NNP-I) == | == Inference (NNP-I) == | ||

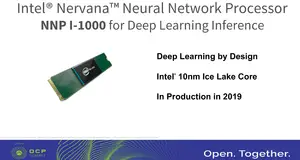

| − | === | + | === I-1000 Series (Spring Hill) === |

| − | {{main| | + | [[File:nnp-i-1000.png|right|thumb|NNP I-1000]] |

| − | The | + | {{main|intel/microarchitectures/spring_hill|l1=Spring Hill µarch}} |

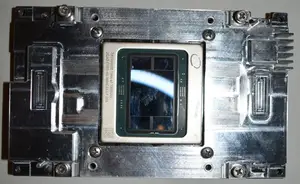

| + | The NNP I-1000 series is Intel's first series of devices designed specifically for the [[acceleration]] of inference workloads. Fabricated on [[Intel's 10 nm process]], these chips are based on {{nervana|Spring Hill|l=arch}} and incorporate a {{intel|Sunny Cove|Sunny Cove core|l=arch}} along with twelve specialized inference acceleration engines. The overall SoC design borrows a considerable amount of IP from {{intel|Ice Lake (Client)|Ice Lake|l=arch}}. Those devices come in [[M.2]] and PCIe form factors. | ||

| + | [[File:nnp-i ruler.jpg|right|thumb|NNP-I Ruler]] | ||

| + | [[File:supermicro nnp-i chassis.jpg|thumb|right|NNP-I Ruler Chassis.]] | ||

| + | |||

| + | * '''Proc''' [[10 nm process]] | ||

| + | * '''Mem''' 4x32b LPDDR4x-4200 | ||

| + | * '''TDP''' 10-50 W | ||

| + | * '''Eff''' 2.0-4.8 TOPS/W | ||

| + | * '''Perf''' 48-92 TOPS (Int8) | ||

| + | <!-- NOTE: | ||

| + | This table is generated automatically from the data in the actual articles. | ||

| + | If a microprocessor is missing from the list, an appropriate article for it needs to be | ||

| + | created and tagged accordingly. | ||

| + | |||

| + | Missing a chip? please dump its name here: https://en.wikichip.org/wiki/WikiChip:wanted_chips | ||

| + | --> | ||

| + | {{comp table start}} | ||

| + | <table class="comptable sortable tc4"> | ||

| + | {{comp table header|main|5:List of NNP-I-1000-based Processors}} | ||

| + | {{comp table header|main|3:Main processor|1:Performance}} | ||

| + | {{comp table header|cols|Launched|TDP|EUs|Peak Perf (Int8)}} | ||

| + | {{#ask: [[Category:microprocessor models by intel]] [[microarchitecture::Spring Hill]] | ||

| + | |?full page name | ||

| + | |?model number | ||

| + | |?first launched | ||

| + | |?tdp | ||

| + | |?core count | ||

| + | |?peak integer ops (8-bit)#TOPS | ||

| + | |format=template | ||

| + | |template=proc table 3 | ||

| + | |userparam=6 | ||

| + | |mainlabel=- | ||

| + | }} | ||

| + | {{comp table count|ask=[[Category:microprocessor models by intel]] [[microarchitecture::Spring Hill]]}} | ||

| + | </table> | ||

| + | {{comp table end}} | ||

| − | + | Intel also announced the NNP-I in an [[EDSFF]] (ruler) form factor designed to provide the highest possible compute density for inference. Intel did not announce specific models. The rulers were planned to come with a TDP range of 10-35 W. 32 NNP-Is in the ruler form factor can be packed into a single 1U rack. |

== See also == | == See also == | ||

| + | * [[neural processor]] | ||

* {{intel|DL Boost}} | * {{intel|DL Boost}} | ||

| + | * {{habana|HL Series}} | ||

Latest revision as of 11:42, 1 February 2020

| NNP | |

| |

| Developer | Intel |

| Manufacturer | Intel, TSMC |

| Type | Neural Processors |

| Introduction | May 23, 2018 (announced) 2019 (launch) |

| Process | 28 nm, 16 nm, 10 nm |

| Technology | CMOS |

| Package | PCIe x16 Gen 3 Card, OCP OAM, M.2 |

| Successor | Habana HL-Series |

Neural Network Processors (NNP) is a family of neural processors designed by Intel Nervana for both inference and training.

The NNP family was discontinued on January 31, 2020, in favor of the Habana HL series.


Overview

Neural network processors (NNP) is a family of neural processors designed by Intel for the acceleration of artificial intelligence workloads. The name and original architecture originated with the Nervana startup prior to its acquisition by Intel in 2016. Although the first product was announced in 2017, it never made it past customer sampling and ultimately served as a learning product. Intel productized the chips starting with its second-generation designs in late 2019.

The NNP family comprises two separate series: NNP-I for inference and NNP-T for training. The two series use entirely different architectures. The training chips are direct descendants of Nervana's original ASIC design. They come in PCIe and OAM form factors with high TDPs, designed for maximum performance in the data center and in workstations. The NNP-I inference chips, by contrast, are the product of Intel IDC and are architecturally very different from the training chips. They use Intel's low-power client SoC as the base SoC and build the AI architecture on top of it. The inference chips use low-power PCIe, M.2, and ruler form factors designed for servers, workstations, and embedded applications.

On January 31, 2020, Intel announced that it had discontinued the Nervana NNP product line in favor of the unified architecture it had acquired from Habana Labs a month earlier.

Codenames

| Introduction | Type | Microarchitecture | Process |
|---|---|---|---|
| 2017¹ | Training | Lake Crest | 28 nm |
| 2019 | Training | Spring Crest | 16 nm |
| 2019 | Inference | Spring Hill | 10 nm |
| 2020 | Training+CPU | Knights Crest | ? |

¹ Only sampled

Training (NNP-T)

Lake Crest

- Main article: Lake Crest µarch

The first generation of NNPs were based on the Lake Crest microarchitecture. Manufactured on TSMC's 28 nm process, those chips were never productized. Samples were used for customer feedback and the design mostly served as a software development vehicle for their follow-up design.

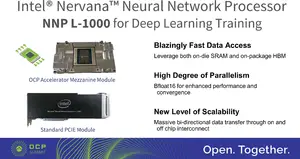

T-1000 Series (Spring Crest)

- Main article: Spring Crest µarch

Launched in late 2019, the second-generation NNP-Ts are branded as the NNP T-1000 series and are the first chips to be productized. Fabricated on TSMC's 16 nm process and based on the Spring Crest microarchitecture, those chips feature a number of enhancements and refinements over the prior generation, including a shift from Flexpoint to Bfloat16 and a considerable performance uplift. Intel claims that these chips have about 3-4x the training performance of the first generation. All NNP-T 1000 chips come with 32 GiB of HBM2 in four stacks in a CoWoS package and come in two form factors: a PCIe Gen 3 card and an OCP OAM accelerator card.

- Proc 16 nm process

- Mem 32 GiB, HBM2-2400

- TDP 300-400 W (150-250 W typical power)

- Perf 108 TOPS (bfloat16)
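The shift to bfloat16 noted above can be illustrated in software. The following is a minimal sketch, not Intel's implementation: it relies only on the general bfloat16 layout (1 sign bit, 8 exponent bits, 7 mantissa bits, which is the upper 16 bits of an IEEE 754 float32). It uses plain truncation for simplicity, whereas hardware typically applies a rounding mode. All function names are hypothetical.

```python
import struct


def float32_to_bfloat16_bits(x: float) -> int:
    """Return the 16-bit bfloat16 pattern for x by truncating its float32 encoding."""
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    # Keep the sign bit, the 8 exponent bits, and the top 7 mantissa bits.
    return bits32 >> 16


def bfloat16_bits_to_float(bits16: int) -> float:
    """Expand a bfloat16 bit pattern back into a Python float via float32."""
    (value,) = struct.unpack(">f", struct.pack(">I", (bits16 & 0xFFFF) << 16))
    return value


def round_trip(x: float) -> float:
    """Convert x to bfloat16 (truncating) and back, exposing the precision loss."""
    return bfloat16_bits_to_float(float32_to_bfloat16_bits(x))


if __name__ == "__main__":
    for sample in (1.0, -2.0, 3.14159):
        print(sample, hex(float32_to_bfloat16_bits(sample)), round_trip(sample))
```

Because bfloat16 keeps the full 8-bit exponent of float32, the dynamic range is preserved and only mantissa precision is reduced, which is why values such as 1.0 and -2.0 survive the round trip exactly while 3.14159 does not.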

List of NNP-T 1000-based Processors

| Model | Launched | TDP | EUs | Frequency | HBM2 | Peak Perf (bfloat16) |
|---|---|---|---|---|---|---|
| NNP-T 1300 | 12 November 2019 | 300 W | 22 | 950 MHz | 32 GiB | 93.39 TFLOPS |
| NNP-T 1400 | 12 November 2019 | 375 W | 24 | 1,100 MHz | 32 GiB | 108 TFLOPS |

Count: 2

POD Reference Design

Along with the launch of the NNP-T 1000 series, Intel also introduced the POD reference design. The design targets large scale-out systems for processing very large neural networks. The POD reference design features 10 racks with 6 nodes per rack. Each node features eight interconnected OAM cards, for a total of 480 NNP-Ts per system.
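The POD totals follow directly from the rack, node, and card counts. The sketch below reproduces that arithmetic and, as an illustration only, extends it to aggregate HBM2 capacity and peak bfloat16 throughput. It assumes every OAM card is an NNP-T 1400 (32 GiB, 108 TFLOPS peak) and that peak throughput adds up linearly, which ignores any scaling losses. The function name `pod_totals` is hypothetical.

```python
def pod_totals(racks=10, nodes_per_rack=6, cards_per_node=8,
               hbm2_gib_per_card=32, peak_tflops_per_card=108.0):
    """Aggregate card count, HBM2 capacity, and ideal peak throughput for a POD."""
    cards = racks * nodes_per_rack * cards_per_node
    return {
        "cards": cards,
        # Assumption: every card carries the same 32 GiB of HBM2.
        "hbm2_tib": cards * hbm2_gib_per_card / 1024,
        # Assumption: ideal linear scaling of per-card peak throughput.
        "peak_pflops": cards * peak_tflops_per_card / 1000,
    }


if __name__ == "__main__":
    print(pod_totals())
```

Under these assumptions a full POD holds 480 cards with 15 TiB of HBM2 in total. The aggregate peak figure is an upper bound, not a measured result.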

Inference (NNP-I)

I-1000 Series (Spring Hill)

- Main article: Spring Hill µarch

The NNP I-1000 series is Intel's first series of devices designed specifically for the acceleration of inference workloads. Fabricated on Intel's 10 nm process, these chips are based on Spring Hill and incorporate a Sunny Cove core along with twelve specialized inference acceleration engines. The overall SoC design borrows a considerable amount of IP from Ice Lake. Those devices come in M.2 and PCIe form factors.

- Proc 10 nm process

- Mem 4x32b LPDDR4x-4200

- TDP 10-50 W

- Eff 2.0-4.8 TOPS/W

- Perf 48-92 TOPS (Int8)

List of NNP-I-1000-based Processors

| Model | Launched | TDP | EUs | Peak Perf (Int8) |
|---|---|---|---|---|
| NNP-I 1100 | 12 November 2019 | 12 W | 12 | 50 TOPS |
| NNP-I 1300 | 12 November 2019 | 75 W | 24 | 170 TOPS |

Count: 2
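The efficiency figure quoted for the series can be cross-checked against the two listed models by dividing peak Int8 throughput by TDP. This is a rough sketch using only the table values. Dividing peak performance by TDP is a simplification, since sustained efficiency depends on the workload. The names `MODELS` and `tops_per_watt` are hypothetical.

```python
# (peak Int8 TOPS, TDP in watts), taken from the model list above.
MODELS = {
    "NNP-I 1100": (50.0, 12.0),
    "NNP-I 1300": (170.0, 75.0),
}


def tops_per_watt(peak_tops: float, tdp_watts: float) -> float:
    """Peak throughput divided by TDP, as a rough efficiency estimate."""
    return peak_tops / tdp_watts


efficiency = {name: tops_per_watt(*spec) for name, spec in MODELS.items()}

if __name__ == "__main__":
    for name, value in efficiency.items():
        print(f"{name}: {value:.2f} TOPS/W")
```

Both results (roughly 4.17 and 2.27 TOPS/W) fall inside the 2.0-4.8 TOPS/W range given for the series.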

Intel also announced the NNP-I in an EDSFF (ruler) form factor designed to provide the highest possible compute density for inference. Intel did not announce specific models. The rulers were planned to come with a TDP range of 10-35 W. 32 NNP-Is in the ruler form factor can be packed into a single 1U rack.

See also

- neural processor

- DL Boost

- HL Series

| designer | Intel |

| first announced | May 23, 2018 |

| first launched | 2019 |

| full page name | nervana/nnp |

| instance of | integrated circuit family |

| main designer | Intel |

| manufacturer | Intel and TSMC |

| name | NNP |

| package | PCIe x16 Gen 3 Card, OCP OAM and M.2 |

| process | 28 nm (0.028 μm, 2.8e-5 mm), 16 nm (0.016 μm, 1.6e-5 mm) and 10 nm (0.01 μm, 1.0e-5 mm) |

| technology | CMOS |