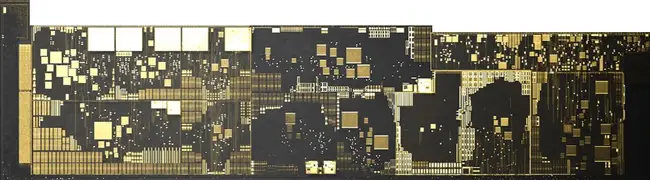


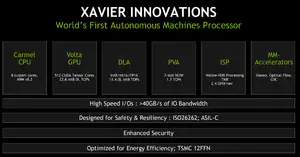

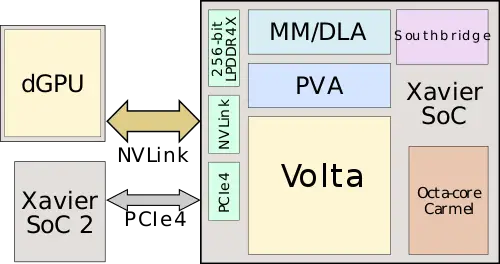

Revision as of 21:55, 2 September 2018

| Xavier | |
|---|---|
| General Info | |
| Designer | Nvidia |
| Manufacturer | TSMC |
| Model Number | Tegra194 |
| Market | Artificial Intelligence, Embedded |
| Introduction | January 8, 2018 (announced); June 2018 (launched) |
| General Specs | |
| Family | Tegra |
| Microarchitecture | |
| ISA | ARMv8 (ARM) |
| Microarchitecture | Carmel, Volta |
| Process | 12 nm |
| Transistors | 9,000,000,000 |
| Technology | CMOS |
| Die | 350 mm² |
| Word Size | 64 bit |
| Cores | 8 |
| Threads | 8 |
| Electrical | |
| TDP | 30 W |
| TDP (Typical) | 20 W |

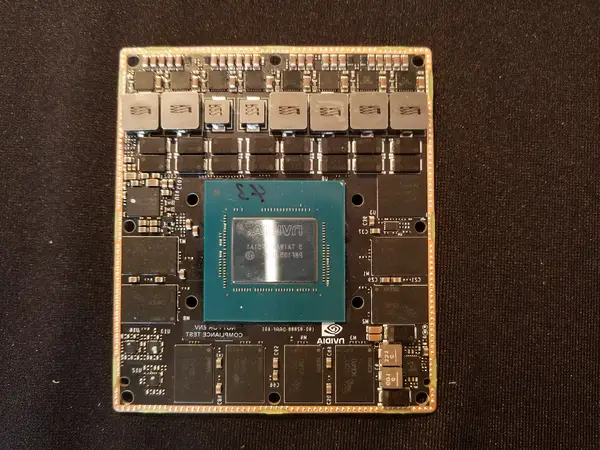

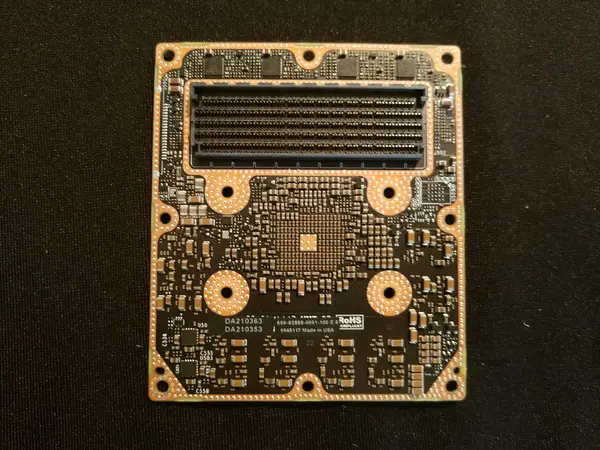

Tegra Xavier is a 64-bit ARM high-performance system on a chip for autonomous machines designed by Nvidia and introduced in 2018. Xavier is incorporated into a number of Nvidia's computers including the Jetson Xavier, Drive Xavier, and the Drive Pegasus.


Overview

Xavier is an autonomous machine processor designed by Nvidia and introduced at CES 2018. Silicon came back in the last week of December 2017, with sampling starting in the first quarter of 2018. Nvidia plans on mass production by the end of 2018. Nvidia reported that the product is the result of $2 billion in R&D and 8,000 engineering years. The chip is said to have full redundancy and diversity in its functional blocks.

Work on the design targets and architecture started back in 2014. Fabricated on TSMC's 12 nm process, the chip comprises an eight-core CPU cluster, a GPU with additional inference optimizations, a deep learning accelerator, a vision accelerator, and a set of multimedia accelerators providing additional support for machine learning (stereo, LDC, optical flow). The ISP has been enhanced to provide native HDR support and higher-precision math without offloading work to the GPU. Xavier features a large set of I/O and has been designed for safety and reliability, supporting various standards such as functional safety ISO-26262 and ASIL level C. The CPU cluster is fully cache coherent, and the coherency is extended to all the other accelerators on-chip.

Architecture

CPU

- Main article: Carmel core

The chip features eight control/management Carmel cores, Nvidia's own custom 64-bit ARM cores. These cores implement ARMv8.2 with RAS support and built-in safety features, including a dual-execution mode. All cores are fully cache coherent, and that coherency is extended to the GPU and all the other accelerators in the chip.

GPU

- Main article: Volta

Xavier implements a derivative of Nvidia's Volta GPU with a set of finer changes to address the machine learning market. It has eight Volta streaming multiprocessors along with their standard 128 KiB of L1 cache and 512 KiB of shared L2. Compared to Parker, Nvidia claims this GPU has 2.1x the graphics performance. Whereas Nvidia's desktop parts (e.g., GV100) are very powerful GPUs used for training, the GPU here is optimized for inference. The most obvious change is added int8 support in the CUDA tensor cores for lower-precision work; int8 operations run at the full 2x throughput of FP16 operations. There are also 512 CUDA tensor cores, a number that's comparable to Nvidia's top-end models for machine learning (e.g., the GV100 has 672). All of this yields 22.6 tera-operations (int8) per second.
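The relationship between the quoted int8 figure and the FP16 rate can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming the 22.6 TOPS figure is purely int8 tensor throughput and that it is spread evenly across the eight streaming multiprocessors; the variable names are illustrative and do not come from any Nvidia API.

```python
# Figures quoted in the text above.
INT8_TOPS = 22.6          # peak int8 tera-operations per second
SM_COUNT = 8              # Volta streaming multiprocessors
INT8_TO_FP16_RATIO = 2    # int8 runs at 2x the FP16 throughput

# Assumption: the whole 22.6 TOPS figure is int8 tensor throughput,
# so the implied FP16 rate is simply half of it.
fp16_tops = INT8_TOPS / INT8_TO_FP16_RATIO

# Assumption: throughput is divided evenly across the SMs.
int8_tops_per_sm = INT8_TOPS / SM_COUNT

print(f"Implied FP16 rate: {fp16_tops:.1f} TOPS")
print(f"int8 rate per SM:  {int8_tops_per_sm:.3f} TOPS")
```

Under those assumptions the implied FP16 rate is about 11.3 tera-operations per second, or roughly 2.8 int8 TOPS per streaming multiprocessor.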

Accelerators

Xavier incorporates a set of accelerators designed to augment the functionality offered by the GPU and CPU. They provide added flexibility and may allow some of the more common algorithms to be implemented somewhat more efficiently.

Programmable Vision Accelerator

Xavier incorporates a Programmable Vision Accelerator (PVA) for computer vision processing. There are two identical instances of the PVA on-chip. They can be used in lock-step or independently, and are capable of implementing some of the common filter loops and other detection algorithms (e.g., Harris corner). Each PVA has two dedicated vector processing units, each with its own memory and DMA. Each vector processing unit is a 7-slot VLIW architecture made up of 2 scalar slots, 2 vector slots, and 3 memory operations. The pipe is 256 bits wide and all types can operate at full throughput (32x8b, 16x16b, and 8x32b vector math). Additionally, since the chip is expected to be connected to a network of cameras (e.g., side, front, inside), it is capable of real-time encoding for all cameras in high dynamic range.
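The lane counts quoted for the vector pipe follow directly from dividing the 256-bit width by the element size, and the 2 scalar, 2 vector, and 3 memory slots add up to seven issue slots. The sketch below only reproduces that arithmetic; the function and variable names are hypothetical and are not part of any PVA programming interface.

```python
PIPE_WIDTH_BITS = 256  # vector pipe width quoted in the text


def lanes(element_bits: int) -> int:
    """Number of elements of the given width that fit in one vector operation."""
    if PIPE_WIDTH_BITS % element_bits != 0:
        raise ValueError("element width must divide the pipe width evenly")
    return PIPE_WIDTH_BITS // element_bits


# Lane counts for the three element widths listed in the text.
lane_counts = {bits: lanes(bits) for bits in (8, 16, 32)}

# Issue slots per VLIW instruction, as listed in the text.
vliw_slots = {"scalar": 2, "vector": 2, "memory": 3}
total_slots = sum(vliw_slots.values())

for bits, count in lane_counts.items():
    print(f"{count} x {bits}-bit lanes")
print(f"{total_slots} VLIW slots in total")
```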

Video processing throughput:

| Encode | Decode |
|---|---|
| 1.2 GPIX/s | 1.8 GPIX/s |

The chip has an ISP with native full-range HDR support and tile rendering capable of processing at 1.5 GPIX/s.
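To put the pixel rates in perspective, they can be converted into a rough count of simultaneous camera streams. This is a minimal sketch under stated assumptions: 1 GPIX/s is taken as 10^9 pixels per second, and every camera is a hypothetical 1920x1080 sensor at 30 frames per second. Real camera configurations and per-stream overheads are not described in the source.

```python
# Throughput figures quoted in the text, in pixels per second
# (assuming 1 GPIX/s = 10**9 pixels per second).
ENCODE_PIX_PER_S = 1_200_000_000
DECODE_PIX_PER_S = 1_800_000_000
ISP_PIX_PER_S = 1_500_000_000


def max_streams(throughput_pix_per_s: int, width: int, height: int, fps: int) -> int:
    """Whole number of streams of the given format that fit in the throughput."""
    pixels_per_stream = width * height * fps
    return throughput_pix_per_s // pixels_per_stream


# Hypothetical camera format, chosen only for illustration.
WIDTH, HEIGHT, FPS = 1920, 1080, 30

encode_streams = max_streams(ENCODE_PIX_PER_S, WIDTH, HEIGHT, FPS)
decode_streams = max_streams(DECODE_PIX_PER_S, WIDTH, HEIGHT, FPS)
isp_streams = max_streams(ISP_PIX_PER_S, WIDTH, HEIGHT, FPS)

print(encode_streams, decode_streams, isp_streams)
```

With these assumed 1080p30 cameras, the encode path is the tightest of the three limits.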

Deep Learning Accelerator

The chip incorporates a deep learning accelerator (DLA) that implements a specific set of deep learning functions common to many applications. Fixing these functions in hardware allows the DLA to reach the highest possible energy efficiency for those operations. The DLA has a peak performance of 5 TOPS for 16-bit integers or 10 TOPS for 8-bit integers.

Memory controller

| Integrated Memory Controller | |
|---|---|
| Max Type | LPDDR4X-4266 |
| Supports ECC | Yes |
| Max Channels | 8 |
| Max Bandwidth | 127.1 GiB/s |
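The 127.1 GiB/s maximum memory bandwidth listed in the facts at the end of this page is consistent with LPDDR4X-4266 on eight channels if each channel is 32 bits wide. The channel width is an assumption made here to match the listed figure; it is not stated on this page. A minimal sketch of the calculation:

```python
TRANSFERS_PER_S = 4266 * 10**6   # LPDDR4X-4266: 4266 mega-transfers per second
CHANNELS = 8                     # max memory channels listed for Xavier
CHANNEL_WIDTH_BITS = 32          # assumption: not stated on this page


def peak_bandwidth_bytes_per_s(transfers_per_s: int, channels: int, width_bits: int) -> int:
    """Theoretical peak bandwidth: transfers/s times total bus width in bytes."""
    return transfers_per_s * channels * width_bits // 8


bandwidth = peak_bandwidth_bytes_per_s(TRANSFERS_PER_S, CHANNELS, CHANNEL_WIDTH_BITS)
bandwidth_gib = bandwidth / 2**30   # binary gigabytes per second
bandwidth_gb = bandwidth / 10**9    # decimal gigabytes per second

print(f"{bandwidth_gib:.1f} GiB/s ({bandwidth_gb:.1f} GB/s)")
```

This is a theoretical peak; sustained bandwidth in practice would be lower.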

I/O

- 16 CSI channels

- 109 Gbps total bandwidth

- 1x gE interface

- 1x 10gE interface

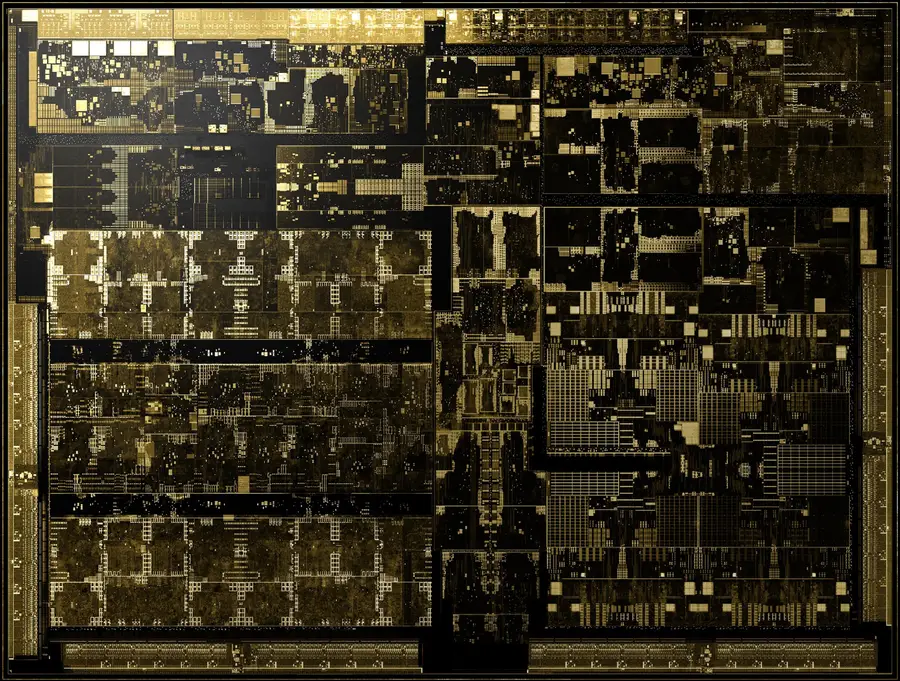

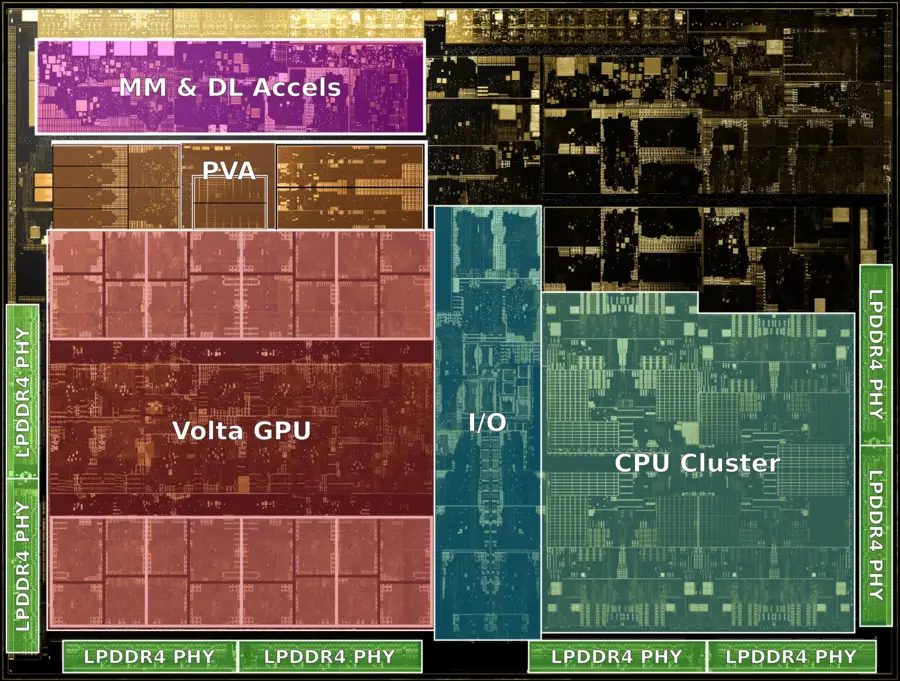

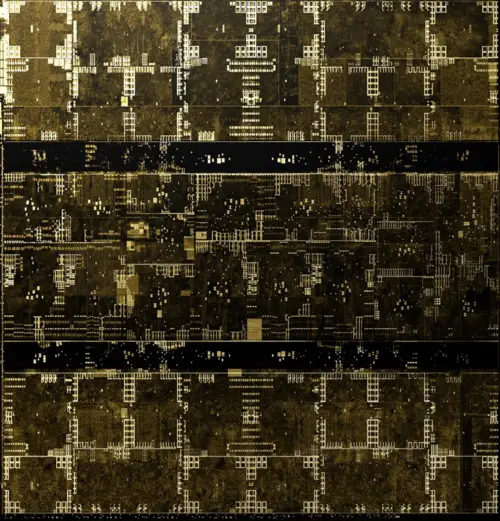

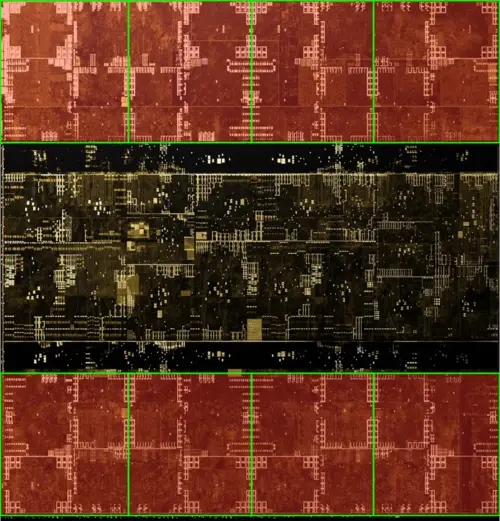

Die

SoC

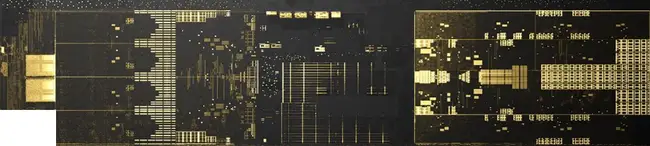

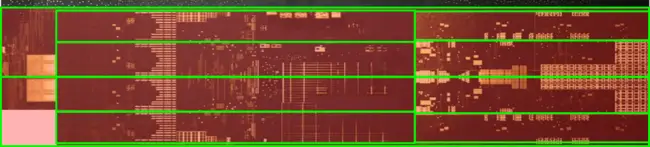

GPU

- ~89.2 mm² silicon area

CPU

See Carmel § CPU Complex.

PVA

MM Engine / DLA

- ~21.75 mm² silicon area

Boards

Documents

Bibliography

- IEEE Hot Chips 30 Symposium (HCS) 2018.

| Property | Value |
|---|---|
| core count | 8 |
| designer | Nvidia |
| die area | 350 mm² (0.543 in², 3.5 cm², 350,000,000 µm²) |
| family | Tegra |
| first announced | January 8, 2018 |
| first launched | June 2018 |
| full page name | nvidia/tegra/xavier |
| has ecc memory support | true |
| instance of | microprocessor |
| isa | ARMv8 |
| isa family | ARM |
| ldate | June 2018 |
| manufacturer | TSMC |
| market segment | Artificial Intelligence and Embedded |
| max memory bandwidth | 127.1 GiB/s (130,150.4 MiB/s, 136.473 GB/s, 136,472.586 MB/s, 0.124 TiB/s, 0.136 TB/s) |
| max memory channels | 8 |
| microarchitecture | Carmel and Volta |
| model number | Tegra194 |
| name | Xavier |
| process | 12 nm (0.012 μm, 1.2e-5 mm) |
| supported memory type | LPDDR4X-4266 |
| tdp | 30 W (30,000 mW, 0.0402 hp, 0.03 kW) |
| tdp (typical) | 20 W (20,000 mW, 0.0268 hp, 0.02 kW) |
| technology | CMOS |
| thread count | 8 |
| transistor count | 9,000,000,000 |
| word size | 64 bit (8 octets, 16 nibbles) |