Revision as of 20:06, 9 April 2016

Silvermont µarch

Silvermont is Intel's 22 nm microarchitecture for the Atom family of systems on chips. Introduced in 2013, Silvermont was the successor to Saltwell, targeting smartphones, tablets, embedded devices, and consumer electronics.

Codenames

| Platform | Core | Target |

|---|---|---|

| Merrifield | Tangier | Smartphones |

| Bay Trail | Valleyview | Tablets |

| Edisonville | Avoton | Microservers |

| Edisonville | Rangeley | Embedded Networking |

Architecture

Silvermont introduced a number of significant changes from the previous Atom microarchitecture, in addition to increased performance and lower power consumption.

Key changes from Saltwell

- Pipeline is now out-of-order (OoOE)

- 14-stage pipeline (2 stages shorter)

- 10-cycle branch misprediction penalty (3 cycles shorter)

- Instruction set support up to Westmere

- Multi-core modular system (up to 8 cores)

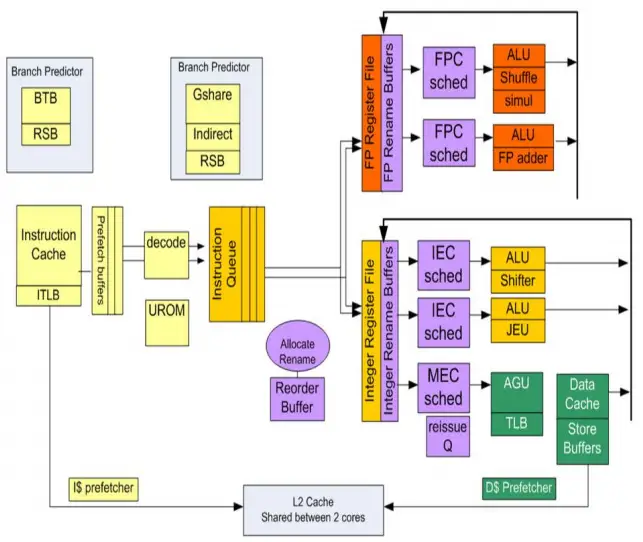

Block Diagram

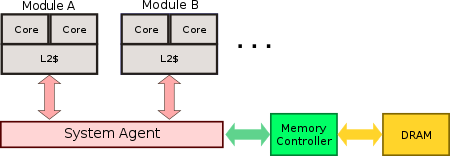

Core Modules

Silvermont employs a modular core design. Each module consists of 2 cores and 2 threads (one thread per core) with exclusive hardware; core resources are not shared. Within each module is a 1 MB L2 cache shared between the two cores. The L1 is still identical to Saltwell's: 32K L1I$ and 24K L1D$. Each module has a dedicated point-to-point interface (IDI) to the system agent. Each module also has per-core frequency and power management support. This is a departure from previous Atom microarchitectures, as well as from comparable desktop microarchitectures (e.g. Core), where all cores are tied to the same frequency.

System Agent

The system agent (Silvermont System Agent) acts very much like a north bridge; however, it performs considerably better than in previous Atom microarchitectures because it is capable of reordering all requests from all consumers (e.g. core, GPU).

IDI

While the previous Atom architecture did away with the discrete memory controller by integrating it and other support chips on-die, it still used a Front Side Bus implementation to talk to the north bridge. In Silvermont, this was replaced with a lightweight in-die interconnect (IDI), the same one used in the Core processors. The use of IDI should have a noticeable impact on per-thread performance.

Memory Hierarchy

- Cache

  - Hardware prefetchers

  - L1 Cache:

    - 32 KB 8-way set associative instruction, 64 B line size

    - 24 KB 6-way set associative data, 64 B line size

    - Per core

  - L2 Cache:

    - 1 MB 16-way set associative, 64 B line size

    - Per 2 cores

  - L3 Cache:

    - No level 3 cache

- RAM

  - Maximum of 1 GB, 2 GB, or 4 GB

  - Dual 32-bit channels, 1 or 2 ranks per channel
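The L1 figures listed above determine how many sets each cache has and how an address splits into offset and index bits. The following is a minimal sketch of that arithmetic; the `CacheGeometry` class and its plain modulo indexing are illustrative assumptions, not a description of how Silvermont actually indexes its caches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheGeometry:
    """Hypothetical helper for set-associative cache arithmetic."""
    size_bytes: int   # total capacity
    ways: int         # associativity
    line_bytes: int   # cache line size

    @property
    def sets(self) -> int:
        # capacity = sets * ways * line size
        return self.size_bytes // (self.ways * self.line_bytes)

    @property
    def offset_bits(self) -> int:
        # bits selecting a byte within a line (line size is a power of two)
        return self.line_bytes.bit_length() - 1

    @property
    def index_bits(self) -> int:
        # bits selecting a set (assumes the set count is a power of two)
        return self.sets.bit_length() - 1

    def set_index(self, address: int) -> int:
        # assumption: simple modulo indexing; real hardware may differ
        return (address >> self.offset_bits) % self.sets


KB = 1024
l1i = CacheGeometry(32 * KB, ways=8, line_bytes=64)   # L1 instruction cache
l1d = CacheGeometry(24 * KB, ways=6, line_bytes=64)   # L1 data cache

for name, cache in (("L1I", l1i), ("L1D", l1d)):
    print(name, cache.sets, "sets,", cache.index_bits, "index bits,",
          cache.offset_bits, "offset bits")
```

Both L1 caches work out to 64 sets, which is why the smaller 24 KB data cache uses 6 ways rather than 8: the set count stays the same and only the associativity changes.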

Pipeline

While Silvermont shares some similarities with Saltwell, it introduces a number of significant changes that set it apart from past Atom microarchitectures. Like Saltwell, the pipeline still uses a dual-issue design; however, it is 2 stages shorter and has a branch misprediction penalty that is 3 cycles lower. Silvermont is the first Atom microarchitecture to introduce out-of-order execution (OoOE).

The Silvermont pipeline decodes and issues 2 instructions per cycle and dispatches 5 operations per cycle.
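To get a feel for what a 3-cycle lower misprediction penalty means, the sketch below compares the 13-cycle Saltwell penalty with the 10-cycle Silvermont penalty quoted in this article. The workload figures (branch fraction and misprediction rate) are hypothetical and chosen only for illustration, and the model ignores any difference in prediction accuracy between the two designs.

```python
def mispredict_cycles(instructions: int, branch_fraction: float,
                      mispredict_rate: float, penalty_cycles: int) -> float:
    """Cycles lost to branch mispredictions over a run of instructions."""
    mispredicted = instructions * branch_fraction * mispredict_rate
    return mispredicted * penalty_cycles


# Hypothetical workload: 20% of instructions are branches, 5% of those mispredict.
WORKLOAD = dict(instructions=1_000_000, branch_fraction=0.20, mispredict_rate=0.05)

saltwell = mispredict_cycles(**WORKLOAD, penalty_cycles=13)
silvermont = mispredict_cycles(**WORKLOAD, penalty_cycles=10)

print(f"Saltwell:   {saltwell:,.0f} cycles lost")
print(f"Silvermont: {silvermont:,.0f} cycles lost")
print(f"Saved:      {saltwell - silvermont:,.0f} cycles "
      f"({1 - silvermont / saltwell:.0%})")
```

Under these assumptions the saving is proportional to the penalty reduction alone (3 of 13 cycles), independent of the workload numbers chosen.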

Instruction Fetch

Instruction fetch, just like in previous microarchitectures, makes up the first three stages of the pipeline. However, with the introduction of out-of-order execution, Silvermont's more aggressive fetching and branch prediction mean that stalled instructions do not clog the entire pipeline as they did in Saltwell.

Instruction Decode

In previous generations of Atom microarchitectures, common software code had roughly 5% of instructions split up into micro-ops. In Silvermont this is reduced to just 1-2%. This reduction translates directly into performance because it eliminates the 3-4 additional cycles of overhead those instructions incur. Silvermont has a second branch predictor that can make more accurate predictions based on previously unknown information (e.g. target address from memory or register) and override the generic predictor. Nevertheless, the branch misprediction penalty was also reduced by 3 cycles (down to 10 cycles from 13 in Saltwell).
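The effect of the lower split rate can be estimated with simple arithmetic. The sketch below assumes, as a simplification, that every split instruction pays the full 3-4 cycle overhead and that none of it is hidden by other work; the function name is hypothetical.

```python
def split_overhead_per_instruction(split_fraction: float, extra_cycles: int) -> float:
    """Average extra cycles per instruction caused by micro-op splitting."""
    return split_fraction * extra_cycles


# (label, lowest split fraction, highest split fraction) from the text above
cases = [("previous generations", 0.05, 0.05), ("Silvermont", 0.01, 0.02)]

for label, low, high in cases:
    best = split_overhead_per_instruction(low, 3)    # 3-cycle overhead
    worst = split_overhead_per_instruction(high, 4)  # 4-cycle overhead
    print(f"{label}: {best:.2f} to {worst:.2f} extra cycles per instruction")
```

For a dual-issue core whose ideal cost is half a cycle per instruction, a reduction of this size in average overhead is significant.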

Branch Prediction

Silvermont has two branch predictors: one that controls instruction fetching and a second one that can override the first during the decode stage after gathering additional information. The second predictor controls speculative instruction issuing. For the first predictor, Silvermont uses a Branch Target Buffer to determine the next fetch address; it also includes a 4-entry Return Stack Buffer for handling calls and returns.
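A return stack buffer can be thought of as a small fixed-depth stack of predicted return addresses. The toy model below illustrates the idea for a 4-entry buffer. The overflow policy (dropping the oldest entry) and the class and method names are assumptions made for illustration; the source does not describe how Silvermont handles overflow.

```python
from collections import deque
from typing import Optional


class ReturnStackBuffer:
    """Toy model of a fixed-depth return address predictor."""

    def __init__(self, entries: int = 4):
        # assumption: on overflow the oldest entry is dropped (deque maxlen)
        self._stack = deque(maxlen=entries)

    def on_call(self, return_address: int) -> None:
        # a call pushes the address of the instruction following it
        self._stack.append(return_address)

    def on_return(self) -> Optional[int]:
        # a return pops the most recent entry; None means no prediction
        return self._stack.pop() if self._stack else None


rsb = ReturnStackBuffer(entries=4)
for depth in range(5):                  # five nested calls, one more than fits
    rsb.on_call(0x1000 + depth * 0x10)

predictions = [rsb.on_return() for _ in range(5)]
print([hex(p) if p is not None else None for p in predictions])
```

In this model the four innermost returns are predicted correctly, while the outermost return finds the buffer empty because its entry was overwritten by the fifth call.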

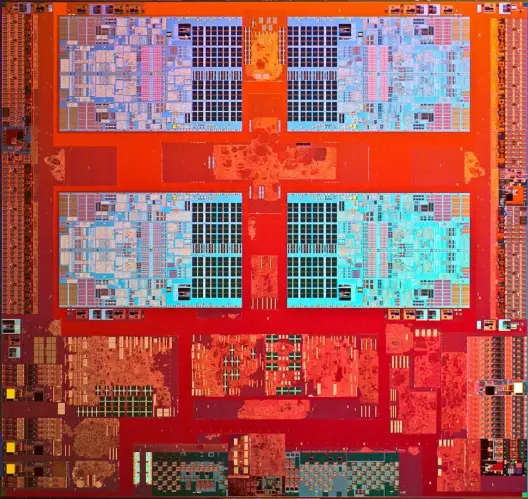

Die

8-core Avoton Die:

Cores

- Tangier - SoCs for Smartphones

- Valleyview - SoCs for Tablets

- Avoton - SoCs for Microservers

- Rangeley - SoCs for Embedded Networking