(update hotchip link) |

|||

| (35 intermediate revisions by 12 users not shown) | |||

| Line 1: | Line 1: | ||

| − | {{nvidia title|NVLink}} | + | {{nvidia title|NVLink}}{{interconnect arch}} |

'''NVLink''' is a proprietary system [[interconnect architecture]] that facilitates [[memory coherence|coherent]] data and control transmission accross multiple [[Nvidia]] [[GPU]]s and supporting [[CPU]]s. | '''NVLink''' is a proprietary system [[interconnect architecture]] that facilitates [[memory coherence|coherent]] data and control transmission accross multiple [[Nvidia]] [[GPU]]s and supporting [[CPU]]s. | ||

== Overview == | == Overview == | ||

| − | Announced in early 2014, NVLink was designed as an alternative solution to [[PCI Express]] with higher bandwidth and additional features specifically designed for multi-GPU systems. | + | Announced in early 2014, NVLink was designed as an alternative solution to [[PCI Express]] with higher bandwidth and additional features (e.g., shared memory) specifically designed to be compatible with Nvidia's own GPU ISA for multi-GPU systems. Prior to the introduction of NVLink with {{nvidia|Pascal|l=arch}} (e.g., {{nvidia|Kepler|l=arch}}), multiple Nvidia's GPUs would sit on a shared [[PCIe]] bus. Although direct GPU-GPU transfers and accesses were already possible using Nvidia's {{nvidia|Unified Virtual Addressing}} over the [[PCIe]] bus, as the size of data sets continued to grow, the bus became a growing system [[bottleneck]]. Throughput could further improve through the use of a [[PCIe switch]]. |

| + | |||

| + | |||

| + | :[[File:nvidia gpu pcie bus.svg|350px]] | ||

| + | |||

| + | |||

| + | NVLink is designed to replace the inter-GPU-GPU communication from going over the PCIe lanes. It's worth noting that NVLink was also designed for CPU-GPU communication with higher bandwidth than PCIe. Although it's unlikely that NVLink would be implemented on an x86 system by either [[AMD]] or [[Intel]], [[IBM]] has collaborated with Nvidia to support NVLink on their [[POWER]] microprocessors. For supported microprocessors, the NVLink can eliminate PCIe entirely for all links. | ||

| + | |||

| + | |||

| + | :[[File:nvidia gpu nvlink overview.svg|550px]] | ||

| + | |||

| + | === Links === | ||

| + | An NVLink channel is called a '''Brick''' (or an ''NVLink Brick''). A single NVLink is a bidirectional interface which comprises 8 differential pairs in each direction for a total of 32 wires. The pairs are DC coupled an use an 85Ω differential termination with an embedded clock. To ease routing, NVLink supports [[lane reversal]] and [[lane polarity]], meaning the physical lane ordering and their polarity between the two devices may be reversed. | ||

| + | |||

| + | |||

| + | :[[File:nvlink link.svg|class=wikichip_ogimage|800px]] | ||

| + | |||

| + | |||

| + | ==== Packet ==== | ||

| + | A single NVLink [[packet]] ranges from a one to eighteen [[flits]]. Each flit is 128-bit, allowing for the transfer of 256 bytes using a single header flit and 16 payload flits for a peak efficiency of 94.12% and 64 bytes using a single header flit and 4 data payload flits for an efficiency of 80% unidirectional. In bidirectional traffic, this is slightly reduced to 88.9% and 66.7% respectively. | ||

| + | |||

| + | A packet comprises of at least a header, and optionally, an address extension (AE) flit, a byte enable (BE) flit, and up to 16 data payload flits. A typical transaction has at least request and response with posted operations not necessitating a response. | ||

| + | |||

| + | :[[File:nvlink packet.svg|600px]] | ||

| + | ==== Header flit ==== | ||

| + | The header flit is 128-bit. It comprises a 25-bit CRC field (discussed below), 83-bit transaction field, and a 20-bit data link (DL) layer field. The transaction field includes the request type, address, flow control bits, and tag identifier. The data link field includes things such as the packet length, application number tag, and acknowledge identifier. | ||

| + | |||

| + | The address extension (AE) flit is reserved for fairly static bits and is usually transmitted only bits change. | ||

| + | ==== Error Correction ==== | ||

| + | Nvidia specifies the error rate at 1 in 1×10<sup>12</sup>. Error detection is done through the 25-bit [[cyclic redundancy check]] header field. The receiver is responsible for keeping the data in a [[replay buffer]]. Transmitted packets are sequenced and a positive acknowledgment is sent back to the source upon a good CRC. A missing acknowledgment following a timeout will initiate a reply sequence, retransmitting all subsequent packets. | ||

| + | |||

| + | The CRC field consists of 25 bits, allowing up to 5 random bits in error for the largest packet or alternatively, for differential pair bursts it can support up to 25 sequential bit errors. CRC is actually calculated over the header and the previous payload, eliminating the need for a separate CRC field for the data payload. Note that since the header also incorporates the packet length, it is also gets included in the CRC check. | ||

| + | |||

| + | For example, consider a sequence of two data payload (32 bytes) flits and their accompanying header. The next packet will have the CRC done over the current header as well as the two data payload from the prior transaction. If this is the first transaction, the CRC assumes the prior transaction was a [[NULL]] transaction. | ||

| + | |||

| + | === Data Rates === | ||

| + | <table class="wikitable"> | ||

| + | <tr><th> </th><th>[[#NVLink 1.0|NVLink 1.0]]</th><th>[[#NVLink 2.0|NVLink 2.0]]</th><th> [[#NVLink 3.0|NVlink 3.0]]</th><th> [[#NVLink 4.0|NVlink 4.0]]</th></tr> | ||

| + | <tr><th>Signaling Rate</th><td>20 GT/s</td><td>25 GT/s</td><td>50 GT/s</td><td>100 GT/s</td></tr> | ||

| + | <tr><th>Lanes/Link</th><td>8</td><td>8</td><td>4</td><td>2</td></tr> | ||

| + | <tr><th>Rate/Link</th><td>20 GB/s</td><td>25 GB/s</td><td>25 GB/s</td><td>25 GB/s</td></tr> | ||

| + | <tr><th>BiDir BW/Link</th><td>40 GB/s</td><td>50 GB/s</td><td> 50 GB/s</td><td> 50 GB/s</td></tr> | ||

| + | <tr><th>Links/Chip</th><td>4 (P100)</td><td>6 (V100)</td><td> 12 (A100)</td><td> 18 (H100)</td></tr> | ||

| + | <tr><th>BiDir BW/Chip</th><td>160 GB/s (P100)</td><td>300 GB/s (V100)</td><td>600 GB/s (A100)</td><td>900 GB/s (H100)</td></tr> | ||

| + | </table> | ||

== NVLink 1.0 == | == NVLink 1.0 == | ||

| − | NVLink 1.0 was first introduced with the {{nvidia| | + | NVLink 1.0 was first introduced with the {{nvidia|P100}} [[GPGPU]] based on the {{nvidia|Pascal|l=arch}} microarchitecture. {{nvidia|P100}} comes with its own [[HBM]] memory in addition to being able to access system memory from the CPU side. The P100 has four NVLinks, which supports up to 20 GB/s for a bidrectional bandwidth of 40 GB/s for a total aggregated bandwidth of 160 GB/s. In the most basic configuration, all four links are connected between the two GPUs for 160 GB/s GPU-GPU bandwidth in addition to the PCIe lanes connected to the CPU for accessing system [[DRAM]]. |

| − | + | ||

| + | |||

| + | :[[File:nvlink p100.svg|400px]] | ||

| + | |||

| + | |||

| + | The first CPU to support NVLink natively was the [[IBM]] {{ibm|POWER8+|l=arch}} which allowed the NVLink interconnect to extend to the CPU, replacing the slower PCIe link. Since the P100 only has four NVLinks, a single link from each GPU can be used to link the CPU to the GPU. A typical full configuration node consists of four P100 GPUs and two Power CPUs. The four GPUs are fully connected to each other with the fourth link going to the CPU. | ||

| + | |||

| + | |||

| + | :[[File:nvlink p100 ibm p8+.svg|400px]] | ||

| + | |||

| + | |||

| + | Since [[Intel]]-based CPUs do not support NVLinks (and are unlikely to ever support them), a couple of variations are possible from two to four P100 GPUs. In each configuration, the GPUs are all fully connected to each other with every two GPUs hooked up to a single [[PCIe switch]] which is directly connected to the CPU. Each link is 40 GB/s bidirectional regardless of the configuration and may be aggregated to provide higher bandwidth between any two GPUs the more links are used between them. | ||

| + | |||

| + | |||

| + | :[[File:nvlink p100 intel cpu configs.svg|800px]] | ||

| + | |||

| + | === DGX-1 Configuration === | ||

| + | [[File:nvidia dgx-1 nvlink hybrid cube-mesh.svg|right|300px]] | ||

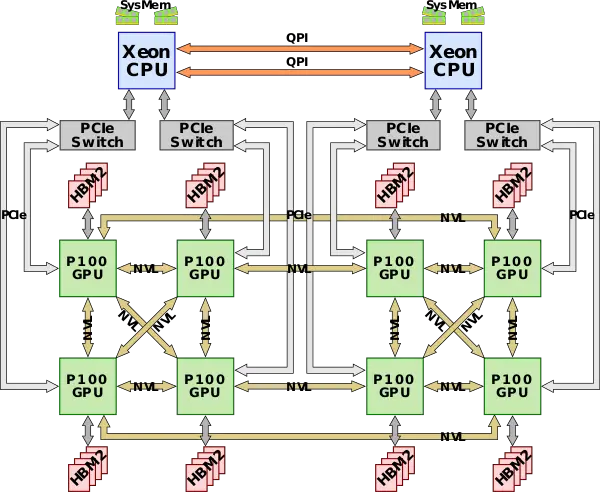

| + | In 2017 Nvidia introduced the DGX-1 system which takes full advantage of NVLink. The DGX-1 consists of eight Tesla P100 GPUs connected in a hybrid cube-mesh NVLink network topology along with dual-socket {{intel|Xeon}} CPUs. The two Xeons communicate with each other over [[Intel]]'s {{intel|QPI}} while the GPUs communicate via the NVLink. | ||

| + | |||

| + | :[[File:nvidia dgx-1 nvlink-gpu-xeon config.svg|600px]] | ||

| + | |||

== NVLink 2.0 == | == NVLink 2.0 == | ||

| − | NVLink 2.0 was first introduced with the {{nvidia|V100}} [[GPGPU]] based on the {{nvidia|Volta|l=arch}} microarchitecture along with [[IBM]]'s {{ibm|POWER9|l=arch}}. | + | NVLink 2.0 was first introduced with the {{nvidia|V100}} [[GPGPU]] based on the {{nvidia|Volta|l=arch}} microarchitecture along with [[IBM]]'s {{ibm|POWER9|l=arch}}. Nvidia added CPU mastering support, allowing both the GPU and CPU to access each others memory (i.e., direct load and stores) in a flat address space. The flat address space is supported through new address translation services. Additionally, there native support for [[atomic operations]] was added for both the CPU and GPU. With the addition of flat address space, NVLink now has [[cache coherence]] support, allowing the CPU to efficiently cache GPU memory, significantly improving latencies and thus performance. NVLink 2.0 has improved signaling rate to 25 Gbps per wire (25 GT/s) for 50 GB/s bidirectional bandwidth. The V100 also increased the number of NVLinks on-die to 6 for a total aggregated bandwidth of 300 GB/s. It's worth noting that additional power saving features were added such as deactivating lanes during idle. |

| + | |||

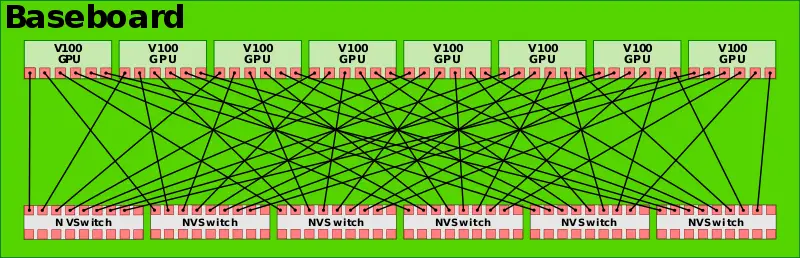

| + | NVLink 2.0 was introduced with the second-generation {{nvidia|DGX-1}}, but the full topology change took place with the {{nvidia|DGX-2}}. Nvidia also introduced the {{nvidia|NVSwitch}} with the DGX-2 which is an 18 NVLink ports switch. The 2-billion transistor switch can route traffic from nine ports to any of the other nine ports. With 50 GB/s per port, the switch is capable of a total of 900 GB/s of bandwidth. | ||

| + | |||

| + | For the DGX-2, Nvidia uses six {{nvidia|NVSwitches}} to fully connect every one of the eight GPUs to all the other seven GPUs on the same baseboard. | ||

| + | |||

| + | |||

| + | :[[File:dgx2 nvswitch baseboard diagram.svg|800px]] | ||

| + | |||

| + | |||

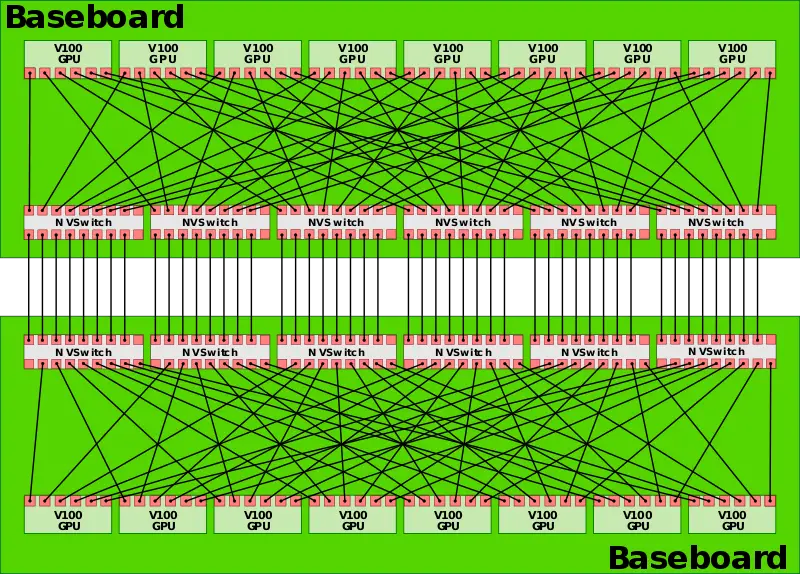

| + | Two baseboards are then connected to each other to fully connect all 16 GPUs to each other. | ||

| + | |||

| + | |||

| + | :[[File:dgx2 nvswitch baseboard diagram with two boards connected.svg|800px]] | ||

| + | |||

| + | == NVLink 3.0 == | ||

| + | NVLink 3.0 was first introduced with the {{nvidia|A100}} [[GPGPU]] based on the {{nvidia|Ampere|l=arch}} microarchitecture. NVLink 3.0 uses 50 Gbps signaling rate | ||

| + | |||

| + | == References == | ||

| + | * IEEE HotChips 34 (HC28), 2022 | ||

| + | * IEEE HotChips 30 (HC28), 2018 | ||

| + | * IEEE HotChips 29 (HC29), 2017 | ||

| + | * IEEE HotChips 28 (HC28), 2016 | ||

| + | |||

| + | |||

| + | |||

| + | [[category:nvidia]] | ||

| + | [[Category:interconnect_architectures]] | ||

Latest revision as of 03:38, 12 April 2024


NVLink is a proprietary system interconnect architecture that facilitates coherent data and control transmission across multiple Nvidia GPUs and supporting CPUs.


Overview

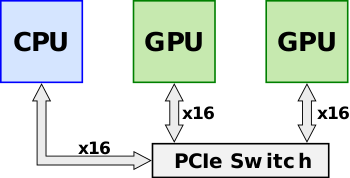

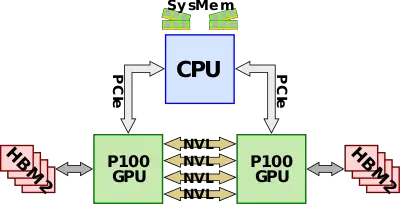

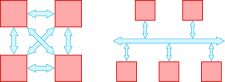

Announced in early 2014, NVLink was designed as an alternative to PCI Express, offering higher bandwidth and additional features (e.g., shared memory) designed specifically to be compatible with Nvidia's own GPU ISA in multi-GPU systems. Before NVLink was introduced with Pascal, multiple Nvidia GPUs (e.g., Kepler-based ones) sat on a shared PCIe bus. Although direct GPU-GPU transfers and accesses were already possible over the PCIe bus using Nvidia's Unified Virtual Addressing, the bus became an increasing system bottleneck as data sets continued to grow. Using a PCIe switch could improve throughput further.

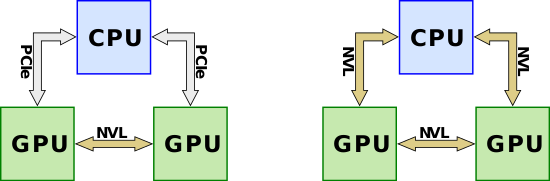

NVLink is designed to take GPU-to-GPU communication off the PCIe lanes. NVLink was also designed for CPU-GPU communication, with higher bandwidth than PCIe. Although NVLink is unlikely to be implemented on x86 systems by either AMD or Intel, IBM has collaborated with Nvidia to support NVLink on its POWER microprocessors. With supported microprocessors, NVLink can replace PCIe entirely for all links.

Links

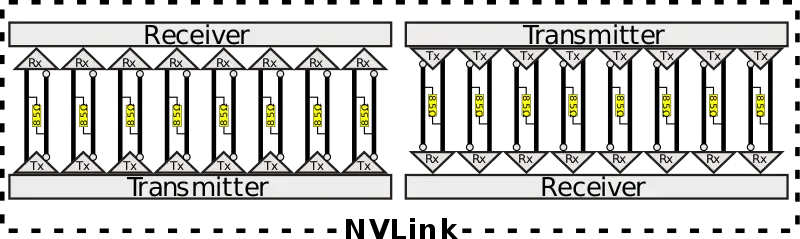

An NVLink channel is called a Brick (or an NVLink Brick). A single NVLink is a bidirectional interface comprising 8 differential pairs in each direction, for a total of 32 wires. The pairs are DC-coupled and use an 85 Ω differential termination with an embedded clock. To ease routing, NVLink supports lane reversal and lane polarity inversion, meaning that the physical lane ordering and the polarity of the pairs between the two devices may be reversed.
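To make lane reversal and polarity inversion concrete, here is a minimal Python sketch of how a receiver might undo both, assuming it has already learned (for example during link training) whether the lane order is reversed and which physical lanes have swapped P/N wires. The functions `wire_board` and `correct_lanes` and the one-bit-per-lane representation are hypothetical; real NVLink PHYs handle this in hardware.

```python
from typing import List

LANES = 8  # differential pairs per direction, as described above


def wire_board(tx_bits: List[int], reversed_order: bool, inverted: List[bool]) -> List[int]:
    """Model the PCB: optionally reverse lane order, then flip bits on P/N-swapped lanes."""
    routed = tx_bits[::-1] if reversed_order else list(tx_bits)
    return [b ^ 1 if inv else b for b, inv in zip(routed, inverted)]


def correct_lanes(rx_bits: List[int], reversed_order: bool, inverted: List[bool]) -> List[int]:
    """Recover logical lane bits from one bit sampled per physical lane.

    inverted[i] marks physical lane i as having its P/N wires swapped.
    """
    if len(rx_bits) != LANES or len(inverted) != LANES:
        raise ValueError("expected one entry per lane")
    # Undo polarity inversion per physical lane: a swapped pair flips every bit.
    fixed = [b ^ 1 if inv else b for b, inv in zip(rx_bits, inverted)]
    # Undo lane reversal: physical lane i carries logical lane LANES - 1 - i.
    return fixed[::-1] if reversed_order else fixed


tx = [1, 0, 1, 1, 0, 0, 1, 0]
swapped = [False, True, False, False, True, False, False, False]
rx = wire_board(tx, reversed_order=True, inverted=swapped)
print(correct_lanes(rx, reversed_order=True, inverted=swapped) == tx)  # True
```

Polarity is corrected before reordering because inversion is a property of a physical pair, while reversal only relabels which physical lane carries which logical lane.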

Packet

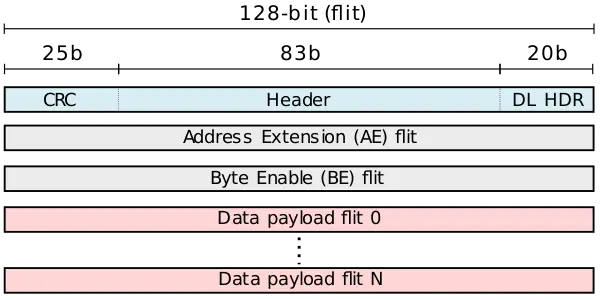

A single NVLink packet ranges from one to eighteen flits. Each flit is 128 bits (16 bytes). A 256-byte transfer uses a single header flit and 16 payload flits, for a peak unidirectional efficiency of 94.12%; a 64-byte transfer uses a single header flit and 4 data payload flits, for an efficiency of 80%. With bidirectional traffic, these figures drop slightly to 88.9% and 66.7%, respectively.
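The efficiency figures above are simple flit ratios. This sketch reproduces them; the function name is hypothetical, and the bidirectional case is modeled as one extra flit of overhead per transfer, which is our reading of how 88.9% (16/18) and 66.7% (4/6) arise rather than something stated in the source.

```python
FLIT_BYTES = 16  # one 128-bit flit


def efficiency(payload_bytes: int, overhead_flits: int) -> float:
    """Fraction of flits that carry payload for a single transfer."""
    data_flits = -(-payload_bytes // FLIT_BYTES)  # ceiling division
    return data_flits / (data_flits + overhead_flits)


for size in (256, 64):
    uni = efficiency(size, overhead_flits=1)  # one header flit
    bi = efficiency(size, overhead_flits=2)   # assumption: one extra flit under bidirectional traffic
    print(f"{size:>3} B: {uni:.2%} unidirectional, {bi:.1%} bidirectional")
```

This prints 94.12% and 88.9% for 256 bytes, and 80.00% and 66.7% for 64 bytes.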

A packet consists of at least a header flit and, optionally, an address extension (AE) flit, a byte enable (BE) flit, and up to 16 data payload flits. A typical transaction consists of at least a request and a response, although posted operations do not require a response.

Header flit

The header flit is 128 bits wide. It comprises a 25-bit CRC field (discussed below), an 83-bit transaction field, and a 20-bit data link (DL) layer field. The transaction field includes the request type, address, flow control bits, and tag identifier. The data link field includes items such as the packet length, application number tag, and acknowledge identifier.
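The three header fields add up to exactly one flit (25 + 83 + 20 = 128 bits). The sketch below packs and unpacks them; the bit placement (DL in the low bits, CRC in the high bits) and the little-endian serialization are hypothetical choices, since the actual field layout is not described here.

```python
CRC_BITS, TXN_BITS, DL_BITS = 25, 83, 20
FLIT_BITS = CRC_BITS + TXN_BITS + DL_BITS  # 128


def _check(value: int, width: int) -> None:
    if not 0 <= value < (1 << width):
        raise ValueError(f"{value:#x} does not fit in {width} bits")


def pack_header(crc: int, txn: int, dl: int) -> bytes:
    """Pack the three header fields into one 16-byte flit.

    Assumed layout (hypothetical): DL in bits 0-19, transaction in bits 20-102,
    CRC in bits 103-127, serialized little-endian.
    """
    for value, width in ((crc, CRC_BITS), (txn, TXN_BITS), (dl, DL_BITS)):
        _check(value, width)
    word = (crc << (TXN_BITS + DL_BITS)) | (txn << DL_BITS) | dl
    return word.to_bytes(FLIT_BITS // 8, "little")


def unpack_header(flit: bytes) -> tuple:
    """Inverse of pack_header: returns (crc, txn, dl)."""
    word = int.from_bytes(flit, "little")
    dl = word & ((1 << DL_BITS) - 1)
    txn = (word >> DL_BITS) & ((1 << TXN_BITS) - 1)
    crc = word >> (TXN_BITS + DL_BITS)
    return crc, txn, dl


flit = pack_header(crc=0x1ABCDEF, txn=(1 << 83) - 1, dl=0x12345)
print(len(flit), unpack_header(flit) == (0x1ABCDEF, (1 << 83) - 1, 0x12345))  # 16 True
```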

The address extension (AE) flit is reserved for fairly static bits and is usually transmitted only when those bits change.
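Sending the AE flit only on change amounts to caching its last value on the sender side. In this sketch, `AddressExtensionCache` is a hypothetical helper; how a real NVLink header signals that an AE flit follows is not described here, so presence is returned as a separate flag.

```python
from typing import List, Optional, Tuple


class AddressExtensionCache:
    """Sender-side sketch: include the AE flit only when its bits change."""

    def __init__(self) -> None:
        self._last_ae: Optional[bytes] = None

    def build(self, header: bytes, ae: bytes, data: List[bytes]) -> Tuple[List[bytes], bool]:
        """Return the flits to send and whether an AE flit was included."""
        send_ae = ae != self._last_ae
        if send_ae:
            self._last_ae = ae  # the receiver is assumed to keep using this value until it changes
        flits = [header] + ([ae] if send_ae else []) + data
        return flits, send_ae


cache = AddressExtensionCache()
hdr, ae1, ae2 = bytes(16), b"\x01" * 16, b"\x02" * 16
print(cache.build(hdr, ae1, [])[1])  # True: first use of this AE value
print(cache.build(hdr, ae1, [])[1])  # False: unchanged, AE flit elided
print(cache.build(hdr, ae2, [])[1])  # True: AE bits changed
```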

Error Correction

Nvidia specifies the error rate at 1 in 10¹². Error detection is done through the 25-bit cyclic redundancy check (CRC) field in the header. The transmitter is responsible for keeping the data in a replay buffer. Transmitted packets are sequenced, and a positive acknowledgment is sent back to the source upon a good CRC. A missing acknowledgment following a timeout initiates a replay sequence, retransmitting the unacknowledged packet and all subsequent packets.
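The replay mechanism behaves like a go-back-N scheme. The sketch below models only the transmitter's bookkeeping: packets stay buffered until acknowledged, and a timeout replays everything still outstanding. The class name is hypothetical, and cumulative acknowledgments are an assumption; buffer sizing, timers, and the acknowledgment encoding are omitted.

```python
from typing import Dict, List, Tuple


class ReplayBuffer:
    """Transmitter-side go-back-N sketch (hypothetical; sizes, timers, and ack encoding omitted)."""

    def __init__(self) -> None:
        self._next_seq = 0
        self._pending: Dict[int, bytes] = {}  # insertion-ordered: oldest first

    def send(self, packet: bytes) -> int:
        """Assign a sequence number and keep a copy until it is acknowledged."""
        seq = self._next_seq
        self._next_seq += 1
        self._pending[seq] = packet
        return seq

    def ack(self, seq: int) -> None:
        """Assumption: acks are cumulative, releasing `seq` and every earlier packet."""
        for s in [s for s in self._pending if s <= seq]:
            del self._pending[s]

    def on_timeout(self) -> List[Tuple[int, bytes]]:
        """Replay the oldest unacknowledged packet and everything sent after it."""
        return list(self._pending.items())


rb = ReplayBuffer()
for payload in (b"A", b"B", b"C"):
    rb.send(payload)
rb.ack(0)               # packet 0 passed its CRC check
print(rb.on_timeout())  # [(1, b'B'), (2, b'C')]
```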

The CRC field consists of 25 bits, allowing the detection of up to 5 random bit errors in the largest packet or, alternatively, of burst errors of up to 25 sequential bits on a differential pair. The CRC is calculated over the header and the previous packet's payload, eliminating the need for a separate CRC field for the data payload. Because the header also carries the packet length, the length is covered by the CRC check as well.

For example, consider a packet consisting of a header and two data payload flits (32 bytes). The next packet's CRC is computed over its own header as well as the two data payload flits from the prior transaction. If this is the first transaction, the CRC assumes the prior transaction was a NULL transaction.
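The chaining in the example above can be sketched as follows. `crc25` is a generic bitwise 25-bit CRC, and `POLY` is a hypothetical placeholder generator, not NVLink's actual polynomial. The order in which the previous payload and the current header are fed to the CRC, and modeling the NULL transaction as an empty payload, are also assumptions.

```python
CRC_WIDTH = 25
POLY = 0x1000007  # hypothetical placeholder generator, NOT NVLink's actual polynomial


def crc25(data: bytes) -> int:
    """Bitwise MSB-first CRC with a 25-bit register and zero initial value."""
    mask = (1 << CRC_WIDTH) - 1
    crc = 0
    for byte in data:
        for i in range(7, -1, -1):
            feedback = ((crc >> (CRC_WIDTH - 1)) & 1) ^ ((byte >> i) & 1)
            crc = (crc << 1) & mask
            if feedback:
                crc ^= POLY
    return crc


def stream_crcs(packets):
    """Compute each packet's CRC over the previous payload plus its own header.

    packets: list of (header_bytes, payload_bytes); header_bytes stands in for the
    header's non-CRC bits. The first packet's predecessor is a NULL transaction,
    modeled here as an empty payload.
    """
    prev_payload = b""
    crcs = []
    for header, payload in packets:
        crcs.append(crc25(prev_payload + header))
        prev_payload = payload
    return crcs


clean = [(b"\x10" * 13, b"\xaa" * 32), (b"\x20" * 13, b"")]
corrupted = [(b"\x10" * 13, b"\xab" + b"\xaa" * 31), (b"\x20" * 13, b"")]
ok, bad = stream_crcs(clean), stream_crcs(corrupted)
print(ok[0] == bad[0], ok[1] != bad[1])  # True True
```

A flipped bit in the first packet's payload leaves that packet's own CRC unchanged and is caught by the next packet's CRC, which is why a payload is only protected once the following header has been received.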

Data Rates

| | NVLink 1.0 | NVLink 2.0 | NVLink 3.0 | NVLink 4.0 |
|---|---|---|---|---|
| Signaling rate (per lane) | 20 GT/s | 25 GT/s | 50 GT/s | 100 GT/s |
| Lanes per link (per direction) | 8 | 8 | 4 | 2 |
| Bandwidth per link (per direction) | 20 GB/s | 25 GB/s | 25 GB/s | 25 GB/s |
| Bidirectional bandwidth per link | 40 GB/s | 50 GB/s | 50 GB/s | 50 GB/s |
| Links per chip | 4 (P100) | 6 (V100) | 12 (A100) | 18 (H100) |
| Bidirectional bandwidth per chip | 160 GB/s (P100) | 300 GB/s (V100) | 600 GB/s (A100) | 900 GB/s (H100) |
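Every bandwidth row in the table follows from the signaling rate, lane count, and link count. This sketch derives them, under the simplification that each transfer carries one bit and that line coding and packet overhead are ignored; the function name is hypothetical.

```python
GENERATIONS = {
    # name: (GT/s per lane, lanes per direction, links per chip, chip)
    "NVLink 1.0": (20, 8, 4, "P100"),
    "NVLink 2.0": (25, 8, 6, "V100"),
    "NVLink 3.0": (50, 4, 12, "A100"),
    "NVLink 4.0": (100, 2, 18, "H100"),
}


def derive(gt_per_s: int, lanes: int, links: int) -> tuple:
    """Return (GB/s per direction per link, bidirectional GB/s per link, bidirectional GB/s per chip).

    Simplification: one transfer carries one bit; encoding and packet overhead are ignored.
    """
    per_direction = gt_per_s * lanes / 8  # Gb/s -> GB/s
    bidirectional = 2 * per_direction
    return per_direction, bidirectional, bidirectional * links


for name, (rate, lanes, links, chip) in GENERATIONS.items():
    per_dir, bidir, chip_bw = derive(rate, lanes, links)
    print(f"{name}: {per_dir:.0f} GB/s/dir, {bidir:.0f} GB/s/link, {chip_bw:.0f} GB/s ({chip})")
```

The later generations trade lane count for signaling rate, which is why per-link bandwidth stays at 25 GB/s per direction while per-chip bandwidth grows with the number of links.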

NVLink 1.0

NVLink 1.0 was first introduced with the P100 GPGPU, based on the Pascal microarchitecture. The P100 has its own HBM memory in addition to being able to access system memory on the CPU side. It has four NVLinks, each supporting 20 GB/s per direction (40 GB/s bidirectional), for a total aggregate bandwidth of 160 GB/s. In the most basic configuration, all four links connect two GPUs for 160 GB/s of GPU-GPU bandwidth, while PCIe lanes connect the GPUs to the CPU for accessing system DRAM.

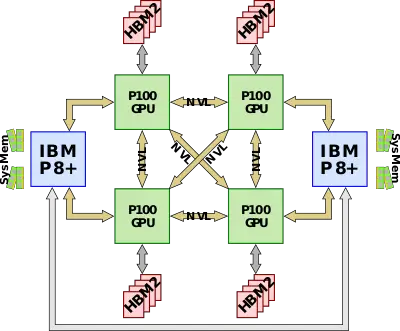

The first CPU to support NVLink natively was IBM's POWER8+, which allowed the NVLink interconnect to extend to the CPU, replacing the slower PCIe link. Since the P100 has only four NVLinks, a single link from each GPU can be used to connect it to the CPU. A typical fully configured node consists of four P100 GPUs and two POWER8+ CPUs. The four GPUs are fully connected to each other, with each GPU's fourth link going to a CPU.
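Whether a topology fits the P100's four NVLinks is a port-budget question: count each device's links and compare against its limit. In this sketch the device labels and the assignment of two GPUs to each CPU socket are hypothetical; only the four-link budget and the "fully connected plus one CPU link" shape come from the text.

```python
from collections import Counter
from itertools import combinations


def port_usage(links):
    """Count NVLink ports used per device; each (a, b) tuple is one physical link."""
    used = Counter()
    for a, b in links:
        used[a] += 1
        used[b] += 1
    return used


def oversubscribed(links, limits):
    """Devices whose link count exceeds their NVLink port budget (unlisted devices are unchecked)."""
    return {dev: n for dev, n in port_usage(links).items() if n > limits.get(dev, n)}


gpus = [f"gpu{i}" for i in range(4)]
node = list(combinations(gpus, 2))  # 6 GPU-GPU links: fully connected
# Hypothetical CPU assignment: two GPUs per POWER8+ socket.
node += [("gpu0", "cpu0"), ("gpu1", "cpu0"), ("gpu2", "cpu1"), ("gpu3", "cpu1")]
budget = {g: 4 for g in gpus}  # P100: four NVLinks
print(oversubscribed(node, budget))                       # {}
print(oversubscribed(node + [("gpu0", "gpu1")], budget))  # {'gpu0': 5, 'gpu1': 5}
```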

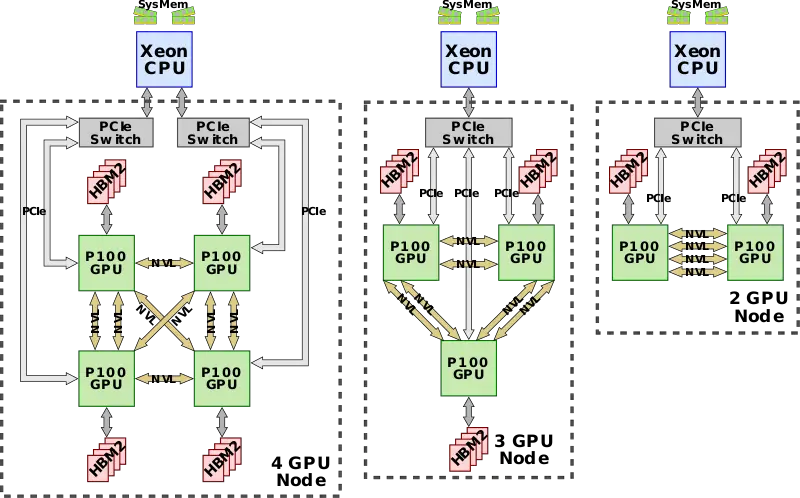

Since Intel CPUs do not support NVLink (and are unlikely ever to), several configurations with two to four P100 GPUs are possible. In each configuration, the GPUs are fully connected to each other, and every two GPUs are attached to a single PCIe switch that connects directly to the CPU. Each link provides 40 GB/s of bidirectional bandwidth regardless of the configuration, and links can be aggregated: the more links used between two GPUs, the higher the bandwidth between them.
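Link aggregation means the bandwidth between two GPUs is simply the number of parallel links times 40 GB/s. The sketch below sums parallel links per GPU pair; the function name and the three-GPU wiring with two links per pair are hypothetical illustrations, not a configuration taken from the source.

```python
from collections import Counter

LINK_BIDIR_GBS = 40  # NVLink 1.0 link, bidirectional


def pair_bandwidth(links):
    """Aggregate bidirectional GB/s between each device pair, summing parallel links."""
    counts = Counter(tuple(sorted(link)) for link in links)
    return {pair: n * LINK_BIDIR_GBS for pair, n in counts.items()}


two_gpu = [("gpu0", "gpu1")] * 4
print(pair_bandwidth(two_gpu))  # {('gpu0', 'gpu1'): 160}

# Hypothetical three-GPU wiring: two links per pair uses all 4 ports on each GPU.
three_gpu = [("gpu0", "gpu1")] * 2 + [("gpu0", "gpu2")] * 2 + [("gpu2", "gpu1")] * 2
print(pair_bandwidth(three_gpu))  # 80 GB/s for each pair
```

Sorting each pair makes `("gpu2", "gpu1")` and `("gpu1", "gpu2")` count as the same connection.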

DGX-1 Configuration

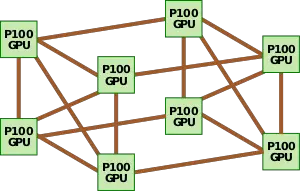

In 2016, Nvidia introduced the DGX-1 system, which takes full advantage of NVLink. The DGX-1 consists of eight Tesla P100 GPUs connected in a hybrid cube-mesh NVLink topology, along with dual-socket Xeon CPUs. The two Xeons communicate with each other over Intel's QPI, while the GPUs communicate over NVLink.
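A hybrid cube-mesh can be checked with a breadth-first search. This sketch assumes the commonly published layout, which is not spelled out in this article: two fully connected groups of four GPUs (two opposite cube faces with both diagonals), plus a link from GPU i to GPU i+4. The GPU numbering is hypothetical. Under that assumption every GPU uses exactly its four NVLinks and any GPU reaches any other in at most two hops.

```python
from collections import Counter, deque
from itertools import combinations


def hybrid_cube_mesh():
    """Assumed DGX-1 (P100) NVLink layout: two 4-GPU cliques joined by i <-> i+4 links."""
    links = set()
    for quad in (range(0, 4), range(4, 8)):
        links.update(combinations(quad, 2))
    links.update((i, i + 4) for i in range(4))
    return links


def hops_from(src, links, n=8):
    """Breadth-first search: NVLink hop count from src to every GPU."""
    adj = {i: set() for i in range(n)}
    for a, b in links:
        adj[a].add(b)
        adj[b].add(a)
    dist = {src: 0}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


links = hybrid_cube_mesh()
degree = Counter(d for link in links for d in link)
print(len(links), set(degree.values()))                          # 16 {4}
print(max(max(hops_from(s, links).values()) for s in range(8)))  # 2
```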

NVLink 2.0

NVLink 2.0 was first introduced with the V100 GPGPU, based on the Volta microarchitecture, along with IBM's POWER9. Nvidia added CPU mastering support, allowing both the GPU and the CPU to access each other's memory (i.e., with direct loads and stores) in a flat address space. The flat address space is supported through new address translation services. Additionally, native support for atomic operations was added for both the CPU and the GPU. With the flat address space, NVLink also gained cache coherence support, allowing the CPU to efficiently cache GPU memory, which significantly improves latency and thus performance. NVLink 2.0 raised the signaling rate to 25 Gbps per lane (25 GT/s), for 50 GB/s of bidirectional bandwidth per link. The V100 also increased the number of on-die NVLinks to 6, for a total aggregate bandwidth of 300 GB/s. Additional power-saving features were added as well, such as deactivating lanes when idle.

NVLink 2.0 was introduced with the second-generation DGX-1, but the full topology change took place with the DGX-2. With the DGX-2, Nvidia also introduced the NVSwitch, an 18-port NVLink switch. The 2-billion-transistor switch can route traffic from nine ports to any of the other nine ports. With 50 GB/s per port, the switch provides a total of 900 GB/s of bandwidth.

For the DGX-2, Nvidia uses six NVSwitches to fully connect every one of the eight GPUs to all the other seven GPUs on the same baseboard.

The two baseboards are then connected so that all 16 GPUs are fully connected.
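The DGX-2 port accounting can be sketched as a link list. This assumes, as the DGX-2 is commonly described but this article does not state, that each V100 spends one of its six NVLinks on each of the six switches on its baseboard, and that each switch uses eight further ports to reach its peer switch on the other baseboard. Device labels are hypothetical.

```python
from collections import Counter

GPUS_PER_BOARD = 8
SWITCHES_PER_BOARD = 6
SWITCH_PORTS = 18
PORT_BIDIR_GBS = 50


def dgx2_links():
    """Build the DGX-2 link list from the assumptions stated above (labels are hypothetical)."""
    links = []
    for board in (0, 1):
        for s in range(SWITCHES_PER_BOARD):
            for g in range(GPUS_PER_BOARD):
                # Each GPU spends one of its six NVLinks on each switch of its baseboard.
                links.append((("gpu", board, g), ("sw", board, s)))
    for s in range(SWITCHES_PER_BOARD):
        # Assumption: 8 links join switch s on board 0 to its peer on board 1.
        links += [(("sw", 0, s), ("sw", 1, s))] * GPUS_PER_BOARD
    return links


links = dgx2_links()
ports = Counter(end for link in links for end in link)
gpu_ports = {n for dev, n in ports.items() if dev[0] == "gpu"}
switch_ports = {n for dev, n in ports.items() if dev[0] == "sw"}
cross = sum(1 for a, b in links if a[1] != b[1])
print(gpu_ports, switch_ports)        # {6} {16}
print(SWITCH_PORTS * PORT_BIDIR_GBS)  # 900 GB/s per NVSwitch
print(cross * PORT_BIDIR_GBS)         # 2400 GB/s across the two baseboards
```

Under these assumptions each switch uses 16 of its 18 ports, and each V100 uses all six of its links.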

NVLink 3.0

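NVLink 3.0 was first introduced with the A100 GPGPU, based on the Ampere microarchitecture. NVLink 3.0 doubles the signaling rate to 50 Gbps per lane while halving the lanes per link to four, keeping per-link bandwidth at 25 GB/s per direction (50 GB/s bidirectional). The A100 doubles the link count to 12, for 600 GB/s of total bidirectional bandwidth.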

References

- IEEE Hot Chips 34 (HC34), 2022
- IEEE Hot Chips 30 (HC30), 2018
- IEEE Hot Chips 29 (HC29), 2017
- IEEE Hot Chips 28 (HC28), 2016