(→History) |

(→Architecture) |

||

| Line 18: | Line 18: | ||

== Architecture == | == Architecture == | ||

| − | |||

=== System === | === System === | ||

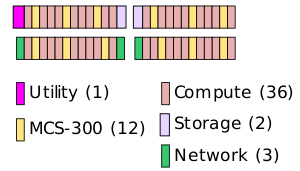

| − | { | + | Astra consists of 36 compute racks, 12 cooling racks, 3 networking racks, 2 storage racks, and a single utility rack. |

| + | |||

| + | :[[File:astra floorplan.svg|300px]] | ||

| + | |||

| + | :[[File:astra_supercomputer_illustration.png|500px]] | ||

| + | |||

| + | :[[File:astra 540-port switch.svg|thumb|right|540-port Switch]] | ||

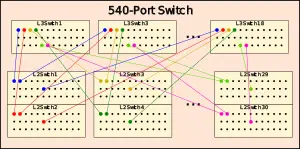

| + | Servers are linked via Mellanox IB EDR interconnect in a three-level fat tree topology with a 2:1 tapered fat-tree at L1. Astra uses three 540-port switches. Those are formed from 30 level 2 switches that provide 18 ports each (540 in total) with the remaining 18 links going for each of the 18 level 3 switches. The system has a peak Wall power of 1.6 MW. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! colspan="4" | Total Power (kW) | ||

| + | |- | ||

| + | ! Wall !! Peak !! Nominal ([[LINPACK]]) !! Idle | ||

| + | |- | ||

| + | | 1,631.5 || 1,440.3 || 1,357.3 || 274.9 | ||

| + | |} | ||

| + | |||

=== Compute Rack === | === Compute Rack === | ||

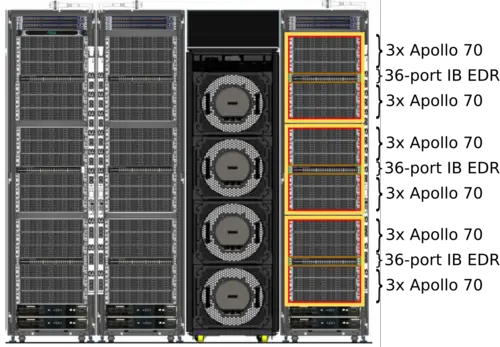

| − | + | Each of the 36 compute racks consist of 18 of HPE's Apollo 70 Chassis along with 3 36-port InfiniBand EDR switches. There is a single switch for every 6 chassis taking 24 ports. | |

| + | |||

| + | : [[File:astra racks and cooling (annotated).png|500px]] | ||

| + | |||

| + | A full rack with 72 nodes has 4,032 cores yielding a peak performance of 64.51 [[teraFLOPS]] with over 24.57 TB/s of peak bandwidth. | ||

| + | |||

| + | <table class="wikitable"> | ||

| + | <tr><th colspan="5">Full Rack Capabilities</th></tr> | ||

| + | <tr><th>Processors</th><td>144<br>72 × 2 × CPU</td></tr> | ||

| + | <tr><th>Core</th><td>4,032 (16,128 threads)<br>72 × 56 (224 threads)</td></tr> | ||

| + | <tr><th>FLOPS (SP)</th><td>64.51 TFLOPS<br>72 × 2 × 28 × 16 GFLOPS</td></tr> | ||

| + | <tr><th>FLOPS (DP)</th><td>32.26 TFLOPS<br>72 × 2 × 28 × 8 GFLOPS</td></tr> | ||

| + | <tr><th>Memory</th><td>9 TiB (DRR4)<br>72 × 2 × 8 × 8 GiB</td></tr> | ||

| + | <tr><th>Memory BW</th><td>24.57 TB/s<br>72 × 16 × 21.33 GB/s</td></tr> | ||

| + | </table> | ||

| + | |||

| + | Each compute rack has a projected peak power of 1295.8 kW (1436.0 kW Wall) with a nominal 1217.0 kW of power under [[linpack]]. | ||

=== Compute Node === | === Compute Node === | ||

{{empty section}} | {{empty section}} | ||

Revision as of 23:04, 23 August 2018

Astra is a petascale ARM supercomputer designed for Sandia National Laboratories and expected to be deployed in mid-2018. It is the first ARM-based supercomputer to exceed 1 petaFLOPS.

History

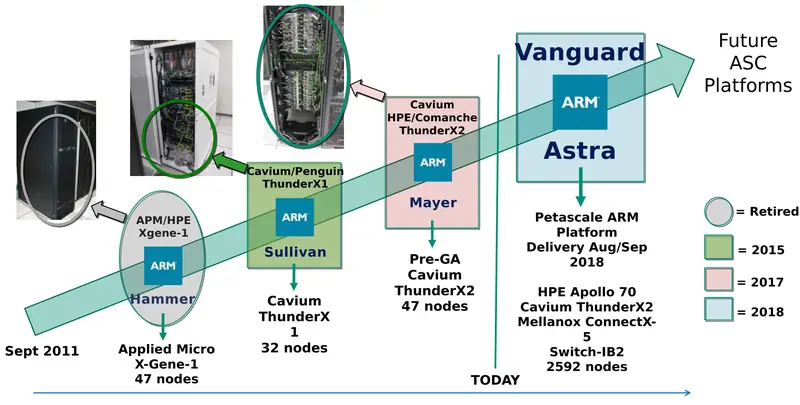

Astra is an ARM-based supercomputer expected to be deployed at Sandia National Laboratories. The computer is one of a series of prototypes commissioned by the U.S. Department of Energy as part of a program that evaluates the feasibility of emerging high-performance computing architectures as production platforms to support NNSA's mission. Specifically, Astra is designed to demonstrate the viability of ARM for DOE NNSA Supercomputing. Astra is the fourth prototype as part of the Vanguard project initiative and is by far the largest ARM-based supercomputer designed to that point.

Overview

Astra is the first ARM-based petascale supercomputer. The system consists of 5,184 Cavium ThunderX2 CN9975 processors, consuming slightly over 1.2 MW of power for a peak performance of 2.322 petaFLOPS. Each ThunderX2 CN9975 has 28 cores operating at 2 GHz. Astra has close to 700 terabytes of memory and uses a 3-level fat tree interconnect.

| Components | |
|---|---|
| Processors | 5,184 (2 × 72 × 36) |
| Racks | 36 |
| Peak FLOPS | 2.322 petaFLOPS (SP)<br>1.161 petaFLOPS (DP) |

| Total Memory | | |
|---|---|---|
| Type | DDR4 | NVMe |
| Node | 128 GiB | ? |
| Astra | 324 TiB | 403 TB |
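The totals in this overview can be cross-checked with a few lines of arithmetic. The sketch below is only an illustration: the function and constant names are made up for this example, and the per-core rates (16 GFLOPS single precision, 8 GFLOPS double precision) are the ones implied by the rack capability figures later in this article.

```python
# Figures taken from this article; all names here are illustrative only.
RACKS = 36
NODES_PER_RACK = 72
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 28
SP_GFLOPS_PER_CORE = 16   # per-core peak implied by the rack figures
DP_GFLOPS_PER_CORE = 8
MEM_PER_NODE_GIB = 128


def system_totals():
    """Derive system-wide totals from the per-rack and per-node figures."""
    nodes = RACKS * NODES_PER_RACK
    processors = nodes * SOCKETS_PER_NODE
    cores = processors * CORES_PER_SOCKET
    return {
        "nodes": nodes,
        "processors": processors,
        "cores": cores,
        "sp_pflops": cores * SP_GFLOPS_PER_CORE / 1e6,  # GFLOPS -> PFLOPS
        "dp_pflops": cores * DP_GFLOPS_PER_CORE / 1e6,
        "memory_tib": nodes * MEM_PER_NODE_GIB / 1024,  # GiB -> TiB
    }


if __name__ == "__main__":
    for name, value in system_totals().items():
        print(f"{name}: {value}")
```

Under these assumptions the derived processor count, peak FLOPS, and DDR4 capacity agree with the figures listed above.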

Architecture

System

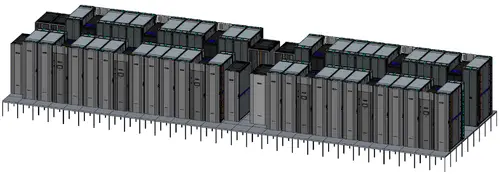

Astra consists of 36 compute racks, 12 cooling racks, 3 networking racks, 2 storage racks, and a single utility rack.

Servers are linked via a Mellanox InfiniBand EDR interconnect in a three-level fat-tree topology with a 2:1 taper at level 1. Astra uses three 540-port switches. Each is formed from 30 level-2 switches that provide 18 ports each (540 in total), with the remaining 18 links on each level-2 switch going one to each of the 18 level-3 switches. The system has a peak wall power of 1.6 MW.
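The port arithmetic of one 540-port switch can be sketched as follows. This is a rough check rather than a description of the actual hardware: it assumes each internal switch is a 36-port device (18 external ports plus the 18 remaining links mentioned above), and all names are hypothetical.

```python
# Assumption: every internal switch has 36 ports (18 external + 18 internal).
PORTS_PER_SWITCH = 36
L2_SWITCHES = 30
L3_SWITCHES = 18
EXTERNAL_PORTS_PER_L2 = 18


def director_ports():
    """Return (external ports, uplinks per L2 switch, links per L3 switch)."""
    external = L2_SWITCHES * EXTERNAL_PORTS_PER_L2
    uplinks_per_l2 = PORTS_PER_SWITCH - EXTERNAL_PORTS_PER_L2
    # Spread all level-2 uplinks evenly across the level-3 switches.
    links_per_l3 = L2_SWITCHES * uplinks_per_l2 // L3_SWITCHES
    return external, uplinks_per_l2, links_per_l3


if __name__ == "__main__":
    external, uplinks, per_l3 = director_ports()
    print(f"external ports: {external}")
    print(f"uplinks per level-2 switch: {uplinks}")
    print(f"links terminating on each level-3 switch: {per_l3}")
```

With one link from every level-2 switch to every level-3 switch, each level-3 switch would terminate 30 links under this assumption.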

| Total Power (kW) | |||

|---|---|---|---|

| Wall | Peak | Nominal (LINPACK) | Idle |

| 1,631.5 | 1,440.3 | 1,357.3 | 274.9 |

Compute Rack

Each of the 36 compute racks consists of 18 HPE Apollo 70 chassis along with three 36-port InfiniBand EDR switches. There is one switch for every six chassis (24 nodes), which take 24 of its ports.

A full rack with 72 nodes has 4,032 cores, yielding a peak single-precision performance of 64.51 teraFLOPS with about 24.57 TB/s of peak memory bandwidth.
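The per-switch port budget is consistent with the 2:1 taper at level 1. The sketch below works it through, assuming one InfiniBand port per node and that every port not used by a node is an uplink; neither assumption is stated in the source, and the names are illustrative.

```python
# Rack figures from this article; the uplink count is an assumption.
CHASSIS_PER_RACK = 18
NODES_PER_RACK = 72
SWITCHES_PER_RACK = 3
PORTS_PER_SWITCH = 36


def leaf_switch_budget():
    """Return (node-facing ports, assumed uplinks, taper ratio) per switch."""
    nodes_per_chassis = NODES_PER_RACK // CHASSIS_PER_RACK
    chassis_per_switch = CHASSIS_PER_RACK // SWITCHES_PER_RACK
    down = nodes_per_chassis * chassis_per_switch  # one port per node (assumed)
    up = PORTS_PER_SWITCH - down                   # remaining ports as uplinks
    return down, up, down / up


if __name__ == "__main__":
    down, up, ratio = leaf_switch_budget()
    print(f"{down} node ports, {up} uplinks, taper {ratio:g}:1")
```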

| Full Rack Capabilities | |
|---|---|
| Processors | 144<br>72 × 2 × CPU |
| Cores | 4,032 (16,128 threads)<br>72 × 56 (224 threads) |
| FLOPS (SP) | 64.51 TFLOPS<br>72 × 2 × 28 × 16 GFLOPS |
| FLOPS (DP) | 32.26 TFLOPS<br>72 × 2 × 28 × 8 GFLOPS |
| Memory | 9 TiB (DDR4)<br>72 × 2 × 8 × 8 GiB |
| Memory BW | 24.57 TB/s<br>72 × 16 × 21.33 GB/s |
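The rack figures follow directly from the per-node factors given in the table. As a minimal sketch, with hypothetical names and with four threads per core inferred from the 16,128-thread total, the calculation could look like this:

```python
def rack_capabilities(
    nodes=72,
    sockets_per_node=2,
    cores_per_socket=28,
    threads_per_core=4,        # inferred: 16,128 threads / 4,032 cores
    sp_gflops_per_core=16,
    dp_gflops_per_core=8,
    dimms_per_socket=8,
    gib_per_dimm=8,
    mem_channels_per_node=16,
    gbs_per_channel=21.33,
):
    """Recompute the full-rack capability figures from per-node factors."""
    processors = nodes * sockets_per_node
    cores = processors * cores_per_socket
    return {
        "processors": processors,
        "cores": cores,
        "threads": cores * threads_per_core,
        "sp_tflops": cores * sp_gflops_per_core / 1000,
        "dp_tflops": cores * dp_gflops_per_core / 1000,
        "memory_tib": processors * dimms_per_socket * gib_per_dimm / 1024,
        "mem_bw_tbs": nodes * mem_channels_per_node * gbs_per_channel / 1000,
    }


if __name__ == "__main__":
    for name, value in rack_capabilities().items():
        print(f"{name}: {value}")
```

Changing a single factor, such as the DIMM size, shows how the rack-level totals would scale.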

The 36 compute racks together have a projected peak power of 1,295.8 kW (1,436.0 kW wall), with a nominal 1,217.0 kW under LINPACK.

Compute Node

| This section is empty; you can help add the missing info by editing this page. |

Socket

| This section is empty; you can help add the missing info by editing this page. |

Full-node

| This section is empty; you can help add the missing info by editing this page. |

Bibliography

- SNL (personal communication, August 2018).

- DOE. (June 18, 2018). "Arm-based supercomputer prototype to be deployed at Sandia National Laboratories" [Press Release]

- Kevin Pedretti, Jim H. Laros III, Si Hammond. (June 28, 2018). "Vanguard Astra: Maturing the ARM Software Ecosystem for U.S. DOE/ASC Supercomputing"