(→Key changes from {{\\|Mongoose 1}}/{{\\|Mongoose 2|M2}}) |

(fetch, BPU) |

||

| Line 39: | Line 39: | ||

* Core | * Core | ||

** Front-end | ** Front-end | ||

| − | *** | + | *** Larger ITLB (512 entries, up from 256) |

| − | *** 6-way decode (from 4) | + | *** Larger branch prediction |

| + | **** Larger microBTB (128-entry, up from 64) | ||

| + | **** Larger and Wider L2 BTB (2x BW, 16K-entry, up from 8K) | ||

| + | **** Improved taken latency on main BTB | ||

| + | *** Larger [[instruction queue]] (40 entries, up from 24) | ||

| + | *** Larger [[instruction fetch]] (48B/cycle, up from 24B/cycle) | ||

| + | *** 6-way decode (from 4-way) | ||

*** µOP fusion | *** µOP fusion | ||

**** new fuse address generation and memory µOP support | **** new fuse address generation and memory µOP support | ||

**** new fuse literal generation µOP support | **** new fuse literal generation µOP support | ||

** Back-end | ** Back-end | ||

| − | *** Larger [[ReOrder buffer]] (228 entries, from | + | *** Larger [[ReOrder buffer]] (228 entries, from 100 entries) |

*** New fastpath logical shift of up to 3 places | *** New fastpath logical shift of up to 3 places | ||

| − | *** Larger dispatch window (12 µOP/cycle, from 9) | + | *** Larger dispatch window (12 µOP/cycle, up from 9) |

*** Larger Integer physical register file | *** Larger Integer physical register file | ||

*** Larger FP physical register | *** Larger FP physical register | ||

| Line 61: | Line 67: | ||

****** crypto EU, simple vector EU, vector shuffle/shift/mul, new FP store, new FP conversion | ****** crypto EU, simple vector EU, vector shuffle/shift/mul, new FP store, new FP conversion | ||

** Memory subsystem | ** Memory subsystem | ||

| + | *** 4x Larger L2 BTB (4k-entry, up from 1k-entry) | ||

*** New L3 Cache | *** New L3 Cache | ||

**** 4 MiB | **** 4 MiB | ||

| − | *** | + | *** Double bandwidth (32B (2x16B)/cycle from 16B/cycle) |

**** fast paired 128-bit loads and stores | **** fast paired 128-bit loads and stores | ||

* branch misprediction penalty increased (16 cycles, from 14) | * branch misprediction penalty increased (16 cycles, from 14) | ||

| Line 76: | Line 83: | ||

=== Memory Hierarchy === | === Memory Hierarchy === | ||

{{empty section}} | {{empty section}} | ||

| + | |||

| + | == Overview == | ||

| + | The M3 was originally planned to be an incremental design over the original {{\\|M1}}. Design for the M3 started in 2014 with RTL design starting in 2015. When started, the goals for the M3 were around 10-15% performance improvements and the polishing of low-hanging fruits that were left from their first generation design. Samsung stated that throughout the development cycle of the M3, goals were drastically changed for considerably higher performance along with a much wider and deeper pipeline. First M3-based product was productized in Q1 2018 on Samsung's own [[10 nm process]] operating at 2.7 GHz. | ||

== Core == | == Core == | ||

| − | {{ | + | The M3 is an [[out-of-order]] microprocessor with a 6-way decode and a 12-way dispatch. |

| + | |||

| + | === Front end === | ||

| + | The front-end of the M3 is tasked with the prediction of future instruction streams, the fetching of instructions, and the code of the [[ARM]] instructions into [[micro-operations]] to be executed by the back-end. | ||

| + | |||

| + | ==== Fetch & pre-decoding ==== | ||

| + | With the help of the [[branch predictor]], the instructions should already be found in the [[level 1 instruction cache]]. The L1I cache is 64 KiB, 4-way [[set associative]]. Samsung kept the L1I cache the same as prior generations. The L1I cache and has its own [[iTLB]] consisting of 512 entries, double the prior generation. A large change in the M3 is the instruction fetch bandwidth. Previously, up to 24 bytes could be read each cycle into the [[instruction queue]]. In the M3, now 48 bytes (up to 12 [[ARM]] instructions) are read each cycle into the [[instruction queue]] which allows them to hide very short [[branch bubbles]] and deliver a large number of instructions to be decoded by a larger decoder. The [[instruction queue]] is a slightly more complex component than a simple buffer. The byte stream gets split up into the [[ARM]] instructions its made off, including dealing with the various misaligned ARM instructions such as in the case of {{arm|thumb|thumb mode}}. If the queue is filled to capacity, the fetch is gated for a cycle in order to allow the queue to naturally decrease. | ||

| + | |||

| + | ===== Branch Predictor ==== | ||

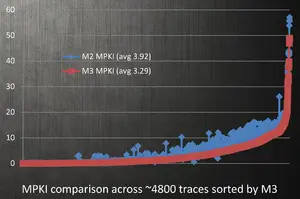

| + | [[File:m3-mpki rate.png|thumb|right|miss-predict/1K instructions is reduced by ~15% over the {{\\|M2}}.]] | ||

| + | The M1 has a perceptron branch predictor with a couple of perceptrons which can handle two branches per cycle. The branch predictor on the M3 largely enhances the one found in the {{\\|M1}}. A number of new weights were added and previous weights were re-tuned for various case accuracy. The unit is capable of indirect predictions as well as loop and stream predictions when it detects those traffic patterns. The indirect branches received a lot of attention in the M3. Additionally, the capacity of the micro-[[BTB]] was doubled to 128 entries which is used for caching very small tight loops and other hot kernels. Although the buffer size itself has not been increased, the average branch taken latency has also been improved through improvements of [[branch]]-taken turn-around time for various cases that cold be expidited. The second-level BTB capacity was also doubled to 16K entries deep. | ||

| + | |||

| + | In total, Samsung reported a net reduction of the average [[miss-predictions per thousand instructions]] (MPKI) of around 15% (shown in the graph on the right) over the {{\\|M2}}. | ||

| + | |||

| + | The branch predictor feeds a decoupled instruction address queue which in turn feeds the instruction cache. | ||

== All M3 Processors == | == All M3 Processors == | ||

| Line 115: | Line 139: | ||

| − | == | + | == Bibliography == |

| + | * {{hcbib|30}} | ||

* LLVM: lib/Target/AArch64/AArch64SchedExynosM3.td | * LLVM: lib/Target/AArch64/AArch64SchedExynosM3.td | ||

Revision as of 10:24, 12 January 2019

| Mongoose 3 µarch | |
|---|---|
| General Info | |
| Arch Type | CPU |
| Designer | Samsung |
| Manufacturer | Samsung |
| Introduction | 2018 |
| Process | 10 nm |
| Core Configs | 4 |
| Pipeline | |
| OoOE | Yes |
| Speculative | Yes |
| Reg Renaming | Yes |
| Decode | 6-way |
| Instructions | |
| ISA | ARMv8 |
| Succession | |

Mongoose 3 (M3) is a 10 nm ARM microarchitecture designed by Samsung for its consumer electronics. It is the successor to the Mongoose 2.


Process Technology

The M3 was fabricated on Samsung's second-generation 10 nm process, 10LPP (Low Power Plus).

Compiler support

| Compiler | Arch-Specific | Arch-Favorable |
|---|---|---|
| GCC | -mcpu=exynos-m3 | -mtune=exynos-m3 |
| LLVM | -mcpu=exynos-m3 | -mtune=exynos-m3 |

Architecture

Key changes from M2

- 10 nm (10LPP) process (from 1st-gen 10LPE)
- Core
  - Front-end
    - Larger ITLB (512 entries, up from 256)
    - Larger branch predictor
      - Larger microBTB (128 entries, up from 64)
      - Larger and wider L2 BTB (2x bandwidth, 16K entries, up from 8K)
      - Improved taken latency on main BTB
    - Larger instruction queue (40 entries, up from 24)
    - Larger instruction fetch (48 B/cycle, up from 24 B/cycle)
    - 6-way decode (from 4-way)
    - µOP fusion
      - New address-generation and memory µOP fusion support
      - New literal-generation µOP fusion support
  - Back-end
    - Larger reorder buffer (228 entries, from 100 entries)
    - New fast-path logical shift of up to 3 places
    - Larger dispatch window (12 µOP/cycle, up from 9)
    - Larger integer physical register file
    - Larger FP physical register file
    - Integer cluster
      - 9 pipes (from 7)
        - New pipe for a second load unit
        - New pipe for a second ALU with 3-operand support and MUL/DIV
      - Double throughput for most integer operations
    - Floating-point cluster
      - 3 pipes (from 3)
      - Throughput of most FP operations increased by 50% or doubled
      - Additional EUs
        - crypto EU, simple vector EU, vector shuffle/shift/mul, new FP store, new FP conversion
  - Memory subsystem
    - 4x larger L2 TLB (4K entries, up from 1K)
    - New L3 cache
      - 4 MiB
    - Double bandwidth (32 B (2x16 B)/cycle, from 16 B/cycle)
      - Fast paired 128-bit loads and stores
  - Front-end
    - Branch misprediction penalty increased (16 cycles, from 14)


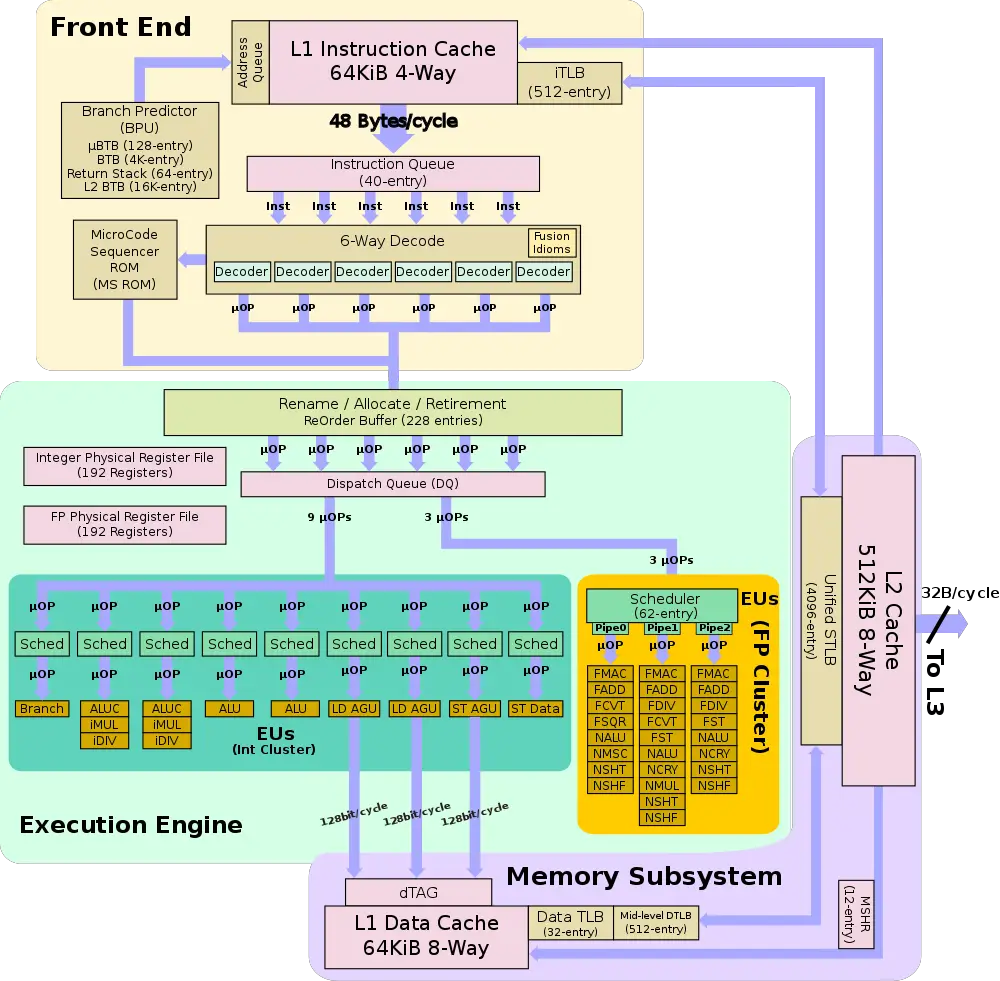

Block Diagram

Individual Core

Memory Hierarchy


Overview

The M3 was originally planned as an incremental design over the original M1. Design work on the M3 started in 2014, with RTL design starting in 2015. At the outset, the goals were a performance improvement of around 10-15% and picking off the low-hanging fruit left over from the first-generation design. Samsung stated that over the course of the M3's development, the goals changed drastically toward considerably higher performance, along with a much wider and deeper pipeline. The first M3-based product shipped in Q1 2018. It was built on Samsung's own 10 nm process and operated at 2.7 GHz.

Core

The M3 is an out-of-order microprocessor with a 6-way decode and a 12-way dispatch.

Front end

The front end of the M3 is responsible for three tasks: predicting future instruction streams, fetching instructions, and decoding ARM instructions into micro-operations for the back-end to execute.
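A toy cycle model shows how the M3's fetch width relates to its decode width. This is a minimal sketch, not Samsung's design. It makes several assumptions:

- Instructions are fixed 4-byte A64 instructions, so a 48-byte fetch block holds 12.
- The instruction queue has 40 entries and decode is 6-way, both taken from the key-changes list.
- Within a cycle, decode drains the queue before fetch fills it.
- Fetch is gated for a cycle whenever the queue has no room for a whole fetch block.

The names `simulate` and `FrontEndStats` are hypothetical.

```python
from dataclasses import dataclass

FETCH_BYTES_PER_CYCLE = 48  # M3 fetch bandwidth from the text
INSN_BYTES = 4              # assumption: fixed-width 32-bit A64 instructions
QUEUE_CAPACITY = 40         # instruction-queue entries (key-changes list)
DECODE_WIDTH = 6            # 6-way decode


@dataclass
class FrontEndStats:
    cycles: int = 0
    decoded: int = 0
    gated_cycles: int = 0


def simulate(cycles: int) -> FrontEndStats:
    """Hypothetical model: fetch fills the queue, decode drains it."""
    fetch_block = FETCH_BYTES_PER_CYCLE // INSN_BYTES  # 12 instructions
    occupancy = 0
    stats = FrontEndStats()
    for _ in range(cycles):
        # Decode removes up to DECODE_WIDTH instructions from the queue head.
        drained = min(DECODE_WIDTH, occupancy)
        occupancy -= drained
        stats.decoded += drained
        # Fetch only if a whole block fits; otherwise gate fetch this cycle.
        if occupancy + fetch_block <= QUEUE_CAPACITY:
            occupancy += fetch_block
        else:
            stats.gated_cycles += 1
        stats.cycles += 1
    return stats
```

With `simulate(100)`, the queue fills within a few cycles. After that, fetch is gated on every other cycle, so the steady-state rate matches the 6-wide decoder. In this model, the surplus fetch width is headroom for refilling the queue. That headroom is what would let the front end hide short branch bubbles.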

Fetch & pre-decoding

With the help of the branch predictor, the instructions should already be found in the level 1 instruction cache. The L1I cache is 64 KiB and 4-way set associative, unchanged from prior generations. It has its own iTLB with 512 entries, double that of the prior generation.

A major change in the M3 is the instruction fetch bandwidth. Previously, up to 24 bytes could be read into the instruction queue each cycle. The M3 reads 48 bytes (up to 12 ARM instructions) per cycle. This lets it hide very short branch bubbles and supply more instructions to the wider decoder.

The instruction queue is more than a simple buffer. It splits the byte stream into its constituent ARM instructions, including handling misaligned instructions such as those in Thumb mode. If the queue fills to capacity, fetch is gated for a cycle to let the queue drain.
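The splitting step can be illustrated for Thumb code. This is a minimal sketch, assuming AArch32 T32 (Thumb) state. Per the ARM Architecture Reference Manual, a halfword whose bits [15:11] are 0b11101, 0b11110 or 0b11111 is the first half of a 32-bit instruction. Any other halfword is a complete 16-bit instruction.

The function name `split_t32` is hypothetical. So is the carry-over of a 32-bit instruction that straddles two fetch blocks. The source does not describe how the M3 hardware implements this.

```python
def split_t32(block: bytes, carry: bytes = b"") -> tuple[list[bytes], bytes]:
    """Split a fetch block of T32 code into 16- and 32-bit instructions.

    `carry` is a leading halfword left over from the previous block (the
    first half of a 32-bit instruction). Returns (instructions, new_carry).
    """
    data = carry + block
    if len(data) % 2:
        raise ValueError("T32 code is a stream of 16-bit halfwords")
    insns: list[bytes] = []
    i = 0
    while i < len(data):
        first = int.from_bytes(data[i:i + 2], "little")
        # Top five bits 0b11101/0b11110/0b11111 mark a 32-bit encoding.
        size = 4 if (first >> 11) in (0b11101, 0b11110, 0b11111) else 2
        if i + size > len(data):
            # The second halfword is in the next fetch block; keep it pending.
            return insns, data[i:]
        insns.append(data[i:i + size])
        i += size
    return insns, b""


# A 16-bit NOP (0xBF00) followed by the first halfword of a 32-bit BL (0xF7FF):
insns, carry = split_t32(b"\x00\xbf\xff\xf7")
# insns == [b"\x00\xbf"], carry == b"\xff\xf7"
insns, carry = split_t32(b"\xfe\xff", carry)
# insns == [b"\xff\xf7\xfe\xff"], carry == b""
```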

Branch Predictor

The M1 has a perceptron branch predictor with a couple of perceptrons, which can handle two branches per cycle. The M3's branch predictor substantially enhances the one found in the M1. Several new weights were added, and existing weights were re-tuned to improve accuracy across various cases. The unit can predict indirect branches, and it also predicts loops and streams when it detects those patterns. Indirect branches received particular attention in the M3. Additionally, the capacity of the micro-BTB was doubled to 128 entries; the micro-BTB caches very small, tight loops and other hot kernels. Although the size of the main BTB was not increased, its average taken-branch latency was reduced by shortening the taken-branch turnaround time in various cases that could be expedited. The capacity of the second-level BTB was also doubled, to 16K entries.
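Samsung does not publish the internals of its perceptron predictor. The following is therefore only the textbook global-history perceptron predictor (Jiménez and Lin, 2001), sketched to show what "weights" means in this context:

- Each branch selects a row of signed weights.
- The prediction is the sign of the dot product of that row with the recent global outcomes.
- Weights are trained on a misprediction or on a low-confidence output.

The table size, history length, weight width and PC hash are illustrative assumptions. The sketch also makes one prediction at a time rather than two per cycle.

```python
class PerceptronPredictor:
    """Textbook global-history perceptron predictor (not Samsung's design)."""

    def __init__(self, entries: int = 256, history_len: int = 16, weight_bits: int = 8):
        self.entries = entries
        self.wmax = (1 << (weight_bits - 1)) - 1
        self.wmin = -(1 << (weight_bits - 1))
        # Training threshold from the original perceptron-predictor paper.
        self.theta = int(1.93 * history_len + 14)
        # Each row: a bias weight followed by one weight per history bit.
        self.weights = [[0] * (history_len + 1) for _ in range(entries)]
        self.history = [1] * history_len  # +1 = taken, -1 = not taken

    def _output(self, pc: int) -> tuple[list[int], int]:
        row = self.weights[(pc >> 2) % self.entries]  # assumed simple PC hash
        y = row[0] + sum(w * h for w, h in zip(row[1:], self.history))
        return row, y

    def predict(self, pc: int) -> bool:
        return self._output(pc)[1] >= 0

    def _clamp(self, w: int) -> int:
        return max(self.wmin, min(self.wmax, w))

    def update(self, pc: int, taken: bool) -> None:
        row, y = self._output(pc)
        t = 1 if taken else -1
        # Train on a misprediction or when |y| is below the confidence threshold.
        if (y >= 0) != taken or abs(y) <= self.theta:
            row[0] = self._clamp(row[0] + t)
            for i, h in enumerate(self.history):
                row[i + 1] = self._clamp(row[i + 1] + t * h)
        # Shift the resolved outcome into the global history (newest first).
        self.history = [t] + self.history[:-1]
```

Fed a branch that alternates between taken and not taken, this predictor learns to predict the opposite of the most recent outcome. It stops mispredicting after a short warm-up.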

In total, Samsung reported a net reduction in average mispredictions per thousand instructions (MPKI) of around 15% over the M2 (shown in the graph on the right).
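MPKI normalizes mispredictions by instruction count, so a "15% reduction" is a ratio of two MPKI values. The small helpers below illustrate this. The counts in the example are made up and are not Samsung's data.

```python
def mpki(mispredictions: int, instructions: int) -> float:
    """Branch mispredictions per thousand instructions."""
    if instructions <= 0:
        raise ValueError("instruction count must be positive")
    return mispredictions * 1000 / instructions


def relative_reduction(old: float, new: float) -> float:
    """Fractional reduction from old to new (0.15 means 15% lower)."""
    return (old - new) / old


# Hypothetical counts over the same 1M-instruction trace:
old = mpki(4_000, 1_000_000)  # 4.0 MPKI
new = mpki(3_400, 1_000_000)  # 3.4 MPKI
print(f"{relative_reduction(old, new):.0%}")  # 15%
```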

The branch predictor feeds a decoupled instruction address queue which in turn feeds the instruction cache.
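The decoupling can be sketched as a bounded FIFO between the predictor and the instruction cache. This is a hypothetical model. The queue depth, one address per cycle on each side, and purely sequential 48-byte fetch blocks are all assumptions. In the model, the predictor keeps enqueueing addresses while the I-cache is stalled on a miss, so fetch resumes with addresses already waiting once the miss resolves.

```python
from collections import deque


def run_decoupled(cycles: int, queue_depth: int, miss_cycles: set[int]) -> list[int]:
    """Return the address-queue occupancy at the end of each cycle.

    The predictor enqueues one fetch-block address per cycle while the queue
    has room. The I-cache dequeues one address per cycle, except on cycles
    in miss_cycles, when it is stalled.
    """
    queue: deque[int] = deque()
    next_addr = 0
    occupancy: list[int] = []
    for cycle in range(cycles):
        # Predictor side: run ahead while there is space in the queue.
        if len(queue) < queue_depth:
            queue.append(next_addr)
            next_addr += 48  # assumed next sequential 48-byte fetch block
        # I-cache side: consume one address unless stalled on a miss.
        if cycle not in miss_cycles and queue:
            queue.popleft()
        occupancy.append(len(queue))
    return occupancy
```

Consider `run_decoupled(10, queue_depth=4, miss_cycles={2, 3, 4, 5, 6})`. The queue fills during the five-cycle miss, and the predictor stops enqueueing once the queue is full. When the miss resolves, the I-cache picks up the queued addresses without waiting on the predictor.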

All M3 Processors

List of M3-based processors (main processor: Model through Turbo; integrated graphics: GPU, GPU frequency). Count: 0

| Model | Family | Launched | Arch | Cores | Frequency | Turbo | GPU | GPU frequency |
|---|---|---|---|---|---|---|---|---|

Bibliography

- Hot Chips 30 Symposium (HCS), 2018

- LLVM: lib/Target/AArch64/AArch64SchedExynosM3.td
