
Cloud AI 100 - Microarchitectures - Qualcomm


| Cloud AI 100 µarch | |
|---|---|
| **General Info** | |
| Arch Type | NPU |
| Designer | Qualcomm |
| Manufacturer | TSMC |
| Introduction | March 2021 |
| Process | 7 nm |
| Processing Elements | 16 |
| **Pipeline** | |
| Type | VLIW |
| Decode | 4-way |
| **Cache** | |
| L2 Cache | 1 MiB/core |
| Side Cache | 8 MiB/core |

Cloud AI 100 is a neural processing unit (NPU) microarchitecture designed by Qualcomm for the server and edge markets. NPUs based on it are sold under the Cloud AI brand.


Process Technology

The Cloud AI 100 SoC is fabricated on TSMC's 7-nanometer process.

Architecture

Key Features

Block Diagram

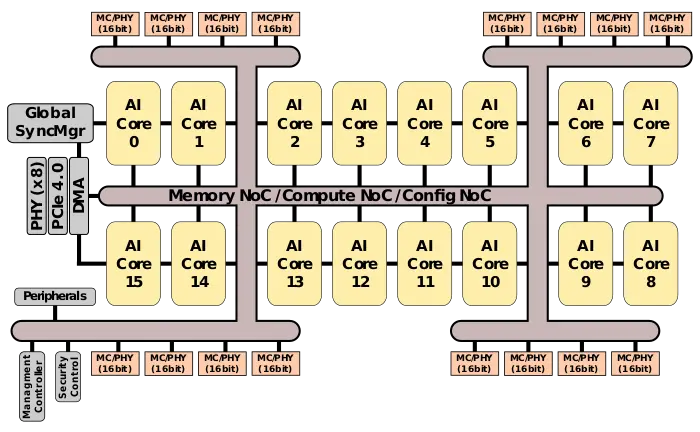

SoC

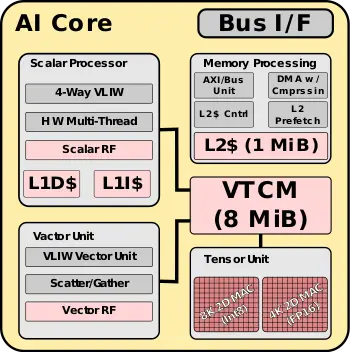

AI Core

Memory Hierarchy

Overview
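The infobox lists 1 MiB of L2 cache and 8 MiB of side cache per AI core, so the aggregate on-chip capacity follows from simple multiplication. The sketch below is a back-of-the-envelope estimate, not a Qualcomm-published formula. It assumes a full configuration with all 16 processing elements enabled, and it treats each core's L2 and side cache as private and additive. Other SoC-level buffers are not modeled. The function name `on_chip_memory_mib` is hypothetical.

```python
# Per-core on-chip memory sizes taken from the infobox, in MiB.
L2_PER_CORE_MIB = 1
SIDE_CACHE_PER_CORE_MIB = 8


def on_chip_memory_mib(cores: int) -> dict[str, int]:
    """Estimate aggregate on-chip memory for a given number of enabled AI cores.

    Hypothetical helper: assumes each core's L2 and side cache are private
    and simply add up; other SoC-level memories are not modeled.
    """
    l2 = cores * L2_PER_CORE_MIB
    side = cores * SIDE_CACHE_PER_CORE_MIB
    return {"l2": l2, "side_cache": side, "total": l2 + side}


if __name__ == "__main__":
    # Assumed full configuration: all 16 AI cores enabled.
    print(on_chip_memory_mib(16))  # {'l2': 16, 'side_cache': 128, 'total': 144}
```

Under these assumptions a 16-core part carries 16 MiB of L2 plus 128 MiB of side cache, or 144 MiB in total. Configurations with fewer enabled cores would scale down linearly.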

AI Core

Performance claims

Bibliography

- Linley Fall Processor Conference, 2021.

- Qualcomm, IEEE Hot Chips 33 Symposium (HCS), 2021.

