From WikiChip

Difference between revisions of "x86/avx-512"

Revision as of 17:58, 9 March 2018

x86

Instruction Set Architecture

Instruction Set Architecture

General

Variants

Topics

- Instructions

- Addressing Modes

- Registers

- Model-Specific Register

- Assembly

- Interrupts

- Micro-Ops

- Timer

- Calling Convention

- Microarchitectures

- CPUID

CPUIDs

Modes

Extensions(all)

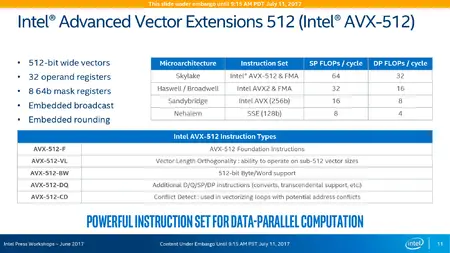

AVX-512 is the collective name for a number of 512-bit SIMD x86 instruction set extensions. The extensions were formally introduced by Intel in July 2013, with the first general-purpose microprocessors implementing them introduced in July 2017.


Overview

AVX-512 is a set of 512-bit SIMD extensions that allow programs to pack sixteen single-precision or eight double-precision floating-point numbers, or eight 64-bit or sixteen 32-bit integers, within 512-bit vectors. Because the vectors are twice as wide as the 256-bit vectors of AVX/AVX2, each instruction can operate on twice as many elements.
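
The element counts quoted above follow directly from dividing the vector width by the element width. The following is a minimal sketch of that arithmetic; the helper name `lanes` is hypothetical and chosen only for illustration.

```python
VECTOR_BITS = 512  # width of one AVX-512 vector register


def lanes(element_bits: int, vector_bits: int = VECTOR_BITS) -> int:
    """Return how many elements of the given width fit in one vector.

    `lanes` is an illustrative helper, not part of any real API.
    """
    if element_bits <= 0 or vector_bits % element_bits != 0:
        raise ValueError("element width must evenly divide the vector width")
    return vector_bits // element_bits


if __name__ == "__main__":
    element_types = [
        ("single-precision float", 32),
        ("double-precision float", 64),
        ("32-bit integer", 32),
        ("64-bit integer", 64),
    ]
    for name, bits in element_types:
        # Compare a 512-bit AVX-512 vector with a 256-bit AVX/AVX2 vector.
        print(f"{name}: {lanes(bits)} per 512-bit vector, "
              f"{lanes(bits, 256)} per 256-bit vector")
```

For every element type the 512-bit count is exactly twice the 256-bit count, which is where the doubling relative to AVX/AVX2 comes from.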

- AVX512F - AVX-512 Foundation is the base of the 512-bit SIMD instruction extensions, providing a comprehensive set of features for most HPC and enterprise applications. AVX-512 Foundation is the natural extension of AVX/AVX2 and is encoded with the EVEX prefix, which builds on the existing VEX prefix. Any processor that implements any portion of the AVX-512 extensions MUST implement AVX512F.

- AVX512CD - AVX-512 Conflict Detection Instructions enable vectorization of loops with possible address conflicts.

- AVX512DQ - AVX-512 Doubleword and Quadword Instructions add new 32-bit and 64-bit AVX-512 instructions (conversion, transcendental support, etc.).

- AVX512PF - AVX-512 Prefetch Instructions add new prefetch instructions for gather/scatter and PREFETCHWT1.

- AVX512ER - AVX-512 Exponential and Reciprocal Instructions (ERI) offer 28-bit precision RCP, RSQRT and EXP transcendentals for various scientific applications.

- AVX512VL - AVX-512 Vector Length Instructions add vector length orthogonality, allowing most AVX-512 operations to also operate on XMM (128-bit SSE) registers and YMM (256-bit AVX) registers.

- AVX512BW - AVX-512 Byte and Word Instructions add support for 8-bit and 16-bit integer operations.

- AVX512IFMA - AVX-512 Integer Fused Multiply-Add Instructions add support for fused multiply-add of integers using 52-bit precision.

- AVX512VBMI - AVX-512 Vector Byte Manipulation Instructions, Version 1, add additional vector byte permutation instructions.

- AVX512VBMI2 - AVX-512 Vector Byte Manipulation Instructions, Version 2, add byte and word compress/expand instructions and concatenate-and-shift instructions.

- AVX512VAES - AVX-512 Vector AES Instructions add vector AES operations.

- AVX512BITALG - AVX-512 Bit Algorithms Instructions add bit-algorithm operations.

- AVX5124FMAPS - AVX-512 Fused Multiply Accumulation Packed Single Precision Instructions add vector instructions for deep learning on single-precision floating-point values.

- AVX512VPCLMULQDQ - AVX-512 Vector Carry-less Multiply Instructions add vector carry-less multiply operations.

- AVX512GFNI - AVX-512 Galois Field New Instructions add Galois field transformation instructions.

- AVX512VNNI - AVX-512 Vector Neural Network Instructions add vector instructions for deep learning.

- AVX5124VNNIW - AVX-512 Vector Neural Network Instructions Word Variable Precision Instructions add vector instructions for deep learning on enhanced word variable precision.

- AVX512VPOPCNTDQ - AVX-512 Vector Population Count Doubleword and Quadword Instructions add doubleword and quadword population count instructions.

Note that:

- Formerly, the term AVX3.1 referred to F + CD + ER + PF.

- Formerly, the term AVX3.2 referred to F + CD + BW + DQ + VL.
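
The two rules stated in this section (AVX512F is mandatory for any AVX-512 implementation, and the legacy AVX3.1/AVX3.2 names denote fixed groups of extensions) can be expressed as simple set checks. The sketch below is illustrative only; the function names and the short extension labels are assumptions made for this example.

```python
# Legacy group names, using the short labels from the note above.
AVX3_1 = frozenset({"F", "CD", "ER", "PF"})
AVX3_2 = frozenset({"F", "CD", "BW", "DQ", "VL"})


def is_valid_feature_set(features) -> bool:
    """Any processor implementing part of AVX-512 must implement F."""
    features = set(features)
    return not features or "F" in features


def legacy_names(features) -> list:
    """Return the legacy group names whose extensions are all present."""
    features = set(features)
    names = []
    if AVX3_1 <= features:
        names.append("AVX3.1")
    if AVX3_2 <= features:
        names.append("AVX3.2")
    return names


if __name__ == "__main__":
    print(is_valid_feature_set({"CD", "BW"}))          # lacks F
    print(legacy_names({"F", "CD", "BW", "DQ", "VL"}))
```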

Detection

| CPUID Input | CPUID Output | Instruction Set |
|---|---|---|
| EAX=07H, ECX=0 | EBX[bit 16] | AVX512F |
| EAX=07H, ECX=0 | EBX[bit 17] | AVX512DQ |
| EAX=07H, ECX=0 | EBX[bit 21] | AVX512IFMA |
| EAX=07H, ECX=0 | EBX[bit 26] | AVX512PF |
| EAX=07H, ECX=0 | EBX[bit 27] | AVX512ER |
| EAX=07H, ECX=0 | EBX[bit 28] | AVX512CD |
| EAX=07H, ECX=0 | EBX[bit 30] | AVX512BW |
| EAX=07H, ECX=0 | EBX[bit 31] | AVX512VL |
| EAX=07H, ECX=0 | ECX[bit 01] | AVX512VBMI |
| EAX=07H, ECX=0 | ECX[bit 11] | AVX512VNNI |
| EAX=07H, ECX=0 | ECX[bit 12] | AVX512BITALG |
| EAX=07H, ECX=0 | ECX[bit 14] | AVX512VPOPCNTDQ |
| EAX=07H, ECX=0 | EDX[bit 02] | AVX5124VNNIW |
| EAX=07H, ECX=0 | EDX[bit 03] | AVX5124FMAPS |
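
As an illustration of how the table is used, the sketch below decodes the EBX, ECX and EDX values returned by CPUID with EAX=07H, ECX=0 into a set of extension names. It assumes the register values have already been obtained by some other means (Python has no built-in CPUID access), and the names `LEAF7_BITS` and `decode_leaf7` are hypothetical. Only the bits listed in the table are covered.

```python
# (register, bit position) -> extension, copied from the detection table.
LEAF7_BITS = {
    ("EBX", 16): "AVX512F",
    ("EBX", 17): "AVX512DQ",
    ("EBX", 21): "AVX512IFMA",
    ("EBX", 26): "AVX512PF",
    ("EBX", 27): "AVX512ER",
    ("EBX", 28): "AVX512CD",
    ("EBX", 30): "AVX512BW",
    ("EBX", 31): "AVX512VL",
    ("ECX", 1): "AVX512VBMI",
    ("ECX", 11): "AVX512VNNI",
    ("ECX", 12): "AVX512BITALG",
    ("ECX", 14): "AVX512VPOPCNTDQ",
    ("EDX", 2): "AVX5124VNNIW",
    ("EDX", 3): "AVX5124FMAPS",
}


def decode_leaf7(ebx: int, ecx: int, edx: int) -> set:
    """Return the AVX-512 extensions whose CPUID feature bits are set.

    The arguments are the raw 32-bit outputs of CPUID (EAX=07H, ECX=0).
    """
    regs = {"EBX": ebx, "ECX": ecx, "EDX": edx}
    return {
        name
        for (reg, bit), name in LEAF7_BITS.items()
        if (regs[reg] >> bit) & 1
    }


if __name__ == "__main__":
    # Made-up EBX value with bits 16, 17, 28, 30 and 31 set.
    ebx = (1 << 16) | (1 << 17) | (1 << 28) | (1 << 30) | (1 << 31)
    print(sorted(decode_leaf7(ebx, 0, 0)))
```

This only interprets CPUID bits. Real detection code also has to confirm that the operating system has enabled the AVX-512 register state, which is outside the scope of this sketch.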

Implementation

| Designer | Microarchitecture | F | CD | ER | PF | BW | DQ | VL | IFMA | VBMI | 4FMAPS | 4VNNIW | VPOPCNTDQ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Intel | Knights Landing | ✔ | ✔ | ✔ | ✔ | ✘ | ✘ | ✘ | ✘ | ✘ | ✘ | ✘ | ✘ |
| Intel | Knights Mill | ✔ | ✔ | ✔ | ✔ | ✘ | ✘ | ✘ | ✘ | ✘ | ✔ | ✔ | ✔ |
| Intel | Skylake | ✔ | ✔ | ✘ | ✘ | ✔ | ✔ | ✔ | ✘ | ✘ | ✘ | ✘ | ✘ |
| Intel | Cannon Lake | ✔ | ✔ | ✘ | ✘ | ✔ | ✔ | ✔ | ✔ | ✔ | ✘ | ✘ | ✘ |
| Intel | Ice Lake | ✔ | ✔ | ✘ | ✘ | ✔ | ✔ | ✔ | ✔ | ✔ | ✘ | ✘ | ✘ |
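
A support matrix like the one above is often consulted to find the subset of extensions that code can rely on across several targets. The following sketch transcribes the table into a dictionary and answers two such questions; the data reflects this revision of the table only, and the names `SUPPORT`, `common_extensions` and `supporting` are hypothetical.

```python
# Support levels transcribed from the table above (checked cells only).
SUPPORT = {
    "Knights Landing": {"F", "CD", "ER", "PF"},
    "Knights Mill": {"F", "CD", "ER", "PF", "4FMAPS", "4VNNIW", "VPOPCNTDQ"},
    "Skylake": {"F", "CD", "BW", "DQ", "VL"},
    "Cannon Lake": {"F", "CD", "BW", "DQ", "VL", "IFMA", "VBMI"},
    "Ice Lake": {"F", "CD", "BW", "DQ", "VL", "IFMA", "VBMI"},
}


def common_extensions(*uarchs: str) -> set:
    """Extensions supported by every listed microarchitecture.

    At least one microarchitecture name must be given.
    """
    return set.intersection(*(SUPPORT[u] for u in uarchs))


def supporting(extension: str) -> list:
    """Microarchitectures (in table order) that support the extension."""
    return [u for u, exts in SUPPORT.items() if extension in exts]


if __name__ == "__main__":
    print(sorted(common_extensions("Knights Landing", "Skylake")))
    print(supporting("IFMA"))
```

According to the table, code meant to run on both the Knights and the Skylake-derived lines can rely only on F and CD.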

Documents

- Intel® Advanced Vector Extensions 2015/2016; GNU Tools Cauldron 2014; Presented by Kirill Yukhin of Intel, July 2014