From WikiChip

Difference between revisions of "t-head/hanguang 800"

| Line 3: | Line 3: | ||

|name=Hanguang 800 | |name=Hanguang 800 | ||

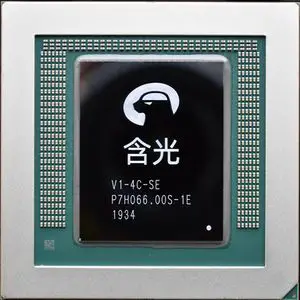

|image=hanguang800.jpg | |image=hanguang800.jpg | ||

| − | |designer= | + | |designer=T-Head |

|manufacturer=TSMC | |manufacturer=TSMC | ||

|market=Server | |market=Server | ||

Revision as of 23:23, 25 September 2019

| Hanguang 800 | |

| General Info | |

| Designer | T-Head |

| Manufacturer | TSMC |

| Market | Server, Artificial Intelligence |

| Introduction | September 26, 2019 (announced); September 26, 2019 (launched) |

| General Specs | |

| Family | Hanguang |

| Microarchitecture | |

| Process | 12 nm |

| Transistors | 17,000,000,000 |

| Technology | CMOS |

Hanguang 800 is a neural processor designed by Alibaba for inference acceleration in its data centers. Introduced in September 2019, the Hanguang 800 is fabricated on a 12 nm process. The chip is used exclusively by Alibaba in its Aliyun infrastructure.

Facts about "Hanguang 800 - T-Head"

| designer | T-Head |

| family | Hanguang |

| first announced | September 26, 2019 |

| first launched | September 26, 2019 |

| full page name | t-head/hanguang 800 |

| instance of | microprocessor |

| ldate | September 26, 2019 |

| main image | |

| manufacturer | TSMC |

| market segment | Server and Artificial Intelligence |

| name | Hanguang 800 |

| process | 12 nm (0.012 μm, 1.2e-5 mm) |

| technology | CMOS |

| transistor count | 17,000,000,000 |