
== Documents ==

* [[:File:ornl summit node infographic.pdf|Summit Compute Node Infographic]]

[[category:supercomputers]]

Revision as of 19:12, 12 June 2018

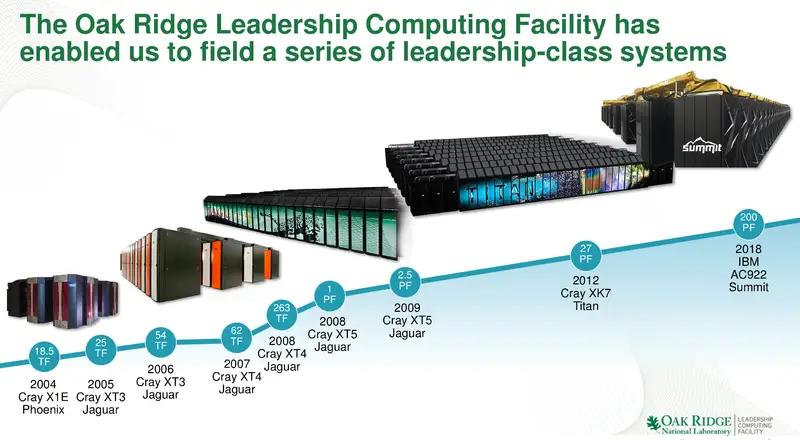

Summit (OLCF-4) is a 200-petaFLOPS supercomputer operated by the DoE Oak Ridge National Laboratory. Summit was officially unveiled on June 8, 2018 as the fastest supercomputer in the world, overtaking Sunway TaihuLight.


History

Summit is one of three systems procured under the Collaboration of Oak Ridge, Argonne, and Lawrence Livermore Labs (CORAL) program. Research and planning started in 2012, with initial system deliveries arriving in late 2017. The full system arrived in early 2018 and was officially unveiled on June 8, 2018. Summit is estimated to have cost around $200 million.

Overview

Summit was designed to deliver a 5-10x performance improvement over Titan on real large-scale science workloads. Compared to Titan, which had 18,688 nodes (AMD Opteron + Nvidia Kepler) and consumed 9 MW, Summit raised power consumption to 13 MW and cut the node count to only 4,608, yet increased peak theoretical performance more than eightfold, from 27 petaFLOPS to around 225 petaFLOPS.
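The comparison with Titan can be made concrete with a few ratios. The sketch below uses only the figures quoted in the paragraph above; the dictionary layout and the `ratios` helper are illustrative names, not part of any ORNL tooling.

```python
# Figures quoted in the Overview paragraph (peak theoretical values).
titan = {"nodes": 18_688, "power_mw": 9, "peak_pf": 27}
summit = {"nodes": 4_608, "power_mw": 13, "peak_pf": 225}


def ratios(old, new):
    """Return new/old ratios for peak performance, power, and node count."""
    return {
        "peak": new["peak_pf"] / old["peak_pf"],
        "power": new["power_mw"] / old["power_mw"],
        "nodes": new["nodes"] / old["nodes"],
    }


r = ratios(titan, summit)
perf_per_watt_gain = r["peak"] / r["power"]   # how much more peak FLOPS per MW
perf_per_node_gain = r["peak"] / r["nodes"]   # how much more peak FLOPS per node

print(f"peak performance: {r['peak']:.2f}x")
print(f"power:            {r['power']:.2f}x")
print(f"peak per watt:    {perf_per_watt_gain:.2f}x")
print(f"peak per node:    {perf_per_node_gain:.1f}x")
```

On these numbers, peak performance grows by roughly 8.3x while power grows by about 1.4x, so most of the gain comes from much denser nodes rather than from a larger machine.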

Summit has over 200 petaFLOPS of theoretical compute power and over 3 exaFLOPS for AI workloads.

| Components | | | System | | | |
|---|---|---|---|---|---|---|
| Processor | CPU | GPU | Rack | Compute Racks | Storage Racks | Switch Racks |
| Type | POWER9 | V100 | Type | AC922 | SSC (4 ESS GL4) | Mellanox IB EDR |
| Count | 9,216 (2 × 18 × 256) | 27,648 (6 × 18 × 256) | Count | 256 racks × 18 nodes | 40 racks × 8 servers | 18 racks |
| Peak FLOPS | 9.96 PF | 215.7 PF | Power | 59 kW | 38 kW | |
| Peak AI FLOPS | | 3.456 EF | Power (total system) | 13 MW | | |
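The system-level figures in the table follow from the rack and node counts multiplied by per-processor peaks. The sketch below reproduces them using the per-processor values listed in the node capabilities table later in this article. Note that the 9.96 PF CPU figure matches the single-precision POWER9 value, whereas the 215.7 PF GPU figure matches the double-precision V100 value; which precision was intended for the CPU entry is not stated, so the code simply shows the arithmetic.

```python
RACKS = 256
NODES_PER_RACK = 18
CPUS_PER_NODE = 2
GPUS_PER_NODE = 6

# Per-processor peaks in TFLOPS, taken from the node capabilities table.
CPU_SP_TFLOPS = 1.081
GPU_DP_TFLOPS = 7.8
GPU_AI_TFLOPS = 125

nodes = RACKS * NODES_PER_RACK
cpus = nodes * CPUS_PER_NODE
gpus = nodes * GPUS_PER_NODE

cpu_sp_pf = cpus * CPU_SP_TFLOPS / 1_000       # TFLOPS -> PFLOPS
gpu_dp_pf = gpus * GPU_DP_TFLOPS / 1_000       # TFLOPS -> PFLOPS
gpu_ai_ef = gpus * GPU_AI_TFLOPS / 1_000_000   # TFLOPS -> EFLOPS

print(f"nodes={nodes}, CPUs={cpus}, GPUs={gpus}")
print(f"CPU peak (SP): {cpu_sp_pf:.2f} PF")
print(f"GPU peak (DP): {gpu_dp_pf:.1f} PF")
print(f"GPU peak (AI): {gpu_ai_ef:.3f} EF")
```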

Summit has over 10 petabytes of memory.

| Summit Total Memory | | | |
|---|---|---|---|
| Type | DDR4 | HBM2 | NVMe |
| Node | 512 GiB | 96 GiB | 1.6 TB |
| Summit | 2.53 PB | 475 TB | 7.37 PB |
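The system totals depend on whether binary (GiB, TiB, PiB) or decimal (GB, TB, PB) units are used. The sketch below computes both from the per-node capacities, assuming 4,608 nodes and 1.6 TB of NVMe per node (the per-node value implied by the 7.37 PB system total). The variable names are illustrative.

```python
NODES = 4_608
GIB = 2**30

# Per-node capacity in bytes. DDR4 and HBM2 are specified in binary units,
# NVMe in decimal units (assumed 1.6 TB per node).
per_node_bytes = {
    "DDR4": 512 * GIB,
    "HBM2": 96 * GIB,
    "NVMe": 1_600_000_000_000,
}

totals = {kind: NODES * size for kind, size in per_node_bytes.items()}

for kind, size in totals.items():
    print(f"{kind}: {size / 1e15:.3f} PB = {size / 2**50:.3f} PiB")

grand_total_pb = sum(totals.values()) / 1e15
print(f"total: {grand_total_pb:.2f} PB")
```

Expressed in decimal units the DDR4 total is about 2.53 PB (2.25 PiB) and the HBM2 total is about 475 TB (432 TiB), which together with the NVMe capacity gives the "over 10 petabytes" quoted above.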

Architecture

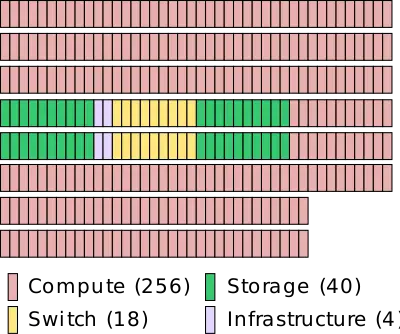

System

Weighing over 340 tons, Summit takes up 5,600 sq. ft. of floor space at Oak Ridge National Laboratory. Summit consists of 256 compute racks, 40 storage racks, 18 switching director racks, and 4 infrastructure racks. Servers are linked via Mellanox IB EDR interconnect in a three-level non-blocking fat-tree topology.

Compute Rack

Each of Summit's 256 Compute Racks consists of 18 Compute Nodes along with Mellanox IB EDR switching for a non-blocking fat-tree interconnect topology (in practice, it appears to be a pruned three-level fat tree). With 18 nodes, each rack has 9 TiB of DDR4 memory and another 1.7 TiB of HBM2 memory, for a total of 10.7 TiB of memory. A rack has a maximum power draw of 59 kW and a total of 864 TF/s of peak compute power (ORNL reports 775 TF/s).
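The per-rack figures can be checked from the per-node values. This is a sketch, assuming the per-node memory from the memory table and the double-precision per-processor peaks from the node capabilities table; the exact per-device figures behind the 864 TF/s and 775 TF/s numbers are not given in this article, so the computed peak only lands close to the quoted value.

```python
NODES_PER_RACK = 18

# Memory per node, in GiB.
DDR4_GIB_PER_NODE = 512
HBM2_GIB_PER_NODE = 96

ddr4_tib = NODES_PER_RACK * DDR4_GIB_PER_NODE / 1024
hbm2_tib = NODES_PER_RACK * HBM2_GIB_PER_NODE / 1024
total_tib = ddr4_tib + hbm2_tib

# Double-precision peak per device, in TFLOPS (from the node capabilities table).
CPUS_PER_NODE, CPU_DP_TFLOPS = 2, 0.5403
GPUS_PER_NODE, GPU_DP_TFLOPS = 6, 7.8

node_tflops = CPUS_PER_NODE * CPU_DP_TFLOPS + GPUS_PER_NODE * GPU_DP_TFLOPS
rack_tflops = NODES_PER_RACK * node_tflops

print(f"DDR4: {ddr4_tib} TiB, HBM2: {hbm2_tib} TiB, total: {total_tib} TiB")
print(f"rack peak (DP): {rack_tflops:.1f} TFLOPS")
```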

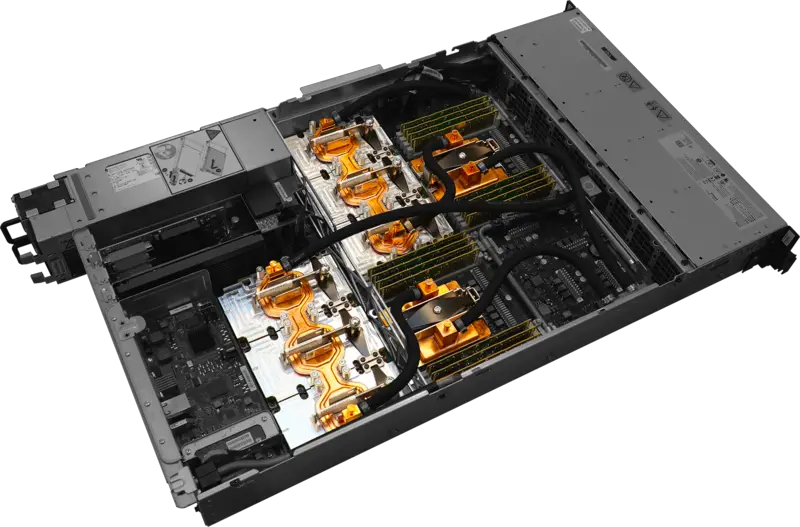

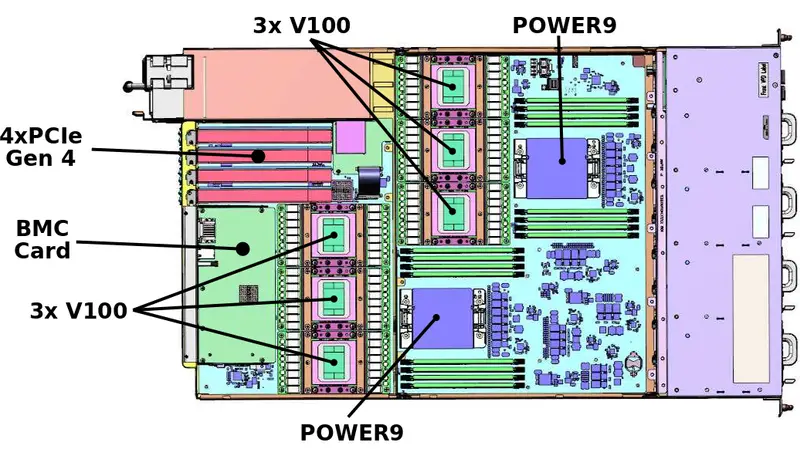

Compute Node

The basic compute node is the Power Systems AC922 (Accelerated Computing), formerly codenamed Witherspoon. The AC922 comes in a 19-inch 2U rack-mount case.

Each node has two 2,200 W power supplies, four PCIe Gen 4 slots, and a BMC card. There are two 22-core POWER9 processors per node, each with eight DIMMs. For the Summit supercomputer, each socket is populated with eight 32 GiB DDR4-2666 DIMMs, for a total of 256 GiB and 170.7 GB/s of aggregate memory bandwidth per socket. There are three V100 GPUs per POWER9 socket. They use the SXM2 form factor and each comes with 16 GiB of HBM2 memory, for a total of 48 GiB of HBM2 and 2.7 TB/s of aggregate bandwidth per socket.
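The per-socket bandwidth figures follow from the DIMM and GPU counts. The sketch below assumes one DIMM per memory channel, the standard 64-bit (8-byte) DDR4 data bus, and the nominal DDR4-2666 transfer rate of 2,666.67 MT/s; the 900 GB/s per-GPU HBM2 figure is taken from the node capabilities table.

```python
# DDR4-2666: nominal transfer rate and bus width (assumptions stated above).
TRANSFERS_PER_SECOND = 2666.67e6
BYTES_PER_TRANSFER = 8          # 64-bit data bus
DIMMS_PER_SOCKET = 8            # assumed one DIMM per channel

ddr4_gbs_per_dimm = TRANSFERS_PER_SECOND * BYTES_PER_TRANSFER / 1e9
ddr4_gbs_per_socket = DIMMS_PER_SOCKET * ddr4_gbs_per_dimm

# HBM2: per-GPU bandwidth as listed in the node capabilities table.
GPUS_PER_SOCKET = 3
HBM2_GBS_PER_GPU = 900

hbm2_tbs_per_socket = GPUS_PER_SOCKET * HBM2_GBS_PER_GPU / 1000

print(f"DDR4 per DIMM:   {ddr4_gbs_per_dimm:.2f} GB/s")
print(f"DDR4 per socket: {ddr4_gbs_per_socket:.1f} GB/s")
print(f"HBM2 per socket: {hbm2_tbs_per_socket} TB/s")
```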

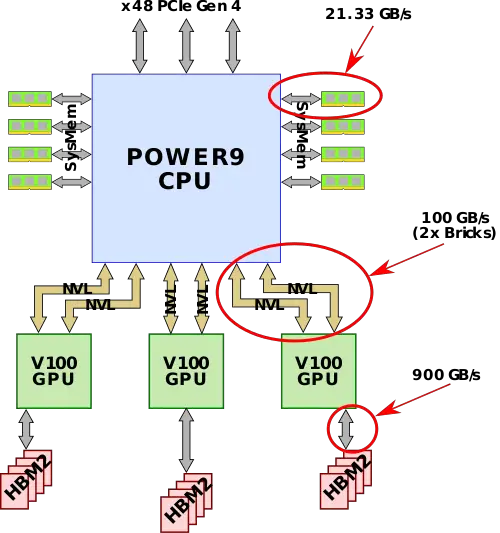

Socket

Since IBM POWER9 processors have native on-die NVLink connectivity, the GPUs are connected directly to the CPUs. The POWER9 processor has six NVLink 2.0 Bricks, which are divided into three groups of two Bricks, one group per GPU. Since NVLink 2.0 has raised the signaling rate to 25 GT/s, two Bricks allow for 100 GB/s of bandwidth between the CPU and a GPU. In addition, there are 48 PCIe Gen 4 lanes for I/O.

Each Volta GPU has six NVLink 2.0 Bricks, which are divided into three groups of two. One group connects to the CPU, while the other two groups connect the GPU to each of the other two GPUs on the socket, so every GPU is linked to every other GPU. As with the GPU-CPU link, the aggregate bandwidth between two GPUs is also 100 GB/s.
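The 100 GB/s figure and the Brick allocation can be checked with a small model of one socket. This is a sketch under one assumption not stated above: that each Brick carries 8 lanes in each direction, so the 100 GB/s is a bidirectional total. The device names are illustrative.

```python
from itertools import combinations

LANES_PER_DIRECTION = 8   # assumption: lanes per Brick, per direction
GBITS_PER_LANE = 25       # 25 GT/s signaling, one bit per transfer
BRICKS_PER_LINK = 2       # Bricks are grouped in pairs
BRICKS_PER_DEVICE = 6     # six Bricks on the POWER9 and on each V100

# Bandwidth of one Brick, then of a two-Brick link.
brick_gbs_one_way = LANES_PER_DIRECTION * GBITS_PER_LANE / 8   # bits -> bytes
brick_gbs_both_ways = 2 * brick_gbs_one_way
link_gbs = BRICKS_PER_LINK * brick_gbs_both_ways

# One socket: a CPU and three GPUs, every pair connected by one link.
devices = ["CPU", "GPU0", "GPU1", "GPU2"]
links = list(combinations(devices, 2))

# Count how many Bricks each device spends on its links.
bricks_used = {
    dev: sum(BRICKS_PER_LINK for link in links if dev in link)
    for dev in devices
}

print(f"per-link bandwidth: {link_gbs:.0f} GB/s")
print(f"links per socket:   {len(links)}")
print(f"bricks used:        {bricks_used}")
```

With three peers per device and two Bricks per link, every device uses exactly its six Bricks, which is why the socket can be fully connected without a switch.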

| Full Node Capabilities | | |
|---|---|---|
| Processor | POWER9 | V100 |
| Count | 1 | 3 |
| FLOPS (SP) | 1.081 TFLOPS (22 × 49.12 GFLOPS) | 47.1 TFLOPS (3 × 15.7 TFLOPS) |
| FLOPS (DP) | 540.3 GFLOPS (22 × 24.56 GFLOPS) | 23.4 TFLOPS (3 × 7.8 TFLOPS) |
| AI FLOPS | - | 375 TFLOPS (3 × 125 TFLOPS) |
| Memory | 256 GiB (DDR4) (8 × 32 GiB) | 48 GiB (HBM2) (3 × 16 GiB) |
| Bandwidth | 170.7 GB/s (8 × 21.33 GB/s) | 900 GB/s/GPU |
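The counts in this table (one POWER9, three V100s) correspond to a single socket. The sketch below scales those values to a whole node, assuming two identical sockets per node as described in the Compute Node section; the dictionary keys are illustrative names.

```python
SOCKETS_PER_NODE = 2

# Per-socket values from the table above.
socket = {
    "cpu_dp_tflops": 0.5403,   # 22 cores x 24.56 GFLOPS
    "gpu_dp_tflops": 3 * 7.8,
    "gpu_ai_tflops": 3 * 125,
    "ddr4_gib": 8 * 32,
    "hbm2_gib": 3 * 16,
}

# A node is two sockets, so every quantity doubles.
node = {key: SOCKETS_PER_NODE * value for key, value in socket.items()}
node_dp_tflops = node["cpu_dp_tflops"] + node["gpu_dp_tflops"]

print(f"node DP peak: {node_dp_tflops:.2f} TFLOPS")
print(f"node AI peak: {node['gpu_ai_tflops']} TFLOPS")
print(f"node memory:  {node['ddr4_gib']} GiB DDR4 + {node['hbm2_gib']} GiB HBM2")
```

The resulting 512 GiB of DDR4 and 96 GiB of HBM2 per node agree with the per-node row of the Summit Total Memory table.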