
Revision as of 13:29, 14 April 2016

Haswell µarch

Haswell (HSW) is Intel's microarchitecture based on the 22 nm process for mobile, desktop, and server products. Introduced in 2013, Haswell succeeded Ivy Bridge. It is named after Haswell, Colorado. It was originally named Molalla, after Molalla, Oregon, but was renamed because that name was difficult to pronounce.


Codenames

| Core | Abbrev | Target |
|---|---|---|
| Haswell DT | HSW-DT | Desktops |
| Haswell MB | HSW-MB | Mobile/Laptops |
| Haswell H | HSW-H | All-in-ones |
| Haswell ULT | HSW-ULT | Ultrabooks (MCPs) |
| Haswell ULX | HSW-ULX | Tablets/Ultrabooks (SoCs) |
| Haswell EP | HSW-EP | Xeon chips |
| Haswell EX | HSW-EX | Xeon chips, QP |
| Haswell E | HSW-E | High-End Desktops (HEDT) |

Architecture

Haswell shares much with its predecessor Ivy Bridge, but it introduces many new enhancements and features. It is Intel's first desktop x86 line designed around a system-on-chip (SoC) architecture. This is a significant move because market segments have different demands: high-end desktops ship with a higher-end GPU, while servers do not need one at all.

Key changes from Ivy Bridge

New instructions

- Main article: See #add_instructions for the complete list

Haswell introduced a number of new instructions:

- AVX2: Advanced Vector Extensions 2; extends most integer SIMD instructions to 256-bit vectors.
  - Vector gather support
  - Any-to-any permutes
  - Vector-vector shifts
- BMI1: Bit Manipulation Instruction Set 1
- BMI2: Bit Manipulation Instruction Set 2
- MOVBE: Move Big-Endian instruction
- FMA: Fused Multiply-Add (floating-point multiply-accumulate)
- TSX: Transactional Synchronization Extensions
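The bullets above name the new instructions without saying what they compute. The sketch below gives scalar Python reference models for two BMI2 instructions (PEXT, PDEP) and an unmasked AVX2-style gather. It describes results only, not how Haswell implements them. The function names are hypothetical, and the masking and fault behavior of the real VPGATHER instructions is deliberately omitted.

```python
# Scalar reference models of what a few Haswell additions compute.
# They describe results only, not the hardware implementation.

def pext(src: int, mask: int, width: int = 64) -> int:
    """BMI2 PEXT: pack the bits of src selected by mask into the low bits."""
    result, out_bit = 0, 0
    for bit in range(width):
        if (mask >> bit) & 1:
            if (src >> bit) & 1:
                result |= 1 << out_bit
            out_bit += 1
    return result


def pdep(src: int, mask: int, width: int = 64) -> int:
    """BMI2 PDEP: scatter the low bits of src to the positions set in mask."""
    result, in_bit = 0, 0
    for bit in range(width):
        if (mask >> bit) & 1:
            if (src >> in_bit) & 1:
                result |= 1 << bit
            in_bit += 1
    return result


def gather(table: list[int], indices: list[int]) -> list[int]:
    """AVX2-style gather without a mask: lane i loads table[indices[i]]."""
    return [table[i] for i in indices]


print(bin(pext(0b1011_0110, 0b1111_0000)))  # upper nibble packed down: 0b1011
print(bin(pdep(0b1011, 0b1111_0000)))       # deposited back: 0b10110000
print(gather([10, 20, 30, 40, 50], [4, 0, 2, 2]))  # [50, 10, 30, 30]
```

PDEP undoes PEXT for the selected bits: `pdep(pext(x, m), m) == x & m`.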

Block Diagram

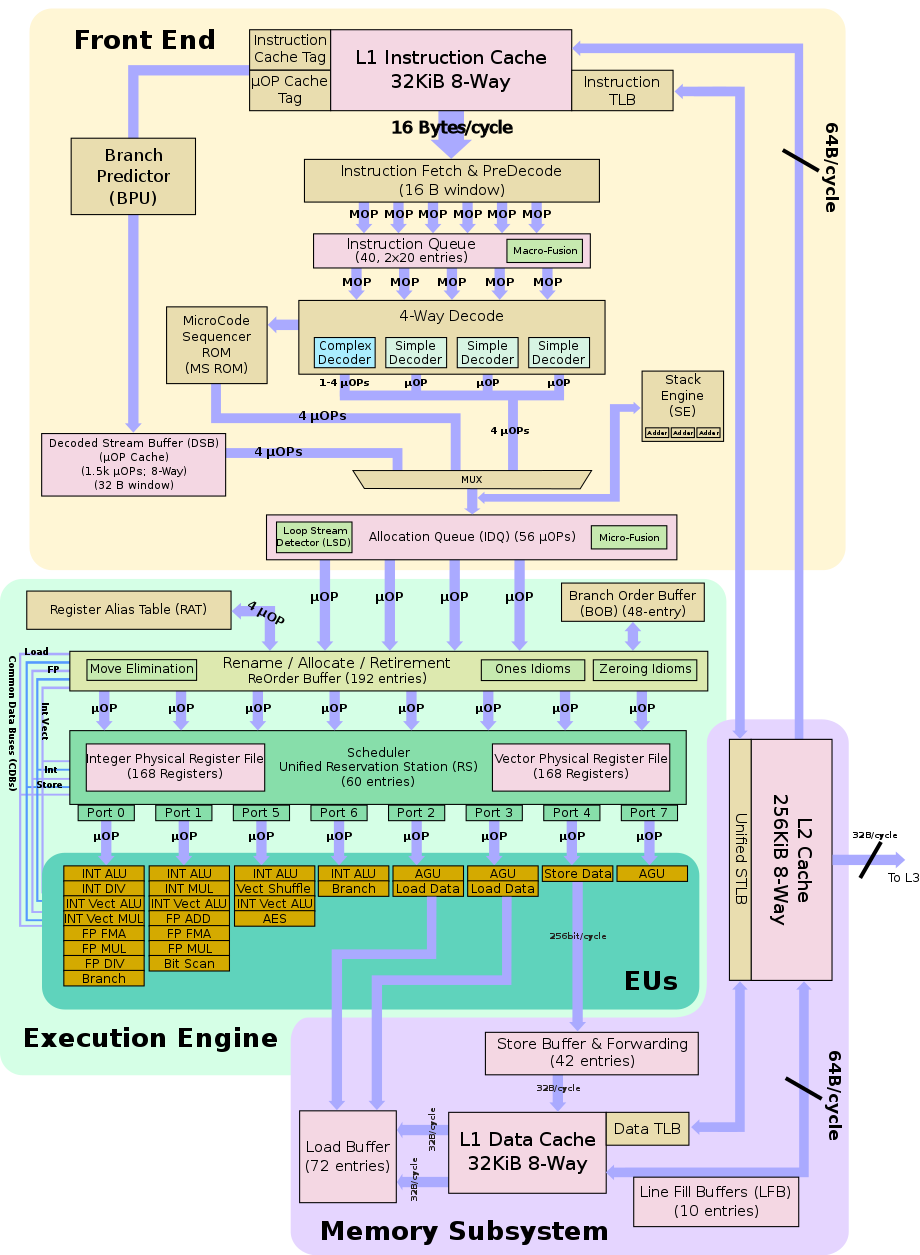

Because the Ivy Bridge front end was so successful, very few changes were made to it in Haswell.

Memory Hierarchy

Haswell's memory hierarchy brings a number of changes over its predecessor. Cache bandwidth has been doubled for both loads and stores: 64 B/cycle for loads and 32 B/cycle for stores, up from 32 B/cycle and 16 B/cycle respectively. Significant enhancements were made to support the new gather instructions and transactional memory. The new port 7 adds a dedicated address-generation unit for stores, so up to two loads and one store can be performed each cycle.
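The doubled bandwidth figures follow directly from the number of memory operations per cycle and their width. The arithmetic sketch below assumes every load and store moves one full 256-bit (32-byte) vector on Haswell and 16 bytes on Ivy Bridge. The 16-byte width is inferred from the 32/16 figures above. The 3.5 GHz clock is a hypothetical value chosen only to convert bytes/cycle into GB/s.

```python
# Per-cycle L1D bandwidth implied by the port counts, assuming every load
# and store moves one full vector of `width` bytes.
HASWELL_VECTOR_BYTES = 32   # one 256-bit AVX/AVX2 register
LOADS_PER_CYCLE = 2         # two load ports (ports 2 and 3)
STORES_PER_CYCLE = 1        # one store per cycle (store data on port 4)


def l1d_bytes_per_cycle(loads: int, stores: int, width: int) -> tuple[int, int]:
    """Return (load bytes/cycle, store bytes/cycle)."""
    return loads * width, stores * width


def peak_gb_per_s(bytes_per_cycle: int, clock_ghz: float) -> float:
    """bytes/cycle x 10^9 cycles/s = GB/s (decimal) at the given clock."""
    return bytes_per_cycle * clock_ghz


haswell = l1d_bytes_per_cycle(LOADS_PER_CYCLE, STORES_PER_CYCLE, HASWELL_VECTOR_BYTES)
ivy_bridge = l1d_bytes_per_cycle(LOADS_PER_CYCLE, STORES_PER_CYCLE, 16)  # assumed 16-byte paths

print(haswell, ivy_bridge)              # (64, 32) (32, 16)
print(peak_gb_per_s(haswell[0], 3.5))   # hypothetical 3.5 GHz clock: 224.0
```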

- Cache
  - L1I Cache:
    - 32 KB 8-way set associative
    - 64 B line size
    - shared by the two threads, per core
  - L1D Cache:
    - 32 KB 8-way set associative
    - 64 B line size
    - shared by the two threads, per core
    - 4 cycles for fastest load-to-use
    - 64 Bytes/cycle load bandwidth
    - 32 Bytes/cycle store bandwidth
  - L2 Cache:
    - unified, 256 KB 8-way set associative
    - 11 cycles for fastest load-to-use
    - 64 B/cycle bandwidth to L1$
  - L3 Cache:
    - 1.5 MB
    - Per core
  - L4 Cache:
    - 128 MB
    - Per package
    - Only on select Intel integrated-GPU models
  - TLBs:
    - ITLB
      - 4KB page translations:
        - 128 entries; 4-way associative
        - fixed partition; divided between the two threads
      - 2MB/4MB page translations:
        - 8 entries; fully associative
        - Duplicated for each thread
    - DTLB
      - 4KB page translations:
        - 64 entries; 4-way associative
        - fixed partition; divided between the two threads
      - 2MB/4MB page translations:
        - 32 entries; 4-way associative
      - 1G page translations:
        - 4 entries; 4-way associative
    - STLB
      - 4KB+2M page translations:
        - 1024 entries; 8-way associative
        - shared
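The sizes and associativities listed above determine how an address selects a cache set, and the TLB entry counts determine how much memory the TLBs can map. The sketch below derives both for the L1D, the L2 and the TLBs. It assumes the 64 B line size applies to every cache level. The names `CacheGeometry` and `tlb_reach` are hypothetical, and the model ignores hashing, replacement policy and the real indexing details. One thing it does show: the L1D's 6 offset bits plus 6 index bits fit inside the 12-bit offset of a 4 KB page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheGeometry:
    """Set-associative cache shape; line size assumed to be 64 bytes."""
    size_bytes: int
    ways: int
    line_bytes: int = 64

    @property
    def sets(self) -> int:
        return self.size_bytes // (self.ways * self.line_bytes)

    @property
    def offset_bits(self) -> int:
        return (self.line_bytes - 1).bit_length()

    @property
    def index_bits(self) -> int:
        return (self.sets - 1).bit_length()

    def split(self, addr: int) -> tuple[int, int, int]:
        """Return (tag, set index, byte offset within the line)."""
        offset = addr & (self.line_bytes - 1)
        index = (addr >> self.offset_bits) & (self.sets - 1)
        tag = addr >> (self.offset_bits + self.index_bits)
        return tag, index, offset


def tlb_reach(entries: int, page_bytes: int) -> int:
    """Bytes of memory covered when every TLB entry maps one page."""
    return entries * page_bytes


L1D = CacheGeometry(32 * 1024, ways=8)
L2 = CacheGeometry(256 * 1024, ways=8)

print(L1D.sets, L1D.offset_bits + L1D.index_bits)   # 64 sets, 12 bits
print(L2.sets)                                       # 512
print(L1D.split(0x12345))                            # (18, 13, 5)
print(tlb_reach(64, 4096) // 1024, "KiB (DTLB, 4 KB pages)")             # 256
print(tlb_reach(1024, 4096) // 2**20, "MiB (STLB, 4 KB pages)")          # 4
print(tlb_reach(1024, 2 * 2**20) // 2**30, "GiB (STLB, 2 MB pages)")     # 2
```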

Pipeline

Like its predecessor Ivy Bridge, Haswell has a dual-threaded, out-of-order pipeline.

Front-end

The front-end is a complicated part of the microarchitecture because it deals with variable-length x86 instructions ranging from 1 to 15 bytes. Its main goal is to correctly fetch and decode the next set of instructions. The caches are unchanged from Ivy Bridge: the L1I$ is still 32 KB, 8-way set associative and dynamically shared by the two threads. Instruction fetch remains 16 B/cycle. The ITLB is also still 128 entries, 4-way for 4 KB pages, and 8 entries, fully associative for 2 MB pages. The fetched instructions then move to a 40-entry instruction queue, with 20 entries for each thread. Haswell further reduced branch mispredictions, although the exact details have not been made public.
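A 16 B/cycle fetch path and a 4-wide decoder together cap how many instructions per cycle the legacy path can deliver, and the cap depends on instruction length. The sketch below computes that cap and counts the aligned 16-byte fetch blocks a run of straight-line code spans. It is a simplified model that assumes naturally aligned 16-byte fetch blocks. It ignores the µOp cache, branches and pre-decode limits, and both function names are hypothetical.

```python
FETCH_BYTES_PER_CYCLE = 16  # legacy fetch path, from the text
DECODE_WIDTH = 4            # instructions/cycle, ignoring macro-fusion


def legacy_ipc_bound(avg_instruction_bytes: float) -> float:
    """Upper bound on instructions/cycle through fetch + decode alone."""
    return min(DECODE_WIDTH, FETCH_BYTES_PER_CYCLE / avg_instruction_bytes)


def fetch_blocks_touched(start: int, lengths: list[int]) -> int:
    """Aligned 16-byte fetch blocks spanned by straight-line code that begins
    at byte address `start` with the given instruction lengths."""
    if not lengths:
        return 0
    end = start + sum(lengths)  # one past the last byte
    return (end - 1) // FETCH_BYTES_PER_CYCLE - start // FETCH_BYTES_PER_CYCLE + 1


print(legacy_ipc_bound(4))              # 4: fetch and decode are balanced
print(legacy_ipc_bound(5))              # 3.2: longer instructions become fetch-bound
print(fetch_blocks_touched(0, [5] * 8))  # 40 bytes span 3 blocks
```

With an average instruction length above 4 bytes (common with longer encodings such as VEX prefixes), the 16 B/cycle fetch, not the decoders, becomes the limit in this model.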

Haswell has the same µOp cache as Ivy Bridge: 1,536 entries organized in 32 sets of 8 cache lines with 6 µOps each. Hits can deliver up to 4 µOps/cycle. The cache supports microcoded instructions, which are stored as pointers to microcode ROM entries. The cache is shared by the two threads.
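The quoted capacity is simply sets × lines per set × µOps per line. The delivery rate gives a lower bound on how quickly a loop can run out of this cache. The arithmetic below checks both, assuming a loop that hits entirely in the µOp cache and is limited only by the 4 µOps/cycle delivery rate. Real lines are often not fully packed, so effective capacity can be lower.

```python
import math

UOP_CACHE_SETS = 32
UOP_CACHE_WAYS = 8       # cache lines per set
UOPS_PER_LINE = 6
UOP_CACHE_DELIVERY = 4   # µOps/cycle on a hit

capacity = UOP_CACHE_SETS * UOP_CACHE_WAYS * UOPS_PER_LINE
print(capacity)  # 1536


def min_cycles_from_uop_cache(loop_uops: int) -> int:
    """Lower bound on cycles to stream one loop iteration from the µOp cache."""
    return math.ceil(loop_uops / UOP_CACHE_DELIVERY)


print(min_cycles_from_uop_cache(10))  # 3
```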

Following the instruction queue, instructions are decoded by the 4-way decoder, which consists of 3 simple decoders and 1 complex decoder. Together they can emit up to 3 fused µOps (one per simple decoder) plus an additional 1-4 fused µOps from the complex decoder. The unit handles both micro-fusion and macro-fusion. With macro-fusion, compatible adjacent instructions (such as a compare followed by a conditional jump) are merged into a single µOp. Pushes and pops, as well as calls and returns, are also handled at this stage. The decoders normally handle 4 instructions per cycle, but with the aid of macro-fusion, up to 5 instructions can be decoded each cycle.
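The toy model below shows how macro-fusion lets 4 decoder slots cover 5 instructions. A fused compare-and-branch pair occupies a single slot. The model makes three assumptions: at most one fusion per cycle (chosen to match the "up to 5 instructions" figure), a fusible set limited to `cmp`/`test` followed by a conditional jump (the real rules are broader), and no distinction between simple and complex decoders. The function names are hypothetical.

```python
DECODERS = 4                     # 3 simple + 1 complex
MAX_FUSIONS_PER_CYCLE = 1        # assumption matching "up to 5 instructions"
FUSIBLE_FIRST = {"cmp", "test"}  # simplified; real fusion rules are broader


def is_conditional_jump(op: str) -> bool:
    return op.startswith("j") and op != "jmp"


def decode_groups(ops: list[str]) -> list[list[str]]:
    """Split a straight-line instruction stream into per-cycle decode groups.
    A macro-fused pair such as cmp+jne occupies a single decoder slot."""
    groups: list[list[str]] = []
    i = 0
    while i < len(ops):
        group: list[str] = []
        fusions = 0
        while i < len(ops) and len(group) < DECODERS:
            can_fuse = (
                fusions < MAX_FUSIONS_PER_CYCLE
                and i + 1 < len(ops)
                and ops[i] in FUSIBLE_FIRST
                and is_conditional_jump(ops[i + 1])
            )
            if can_fuse:
                group.append(f"{ops[i]}+{ops[i + 1]}")
                fusions += 1
                i += 2
            else:
                group.append(ops[i])
                i += 1
        groups.append(group)
    return groups


stream = ["mov", "add", "cmp", "jne", "mov", "inc"]
for cycle, group in enumerate(decode_groups(stream)):
    instructions = sum(len(slot.split("+")) for slot in group)
    print(cycle, group, instructions)
# 0 ['mov', 'add', 'cmp+jne', 'mov'] 5
# 1 ['inc'] 1
```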

Execution engine

The decoder is followed by the register renaming stage, which is crucial for out-of-order execution. In this stage the architectural x86 registers are mapped onto the much larger set of physical registers. The integer physical register file (PRF) has been enlarged by 8 additional registers, for a total of 168. Likewise, the FP PRF was extended by 24 registers, bringing it to 168 registers as well. The larger increase in the FP PRF is likely there to accommodate the new AVX2 extension. The ROB in Haswell has been increased to 192 entries (from 168 in Ivy Bridge), where each entry corresponds to a single µOp. The ROB is statically split between the two threads. Additional scheduler resources are allocated as well, including load, store, and branch buffer entries. Because of how dependencies are handled, more or fewer µOps may leave this stage than were fed into it. For the most part, the renamer is unified and handles both integer and vector registers. Resources, however, are partitioned between the two threads. As a last step, each µOp is matched with a port according to its intended execution purpose. Up to 4 fused µOps may be renamed and handled per thread per cycle. The load and store buffers were enlarged to 72 and 42 entries, respectively.
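Register renaming maps each architectural destination to a fresh physical register, which removes false (write-after-read and write-after-write) dependencies between instructions. The sketch below is a textbook register alias table (RAT) with a free list, sized with the 168 physical registers from the text. It is a generic model, not Intel's design. The `Renamer` class is hypothetical, and freeing a physical register when a later writer retires is omitted.

```python
from collections import deque


class Renamer:
    """Toy register alias table (RAT) plus a free list of physical registers.
    Freeing registers at retirement is omitted for brevity."""

    def __init__(self, arch_regs: list[str], num_physical: int) -> None:
        # Start with architectural register k mapped to physical register k.
        self.rat = {reg: k for k, reg in enumerate(arch_regs)}
        self.free = deque(range(len(arch_regs), num_physical))

    def rename(self, dst: str, srcs: list[str]) -> tuple[int, list[int]]:
        # Look up sources first so "add rax, rax" reads the old mapping.
        phys_srcs = [self.rat[s] for s in srcs]
        if not self.free:
            raise RuntimeError("no free physical register: rename would stall")
        phys_dst = self.free.popleft()
        self.rat[dst] = phys_dst
        return phys_dst, phys_srcs


r = Renamer(["rax", "rbx", "rcx"], num_physical=168)
print(r.rename("rax", ["rbx"]))  # mov rax, rbx: (3, [1])
print(r.rename("rcx", ["rax"]))  # mov rcx, rax: (4, [3]), reads the new rax
print(r.rename("rax", ["rcx"]))  # mov rax, rcx: (5, [4]), new rax, no WAR stall
```

The third instruction writes `rax` again but receives a new physical register (5). It therefore never has to wait for the second instruction to finish reading the old `rax` (physical register 3).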

Haswell continues to use a unified scheduler for all µOps, which holds 60 entries. µOps wait in the scheduler until they are cleared for execution on their assigned dispatch port. They may also be held back when a required resource is unavailable.

After successful execution, µOps retire at a rate of up to 4 fused µOps/cycle. Retirement is once again in order and frees up any reserved resources (ROB entries, PRF entries, and various other buffers).
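In-order retirement means a µOp that finishes early still waits for every older µOp. The model below computes when each µOp retires, given when it finished executing, under the 4-per-cycle retirement limit from the text. It assumes a µOp can retire in the same cycle it completes and ignores all other pipeline constraints. `retire_cycles` is a hypothetical helper.

```python
RETIRE_WIDTH = 4  # fused µOps per cycle, from the text


def retire_cycles(done_cycle: list[int]) -> list[int]:
    """done_cycle[i] is when µOp i (in program order) finishes executing.
    Returns the cycle each µOp retires: in order, no earlier than it finished,
    and at most RETIRE_WIDTH per cycle."""
    retired: list[int] = []
    cycle, used = 0, 0
    for done in done_cycle:
        if done > cycle:            # wait for this µOp to finish
            cycle, used = done, 0
        if used == RETIRE_WIDTH:    # retirement bandwidth exhausted this cycle
            cycle, used = cycle + 1, 0
        retired.append(cycle)
        used += 1
    return retired


# A slow first µOp (e.g. a cache miss) holds back younger µOps that
# finished long before it.
print(retire_cycles([5, 1, 1, 1, 1, 2]))  # [5, 5, 5, 5, 6, 6]
```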

Execution Units

Some of the biggest architectural changes were made in the execution units. Haswell widened the scheduler by two ports, a new integer dispatch port and a new memory port, bringing the total to 8 µOps/cycle. The ports have also been rebalanced. The new port 6 adds another integer ALU, designed to improve integer workloads and free up ports 0 and 1 for vector work. It also adds a second branch unit to relieve congestion on port 0. The second new port, port 7, adds a new AGU. This addition largely serves AVX2, which roughly doubled throughput and needs more memory operations per cycle to keep it fed. Port 0 had its ALU/multiplier/shifter extended to 256 bits; the same is true for the vector ALU on port 1 and the ALU/shuffle unit on port 5. Additionally, a 256-bit FMA unit was added to both port 0 and port 1, which makes it possible for FMAs and FMULs to issue on both ports. In theory, Haswell can peak at more than double the performance of Sandy Bridge: 16 double-precision or 32 single-precision FLOP/cycle, plus integer ALU and other vector operations on the remaining ports.
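The FLOP/cycle figures come from lanes per vector × FLOPs per lane × number of units. The sketch below reproduces the floating-point part of the comparison. It assumes fully pipelined units issuing every cycle and counts an FMA as two FLOPs. For Sandy Bridge it assumes one 256-bit FP add unit and one 256-bit FP multiply unit. The extra integer and vector operations mentioned above are not counted.

```python
def peak_flop_per_cycle(vector_bits: int, element_bits: int, *,
                        fma_units: int = 0, add_units: int = 0,
                        mul_units: int = 0) -> int:
    """Peak FLOP/cycle for fully pipelined SIMD units issuing every cycle.
    An FMA counts as two FLOPs (multiply + add) per lane."""
    lanes = vector_bits // element_bits
    return lanes * (2 * fma_units + add_units + mul_units)


haswell_dp = peak_flop_per_cycle(256, 64, fma_units=2)             # FMA on ports 0 and 1
haswell_sp = peak_flop_per_cycle(256, 32, fma_units=2)
sandy_dp = peak_flop_per_cycle(256, 64, add_units=1, mul_units=1)  # assumed 1 add + 1 mul
print(haswell_dp, haswell_sp, sandy_dp)  # 16 32 8
```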

The scheduler dispatches up to 8 ready µOps/cycle in FIFO order through the dispatch ports. µOps that perform computation are sent through ports 0, 1, 5, and 6 to the appropriate unit, while ports 2, 3, 4, and 7 are used for loads, stores, and address calculations.
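The toy scheduler below ties together the 60-entry unified scheduler and the 8 dispatch ports. Each cycle, every port takes the oldest ready µOp already bound to it, so at most 8 µOps leave per cycle. The model has several assumptions: ports are bound before a µOp enters the scheduler, readiness is given as a fixed cycle, and port-sharing hazards, writeback conflicts and thread partitioning are ignored. `Uop` and `dispatch` are hypothetical names.

```python
from dataclasses import dataclass

SCHEDULER_ENTRIES = 60
COMPUTE_PORTS = {0, 1, 5, 6}
MEMORY_PORTS = {2, 3, 4, 7}


@dataclass
class Uop:
    name: str
    port: int          # port assigned before the µOp enters the scheduler
    ready_cycle: int   # first cycle its source operands are available


def dispatch(uops: list[Uop], max_cycles: int = 100) -> list[dict[int, str]]:
    """Each cycle, every port takes the oldest ready µOp bound to it.
    Returns, per cycle, a {port: µOp name} map of what was dispatched."""
    if len(uops) > SCHEDULER_ENTRIES:
        raise ValueError("scheduler full: allocation would stall")
    for u in uops:
        if u.port not in COMPUTE_PORTS | MEMORY_PORTS:
            raise ValueError(f"{u.name}: no such port {u.port}")
    waiting = list(uops)  # list order = age order (oldest first)
    trace: list[dict[int, str]] = []
    for cycle in range(max_cycles):
        if not waiting:
            break
        issued: dict[int, str] = {}
        still_waiting = []
        for u in waiting:
            if u.ready_cycle <= cycle and u.port not in issued:
                issued[u.port] = u.name
            else:
                still_waiting.append(u)
        waiting = still_waiting
        trace.append(issued)
    return trace


uops = [Uop("fma_a", 0, 0), Uop("fma_b", 1, 0), Uop("load_a", 2, 0),
        Uop("load_b", 3, 0), Uop("store_data", 4, 0), Uop("shuffle", 5, 0),
        Uop("int_alu", 6, 0), Uop("store_addr", 7, 0),
        Uop("dependent_add", 0, 1), Uop("branch", 6, 0)]
for cycle, issued in enumerate(dispatch(uops)):
    print(cycle, len(issued), issued)
# Cycle 0 fills all 8 ports; dependent_add (not ready) and branch
# (port 6 busy) dispatch in cycle 1.
```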