(→Phases) |

(→Phases) |

||

| Line 101: | Line 101: | ||

=== Phase 2 === | === Phase 2 === | ||

| + | [[File:hector-2a-xt4.jpg|thumb|right|Phase 2a]][[File:hector-2a-cabinets.jpg|right|thumb|Phase 2a]] | ||

{{empty section}} | {{empty section}} | ||

=== Phase 3 === | === Phase 3 === | ||

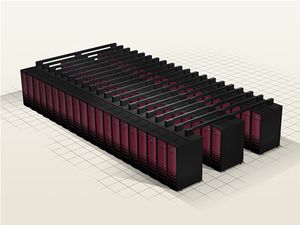

| + | [[File:hector phase3 cabs2.jpg|right|thumb|Phase 3]] | ||

HECToR Phase 3 is a Cray XE6 supercomputer. The system comprises 704 compute blades across 30 cabinets. Each blade incorporates four compute nodes. Within a compute node are two 16-core [[AMD]] {{amd|Opteron}} 2.3GHz {{amd|Interlagos|l=core}}-based processors. | HECToR Phase 3 is a Cray XE6 supercomputer. The system comprises 704 compute blades across 30 cabinets. Each blade incorporates four compute nodes. Within a compute node are two 16-core [[AMD]] {{amd|Opteron}} 2.3GHz {{amd|Interlagos|l=core}}-based processors. | ||

Revision as of 02:38, 21 October 2019


| HECToR | |

| |

| General Info | |

| Sponsors | EPSRC, NERC, BBSRC |

| Designers | Cray |

| Operators | EPCC |

| Introduction | October 2007 |

| Retired | March 24 2014 |

| Peak FLOPS | 829 teraFLOPS |

| Price | $85,000,000 |

| Succession | |

HECToR (High-End Computing Terascale Resource) was a terascale x86 supercomputer that served as the UK's primary academic research supercomputer. HECToR has been superseded by ARCHER.

Overview

HECToR was a UK national academic supercomputer service funded by NERC, EPSRC, and BBSRC for the academic community. HECToR was deployed in a number of phases, with each phase roughly doubling its peak performance.

Phases

HECToR underwent 5 major upgrades.

| Cores | Peak Compute |

|---|---|

| 11,328 | 63.44 teraFLOPS |

| 12,288 | 113.05 teraFLOPS |

| 22,656 | 208.44 teraFLOPS |

| 44,544 | 366.74 teraFLOPS |

| 90,112 | 829.01 teraFLOPS |
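
As a rough check on how much each upgrade added, the peak figures in the table above can be compared pairwise. This is only an illustrative sketch: the names `peak_tflops` and `growth` are made up for the example, and the values are copied from the table.

```python
# Peak compute per configuration, in teraFLOPS, copied from the table above.
peak_tflops = [63.44, 113.05, 208.44, 366.74, 829.01]

# Ratio of each configuration's peak to that of the one before it.
growth = [later / earlier for earlier, later in zip(peak_tflops, peak_tflops[1:])]

for ratio in growth:
    print(f"{ratio:.2f}x")
```

With these figures, every step raises peak compute by somewhere between about 1.7 and 2.3 times.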

Phase 1

Phase 1, the initial system, was based on the Cray XT4 (Rainier). The system comprised 1,416 compute blades across 60 cabinets, with four sockets per blade. The processors were dual-core AMD Opterons operating at 2.8 GHz. There were 6 GB of memory per socket, for a total system memory of 33.2 TiB. Rainier had a peak compute performance of 63.44 teraFLOPS.

| Type | Count |

|---|---|

| Blades | 1,416 |

| CPUs | 5,664 |

| Cores | 11,328 |
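
The Phase 1 counts, memory total, and peak figure can be reproduced from the per-blade numbers. The sketch below assumes each core retires 2 double-precision floating-point operations per cycle, which is not stated in the text, and it treats the quoted 6 GB per socket as binary units. All variable names are illustrative.

```python
# Figures taken from the Phase 1 description.
blades = 1416
sockets_per_blade = 4
cores_per_socket = 2
clock_ghz = 2.8
mem_per_socket_gb = 6

# Assumption (not stated in the text): 2 double-precision FLOPs per core per cycle.
flops_per_cycle = 2

cpus = blades * sockets_per_blade
cores = cpus * cores_per_socket

# cores x GHz x FLOPs/cycle gives gigaFLOPS; divide by 1000 for teraFLOPS.
peak_tflops = cores * clock_ghz * flops_per_cycle / 1000

# Assumption: the quoted GB are binary units, so 1024 of them make one TiB.
total_mem_tib = cpus * mem_per_socket_gb / 1024

print(cpus, cores, round(peak_tflops, 2), round(total_mem_tib, 1))
```

Under those assumptions the script gives 5,664 CPUs, 11,328 cores, 63.44 teraFLOPS, and 33.2 TiB, matching the figures quoted for Phase 1.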

In addition to the compute blades, 24 dual-socket, dual-core service blades handled supercomputer services such as login, control, and network management.

The system relied on the Cray SeaStar2 interconnect. There was one SeaStar2 chip for every two nodes, each chip providing 6 network links. The network implemented a 3D torus. The MPI point-to-point bandwidth was 2.17 GB/s, with a minimum bisection bandwidth of 4.1 TB/s and a node-to-node latency of around 6 μs.
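
In a 3D torus, every position has six direct neighbours (one in each direction along each of the three axes), and the edges wrap around. The sketch below shows that addressing scheme in general terms. The function name `torus_neighbors` and the 4 x 4 x 4 dimensions are hypothetical; the actual torus dimensions of HECToR are not given here.

```python
def torus_neighbors(coord, dims):
    """Return the six neighbours of `coord` in a 3D torus of size `dims`."""
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            n = list(coord)
            # The modulo makes the network wrap around at the edges.
            n[axis] = (n[axis] + step) % dims[axis]
            neighbors.append(tuple(n))
    return neighbors


# Hypothetical dimensions, chosen only for illustration.
dims = (4, 4, 4)
corner = torus_neighbors((0, 0, 0), dims)
print(corner)
```

Even the corner position `(0, 0, 0)` has six neighbours, because stepping "backwards" wraps to the far side of the torus, for example to `(3, 0, 0)`.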

The storage subsystem:

| Type | Capacity |

|---|---|

| Direct attached storage | 576 TiB |

| NAS storage | 40 TiB |

| MAID storage | 56 TiB |

| Tape drives | 3 TiB |

Phase 1b

The system was upgraded in August 2008 with a small vector system. 28 Cray X2 (Black Widow) vector compute nodes were added. Each node had 4 Cray vector processors. There were 32 GiB of memory per node, for a total of 896 GiB of memory.

| Type | Count |

|---|---|

| Nodes | 28 |

| CPUs | 112 |

With each vector processor capable of 25.6 gigaFLOPS, the new addition added 2.87 teraFLOPS of compute to the existing system. The MPI point-to-point bandwidth was 16 GB/s, with a minimum bisection bandwidth of 254 GB/s and a node-to-node latency of around 4.6 μs.
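
The totals for the vector partition follow directly from the per-node figures. A minimal sketch, with illustrative variable names:

```python
# Figures taken from the Phase 1b description.
nodes = 28
processors_per_node = 4
gflops_per_processor = 25.6
mem_per_node_gib = 32

processors = nodes * processors_per_node
added_tflops = processors * gflops_per_processor / 1000
total_mem_gib = nodes * mem_per_node_gib

print(processors, round(added_tflops, 2), total_mem_gib)
```

This gives 112 vector processors, about 2.87 teraFLOPS, and 896 GiB of memory.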

The storage was also upgraded.

| Type | Capacity |

|---|---|

| Direct attached storage | 934 TiB |

| NAS storage | 70 TiB |

| MAID storage | 112 TiB |

| Tape drives | 6 TiB |

Phase 2

| This section is empty; you can help add the missing info by editing this page. |

Phase 3

HECToR Phase 3 was a Cray XE6 supercomputer. The system comprised 704 compute blades across 30 cabinets. Each blade incorporated four compute nodes. Within each compute node were two 16-core AMD Opteron 2.3 GHz Interlagos-based processors.

| Type | Count |

|---|---|

| Nodes | 2,816 |

| CPUs | 5,632 |

| Cores | 90,112 |

There were 16 GiB of memory per socket, for a total system memory of 88 TiB. With 90,112 cores, the system had a peak compute performance of 829 teraFLOPS. In addition to the compute nodes, there were 16 additional blades with two dual-core processors per node, which were used for various services such as login, control, and network management.
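
The Phase 3 totals can be cross-checked in the same way from the blade, node, and socket counts. The sketch below assumes 4 double-precision floating-point operations per core per cycle for the Interlagos-based processors, which is not stated in the text. All variable names are illustrative.

```python
# Figures taken from the Phase 3 description.
blades = 704
nodes_per_blade = 4
sockets_per_node = 2
cores_per_socket = 16
clock_ghz = 2.3
mem_per_socket_gib = 16

# Assumption (not stated in the text): 4 double-precision FLOPs per core per cycle.
flops_per_cycle = 4

nodes = blades * nodes_per_blade
cpus = nodes * sockets_per_node
cores = cpus * cores_per_socket
total_mem_tib = cpus * mem_per_socket_gib / 1024
peak_tflops = cores * clock_ghz * flops_per_cycle / 1000

print(nodes, cpus, cores, total_mem_tib, round(peak_tflops))
```

Under that assumption the node, CPU, core, and memory totals match the table and text (2,816 nodes, 5,632 CPUs, 90,112 cores, 88 TiB), and the peak comes out at roughly 829 teraFLOPS.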

The system relied on the Cray Gemini interconnect. There was one Gemini chip for every two nodes, each chip providing 10 network links. The network implemented a 3D torus. The MPI point-to-point bandwidth was at least 5 GB/s, with a node-to-node latency of around 1 to 1.5 μs.

TOP500

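| List | Phase | Rank | Rmax (teraFLOPS) | Rpeak (teraFLOPS) |

|---|---|---|---|---|

| 11/2007 | Phase 1 | 17 | 54.6 | 63.4 |

| 06/2008 | Phase 1 | 30 | 54.6 | 63.4 |

| 11/2008 | Phase 1 | 47 | 54.6 | 63.4 |

| 06/2009 | Phase 1 | 68 | 54.6 | 63.4 |

| 11/2010 | Phase 2a | 78 | 95.1 | 113.0 |

| 06/2011 | Phase 2a | 94 | 95.1 | 113.0 |

| 11/2009 | Phase 2b | 20 | 174.1 | 208.4 |

| 06/2010 | Phase 2b | 26 | 174.1 | 208.4 |

| 06/2010 | Phase 2b | 16 | 274.7 | 366.7 |

| 11/2010 | Phase 2b | 25 | 274.7 | 366.7 |

| 06/2011 | Phase 3 | 24 | 660.2 | 829.0 |

| 11/2011 | Phase 3 | 19 | 660.2 | 829.0 |

| 06/2012 | Phase 3 | 32 | 660.2 | 829.0 |

| 11/2012 | Phase 3 | 35 | 660.2 | 829.0 |

| 06/2013 | Phase 3 | 41 | 660.2 | 829.0 |

| 11/2013 | Phase 3 | 50 | 660.2 | 829.0 |

| 06/2014 | Phase 3 | 59 | 660.2 | 829.0 |

| 11/2014 | Phase 3 | 79 | 660.2 | 829.0 |

| 06/2015 | Phase 3 | 105 | 660.2 | 829.0 |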
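
The ratio of Rmax (measured) to Rpeak (theoretical) is a common way to gauge how much of a system's peak was achieved on the benchmark. The sketch below computes it for the distinct Rmax and Rpeak pairs in the table above. It is illustrative only: the name `entries` is made up, and the phase labels are copied as they appear in the table.

```python
# (phase label, Rmax, Rpeak) for each distinct pair in the table above.
# Both values in a pair use the same unit, so the ratio is unit-independent.
entries = [
    ("Phase 1", 54.6, 63.4),
    ("Phase 2a", 95.1, 113.0),
    ("Phase 2b", 174.1, 208.4),
    ("Phase 2b", 274.7, 366.7),
    ("Phase 3", 660.2, 829.0),
]

efficiency = [(phase, rmax / rpeak) for phase, rmax, rpeak in entries]

for phase, eff in efficiency:
    print(f"{phase}: {eff:.1%}")
```

For these entries the ratio falls between roughly 75% and 86%.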
