| (One intermediate revision by the same user not shown) | |||

| Line 10: | Line 10: | ||

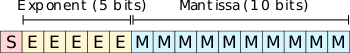

::[[File:float16 encoding format.svg|350px]] | ::[[File:float16 encoding format.svg|350px]] | ||

| + | :'''msfp11''': | ||

| + | |||

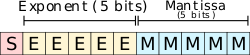

| + | ::[[File:msfp11 encoding format.svg|250px]] | ||

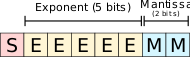

:'''msfp8''': | :'''msfp8''': | ||

| Line 23: | Line 26: | ||

== Bibliography == | == Bibliography == | ||

| − | * {{ | + | * {{bib|hc|31|Microsoft}} |

Latest revision as of 15:41, 15 October 2019

MSFP8-MSFP11 (Microsoft floating-point 8-11) is a family of number encoding formats that represent a floating-point number in 8 to 11 bits. Each format is equivalent to a standard IEEE 754 half-precision floating-point value with a truncated mantissa field. MSFP8 plays a role similar to the bfloat16 number format and is used for the same purpose: hardware acceleration of machine learning algorithms. MSFP was first proposed and implemented by Microsoft.
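To make the bit layout concrete, here is a minimal Python sketch that decodes an MSFP bit pattern into a value. It assumes the formats inherit IEEE 754 half-precision semantics unchanged (exponent bias 15, subnormals when the exponent field is zero, infinities and NaNs when it is all ones). The source only states that the mantissa is truncated, so these details and the name `msfp_decode` are illustrative assumptions.

```python
def msfp_decode(bits: int, mant_bits: int) -> float:
    """Decode an MSFP pattern laid out as 1 sign, 5 exponent, mant_bits mantissa bits.

    Assumption: IEEE 754 half-precision conventions carry over (bias 15,
    subnormals for exponent 0, inf/NaN for an all-ones exponent).
    mant_bits=2 gives msfp8, mant_bits=5 gives msfp11, mant_bits=10 gives float16.
    """
    exp_bits = 5
    bias = 15
    total = 1 + exp_bits + mant_bits
    if not 0 <= bits < (1 << total):
        raise ValueError(f"pattern does not fit in {total} bits")

    sign = -1.0 if (bits >> (total - 1)) & 1 else 1.0
    exponent = (bits >> mant_bits) & ((1 << exp_bits) - 1)
    mantissa = bits & ((1 << mant_bits) - 1)

    if exponent == (1 << exp_bits) - 1:
        # All-ones exponent: infinity if the mantissa is zero, otherwise NaN.
        return sign * float("inf") if mantissa == 0 else float("nan")
    if exponent == 0:
        # Subnormal: no implicit leading 1, fixed exponent 1 - bias.
        return sign * (mantissa / (1 << mant_bits)) * 2.0 ** (1 - bias)
    # Normal: implicit leading 1 in front of the stored mantissa.
    return sign * (1 + mantissa / (1 << mant_bits)) * 2.0 ** (exponent - bias)
```

With `mant_bits=2`, the byte `0x3C` decodes to 1.0 and `0x7B`, the largest finite msfp8 pattern under these assumptions, decodes to 57,344; passing `mant_bits=10` decodes ordinary float16 patterns.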

Overview

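MSFP8-11 follow the same layout as a standard IEEE 754 half-precision floating-point number (1 sign bit, 5 exponent bits, 10 mantissa bits) but truncate the 10-bit mantissa field to just 2-5 bits. Because the 5-bit exponent field is preserved, the formats span roughly the same range of magnitudes as 16-bit half precision (~5.96e-8 to 65,504 for float16). This makes conversion between the data types relatively simple: the sign and exponent bits carry over unchanged, and only the low-order mantissa bits are dropped or zero-filled. In other words, resolution is lost, but the range of representable numbers is largely preserved. In many ways, MSFP8 is analogous to an 8-bit version of bfloat16, which likewise keeps the exponent of a wider IEEE format (single precision) while truncating its mantissa.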
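The conversion described above can be sketched in Python as plain bit manipulation: round a value to float16 with the standard `struct` module's `e` format, then shift away the low mantissa bits. The helper names are hypothetical, and truncation (round toward zero) is an assumption; the source does not say which rounding mode Microsoft's hardware uses.

```python
import struct


def float_to_half_bits(x: float) -> int:
    """Round a Python float to IEEE 754 half precision and return its 16 bits.

    struct raises OverflowError if x is too large for float16.
    """
    return struct.unpack("<H", struct.pack("<e", x))[0]


def half_bits_to_float(h: int) -> float:
    """Interpret a 16-bit pattern as an IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<H", h))[0]


def half_bits_to_msfp(h: int, mant_bits: int) -> int:
    """Truncate a float16 pattern to an MSFP pattern of 6 + mant_bits bits.

    Sign and exponent are kept; the low (10 - mant_bits) mantissa bits are
    dropped, which rounds toward zero (an assumption, see lead-in).
    """
    if not 2 <= mant_bits <= 5:
        raise ValueError("MSFP8-11 keep 2 to 5 mantissa bits")
    exponent = (h >> 10) & 0x1F
    mantissa = h & 0x3FF
    m = h >> (10 - mant_bits)
    if exponent == 0x1F and mantissa != 0 and m & ((1 << mant_bits) - 1) == 0:
        # A NaN whose payload lived only in the dropped bits would otherwise
        # turn into infinity; set the top mantissa bit to keep it a NaN.
        m |= 1 << (mant_bits - 1)
    return m


def msfp_to_half_bits(m: int, mant_bits: int) -> int:
    """Widen an MSFP pattern back to float16 by zero-filling the mantissa."""
    return m << (10 - mant_bits)


def round_trip(x: float, mant_bits: int) -> float:
    """float -> float16 -> MSFP -> float16 -> float."""
    m = half_bits_to_msfp(float_to_half_bits(x), mant_bits)
    return half_bits_to_float(msfp_to_half_bits(m, mant_bits))


for value in (3.14159, 60000.0, -2.5):
    print(value, round_trip(value, 5), round_trip(value, 2))
```

Under these assumptions 3.14159 becomes 3.125 in msfp11 and 3.0 in msfp8, while 60000.0 becomes 57344.0 in msfp8 rather than overflowing, which illustrates precision being traded away while the range is kept.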

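- float16: 1 sign bit, 5 exponent bits, 10 mantissa bits (16 bits)

- msfp11: 1 sign bit, 5 exponent bits, 5 mantissa bits (11 bits)

- msfp8: 1 sign bit, 5 exponent bits, 2 mantissa bits (8 bits)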
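The trade-off between these formats can be quantified with a short Python sketch. It again assumes IEEE 754 half-precision conventions (bias 15, all-ones exponent reserved for infinities and NaNs); `format_limits` is a hypothetical helper, not part of any library.

```python
def format_limits(mant_bits: int, exp_bits: int = 5, bias: int = 15):
    """Return (largest finite, smallest normal, smallest subnormal, epsilon).

    Assumes the all-ones exponent is reserved for inf/NaN, as in IEEE 754.
    """
    max_exp = (1 << exp_bits) - 2 - bias           # highest usable exponent
    largest = (2 - 2.0 ** -mant_bits) * 2.0 ** max_exp
    smallest_normal = 2.0 ** (1 - bias)
    smallest_subnormal = 2.0 ** (1 - bias - mant_bits)
    epsilon = 2.0 ** -mant_bits                     # gap between 1.0 and the next value
    return largest, smallest_normal, smallest_subnormal, epsilon


for name, m in (("float16", 10), ("msfp11", 5), ("msfp8", 2)):
    largest, tiny, sub, eps = format_limits(m)
    print(f"{name:8} max={largest:g} min_normal={tiny:.3g} "
          f"min_subnormal={sub:.3g} eps={eps:g}")
```

Under these assumptions the largest finite value drops only from 65,504 (float16) to 64,512 (msfp11) and 57,344 (msfp8), while epsilon grows from 2^-10 to 2^-5 and 2^-2.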

Hardware support

See also

Bibliography

- Microsoft, IEEE Hot Chips 31 Symposium (HCS) 2019.