Revision as of 20:58, 30 September 2018

Configurable Spatial Accelerator (CSA) is an explicit data graph execution (EDGE) instruction set architecture designed by Intel.

Motivation

The push to exascale computing demands very high floating-point performance within a very aggressive power budget. Intel claims that the CSA can replace the traditional out-of-order superscalar processor while surpassing it in both performance and power efficiency. CSA supports the same HPC programming models as traditional microprocessors.

Overview

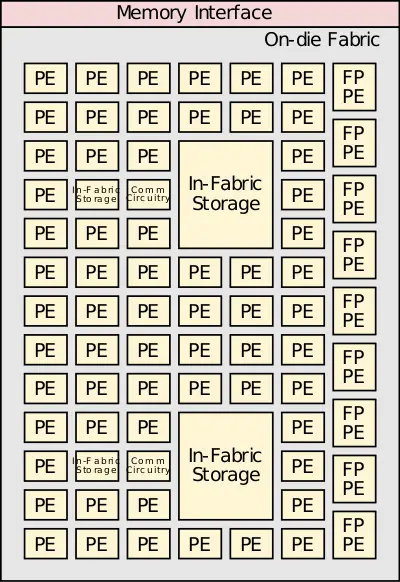

Intel claims that the CSA offers an order-of-magnitude gain in energy efficiency and performance over existing HPC microprocessors through the use of dense, efficient atomic compute units. The basic structure of the CSA is a dense heterogeneous array of processing element (PE) tiles along with an on-die interconnect network and a memory interface. The array can include integer arithmetic PEs, floating-point arithmetic PEs, communication circuitry, and in-fabric storage. This group of tiles may stand alone or be part of an even larger tile. The accelerator executes dataflow graphs directly instead of traditional sequential instruction streams.

Each processing element supports only a few highly efficient operations, so it can handle only a few of the operations in a dataflow graph. Different PEs support different operations. PEs execute simultaneously, independently of what the other PEs are doing. A PE can execute a dataflow operation whenever its input data is available and there is sufficient space to store the output data.
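The firing rule described above (inputs available, output space available) can be sketched in software. This is only an illustrative model, not Intel's implementation: the `Channel` and `PE` classes, the buffer capacities, and the single-operation-per-PE simplification are all assumptions made for this example.

```python
from collections import deque


class Channel:
    """Bounded FIFO standing in for a buffered link between PEs (hypothetical model)."""

    def __init__(self, capacity=2):
        self.capacity = capacity
        self.tokens = deque()

    def has_data(self):
        return len(self.tokens) > 0

    def has_space(self):
        return len(self.tokens) < self.capacity

    def push(self, value):
        assert self.has_space(), "producer must respect backpressure"
        self.tokens.append(value)

    def pop(self):
        return self.tokens.popleft()


class PE:
    """A processing element configured with one operation (an assumption of this sketch)."""

    def __init__(self, op, inputs, output):
        self.op = op
        self.inputs = inputs
        self.output = output

    def can_fire(self):
        # Fire only when every input holds a token and the output has room.
        return all(ch.has_data() for ch in self.inputs) and self.output.has_space()

    def step(self):
        if not self.can_fire():
            return False
        args = [ch.pop() for ch in self.inputs]
        self.output.push(self.op(*args))
        return True


a, b, out = Channel(), Channel(), Channel(capacity=1)
adder = PE(lambda x, y: x + y, [a, b], out)

a.push(3)
fired_without_b = adder.step()   # second operand missing, so the PE stalls
b.push(4)
fired_with_both = adder.step()   # both operands present and output is empty

a.push(1)
b.push(1)
fired_when_full = adder.step()   # output buffer still holds the first result
```

In this model a PE never consults a global program counter; whether it runs is decided purely by the state of its own input and output channels, which is why PEs can proceed independently of one another.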

Architecture

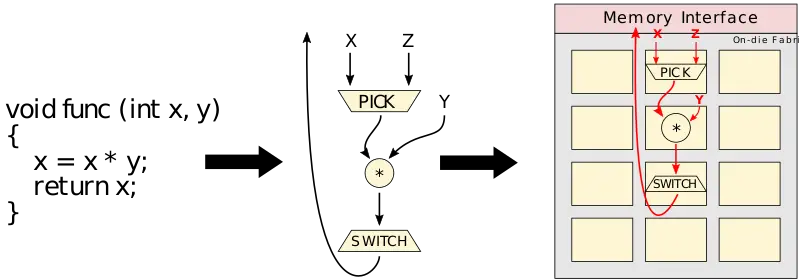

The CSA executes dataflow graphs directly. These graphs tend to look very similar to the compiler's own internal representation (IR) of compiled programs. A graph consists of operators and edges, where each edge represents the transfer of data between operators. Dataflow tokens representing data values are injected into the graph for execution. Tokens flow between nodes to form a complete computation.

Suppose func is executed with 1 for X and 2 for Y. The value for X (a 1) may be a constant or the result of some other operation. A pick operation selects the correct input value and provides it to the downstream destination processing element. In this case, the pick outputs a data value of 1 to the multiplier, which multiplies it by the value of Y (a 2). The multiplier outputs the value 2 to the switch node, which completes the operation and passes the value onward. Many such operations may be executed concurrently.
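The walk-through above can be traced with a few lines of code. This is a minimal sketch under stated assumptions: the control-token semantics of `pick` and `switch`, the `other` input, and the name `func_once` are hypothetical and are not taken from the figure or from Intel's documentation.

```python
def pick(control, in0, in1):
    """Forward one of two inputs, chosen by a control token (assumed semantics)."""
    return in1 if control else in0


def switch(control, value):
    """Steer one input to one of two outputs (assumed semantics).

    Returns a pair (out0, out1); the output that is not selected carries no token.
    """
    return (None, value) if control else (value, None)


def func_once(x, y, pick_ctl=0, switch_ctl=0, other=0):
    """One pass of tokens through pick -> multiply -> switch."""
    picked = pick(pick_ctl, x, other)   # pick forwards X (a 1) to the multiplier
    product = picked * y                # multiplier combines it with Y (a 2)
    return switch(switch_ctl, product)  # switch passes the product onward


result = func_once(1, 2)
print(result)
```

With X = 1 and Y = 2, the product 2 leaves on the first switch output, matching the description in the text. On the fabric each of these three functions would be a separate PE working on its own tokens, so several invocations could be in flight at once.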

Communications arcs

Communications arcs are latency-insensitive, asynchronous, point-to-point communications channels.
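To illustrate what latency insensitivity means in practice, the sketch below models a single arc whose delivery delay varies from token to token. The function `run_arc`, the random delay model, and the one-token-per-cycle producer are assumptions made for this example only; the point is that the consumer sees the same values in the same order regardless of delay, and only the completion time changes.

```python
import random
from collections import deque


def run_arc(values, max_delay, seed):
    """Send values over one point-to-point arc with arbitrary per-token delay."""
    rng = random.Random(seed)
    pending = deque(values)   # tokens the producer has yet to send
    in_flight = deque()       # (arrival_cycle, value), kept in send order
    received = []
    cycle = 0
    while pending or in_flight:
        if pending:
            # Producer sends one token per cycle; arrival time is not fixed.
            arrival = cycle + rng.randint(0, max_delay)
            in_flight.append((arrival, pending.popleft()))
        # Consumer takes only the head token, and only once it has arrived.
        if in_flight and in_flight[0][0] <= cycle:
            received.append(in_flight.popleft()[1])
        cycle += 1
    return received, cycle


fast, fast_cycles = run_arc([1, 2, 3, 4], max_delay=0, seed=0)
slow, slow_cycles = run_arc([1, 2, 3, 4], max_delay=5, seed=0)
```

Both runs deliver the tokens 1, 2, 3, 4 in order; the slower arc simply takes at least as many cycles to finish.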

Bibliography

- Kermin E.F. et al. (July 5, 2018). US Patent No. US20180189231A1.