AVX-512 Vector Neural Network Instructions (VNNI)

Revision as of 08:05, 8 October 2020

AVX-512 Vector Neural Network Instructions (AVX512 VNNI) is an x86 extension, part of AVX-512, designed to accelerate convolutional neural network-based algorithms.

Overview

The AVX512 VNNI x86 extension extends AVX-512 Foundation with four new instructions that accelerate the inner loops of convolutional neural networks.

-

VPDPBUSD- Multiplies the individual bytes (8-bit) of the first source operand by the corresponding bytes (8-bit) of the second source operand, producing intermediate word (16-bit) results which are summed and accumulated in the double word (32-bit) of the destination operand.-

VPDPBUSDS- Same as above except on intermediate sum overflow which saturates to 0x7FFF_FFFF/0x8000_0000 for positive/negative numbers.

-

-

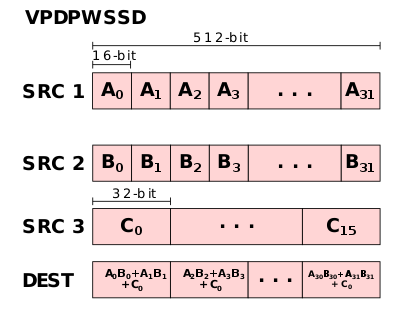

VPDPWSSD- Multiplies the individual words (16-bit) of the first source operand by the corresponding word (16-bit) of the second source operand, producing intermediate word results which are summed and accumulated in the double word (32-bit) of the destination operand.-

VPDPWSSDS- Same as above except on intermediate sum overflow which saturates to 0x7FFF_FFFF/0x8000_0000 for positive/negative numbers.

-
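The per-lane behavior of the four instructions can be sketched as a scalar C model. This is an illustrative reference only, not how the hardware or any library implements them; the function names (`vpdpbusd_lane` and so on) are made up for this example. Each function handles a single 32-bit destination lane.

```c
#include <stdint.h>

/* Wrap a wide sum to 32 bits (the non-saturating forms).
   The final cast is implementation-defined in C; GCC and Clang wrap. */
static int32_t wrap_i32(int64_t v) { return (int32_t)(uint32_t)v; }

/* Clamp a wide sum to the signed 32-bit range (the saturating forms). */
static int32_t saturate_i32(int64_t v) {
    if (v > INT32_MAX) return INT32_MAX; /* 0x7FFF_FFFF */
    if (v < INT32_MIN) return INT32_MIN; /* 0x8000_0000 */
    return (int32_t)v;
}

/* Sum of four unsigned-byte x signed-byte products. */
static int64_t dot4_u8s8(const uint8_t a[4], const int8_t b[4]) {
    int64_t sum = 0;
    for (int i = 0; i < 4; i++)
        sum += (int32_t)a[i] * (int32_t)b[i];
    return sum;
}

/* Sum of two signed-word x signed-word products. */
static int64_t dot2_s16s16(const int16_t a[2], const int16_t b[2]) {
    int64_t sum = 0;
    for (int i = 0; i < 2; i++)
        sum += (int64_t)a[i] * (int64_t)b[i];
    return sum;
}

static int32_t vpdpbusd_lane(int32_t acc, const uint8_t a[4], const int8_t b[4]) {
    return wrap_i32((int64_t)acc + dot4_u8s8(a, b));
}

static int32_t vpdpbusds_lane(int32_t acc, const uint8_t a[4], const int8_t b[4]) {
    return saturate_i32((int64_t)acc + dot4_u8s8(a, b));
}

static int32_t vpdpwssd_lane(int32_t acc, const int16_t a[2], const int16_t b[2]) {
    return wrap_i32((int64_t)acc + dot2_s16s16(a, b));
}

static int32_t vpdpwssds_lane(int32_t acc, const int16_t a[2], const int16_t b[2]) {
    return saturate_i32((int64_t)acc + dot2_s16s16(a, b));
}
```

A 512-bit register holds 16 such lanes, so one instruction performs 64 byte multiplications or 32 word multiplications.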

Motivation

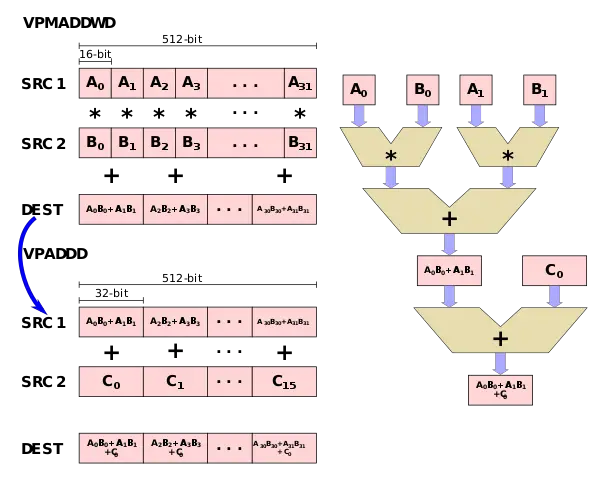

The major motivation behind the AVX512 VNNI extension is the observation that many tight convolutional neural network loops repeatedly multiply two 16-bit values or two 8-bit values and accumulate the result in a 32-bit accumulator. With the foundation AVX-512 instructions, the 16-bit case takes two instructions: VPMADDWD, which multiplies pairs of 16-bit values and adds each pair of products together, followed by VPADDD, which adds the result to the accumulator.

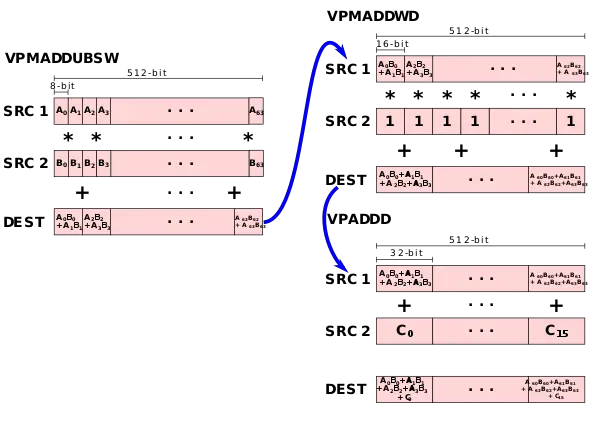

Likewise, the 8-bit case takes three instructions: VPMADDUBSW, which multiplies pairs of 8-bit values and adds each pair of products together; VPMADDWD with the value 1, which simply up-converts the 16-bit values to 32-bit values; and VPADDD, which adds the result to an accumulator.

To address these two common operations, two new instructions were added, along with a saturating version of each: VPDPBUSD fuses VPMADDUBSW, VPMADDWD, and VPADDD, while VPDPWSSD fuses VPMADDWD and VPADDD.
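The 8-bit fusion can be illustrated with a scalar model of one 32-bit lane, comparing the three-instruction sequence with the fused form. This is a sketch with hypothetical helper names, not vendor code. One detail worth noting: VPMADDUBSW saturates each pair sum to 16 bits, so the two paths agree only while that intermediate sum fits in a signed word.

```c
#include <stdint.h>

/* Clamp to the signed 16-bit range, as VPMADDUBSW does for each pair sum. */
static int16_t saturate_i16(int32_t v) {
    if (v > INT16_MAX) return INT16_MAX;
    if (v < INT16_MIN) return INT16_MIN;
    return (int16_t)v;
}

/* Step 1, VPMADDUBSW: two unsigned x signed byte products, added with
   16-bit signed saturation. */
static int16_t maddubs_pair(uint8_t a0, int8_t b0, uint8_t a1, int8_t b1) {
    return saturate_i16((int32_t)a0 * b0 + (int32_t)a1 * b1);
}

/* Step 2, VPMADDWD against a vector of ones: widens two adjacent words
   to 32 bits and adds them. */
static int32_t madd_with_ones(int16_t w0, int16_t w1) {
    return (int32_t)w0 * 1 + (int32_t)w1 * 1;
}

/* Steps 1 to 3 for one lane; step 3 is VPADDD, which wraps modulo 2^32
   (final cast is implementation-defined in C; GCC and Clang wrap). */
static int32_t unfused_lane(int32_t acc, const uint8_t a[4], const int8_t b[4]) {
    int16_t lo = maddubs_pair(a[0], b[0], a[1], b[1]);
    int16_t hi = maddubs_pair(a[2], b[2], a[3], b[3]);
    int32_t widened = madd_with_ones(lo, hi);
    return (int32_t)((uint32_t)acc + (uint32_t)widened);
}

/* VPDPBUSD for one lane: all four products are summed at full width,
   with no 16-bit saturation step in between. */
static int32_t fused_lane(int32_t acc, const uint8_t a[4], const int8_t b[4]) {
    int64_t sum = acc;
    for (int i = 0; i < 4; i++)
        sum += (int32_t)a[i] * (int32_t)b[i];
    return (int32_t)(uint32_t)sum;
}
```

For small inputs both functions return the same value. With all four bytes at 255 and 127, the unfused path clamps each pair to 32767, while the fused path keeps the full sum.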

Detection

| CPUID Input | CPUID Output | Instruction Set |
|---|---|---|
| EAX=07H, ECX=0 | ECX[bit 11] | AVX512VNNI |
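A minimal sketch of this check in C, assuming GCC or Clang on x86 and their `<cpuid.h>` helper `__get_cpuid_count`. The function name `cpu_reports_avx512_vnni` is made up for this example. It tests only the CPUID bit from the table; production code would normally also confirm AVX-512 Foundation and operating-system support for the ZMM register state, which is omitted here.

```c
#include <stdio.h>

#if defined(__x86_64__) || defined(__i386__)
#include <cpuid.h>
#endif

/* Returns 1 if CPUID.(EAX=07H, ECX=0):ECX[bit 11] is set, otherwise 0.
   On non-x86 targets it always returns 0. */
static int cpu_reports_avx512_vnni(void) {
#if defined(__x86_64__) || defined(__i386__)
    unsigned int eax = 0, ebx = 0, ecx = 0, edx = 0;
    /* __get_cpuid_count returns 0 when leaf 7 is not available. */
    if (!__get_cpuid_count(7, 0, &eax, &ebx, &ecx, &edx))
        return 0;
    return (int)((ecx >> 11) & 1u);
#else
    return 0;
#endif
}

/* Small helper that prints the result. */
static void print_vnni_support(void) {
    printf("AVX512VNNI: %s\n", cpu_reports_avx512_vnni() ? "yes" : "no");
}
```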

Microarchitecture support

| Instructions | Introduction |

|---|---|

| AVX512VNNI | Cascade Lake (server), Ice Lake (client) |

Intrinsic functions

```c
// vpdpbusd
__m512i _mm512_dpbusd_epi32 (__m512i src, __m512i a, __m512i b)
__m512i _mm512_mask_dpbusd_epi32 (__m512i src, __mmask16 k, __m512i a, __m512i b)
__m512i _mm512_maskz_dpbusd_epi32 (__mmask16 k, __m512i src, __m512i a, __m512i b)

// vpdpbusds
__m512i _mm512_dpbusds_epi32 (__m512i src, __m512i a, __m512i b)
__m512i _mm512_mask_dpbusds_epi32 (__m512i src, __mmask16 k, __m512i a, __m512i b)
__m512i _mm512_maskz_dpbusds_epi32 (__mmask16 k, __m512i src, __m512i a, __m512i b)

// vpdpwssd
__m512i _mm512_dpwssd_epi32 (__m512i src, __m512i a, __m512i b)
__m512i _mm512_mask_dpwssd_epi32 (__m512i src, __mmask16 k, __m512i a, __m512i b)
__m512i _mm512_maskz_dpwssd_epi32 (__mmask16 k, __m512i src, __m512i a, __m512i b)

// vpdpwssds
__m512i _mm512_dpwssds_epi32 (__m512i src, __m512i a, __m512i b)
__m512i _mm512_mask_dpwssds_epi32 (__m512i src, __mmask16 k, __m512i a, __m512i b)
__m512i _mm512_maskz_dpwssds_epi32 (__mmask16 k, __m512i src, __m512i a, __m512i b)
```
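As a usage sketch, the unmasked `_mm512_dpbusd_epi32` intrinsic can drive an unsigned-by-signed 8-bit dot product. The example assumes GCC or Clang on x86-64, using their `target` function attribute and `__builtin_cpu_supports` for runtime dispatch. The function names (`dot_u8s8`, `dot_u8s8_vnni`, `dot_u8s8_scalar`) are hypothetical, and the length is assumed to be a multiple of 64 to keep the loop simple.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#if defined(__x86_64__) && (defined(__GNUC__) || defined(__clang__))
#include <immintrin.h>
#define HAVE_VNNI_PATH 1

/* VNNI path: each iteration consumes 64 byte pairs and accumulates them
   into 16 doubleword lanes with a single VPDPBUSD. */
__attribute__((target("avx512f,avx512vnni")))
static int32_t dot_u8s8_vnni(const uint8_t *a, const int8_t *b, size_t n) {
    __m512i acc = _mm512_setzero_si512();
    for (size_t i = 0; i < n; i += 64) {
        __m512i va = _mm512_loadu_si512((const void *)(a + i));
        __m512i vb = _mm512_loadu_si512((const void *)(b + i));
        acc = _mm512_dpbusd_epi32(acc, va, vb);
    }
    /* Horizontal sum of the 16 lanes. */
    return _mm512_reduce_add_epi32(acc);
}
#endif

/* Portable fallback with the same result for non-overflowing inputs. */
static int32_t dot_u8s8_scalar(const uint8_t *a, const int8_t *b, size_t n) {
    int32_t sum = 0;
    for (size_t i = 0; i < n; i++)
        sum += (int32_t)a[i] * (int32_t)b[i];
    return sum;
}

/* Dispatches to the VNNI path when the running CPU supports it. */
static int32_t dot_u8s8(const uint8_t *a, const int8_t *b, size_t n) {
    assert(n % 64 == 0); /* simplifying assumption of this sketch */
#ifdef HAVE_VNNI_PATH
    if (__builtin_cpu_supports("avx512vnni"))
        return dot_u8s8_vnni(a, b, n);
#endif
    return dot_u8s8_scalar(a, b, n);
}
```

The `mask` and `maskz` variants take an extra `__mmask16` that selects which of the 16 doubleword lanes are updated.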

Bibliography

- Intel, IEEE Hot Chips 30 Symposium (HCS) 2018.

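- Rodriguez, Andres, et al. "[Lower numerical precision deep learning inference and training](https://ai.intel.com/nervana/wp-content/uploads/sites/53/2018/05/Lower-Numerical-Precision-Deep-Learning-Inference-Training.pdf)." Intel White Paper (2018).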